Although many game developers have decided to support AMD’s proprietary Mantle application programming interface (API), and Intel Corp. has even expressed interest in exploring the API, Nvidia Corp. has been relatively quiet about one of its arch-rival’s key technologies. This does not mean that the company has no opinion about Mantle. According to Nvidia, the proprietary API simply does not bring any tangible benefits.

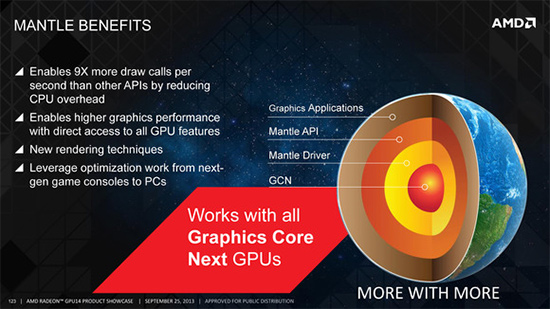

AMD’s Mantle is a cross-platform API designed specifically for graphics processing units based on the Graphics Core Next (GCN) architecture (e.g., AMD Radeon R9, R7 and HD 7000-series). The main purpose of the API is to give game developers low-level access to the hardware and higher performance by removing the limitations of current APIs. According to AMD’s internal testing, Mantle can bypass all the bottlenecks modern PC/API architectures have: it enables nine times more draw calls per second than DirectX and OpenGL thanks to lower CPU overhead.

Higher performance can be transformed into better quality of graphics or enable games to be played on lower-cost hardware. Microsoft’s upcoming DirectX 12 API is designed with similar goals in mind, but it is about 1.5 years away from the release, which makes Mantle particularly valuable to game developers who want to bring something new to gamers. Although Nvidia is working with game designers to enable proprietary effects in titles as part of its GameWorks programme, it does not see any value in AMD’s Mantle.

“We do not know much about Mantle, we are not part of Mantle,” said Tom Petersen, a distinguished engineer at Nvidia, in an interview with PC Magazine, reports Dark Side of Gaming. “If they see value there they should go for it. And if they can convince game developers to go for it, go for it. It’s not an Nvidia thing. The key thing is to develop great technologies that deliver benefits to gamers. Now in the case of Mantle it’s not so clear to me that there is a lot of obvious benefits there.”

According to Nvidia, well-made DirectX drivers can deliver the right level of performance in games and there is no need to develop a proprietary API for that.

“If you go look at the numbers, compare our DX drivers today to Mantle games, I don’t think you’re going to notice any big improvement,” said Rev Lebaredian, senior director of engineering at Nvidia.

While Nvidia criticises AMD’s Mantle and AMD criticises Nvidia’s GameWorks, the ultimate goal of both efforts is similar. Both developers of graphics processing units want to add technologies to games that will work exclusively on their hardware. Mantle allows developers to add unique graphics effects for AMD Radeon (even though it does not necessarily use proprietary hardware capabilities), whereas GameWorks adds distinctive effects for Nvidia GeForce (using exclusive hardware capabilities in certain cases).

Video: http://www.youtube.com/watch?v=aG2kIUerD4c

KitGuru Says: What is clear from Nvidia’s comments about AMD’s Mantle is that it will not develop its own proprietary API and will wait for Microsoft’s DirectX 12 instead. Thanks to its GameWorks programme, it can still add exclusive capabilities to select titles if it wants to…

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

No tangible benefits huh?

I ran the built in Thief benchmark with Mantle off, then ran it again with it on.

Frames per second (R9 290 GPU, FX-8350 CPU)

No Mantle:

Min: 28.6

Max: 74.6

Avg: 45.9

Mantle:

Min: 56.9

Max: 83.7

Avg: 68.8

I’d say that’s a pretty big benefit.
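For reference, the relative gains implied by those figures can be worked out with a few lines of Python. This is only a minimal sketch: the function name is illustrative, and the inputs are simply the numbers from the single run quoted above, not a controlled benchmark.

```python
def percent_gain(before: float, after: float) -> float:
    """Return the relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0

# Thief built-in benchmark figures quoted above (R9 290, FX-8350), in FPS.
no_mantle = {"min": 28.6, "max": 74.6, "avg": 45.9}
mantle = {"min": 56.9, "max": 83.7, "avg": 68.8}

# Relative gain for each statistic.
gains = {key: percent_gain(no_mantle[key], mantle[key]) for key in no_mantle}

for key, value in gains.items():
    print(f"{key}: {value:+.1f}%")
```

On these numbers the minimum frame rate roughly doubles (about +99%), the average rises by about 50%, and the maximum by about 12%, so most of the benefit shows up in the slowest parts of the run.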

The 337.50 NVidia drivers showed similar gains.

and it’s still basically in beta. Nvidia likes to pretend they are the only ones innovating and investing, yet when presented with something pivotal like Mantle they brush it off. I still am not impressed by what they claim they have innovated and launched. The biggest deal is physx and that is hard to find because they love their closed crap. They seem scared to compete on the hardware front alone

These gains?

http://www.extremetech.com/gaming/180088-nvidias-questionable-geforce-337-50-driver-or-why-you-shouldnt-trust-manufacturer-provided-numbers

These tests were run on the same set of drivers (14.7 RC1), just with the in-game Mantle option turned on.

http://www.hardwarecanucks.com/forum/hardware-canucks-reviews/66075-nvidia-337-50-driver-performance-review.html

That really doesn’t matter. It’s not possible for NVidia to flick an API switch, so instead they heavily optimised their drivers. Comparing an older NVidia driver with the optimised version is a good enough equivalent of comparing Mantle on and off.

The mantle gains are really good, but the NVidia stuff is just showing what you can still do with Direct X.

NVidia have innovated quite a lot, whether or not you choose to recognise it is up to you. But PhysX is a great example, as you mentioned. So is G-Sync (because, they were first to bring an actual product you can buy and use right now).

Funnily enough, things went sour between AMD and NVidia around the time NVidia bought Ageia. NVidia offered a licence and AMD turned it down, bitterly. Since then it’s been tit for god damn tat between the two.

EDIT:

It’s just a fucking shame NVidia like to mark up their tech price massively, or make it proprietary. The biggest reason I currently use NVidia is Linux drivers, and that’s it.

nvidia bought physx. g-sync is the first for desktop gaming, sure. But its not going to be what really works for us. it will be adaptive sync that becomes widely accepted and makes the benefits available to a wider audience.

I don’t know about nvidia offering physx but it would be kind of silly to license tech like that. GPU makers do not license game engines to allow the games to run on their cards nor do they license other tech like that. It’s like TV makers having a license to show every movie. Nvidia shouldn’t have it closed, they are the oddity.

There’s no doubt that DirectX is still good. Mantle has a ton of promise though.

Go to 20:50 and enjoy.

THEY ALMOST ADMIT that THERE ARE benefits from Mantle ..

…before hitting the brakes and avoiding in the last second to say the little bad “AMD” word and changing that word with “low level API”.

THEY ADMIT IT. Nothing else to say.

I run several High End systems and mantle is slower…yes I said that..slower.

(Obviously I don’t use it). (290x in crossfire)

PS. Where the F’ing hell are the driver updates……not the same since they sacked half the staff.

PPS. I run both Nvidia and AMD…..guess which one I would recommend…

Not gonna lie hardwarecanucks should really stick to peripheral and case reviews. But the most reputable sites including anandtech found a very small or nearly no gain at all. While yes there were gains there were no “Up to 67% better performance in single GPU configurations!”

there is no shame in admitting if a company you don’t like did something better than a company you do like. All this means is the company you like will be forced to work harder to make a more competitive product. While no one got the performance gains that nvidia promised there were performance gains. And you know for a fact nvidia only did it to show you don’t need a new API to match AMD in performance. While they didn’t quite beat them they did come pretty close on some occasions.

The point is all this back and forth between them will always be beneficial for us and we shouldnt hate on either one if they fall behind. We should just be standing on the sidelines saying “FIGHT FIGHT FIGHT!!!”

nvidia fanboy spotted

I beg to differ, for me the drivers increased my benchmark score on Star Swarm by over 5000 frames and 16 additional FPS. Just from driver optimisations alone.

I noticed large improvements in CPU heavy games (Skyrim by far had the most gain) but little gains in other titles.

If you need proof just let me know and i’ll get some uploads.

Retard spotted. Reading my posts can quite easily show the opposite.

NVidia bought Ageia and thus the employees worked for it. NVidia have developed PhysX beyond the state it was in when they acquired the tech in 2008. The fact that they acquired the tech doesn’t lessen the fact that it has matured significantly under NVidia.

And they offered a licence to AMD and AMD refused. I’m sure you can Google Fu and find this out yourself. The information is there. IT happened.

And FreeSync a) isn’t free and b) isn’t here. It will cost extra money. 100% guarantee you that. If you think monitor manufacturers aren’t going to slap a premium on it and advertise it as “Hyper Super Cool Realism Smooth Gamez Mode” for an extra $100 then you’re kidding yourself. It also isn’t here and hasn’t really got any manufacturers talking about including it (as far as I know). It has made its way into the Display port optional spec and isn’t even compulsory, so it’s not like every monitor released in 2-3 years will have eDP compatible panels and be the revolution AMD want it to be.

Anyway, It sounds like I’m downplaying FreeSync, and I am, but only in reply to your downplaying the innovations NVidia have brought to the table. FreeSync has the potential to be great. I like AMD’s stuff and I have been a user in the past.

But, that’s the thing, Mantle hasn’t if NVidia aren’t on board. It needs the whole market to be a thing that gains traction. I was kind of hoping NVidia would be interested, but it seems the usual bitterness between the two is going to continue for some time. Much to the disappointment of gamers.

Why do I need to admit a company I don’t like did such and such? I don’t like AMD? All I said was the NVidia drivers give similar gains. How can you discern I don’t like AMD from that? My previous card was an AMD. I’m with NVidia now for Linux drivers.

I wouldn’t call making improvements innovating. Thats just a result of having the time. they did not create it and a lot of their “innovations” aren’t ground breaking. Different ways to do aa, ssao etc. hair tech. All can be had in other ways. Gsync maybe considered a big deal, but its a non-issue now. Adaptive sync monitors are expected Q4 2014-Q1 2015. I doubt they will charge much more for it but maybe they will. It will still be more widespread and likely cost less than Gsync. Also can be supported by more companies than nvidia (intel will jump on that pronto).

“Freesync” is no longer AMD. Its just an industry standard now. they may have helped a lot in getting it where it is but its for everybody now. Good for the industry. I think it will eventually become ubiquitous. Its really overdue that we have variable refresh rates. Everybody will support it, except maybe nvidia (HIGHLY doubt they won’t, eventually).

Nvidia trying to sell physx to AMD may have happened but, like I said, that would be silly for AMD to pay for. nvidia can keep it though because it will eventually be a non-issue when an open standard emerges as the industry standard. Even less of an issue than having just a few games supporting it. Nvidia is trying to be proprietary and closed in an industry that frowns on it and is not suited to it.

SLI? Which gpu?

it doesn’t need nvidia if its good enough. It’s not impossible for nvidia to skip out pivotal technology like mantle and adaptive sync while trying to promote their closed crap and end up losing market share like crazy.

I’m not outright saying that mantle is bad, but of course mantle will look better when it is compared against AMD’s dx11 drivers. What nvidia is doing is continuing to optimize their dx11 drivers to match mantle performance. what happens if AMD also optimizes their dx11 drivers like nvidia did?

“Freesync” is no longer AMD

FreeSync is AMD solution. the standard was Adaptive Sync on Vesa part. those two are not the same thing.

http://support.amd.com/en-us/kb-articles/Pages/freesync-faq.aspx

what people got wrong is that when AMD started the freesync initiative, many believed it would be a monitor that works with any GPU from the get go. if you have been following the story for a while, you knew this would not be the case even before AMD came out with the above FAQ. the FAQ just reinforces some of the suspicions people had about FreeSync. for an nvidia or intel gpu to use Adaptive Sync, they need to rework the gpu internals to add the support the standard requires.

and i dare to bet the “free-sync” monitor won’t be cheap either. maybe it will be cheaper than g-sync but it will not going to be as cheap as regular monitor. just look some “gaming” monitor which have price premium on it. most likely company that sell monitor with this tech want to charge premium for it’s ability capable of turning on dynamic refresh rates in games.

so the question is will nvidia going to rework their (future) GPU so they can use Adaptive Sync?

losing market share like crazy? honestly i would like to see that. when AMD coming up with 290X/290 back in october 2013 and with the mining craze many people expecting nvidia will lose market share to AMD. but for two quarters straight (Q4 2014, Q1 2015) their market share are not changing at 35%. and that is despite 290X is very competitive to the very expensive GTX780 Ti

lol. Yeah they are different things and we should be talking about adaptive sync, not freesync. That is just AMD calling their approach to supporting the standard “project freesync”. The actual hardware feature in these monitors is not AMD and is open to anyone. Intel could call it realSync, nvidia could call it wesuckSync.

AMD doesn’t know who might and might not support it right away. Even AMD has to rework some of their GPUs. Most of their APUs support it by their nature and I would guess intel might have some support for it already in their iGPUs. We could all be surprised if nvidia already has support in their design. Either way, I didn’t expect full support but new GPUs are around the corner anyway.

I am expecting affordable monitors. Those gaming monitors have high frequency, high resolution and low response times which drive up premium. Lower end monitors could support adaptive sync without all the extra high end features. The quality will likely vary in terms of frequency range support

nvidia has to support it. It would eventually be like nvidia GPUs not having video output ports just because AMD proposed the output standard.

AMD had a supply problem during that period. If both gamers and miners could have gotten the cards they wanted then things would have been different. Prices would not have skyrocketed if the cards weren’t so limited. Shame for AMD somewhat but at the same time the market would be saturated right now with miners selling their cards.

Nvidia can lose market share more easily if they start missing out on key features. I doubt the majority of their customers are fanboys. Right now AMD has to fight off the bad reputation they have in some people’s minds and probably tighten up their GPUs (fix their cooler designs etc)

“Lower end monitors could support adaptive sync without all the extra high end features”

that adaptive sync itself can be high end feature for monitor maker and justify them asking premium price for it.

“nvidia has to support it. It would eventually be like nvidia GPUs not having video output ports just because AMD proposed the output standard.”

only that nvidia already have their own solution. they don’t need to spend another R&D just so they can support a tech that is similar to their own G-Sync. and i don’t think that your analogy is correct in this situation. if Adaptive Sync monitor will work with any GPU without the need specific feature from the graphic (so it will work on intel/nvidia/amd) card then you might be correct. that is what happen when only one party try to push a standard. their solution only revolve around their existing technology. the solution might be approved as industry standard but it doesn’t mean it will adopted by other party easily just like that.

I think I already said intel might support it already. The actual hardware stuff isn’t new and existed in some CHIPs due to their nature. AMD just added it to their higher end products is all. It sounds pretty simple on the hardware side for the companies to add it. Just need a display port output and the capability. Nvidia will have to support it eventually. There is no way Gsync will compete with this unless it actually fails in execution. Intel will or already can support it. They aren’t stupid and know they would benefit. Plus they don’t have to pay the mean kid in the park (nvidia) to benefit. It’s simply ideal.

Anyway, we will see when this all is sorted out.

Problem with those NVIDIA driver gains was that a 270x would get higher fps than a 780ti in some games. So once they optimized the drivers it was a huge gain.

if it really that simple then why only GCN 1.1 parts are capable of using FreeSync in games?

because AMD added that capability in GCN 1.1. it not being present in chips essentially several years old doesn’t make it hard. It’s interesting enough that those chips even with their age support it outside of games.

GCN 1.1 existed even before nvidia came up with G-Sync back in october last year. That means AMD did not add the tech needed for Adaptive Sync to GCN 1.1 after learning about G-Sync. and at CES in january this year, when they first introduced the FreeSync concept, their GPUs had already had the tech for a long time:

“He explained that this particular laptop’s display happened to support a feature that AMD has had in its graphics chips “for three generations”: dynamic refresh rates.”

http://techreport.com/news/25867/amd-could-counter-nvidia-g-sync-with-simpler-free-sync-tech

but when more detail about FreeSync came out, why are only the latest GCN parts (1.1) capable of using FreeSync in games? most likely it is not as easy as AMD thought it would be when they first came up with the idea.

No, it really, really does need NVidia to be a thing. The LAST thing we need in the PC gaming market, and any tech minded person with their head not up their arse knows this, is a bunch of competing API’s on competing GPU’s. I’m old enough to remember 3DFX and Glide. It was a mess and no-one wants that again.

G-Sync was the pivotal technology. Adaptive-Sync was a reaction shot that sprung from G-Sync. Therefore G-Sync is the very definition of pivotal.

I’d have an R9 280 right now if AMD’s Linux drivers weren’t absolutely garbage (and this has been proven times over). I made the move from Windows to Linux as a software developer because Linux is hands down superior to Windows on that front, and I’m not quite ready to mortgage my house and move to Apple computers. But, I still like to play games and I have a nice amount of Linux enabled AAA games on my Steam account.

In about 2-3 years AMD need to fix their Linux drivers, massively, or make sure Mantle runs well on Linux (which isn’t hard, since it’s basically a bunch of OpenGL extensions) in order to get their foot in the door of Steamboxes. If not only because at this point SteamOS will have a lot more AAA games available (Unreal 4 has been developed to be Linux compatible from the get go, and a fair few Unreal 3 games are being ported over) and AMD cannot afford to have NVidia as the only solution to Linux gaming.

except AMD isn’t like nvidia. Mantle doesn’t affect directx and directx is going nowhere. Mantle would just help those who want more where dx falls short.

Gsync isn’t the first example of variable refresh rates, it was just the loudest. Typical nvidia style. AMD already released GPUs that could support it and one would expect they at least had it planned to be able to get it out so fast.

Who cares about the Khronos w/e? They are going to publish it for everyone like they usually do.

nvidias closed crap is not successful. Physx is a million years old and still hard to find for example. Most other things are graphical alternatives to already existing tech.

Now you just sound like a retarded fanboy the more you say “closed crap”. You also don’t seem to know shit about anything. Typical fanboy.

The Khronos Group are the group in charge of OpenGL, CL, VX and other open technologies. ARM, NVidia, Sony, AMD, Apple, Valve, Unity, Pixar etc are all members and contribute financially and technologically. AMD are open to the idea of submitting it to Khronos when they’re finished. At which point, it might become part of OpenGL and work its way into ARM and Intel chipsets. I care about that, the industry cares about that and you should care about that.

AdaptiveSync does not need any GPU hardware changes to work. Technically, AMD released over 500 GPU’s that could handle it, if only they wrote the driver support. Even a HD4870 could, had they not made that device line legacy. Same for G-Sync. The GPU limitation is just something NVidia added, same as AMD didn’t enable all their past GPU, just the latest.

G-Sync was the first to the market, with an actual product. They were the first to the market with a board that handled scaling rather than a) relying on Display Port standards and eDP and b) is technically capable (and this is planned) of handling more than just DisplayPort (meaning G-Sync will be coming to HDMI in the future, albeit, without audio support). As much as you want to try and belittle and wash that away, it’s still going to be there. It’s an advancement and it’s one that lit a fire up AMD’s arse and got them talking about AdaptiveSync/FreeSync.

PhysX is a nice middleware API. It’s compatible with any Unity based game, for free, as it is with any Unreal Engine game also. Developers choose not to use it, because realistically, it just adds eye candy at the expense of performance. However, the games it’s in it adds nicely. You don’t like it? That’s up to you, but even the most ardent AMD users I know, who aren’t total fuckwits, are willing to admit when something is nice.

There could be 100 instances brought up of NVidia and AMD being dickheads to one another over the years. Both companies are as bad as the other (NVidia negging performance in Batman on AMD systems, naughty naughty, AMD being shady with TressFX in Tomb Raider, causing NVidia to get gamecode late.. NVidia and Gameworks. AMD and TrueAudio… The list goes on and on.)… Don’t be that guy that acts like a dickhead and seems to think one side is morally superior to the other. They’re American corporations. They’re shit. To them you’re shit. Neither of them give a flying sea monkey about you.

don’t care about linux

“closed crap” is not a fanboy comment. I don’t like it and if AMD were doing it I wouldn’t like it either. Closed is crap and nvidia must clearly be a cesspool because they love it.

Open is open. Unless you mean allowing them to change it rather than just make drivers for and use it. Ultimately I wouldn’t care about that unless AMD and DICE do a crap job at it and need the help.

Richard Huddy, “AMD Graphics Gaming Scientist,” has said that there is a need for hardware support, at least to use it for gaming. It’s not all in the drivers.

A year from now nobody will know what Gsync is unless nvidia goes stupid on the PR. Similar to how nobody knows many of the early implementations of data storage etc. that just weren’t up to standard. It can be first all it wants, its irrelevant.

Oh I like physx in some games. In fact I held off playing borderlands 2 till I could get physx working well enough. My point is that it is where it is because its closed crap. It could have been great and almost ubiquitous, but… nvidia.

AMD wasn’t shady with tressFX from my understanding. Nvidia had issues with the game in general, not just tressFX and yeah that was because of late game code. The reason AMD had access to the code was because they were already involved in the game so even though the developers were “tweaking until the last minute”, AMD was more up-to-date. The difference? Nvidia could see all the source and quickly sort out their drivers. In addition, they could actually RUN tressFX since it wasn’t closed crap.

Nvidia gameworks and trueAudio should not be in the same line. They are different. TrueAudio is hardware to enhance audio. I don’t remember hearing about it needing game devs to do anything that would affect nvidia. Gameworks is the opposite of how TressFX was handled, how Mantle will be handled etc.

So… you’ve identified correctly some closed crap from nvidia that is detrimental to the industry, and some open tech from AMD. I know they are companies looking for money, but their approach seems to currently be different. Feel free to come up with a valid example from AMD that is the same as gameworks or as closed as physx. Mentioning some stuff that is clearly working on nvidia hardware or talking about added value like trueaudio doesn’t do it.

I was done with you after my last post. You’re a moron.

easy to call people names. You have yet to show your opinion is warranted. Can’t be that hard to find something like gameworks and physx on the AMD side of things. But I understand if you want to admit you were wrong and just name call instead.

No, I’m right, you’re simply a moron steeped in bias with his head too far up his arse to see otherwise.

“closed crap” “closed crap” “closed crap” “closed crap” “closed crap”

“i dont care about linux”

Moron.

Then you’re a moron.

Think they need to be corrected there, even by their own users like myself. Mantle does bring benefits even without doing a thing. It pushed everyone else’s lazy ass to get DirectX 12 happening faster, so we all benefit!

Thank you AMD.

you don’t have no amd gpu sounds like youre an NVidia fanboy

This has nothing to do with driver optimization for DX11. The main problem is the limitation of DX11. The software has to communicate through DX with your GPU. However, the number of draw calls to the GPU are a huge bottleneck nowadays. This means that your CPU has to do a lot more work. This is why Mantle yields the best results on lower end CPUs. It basically reduces the load on your CPU, thus preventing it from becoming the bottleneck and keeping you from getting the most out of your GPU.
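To make that bottleneck argument concrete, here is a toy model in Python. It is a sketch, not a measurement: it assumes the CPU and GPU work in parallel, so the frame time is set by whichever is slower, and that CPU time grows linearly with the number of draw calls. Every number in it (per-call cost, GPU time, draw-call count) is made up for illustration and does not come from AMD, Nvidia or any real game.

```python
def fps(draw_calls: int, cpu_us_per_call: float, gpu_ms: float) -> float:
    """Frames per second under a simple max(CPU, GPU) frame-time model."""
    cpu_ms = draw_calls * cpu_us_per_call / 1000.0  # CPU time spent submitting draw calls
    frame_ms = max(cpu_ms, gpu_ms)                  # the slower side sets the pace
    return 1000.0 / frame_ms

DRAW_CALLS = 10_000  # hypothetical scene
GPU_MS = 12.0        # hypothetical GPU render time per frame

# Hypothetical per-call CPU cost in microseconds: 2.0 for a high-overhead API,
# 0.5 for a low-overhead one. The slow CPU is assumed to take twice as long per call.
for cpu_name, scale in [("slow CPU", 2.0), ("fast CPU", 1.0)]:
    high = fps(DRAW_CALLS, 2.0 * scale, GPU_MS)
    low = fps(DRAW_CALLS, 0.5 * scale, GPU_MS)
    print(f"{cpu_name}: high-overhead {high:.1f} FPS, low-overhead {low:.1f} FPS")
```

With these made-up inputs, the slow CPU goes from 25 FPS to about 83 FPS, while the fast CPU goes from 50 FPS to the same 83 FPS. Once per-call overhead drops far enough, both machines hit the GPU limit, which is why the gain looks largest on the lower-end CPU.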