At the Siggraph 2014 conference, Intel Corp. demonstrated how the new DirectX 12 application programming interface can speed up rendering and improve the battery life of mobile devices. The results of the demo were impressive: the new low-level API not only improves performance dramatically, but also allows power consumption to be cut significantly.

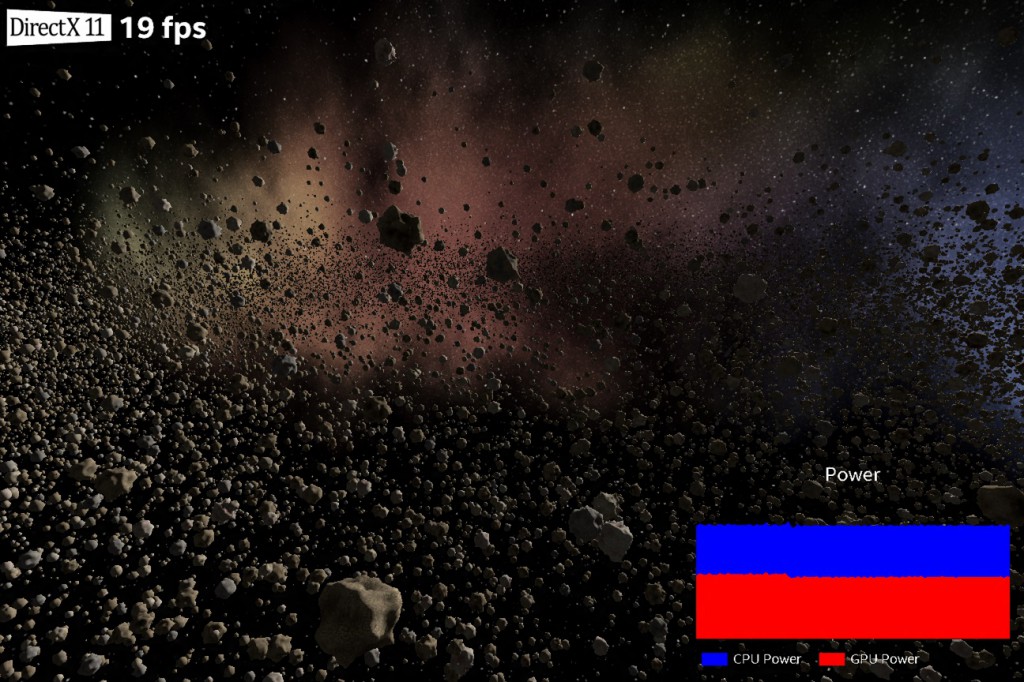

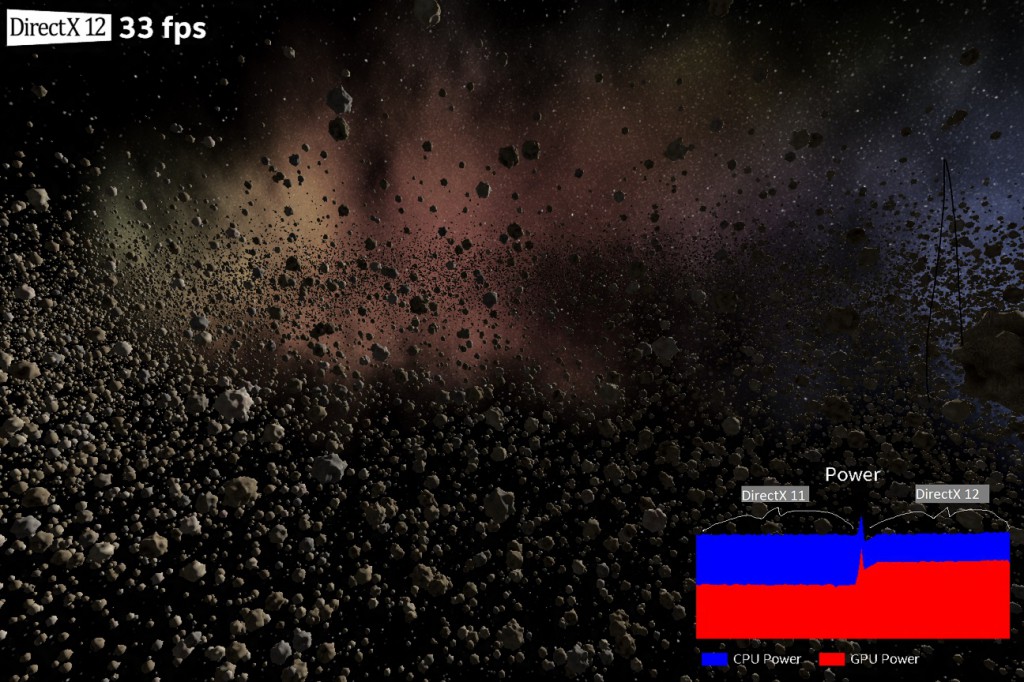

In the demo Intel renders a scene of 50,000 fully dynamic and unique asteroids in one of two modes: maximum performance and maximum power saving. The application can switch between the DirectX 11 and DirectX 12 APIs. The hardware used was a Microsoft Surface Pro 3 tablet with an Intel Core i-series “Haswell” processor.

Simply switching to the DirectX 12 renderer raises performance from 19fps to 33fps, or by roughly 70 per cent. Because DirectX 12 is designed for low-overhead, multi-threaded rendering, the CPU needs less power, which lets the chip raise the clock rate of the graphics core and boost performance without exceeding the thermal design power of the microprocessor.
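The arithmetic behind that figure can be checked with a short Python sketch. It uses only the two frame rates quoted above; the function names are hypothetical helpers written for this illustration and are not part of Intel's demo.

```python
def percent_gain(before_fps: float, after_fps: float) -> float:
    """Relative gain in per cent when moving from before_fps to after_fps."""
    return (after_fps - before_fps) / before_fps * 100.0


def frame_time_ms(fps: float) -> float:
    """Average time spent on a single frame, in milliseconds."""
    return 1000.0 / fps


dx11_fps = 19.0  # frame rate quoted for the DirectX 11 renderer
dx12_fps = 33.0  # frame rate quoted for the DirectX 12 renderer

gain = percent_gain(dx11_fps, dx12_fps)
print(f"Gain: {gain:.1f}%")  # prints "Gain: 73.7%"
print(
    f"Frame time: {frame_time_ms(dx11_fps):.1f} ms -> "
    f"{frame_time_ms(dx12_fps):.1f} ms"
)  # prints "Frame time: 52.6 ms -> 30.3 ms"
```

Computed exactly, the two frame rates give a gain of about 74 per cent, which is consistent with the rounded figure of roughly 70 per cent.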

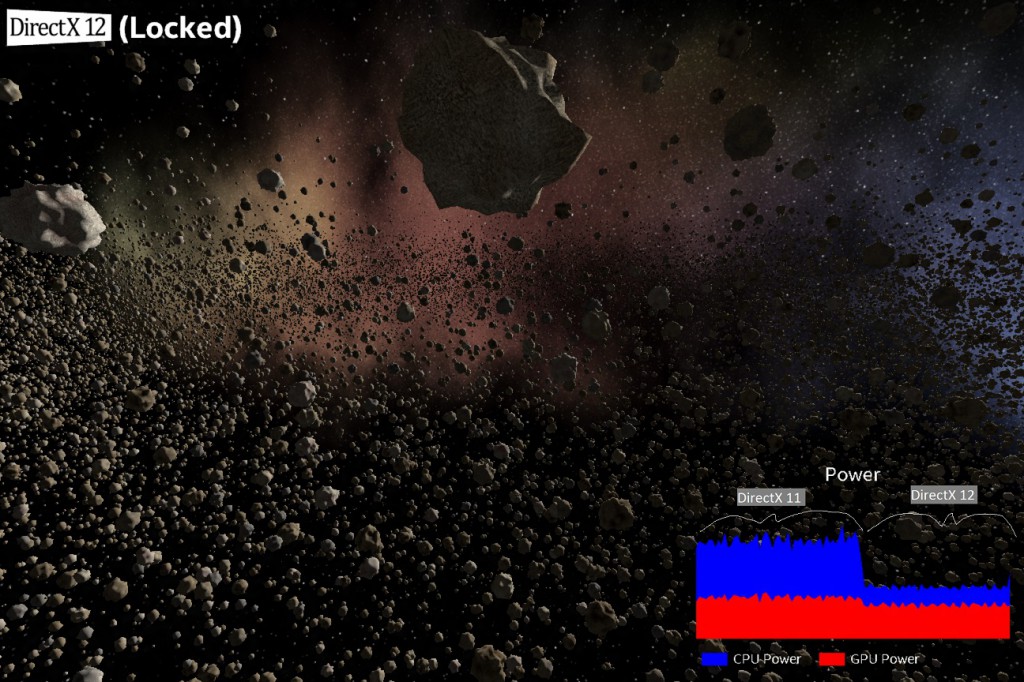

As for power saving, when the demo switches to the DX12 renderer with the frame rate held at the same level as under the DX11 renderer, the hardware uses less than half the CPU power it needs with DirectX 11, resulting in a cooler device with longer battery life.

The demo shows that Microsoft’s forthcoming DirectX 12 application programming interface can both boost performance and cut power consumption (software developers will decide the exact balance between the two). Game developers will most likely use it to boost the performance (or quality) of video games designed for desktops, but for many other applications lower power consumption may be more important.

KitGuru Says: It remains to be seen how real-world games will benefit from the DX12 API. A 70 per cent performance improvement in a complex scene is impressive, but actual games may behave very differently from Intel’s demo.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Did you get to test it in person, or was it a premade demo that Microsoft made? Because if it was premade with their own stuff, I don’t trust the demo to show a 70-ish per cent boost, considering it’s Microsoft we are talking about here :/

Why, these gains seem almost as good as the recent driver update from Nvidia. Oh, wait…

As usual, we lack information about the context of this demo. As with AMD Mantle, the 70% gain is probably observable only in a specific context, such as a CPU-bound scenario.

The gain will probably be much lower (or even negligible) in a GPU-bound scenario … which is pretty common on a gamer’s rig.

Whatever, I’m taking every optimization they get ^^
