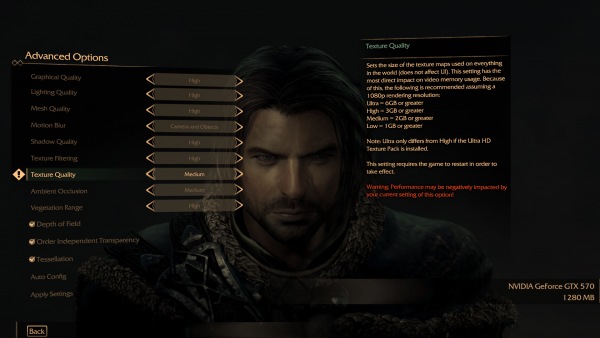

Much like Bethesda's The Evil Within, Warner Bros' upcoming game Middle Earth: Shadow of Mordor will have a high video RAM requirement for ultra textures, a screenshot of the game's PC graphics settings has shown.

If you want to play Shadow of Mordor on ultra, you're going to need a pretty high-end card: the settings screen apparently lists a 6GB VRAM requirement, meaning that users may need a very specific GPU to play at full detail. To make things worse, that's the recommended requirement for 1080p rather than 4K, the resolution you would expect to eat up that much VRAM.

Flagship cards from both Nvidia and AMD currently only ship with 4GB of VRAM, with the exception of the GTX Titans and some specific custom-made GTX 780 and AMD R9 290X cards.

We've already had some reason to doubt the quality of Middle Earth: Shadow of Mordor's PC port as Warner Bros refused to send out PC review codes before launch day, while outlets with console copies can start publishing reviews ahead of launch this week.

However, there is also the possibility that the PC version of this game is fine and this is all just a horrible mistake in the wording on the game's settings page, although that seems unlikely.

Warner Bros has yet to comment on this so it looks like we are going to have to wait for the reviews to hit to find out if the PC version of Shadow of Mordor is actually any good.

KitGuru Says: A 6GB VRAM requirement seems pretty ridiculous, especially considering very few GPUs on the market are packing that much. What do you guys think of this? Is this making you doubt the quality of the PC version? Could this have something to do with the PS4's 8GB of onboard GDDR5 memory?

Source: NeoGAF

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

No, it is not. It has 4.5GB of usable unified memory, which means it can allocate it dynamically. The standard split is 2.5GB VRAM to 2GB system RAM, though that can change dynamically. Even so…. there is no reason for this amount of VRAM to be used.

Personally, I think ultra = high, just uncompressed, to try and make out they gave PC gamers something.

The game probably requires 4GB GDDR5. I would question whether this picture accurately reflects what they are making. I would not expect a company to make something that we could not run on current high-end hardware. If it is true, however, then to get max graphics we would all have to upgrade to extreme hardware, and it probably means the engine is extremely inefficient.

it’s a high end game, and with 4k becoming alarmingly close to affordable (for a monitor at least, not the GPUs you’d need to run everything on full!), I think it’s great that devs are properly targeting the next gen of hardware (390X, titan 2/980ti (or whatever GM110 comes out as!) etc.)

the way I see it, this just means that

a) it will look incredible on medium textures

b) when the hardware catches up, and we can finally play it on ultra, it will still look great (you all remember crysis…right?!)

it looks like a pretty cool game, so I’ll probably check it out when it goes cheaper, but I’m not gonna sink £2k+ to play it….GTA5 on the other hand, will make me go to X99, 32GB DDR4 and SLI 980s if that’s what it takes 😛

Companies love thinking like this. lmao

Very wrong and unnecessary. Where did the art of gaming go? I’m pretty sure they can achieve the same level of detail using 40% of what they are asking for.

Preparing for 4K resolution? Bullshit. 4K resolution will never be a true thing in my opinion, because it doesn’t really translate into better graphics: too many dots in too little space means your eyes will interlace them….

You’re so naive it’s hilarious. Somehow I think you need to graduate high school before you can even think about spending 2k on a PC.

4K resolution is a very valid thing for gaming and does improve graphics, not sure on what you mean about your eyes interlacing them. I have a 4K monitor (60Hz) and I use two R9 290’s to drive it. The graphics are noticeably better and very nice. That being said, it was way too expensive.

If my R290 4GB isn’t enough then tough ****, ridiculous video requirements.

Played a whole day on a 4k TV at my friend’s house, and I felt no difference at all. Could it be psychological, for me or for you?

Was it a PC or a console you played on?

PC, with an R9 295X2

I think “difference” would depend on display size…more noticeable on larger screens. How big?

32″, Played bioshock infinite and dota!

Well, if it depends on distance or size, I think more than 32″ is unreasonable. For many PC games 40″ is pretty annoying because you need to turn your neck a lot. Also, if you sit further back, a 40″ TV gives you the same effect as a 32″ ofc; like I said, your eyes end up interlacing the pixels.

No need for that. If you think they’re ignorant then you need to use your arguments. You don’t prove anything to anyone by being a twit on the net.

I’m hoping, as someone else has stated, that this in fact means that the game will look terrific on mid-range devices and on mid settings, and when we finally have really good cards to play it, we can play it on max and not think ‘man, this game is so old, look at those terrible graphics.’ Instead we’d enjoy a good old game that looks as good on max settings as the then-current games.

I suspect it’s poor optimisation rather than high end, so the medium textures will look like medium textures. Lazy optimisation aimed effectively at the consoles, and badly ported to PC in other words.

Yeah, I am hoping. I have a 770 with 4GB, which I bought because of Titanfall, so I will see what happens. I do remember Crytek doing this with the Crysis series, so we’ll see.

Already did!

– Still in high school.

there aren’t any flagship gpus that come with 6gb :/ i mean it’s just the titan and the 295 or other multigpu cards that have that much Vram.

mostly it’s the mid-range third-party cards that come with 6gb, which is also very rare.. :/ i have an msi r9 290x gaming OC… which is much more powerful than the gtx 770 with 6gb vram.. i just find this to be absurd. i hope shared memory works.

i need more VRAM GPU in my Videocard…. :/

is time for Titan Black

Let’s be honest, using a good argument would be a waste of time because the person’s stance wouldn’t allow for one. Making a large, unfounded statement as he did shows he is not about a good argument but about justifying a viewpoint that almost certainly stems from a personally held belief that is independent of the facts. Therefore, the best thing to do is go “stfu, stop talking balls” and leave it at that 😉

complete load of bs. check the steam page.

I think you’d be surprised by how reasonable people can be when you treat them sensitively and rationally.

Look into 4k’s effect on anti-aliasing…you don’t even need it anymore.

yeah and calling me a kid was such a valid and compelling argument. you disagree, but it was a lot better founded than anything you’ve offered up, unfortunately your stance won’t allow you to accept that.

you guys can think what you want about how I’m a puppet to nVidia/Intel, I really couldn’t care less, I’d rather they continued to push graphics, even if they aren’t doing it in the most efficient way, rather than stagnating like they have been for years and pumping out games you could run on q6600/5850

But how are they pushing graphics? Your idea that absurd amounts of vram = pushing graphics is akin to thinking high fuel consumption in a car automatically translates to performance. It doesn’t. Star Citizen is pushing boundaries. This game is just pushing shoddy ports and coding. Backing them is just encouraging lazy coding practices.

Having been on many a forum, my radar is fine tuned to emotive arguments. As shown in his latest reply, his argument is indeed emotive and not based on rational thinking at all. In these cases, calling the person out is really the most that can be done as they are not going to accept a logically superior argument.

I’ve still not seen even a shred of fact, data or evidence in any of your posts here. your argument contains no logic, just assumptions (that the game will not look ‘good enough’ to justify what you perceive to be unrealistic requirements) and name calling. As such I don’t think ‘logically superior’ really applies.

STFU, stop talking balls (can we leave it at that? 😉 )

Here’s the facts kid: a top end gaming pc is considerably more powerful than any of the consoles. Yet apparently we will need an almost unheard-of amount of vram. On top of that, they have refused to give out pc codes. When we consider all of that, where we are in the console life spans, and watchdogs as well as them not marketing graphics, it all points towards a shitty port. Whereas your argument is ****WEEEEE HIGH SPECS MUST BE GOOD WEEEEEEEE!!!***** I’ll bet you £50 right now that it’s a shitty port. Put your money where your mouth is brah.

again with the name calling rubbish!

– “high end gaming PC is more powerful than a console” of course it is, which is exactly why we should be using that power to the best of our ability, unless you’re claiming/speculating that the console version is going to be running the same textures and shader code as a PC on ultra

– “almost unheard of” = NOT unheard of

– not giving out PC codes – yes this may be a fact, but your assumed reasoning behind this is conjecture, still, thanks for bowing to my demands and referencing facts.

– console life cycle – yeah it’s early on, and no doubt optimisation will get better as time goes on, as we saw with PS2, XBOX, 360 and everything before, however, with both new consoles being x86 based, there’s no reason to believe that ports will be ‘shoddy’, or even that they will really exist, as co-development across platforms is much more flexible this time round.

– watchdogs – yes, you are correct, a game came out recently with that name….I fail to see how that fact backs up your point. (before you start, I’m aware that it runs like a sniffer dog fresh from a ketamine bust, but the ‘recommended’ VRAM for that was 2GB)

– not marketing graphics – fair enough, but they’re making a big song and dance about the behavioural nuances, so the graphics might not be their main selling point. this doesn’t mean that the graphics wont be good, amazing, ground breaking, or any other adjective, just that there is more to this game than good graphics.

“Whereas your argument is ****WEEEEE HIGH SPECS MUST BE GOOD WEEEEEEEE!!!*****” – no, it wasn’t, my argument was that in order for graphics to progress, they need to target the most powerful hardware available at the time (or in the pipeline) for ultra settings, and that stalling to be in line with what the consoles can handle is holding back PC games from being all they can be (or at least has been for the last 4-5 years)

Interlacing the pixels? What on earth does that mean? Sounds like nonsense to me.

Everything about the game screams “bad port” but you think having OTT specs means it’s pushing limits, rather than being badly coded.

So put £50 where your mouth is.

Less than 4% of PC gamers have 4GB VRAM on their GPUs, said one guy on The Evil Within article, and he’s right. Who has that much VRAM available? They make it sound like you’re gaming on a 4k screen.

PhoneyVirus

https://twitter.com/PhoneyVirus

https://phoneyvirus.wordpress.com/

poor PC gamer, get your wallet and go upgrade ur graphic card, again…

start using the fucking system RAM idiots!

In addition to poor coding skills, this is just a ploy to force people away from PC and towards consoles. Even the next gen consoles don’t have 6GB of VRAM, and it also means console owners will get fixed medium quality graphics.

You need to upgrade your card every 3-4 years. After all, this game on high will look and play better than on PS4/XBO.

While the Witcher 3 has an annoying texture and NPC pop-in issue due to a LACK of VRAM usage.