Regular readers will know of the challenge OCZ set KitGuru: to kill five of its ARC 100 solid state drives. If you need a recap, head back to the original article posted on December 10th.

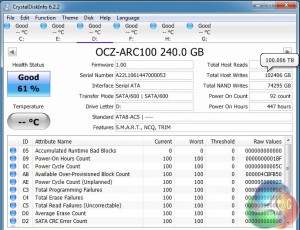

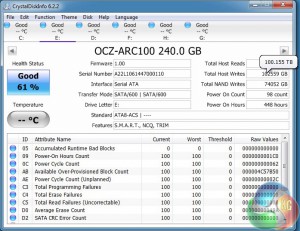

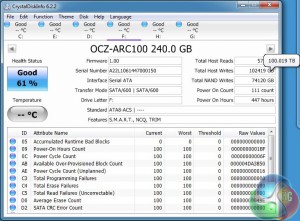

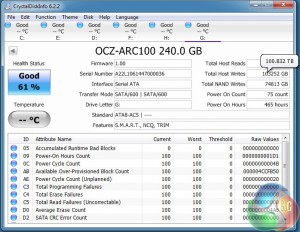

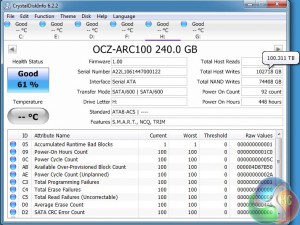

All of the drives passed the 22TB warranty figure at the close of December; our next goal was to get them all past the 100TB mark. Would any fail?

Read the original ‘challenge’ editorial from 10th December, HERE.

Read the 17th December 2014 ‘challenge’ update, HERE.

Read the 27th December 2014 update on the 22TB mark, HERE.

We have no idea how long the five drives will last, but it will be interesting. OCZ are hoping that this test will go some way to repairing their reputation, which suffered in previous years after well-known problems with the SandForce SF-2281 controller. OCZ no longer use this controller and have introduced a new ‘ShieldPlus Warranty System’ to reassure customers.

The ‘ShieldPlus Warranty System’ removes the need for proof of purchase if a drive fails. A brand new SSD of the same capacity is shipped to the customer in advance; once the replacement arrives, the customer sends the faulty drive back in a prepaid envelope. Yes, it does all seem too good to be true, but we can’t find a catch.

Anyway, be sure to check KitGuru regularly for updates on this OCZ ‘death test’. This is the first time any SSD company has proposed this idea, so we have to give credit where it is due! 100TB is a nice milestone for all five drives to hit. There have been no major failures yet, but we will keep you updated!

Discuss on our Facebook page, over HERE.

KitGuru says: More updates coming as we get them!

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

No “major” failures yet. So what minor failure have you had?

How do these compare to other drives? I am not sure what other drives would achieve in this situation. We have been researching the purchase of 30+ consumer SSDs to put into some new servers. We understand consumer drives are not designed for this, but they will be used with hardware RAID 10 and Ceph n+1 storage, with NFS backup.

The video said one drive has a bad block fault, but this does not stop the drive from functioning.

You might be interested in this article, Richard.

http://www.storagereview.com/in_the_lab_giving_client_ssds_an_edge_in_enterprise

There was also a test with Samsung 840s on a Dutch website. They reached almost 800TB of writes before they died.

For example, I have a SanDisk Extreme Cruzer 32GB, which is recognised as a SanDisk SSD U100 32GB. It is rated for a maximum of 20TB of writes, but I think it will do more than that. It has written 348GB so far, with Remaining Life still at 100% and Wear Range Delta (wear leveling count) at 18. I expected the life to drop to 99% at around 300GB. A great USB flash drive, too.
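To make the arithmetic in that comment concrete, here is a minimal sketch. The helper names `gb_per_percent` and `projected_endurance_tb` are hypothetical (not part of any SanDisk or SMART tool), and it assumes the Remaining Life value falls linearly with host writes, which real controllers do not guarantee.

```python
from typing import Optional

# Hypothetical helpers for rough SSD wear arithmetic.
# Assumption: "Remaining Life" drops linearly with host writes.


def gb_per_percent(rated_tb: float) -> float:
    """Host writes (GB) per 1% of life if the rated endurance were used up exactly."""
    # Decimal units, as drive vendors use them: 1 TB = 1000 GB.
    return rated_tb * 1000 / 100


def projected_endurance_tb(written_gb: float, remaining_life_pct: float) -> Optional[float]:
    """Linearly extrapolate total endurance (TB) from writes so far."""
    used_pct = 100 - remaining_life_pct
    if used_pct <= 0:
        # The counter has not moved yet, so there is nothing to extrapolate from.
        return None
    return written_gb / used_pct * 100 / 1000


# 20TB rating from the comment above.
print(f"Rated pace: about {gb_per_percent(20):.0f}GB per 1% of life")
# Remaining Life is still at 100% after 348GB, so no projection is possible yet.
print(projected_endurance_tb(348, 100))
```

On a 20TB rating, the rated pace is 200GB per 1% of life, so a counter that hasn't moved after 348GB is already ahead of it. As a purely hypothetical reading, Remaining Life hitting 99% at 300GB would project to roughly 30TB under this linear assumption.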

Thank you for this. I've been reading up on the OCZ ARC 100s and they will not work for our situation: we are using HP enterprise hardware, and we need SSDs with a thermal sensor, otherwise the server fans run at a constant 100%.

Why boast about your YouTube channel, but then embed a Vimeo player?

Apologies, I never really bother with the videos; I just scan the text.

Do not use Micron drives for enterprise write workloads… then again, if you are looking at Ceph, you must not be interested in performance anyway. If you want cheap, I would suggest looking on eBay for Intel S3700 or S3500 drives; you can get them for 50c/GB. Micron cannot code garbage collection to save their lives.

Thank you for your reply. Why do you suggest that if we are using Ceph we are not looking for performance? Our plan is to trial a minimum of 3x HP servers, each containing 2x 15k SAS drives for the OS, 1x small SSD for the Ceph cache and 4x 500GB SSDs. The hardware RAID card is limited to 200k IOPS, and we will potentially be using 10GbE for Ceph. We are aiming for a balance between performance, space and high availability for virtual machines. We already have the 3 servers, RAID cards and SAS disks… if it's too much hassle we could simply go with 8x SAS, but we won't get anywhere near the same performance.

So… Ceph performance is… interesting. It has a lot of potential but isn’t there yet. We used 10GbE links on our cluster and never came close to maxing them out: throughput tests could hit 900MB/s, but never while running Ceph's internal benchmarks. I think our best performance was a little over 200MB/s, with 6 nodes, 15 drives each, replicas=3 and SSDs for metadata. Not sure which hardware RAID card you are talking about; even the old P420 can do 450k IOPS, and the P430 can do 1M.
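A rough way to see what replicas=3 means for the drives themselves is sketched below. This is plain arithmetic, not a Ceph API. It assumes the ~200MB/s figure was client write throughput, that each client write is stored `replicas` times, and that data spreads evenly over every drive; journal double-writes and metadata traffic are ignored. The function names are hypothetical.

```python
# Rough Ceph replication arithmetic (hypothetical helpers, not a Ceph API).
# Assumptions: every client write is stored `replicas` times, data spreads
# evenly across all drives, and journal/metadata overhead is ignored.


def backend_write_mb_s(client_mb_s: float, replicas: int) -> float:
    """Aggregate data written to the storage drives for a given client write rate."""
    return client_mb_s * replicas


def per_drive_write_mb_s(client_mb_s: float, replicas: int,
                         nodes: int, drives_per_node: int) -> float:
    """Average write rate per drive under an even data distribution."""
    total_drives = nodes * drives_per_node
    return backend_write_mb_s(client_mb_s, replicas) / total_drives


# Figures from the comment above: ~200MB/s, replicas=3, 6 nodes x 15 drives.
total = backend_write_mb_s(200, 3)
each = per_drive_write_mb_s(200, 3, 6, 15)
print(f"{total:.0f}MB/s across the cluster, about {each:.1f}MB/s per drive")
```

Under these assumptions, 200MB/s of client writes becomes about 600MB/s of backend writes, or only a few MB/s per drive across 90 drives. That hints the limit lay somewhere other than raw drive bandwidth, though real overheads would raise the per-drive figure.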

Do you have an account on any of the main forums, so we can continue this somewhere more on-topic? lol

Thank you, this has been very useful. We are using the P420, but HP's official figure is a maximum of 200k IOPS. We do have a bunch of P410s but didn’t want to try them, as HP quotes a maximum of 50k IOPS.

Huh… they're probably just playing it safe in the QuickSpecs. Also, to hit that figure you need to have Smart Path on; otherwise you will start capping out at around 200k. On the latest firmware it should auto-enable for SSD volumes.

P420s are much quicker than P410s… the generational improvement of these controllers is outstanding.

You can find me in freenode #servethehome

The main reason for linking to that article was more to do with over-provisioning the SSDs, rather than recommending Micron drives. 🙂