Advanced Micro Devices said this week that it would provide special discounts to owners of GeForce GTX 970 graphics cards. It is not surprising that AMD is trying to capitalize on the scandal over the GTX 970's incorrect specifications. Unfortunately, AMD did not provide any details of the offer.

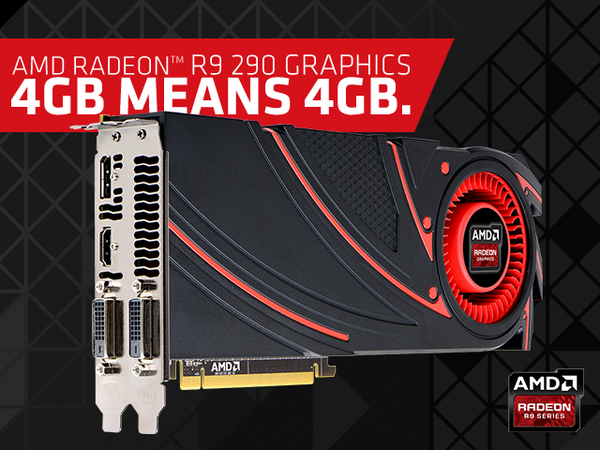

“Anyone returning their GTX970 and wanting a great deal on a Radeon with a full 4GB please let us know,” said Roy Taylor, corporate vice president of global channel sales at AMD, in a Twitter post.

Earlier this week Nvidia confirmed that it had published incorrect specifications for its GeForce GTX 970 graphics card. Due to architectural limitations of the GM204-200 graphics processing unit used on the card, it cannot use more than 3.5GB of its onboard memory at full speed. As a result, the performance of the GeForce GTX 970 drops in certain cases.

Many owners of Nvidia GeForce GTX 970-based graphics cards now want to return their cards to stores because of the incorrect specifications. Since Nvidia has not yet announced any plans to settle the dispute with angry GTX 970 owners, annoyed customers will naturally return their cards to retailers and/or accept discounts on AMD Radeon R9 290 or R9 290X graphics cards once AMD officially announces an appropriate campaign.

Nvidia did not comment on the news story.

KitGuru Says: It will be very interesting to learn what exactly AMD will offer to owners of Nvidia GeForce GTX 970 graphics cards.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

I know it's not gonna be a popular idea, but just because the specs were slightly misleading doesn't change the fact that it is an amazing card for the price. Only in very, very, very rare cases will that 500MB of slow memory actually slow down your PC. For 99.99% of gaming the GTX 970 will do its job just like it was advertised. FYI, I hate Nvidia's schemes too, but I'll stay with them until AMD provides a cool, low-power card at this performance.

If I remember correctly, didn't the 660 have 1.5GB of VRAM, then a 512MB buffer at 128-bit? No one seemed to care about that at the time…

So because it's 3.5GB instead of 4GB, people are badmouthing the card? What are you people, Skyrim-with-texture-packs players?

Windows uses vRAM too. Windows 8 with 2 monitors plugged in and a game in borderless windowed mode (if you are a multitasker like me) instantly means upwards of ~400MB of vRAM already in use. That means you're instantly limited to 3GB, or sometimes even less depending on what you have running, which means that the card is broken and not what users BOUGHT.
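To put the comment's numbers side by side, here is a minimal Python sketch of the headroom arithmetic. It assumes the commenter's ~400MB desktop figure (an estimate, not a measurement) and, as a worst case, that all of it sits in the fast 3.5GB segment; how the driver actually places desktop allocations is not established in this thread. The function name `fast_headroom_mb` is purely illustrative.

```python
# Rough headroom arithmetic for the GTX 970 scenario described above.
# Assumptions (not measurements): the ~400 MB desktop figure comes from the
# comment, and in the worst case all of it is placed in the fast segment.
FAST_SEGMENT_MB = 3584  # 3.5 GB segment (7 x 512 MB)
SLOW_SEGMENT_MB = 512   # 0.5 GB segment (1 x 512 MB)


def fast_headroom_mb(desktop_overhead_mb: int) -> int:
    """Fast-segment VRAM left for a game after the desktop's allocations."""
    if desktop_overhead_mb < 0:
        raise ValueError("overhead cannot be negative")
    return max(FAST_SEGMENT_MB - desktop_overhead_mb, 0)


if __name__ == "__main__":
    left = fast_headroom_mb(400)
    print(f"{left} MB of fast VRAM left (~{left / 1024:.1f} GB)")
```

With the 400MB figure this leaves 3184MB, about 3.1GB, of fast memory for the game, roughly the "3GB" the comment mentions.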

No, not even that would do it. I used to have a 3GB GTX 660 Ti (also had memory issues, I heard) and I modded the hell outta Skyrim and had plenty of VRAM to spare. Maybe if you're talking about 4K modded Skyrim, but then you would need SLI for smooth gameplay, so no issue.

I have an ASUS Strix. I upgraded from a 660 Ti 2GB, and even on that 660 Ti I had no problems with texture-pack Skyrim. I have 3 monitors on my computer, but 2 weeks ago I decided to insert my old Radeon 5770 for the other monitor so I could have more juice, since I have put every graphics setting in the Nvidia control panel to high. But other than that, 3.5GB is still way more than enough, in my opinion.

Last week when I played Skyrim with all the texture packs it sucked up 2.4GB of VRAM, leaving 1.1GB free (since it's 3.5GB). So… 3.5GB is still plenty.

The 660 and 660Ti had it differently. They used a 192-bit memory bus, so the fast vRAM was 1.5GB and the slow vRAM ran at 48GB/s (more if you OC'd). The 970 is even worse; its slow section is only ~28GB/s at BEST, and memory clock OCs don't help it much at all. The 660 and 660Ti had a single 512MB block attached to a single memory controller with full L2 cache access. There were also 3GB versions of the cards that did not have the problem. Also, SLI doubled the bandwidth to 96GB/s (or more), so users with that setup probably noticed it less too, especially on Windows 7 and running games fullscreen on a single monitor, where many games would barely use more than 1.5GB of vRAM. Now, it might be a real problem in newer games released since the new consoles arrived, because they are so poorly optimized, but back then there was very little way to notice.

You don't even get the point lol. Yeah, 3.5GB is plenty or more than enough for average users, but that doesn't matter. People who bought the card didn't get what they paid for. Simple.

660Ti 3GB cards don't have the issue; only the 2GB ones do. It's because they added three 512MB chips on three 64-bit controllers (totalling a 192-bit memory bus) and a final 512MB chip on one of those controllers, limiting the final 512MB to 48GB/s at stock memory clocks. The 3GB card would have had two extra 512MB chips on the remaining two 64-bit controllers, meaning the second 1.5GB of vRAM ran at the full 144GB/s. Or they could have just used three 1GB chips, one on each 64-bit controller.
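The chip layout described above can be turned into a small model. This Python sketch illustrates the commenter's arithmetic and is not Nvidia's documented address mapping: it assumes 512MB chips, 64-bit controllers and the stock 660 Ti data rate of about 6 Gbps per pin, and it treats memory as striped evenly across whichever controllers still have capacity. `segments` and `bandwidth_gbs` are hypothetical helper names.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin data rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


def segments(chips_mb_per_controller, data_rate_gbps, controller_bits=64):
    """Split a card's memory into interleaved segments (simplified model).

    Segment 1 is striped across every controller up to the smallest
    controller's capacity. Whatever is left over is treated as one more
    segment striped across only the controllers that still have capacity
    (this assumes the leftovers are equal, as in the 2 GB 660 Ti case).
    Returns a list of (size_mb, bandwidth_gbs) tuples.
    """
    per_ctrl = [sum(chips) for chips in chips_mb_per_controller]
    base = min(per_ctrl)
    result = [(base * len(per_ctrl),
               bandwidth_gbs(controller_bits * len(per_ctrl), data_rate_gbps))]
    extra = [p - base for p in per_ctrl if p > base]
    if extra:
        result.append((sum(extra),
                       bandwidth_gbs(controller_bits * len(extra), data_rate_gbps)))
    return result


STOCK_RATE_GBPS = 6.008  # GTX 660 Ti effective GDDR5 data rate per pin

card_2gb = [[512, 512], [512], [512]]            # extra 512 MB chip on controller 0
card_3gb = [[512, 512], [512, 512], [512, 512]]  # two chips on every controller

for name, layout in (("2 GB", card_2gb), ("3 GB", card_3gb)):
    for size_mb, bw in segments(layout, STOCK_RATE_GBPS):
        print(f"{name}: {size_mb} MB at ~{bw:.0f} GB/s")
```

For the 2GB layout it reports 1536MB at about 144GB/s plus 512MB at about 48GB/s; the 3GB layout comes out as a single 3072MB segment at about 144GB/s, in line with the comment.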

And my point is, people are just being retarded about that 500MB. When the GTX 770 was released it was at the same price, with 2GB of VRAM. Now people are turning in the 970 just because it has 500MB less that they don't even need? That's stupid in my opinion lol. It's not like you're gonna buy that Radeon with 4GB and actually use the 4GB. People with a single card will never need 4GB. 4K gamers are running SLI and 3-way, so why do you need the 4GB? Why are people being so difficult about the 500MB?

Kind of misleading there, AMD. The GTX 970 does in fact have 4GB of usable VRAM, no matter how you try and slice it.

Even at 3.5GB of VRAM most 4K games will still whistle on by. Even three 1080p monitors in Nvidia Surround will whistle on by.

Who in their right mind would give up a decent gaming video card for an AMD driver-broken card? And yes, to this date AMD Radeon drivers are the worst… and before anyone says anything… I own both AMD and Nvidia cards in a few PCs around the house. I can tell you right now which PC has fewer driver crashes or complete system BSODs due to drivers… Take a wild guess….

On another note, this almost sounds like the issues some GTX 570 boards had with CUDA cores… The defective boards had one block of cores disabled and were then thrown together to make the GTX 560 Ti 448 Core Editions… It was a massive success: $100 cheaper than the 570 and more powerful than the 560 Ti… Price for performance was amazing. I personally owned one of these boards.

The problem is both of the next-gen consoles have 4GB VRAM, so over the next year I imagine some games will make the most of it. Sure, it's not an issue for today's games on the 970, but I see it becoming an issue in the future, where you have to lower settings to use less RAM or suffer poor FPS.

In the future, meaning in 2 years, when people are already SLI'ing GTX 1080s?

That too, but here's why I don't see a reason to return mine: the 64 ROPs that are actually 56, so what? Like… I'll get 3 fewer fps or something? It's not like it will stop me from playing my games lol, same as the 3.5GB. And like I stated, no one ever used 4GB on a single card before. Those on 4K monitors are always running SLI and 3-way.

I love my 970. FU AMD, you can't make good cards but you can mock good ones. Go research an efficient new architecture, because you've been rebranding your cards for almost 4 years now. Time for something new or time to go home, you losers.

The whole point is that Nvidia sold the card with misleading advertising and got suckers like you to buy it. You actually prove how ignorant someone can be. Truly the only loser here is you.

The whole point is that Nvidia sold the card using misleading advertising, and they only owned up to it when they got caught. I don't care how good the card is; anyone who tries to justify this type of blatant lie to sell a product should be brought before the courts and heavily fined, and all owners should get a full refund directly from Nvidia, not the distributors. I'm glad I didn't buy a GTX 970, piece of garbage.

Where’s the Intel ad that says four cores means four cores?

Please -_- 3.5GB still beats the 4GB heater that is the R9 290X.

Better hope games don't advance. I can easily see them taking up more than 3.5GB of VRAM this year at 1440p or even 1080p. So the card has enough power to play games, you push every setting to max, including textures, and HERE COMES THE STUTTER.

I agree up until “piece of garbage”. It's still a good card!

You consider a GPUBoss review meaningful?? LOL

“The GTX 970 does in fact have 4GB of usable VRAM, no matter how you try and slice it”

Yeah theoretically usable 4GB. But reality is 3.5GB 256bit and the remaining 500 MB is 32Bit (28GB/Sec).
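For reference, the widely reported explanation Nvidia gave after the specifications were corrected puts the fast 3.5GB on seven of the card's eight 32-bit memory channels (224 bits rather than the full 256) and the last 0.5GB on the remaining channel. The Python sketch below reproduces those figures from the 7 Gbps GDDR5 data rate; the 512MB-per-channel split is derived by dividing 4GB across eight channels, and the variable names are illustrative.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin data rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


DATA_RATE_GBPS = 7.0   # GTX 970 GDDR5 effective data rate per pin
CHANNEL_BITS = 32      # each memory channel is 32 bits wide
CHANNEL_MB = 512       # 4 GB spread over 8 channels

fast_channels, slow_channels = 7, 1

advertised = bandwidth_gbs(CHANNEL_BITS * 8, DATA_RATE_GBPS)
fast = bandwidth_gbs(CHANNEL_BITS * fast_channels, DATA_RATE_GBPS)
slow = bandwidth_gbs(CHANNEL_BITS * slow_channels, DATA_RATE_GBPS)

print(f"advertised 256-bit: {advertised:.0f} GB/s")
print(f"fast segment: {fast_channels * CHANNEL_MB} MB at {fast:.0f} GB/s")
print(f"slow segment: {slow_channels * CHANNEL_MB} MB at {slow:.0f} GB/s")
```

The result is 224GB/s advertised, 196GB/s for the 3584MB segment and 28GB/s for the 512MB segment.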

LOOL, you're the loser: you bought a GTX 970 and later nVidia admitted that the GTX 970 is crippled.

Fraud is fraud; not everyone can compromise like you can.

As of now it can hardly slow down your games. However, in the long run the card will be less future-proof, which is kinda bad when you pay so much for it.

Quad-core processors are dying out now, mate; 6 cores and beyond is the way forward!

This is totally stupid. Consider for a second: if it goes beyond 3.5GB of VRAM, performance drops like hell.

Just freaking deactivate the use of the 0.5GB and you are fine!!!!! It's so freaking simple to solve, I don't even know why Nvidia is refusing to fix it!!!! 3.5GB of VRAM is a lot by any standard, and it's not like they can't fix this with firmware. Hell, they should have just sold the 970 with 3.5GB instead of 4GB: cheaper for them to produce, more benefits for them. Hell, even a 3GB vRAM 970 would be awesome. When did Nvidia lose all their brains?

Using 3930MB to 4060MB in AC Unity with 970 SLI, no stutter. I'm OK, thanks AMD.

What this guy said ^

At those kinds of speeds you may as well just fall back on your DDR3 system RAM, which any Windows system can do very easily, and does at times. So the extra 0.5GB on the GPU is pretty much just giving you half a GB of system RAM. Big whoop.
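To put numbers on that comparison, the sketch below computes peak theoretical bandwidth for system RAM and for the PCIe link the GPU has to cross to reach it. It assumes dual-channel DDR3-1600 and a PCIe 3.0 x16 slot; other platforms will differ, and latency is ignored. The helper names are illustrative.

```python
def ddr_bandwidth_gbs(channels: int, transfer_rate_mts: int, bus_bits: int = 64) -> float:
    """Peak system-memory bandwidth in GB/s for `channels` 64-bit DDR channels."""
    return channels * bus_bits * transfer_rate_mts / 8 / 1000


def pcie3_bandwidth_gbs(lanes: int) -> float:
    """Peak one-direction PCIe 3.0 bandwidth: 8 GT/s per lane, 128b/130b encoding."""
    return lanes * 8 * (128 / 130) / 8


GTX970_SLOW_SEGMENT_GBS = 28.0

system_ram = ddr_bandwidth_gbs(channels=2, transfer_rate_mts=1600)  # dual-channel DDR3-1600
link = pcie3_bandwidth_gbs(16)                                      # x16 slot

print(f"DDR3-1600 dual channel: {system_ram:.1f} GB/s")
print(f"PCIe 3.0 x16 (per direction): {link:.2f} GB/s")
print(f"GTX 970 slow segment: {GTX970_SLOW_SEGMENT_GBS:.1f} GB/s")
```

Under those assumptions system RAM peaks at 25.6GB/s and the PCIe link at about 15.75GB/s per direction, so the 28GB/s slow segment sits in the same ballpark as system memory.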

Well, it would only affect people using multiple monitors while gaming, as when the game starts using more than 3.5GB of VRAM it will begin to stutter due to the slower 500MB of memory.

Go ahead and enjoy your GTX 970 with 4GB, ahem, 3.5GB + slower 0.5GB card. Lolz.

I have 2 of these 970s in SLI – perform flawlessly. This is a desperate attempt by AMD to trash NVIDIA. Unless you’re running benchmarks all day, the real world use is negligible.

As a businessman I appreciate AMD jumping on what is a rare opportunity to cash in on a competitor's screw-up. As far as the GTX 970 being way better than the 290 or 290X, well… it is really about equal, give or take. I know because I actually have both cards: a 4GB MSI Radeon R9 290 and an MSI Gaming GTX 970 4GB (OK, technically 3.5GB + 0.5GB). The R9 290 performs just as well all things being equal (in the same rig/same setup), and actually beats the 970 in a few games (Tomb Raider, Hitman Absolution). I like the 290 and have it in my wife's rig right now. The GTX 970 comes out on top in more games though, at least by a few FPS in BF4/BF3 (yes, even using Mantle), COD, Crysis 3, Metro, Splinter Cell Blacklist, Far Cry 4 and Watch Dogs (only about 4 FPS difference in Watch Dogs at the same settings). I know the 290X is even a little better than the 290, but my MSI easily OCs to equal a 290X. I play at 1440p on a 27″ ASUS monitor. What I can tell you is that with both cards' fans set at 50%, the 970 will be at 55-60C and the 290 will be at 65-75C. The 290 is audible too. Not really “loud”, but compared to the 970 it is. The 970 is quiet as a mouse. I really haven't had any big driver snafus with either, so I'd say they're about equal there. Power draw is almost double on the AMD card though. I like both cards, but if they were the same price I would still take a 970. No game uses over 4GB unless you are gaming at 4K, and those people gaming at 4K are a small minority (plus if you're a 4K gamer, you wouldn't have bought a 970 anyway unless you're a fool). Now if the 290/290Xs are going to sell for $100 less than a 970, I would take one in a heartbeat. It's really just as good, or really, really close. Just warmer and noisier. But the GTX 970 is still an awesome card. If you have one you know what I'm talking about. It's crazy fast, and very cool and quiet. But was Nvidia wrong? Oh yeah, they were. Did they know about the incorrect info/specs? No question they did.
Shame on you Nvidia. That’s bad business.

This is terrible!!

https://www.youtube.com/watch?v=spZJrsssPA0

You're just a lowlife twat. Your comment is below minimum. Enjoy your 970 while you can. Your comment is so wrong on so many levels. I don't even know why I reply to a turd.

That means u r using half of the memory capacity per card. And ur comment shows how little inteligent u r. Anyways amd card is 15 months older. So k thanks nvidia for little efort from ur side. Dumb ass.

The 290 as well as the 970 can play 1440p with ease. It doesn't matter to me how good the card is; lying is lying. Therefore, as a free man driven by morals and common sense, I stay away from fraudsters. On the other hand, I bought my 290 for £120. Now that is a saving. No power bill will make up for that in 50 years, and I can live with a few fewer frames. And next year I'll do exactly the same with the new generation. People these days are spoiled, and what is very common with Nvidia fanboys is that they will take whatever the marketing department or some online reviewers spit at them. Try to think rationally. Of course, if your parents' wallets are fat or you are just rich, ignore everything I just said. I don't know anyone who can afford to upgrade every single year to stay on top of this silly war. A bunch of dumbass fanboys forget their principles and point to card performance, blinded by lies and fraud. Now go back to your PC and enjoy your COD game.

GPU Boss isn't great. AnandTech is better.

http://www.anandtech.com/bench/product/1068?vs=1355

Fraud is fraud indeed. But some people just overdo it. Like I said, they are returning the card for something that doesn't even affect their gaming performance.

lol the keyword here is “turd”

Actually no, in a multi-GPU setup the memory is not added together, so he is using all of his memory capacity.

“And ur comment shows how little inteligent u r.”

cya later

Finally someone who actually gets it, unlike most of the people commenting here

Once again, slice it how you want, but it's still 4GB of usable memory.

Don't get me wrong, I'm not defending what Nvidia did here, but sticking to the facts… 4GB of usable memory is still 4GB of usable memory…

It's just a poor attempt by AMD at taking a pot shot here, while skewing the facts.

GTXBilal, wow your username wasn’t a giveaway.

Intel does 2c/4t CPUs. Also, it's more about what/how they advertise than the actual hardware.

Nicely said.. but people who buy NVidia still don't realize that the only reason their cards are fast is that they are cheating with IQ (image quality) optimizations. They have been doing this since the 5800 Ultra. Another scandal. If you compare the image quality to ATI's with everything on high, you would notice the difference. Do me a favor: compare Skyrim and tell me you don't notice the blooming on the NVidia rig. I have a GTX 780 Ti and three AMD 290Xs. I do my own comparisons because YOU CAN'T TRUST REVIEWS, or even Nvidia-paid commenters on forums. Sad world.. lol.

Thanks for this balanced take on the cards. I’m actually keeping my GTX 970 as it performs rather well when I’m playing in 1080p or 2k (via DSR). I can’t really afford a decent 4k monitor this year, but when the time comes that 4k displays are within financial reach, I’ll remember nVidia’s lies. 🙂

Yes, that means I won't buy another GTX 970 and SLI it. I'll sell off this card and probably get an AMD-based product. I just hope AMD doesn't churn out 300W TDP GPUs; I don't want to upgrade my 750W PSU, and the Philippines is the most expensive when it comes to electricity in Southeast Asia. :/

http://www.anandtech.com/bench/product/1059?vs=1355

First, GPUBoss is unreliable; second, it does not state how it tested. In a game that uses more than 3.5GB of VRAM, the R9 290 is better. Also, let's be honest. 4GB? Hardly impressive, forget ‘future proof’.

What I find really funny is that they believed they were going to get away with it. Nvidia, it's 2015, not 1995. Nothing stays hidden for long, even with all the misdirection from governments and companies. I do wonder what they were thinking at the top when they came up with the idea to do what they did.

Think of it this way: you buy a car and you're told it's a 4-cylinder engine, but it's actually running on only 3 cylinders, and the 4th, when it does come on, makes your car stutter. So tell me, do you want to buy a car like that?

LOL failed. Still, if you're happy with Nvidia that's fine. It's not like most people will have the $$ for a monitor that will push the 970 right now, and if they do, the 980 does the job just fine.

The software that shows VRAM usage can be slightly buggy. For example, my old rig with 6870s in CrossFire would show 1993MB of VRAM in use. That is 993MB over the 1GB of GDDR5, which would mean I'm supposed to be using 993MB of DDR3 system RAM, which would mean I'm supposed to dip below 30 FPS. That isn't the case. Sometimes monitoring software will just add up both cards, and you have to divide the total usage. My GTX 580 3GB ED (yeah, relics, I know, but those CUDA cores are still fast) will show up as either 1.5GB or 6GB in some software. MSI Afterburner showed that I maxed out my VRAM usage, but GPU-Z showed that I only used approx. 3GB of the total 6GB. Software >.> So Afterburner read my cards as 1.5GB while GPU-Z read them as 6GB; both are wrong.
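The correction described above (divide a summed reading by the number of cards) is simple enough to sketch. In alternate-frame SLI/CrossFire each card holds its own copy of the data, so the number that matters is usage per card. Whether a particular tool sums across cards has to be checked for that tool; the `tool_sums_cards` flag and both function names below are hypothetical stand-ins, and 1GB is taken as 1024MB.

```python
def per_card_usage_mb(reported_mb: float, num_cards: int, tool_sums_cards: bool) -> float:
    """Convert a monitoring tool's VRAM reading into per-card usage.

    In alternate-frame SLI/CrossFire each card keeps its own copy of the
    data, so what matters is usage per card, not the sum across cards.
    """
    if num_cards < 1:
        raise ValueError("need at least one card")
    return reported_mb / num_cards if tool_sums_cards else reported_mb


def fits_on_card(reported_mb, num_cards, tool_sums_cards, card_vram_mb):
    """True if the per-card usage fits within a single card's VRAM."""
    return per_card_usage_mb(reported_mb, num_cards, tool_sums_cards) <= card_vram_mb


# The 6870 CrossFire example: a summed reading of 1993 MB on two 1 GB cards.
print(per_card_usage_mb(1993, 2, tool_sums_cards=True))
print(fits_on_card(1993, 2, tool_sums_cards=True, card_vram_mb=1024))
```

For the 6870 example, a summed 1993MB works out to 996.5MB per card, which fits within each card's 1GB.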

VRAM size is very important though. From the reports I've seen about the 0.5GB section, it seems that if the card uses it, the drop is like your system trying to use HDD or RAM space to store the data. AMD made a good call there.

Now, before somebody says “AMD Fanboy”, I own equal amounts of Nvidia, Intel, and AMD products. Hell, my first graphics card was the 9800 GTX+ with the very classic C2D.

But what Nvidia did here is wrong. And anybody who wants AMD dead needs to rethink that idea. Nvidia was able to push card prices so damn high because AMD didn't make a current-gen/current-year card. I remember the days when 400 bucks meant high end and 500 to 600 meant dual GPU. Now it's 500 for a single GPU.

According to nVidia it was a miscommunication between the engineers and the sales people: they listed the 970 as having the same number of ROPs (I think that's what it was) as the 980, but it actually didn't. So unless that's some BS lie, you have to give them a little bit of slack.

That is geek-priceless!

You sir, deserve a like.

Should I give up my 970s (SLI) for two 290Xs? I game at 1080p, and where I live the 290s are more expensive than 970s.

If you don’t know your OWN top-of-the-line-products there is still something _seriously_ wrong in the chain of your company. Something HAD to be kept secret, for all the wrong (and very ill) reasons.

What do you call the “mistake” made by Nvidia then? The wrong specifications? Glorious?

Also, the people that initially complained about performance problems, you are insulting them with that speech of yours.

Jeez, you Nvidia people don't even have respect for each other. Incredible.

Rebranding cards for 4 years now? In what cave were you hiding when AMD launched the 7970? It destroyed the competition, for example.

Which is natural. For example, Nvidia’s 980 is doing the same now. Both companies take turns in leading performance.

People that don’t even realise the latter fact fall in a category called “Mega Fanboys.”

Tag. You’re it.

Haha, Turd. that one cracked me up.

“Turd”

Heck, the 970 is fine at 1080p, unless you see it otherwise. The devil will be in the performance in the future; I'm not sure how rapidly games will use more VRAM. Of that no one can be sure.

If it isn’t running well, or you are REALLY looking for an upgrade, wait for AMD’s 300 series imho.

You obviously cannot read. I said real-world usage is negligible.

Well you know, you're right. It's just 500 megs, not really a big deal. I sincerely agree!

But nvidia made a big deal out of it when they (actually) LIED TO EVERYONE.

The fact that it doesn't seem to bother you could tell me 2 things:

1) Negligence? (This is not an insult, I could be wrong)

2) You are already used to nvidia lying to you

Well, call me crazy, but I don’t think giving even less respect to people is the cure here…

What are you talking about? Where am I disrespecting anybody?

“I have 2 of these 970s in SLI – perform flawlessly. This is a desperate attempt by AMD to trash NVIDIA. Unless you’re running benchmarks all day, the real world use is negligible.”

I have two, they work great – AMD is running a smear campaign for their under-performing card.

How is that disrespectful?

That would be funny if AMD hadn't been crippling their own kit with such horrible drivers. For Linux users at least, AMD is pretty much a no-go, as the drivers are simply douche….

Look, people got lied to, and you are trying to cover that up. That is disrespectful to them. That's enough!

Well, everyone has their own way of seeing things, and I respect everyone's opinion. I know NVIDIA has lied several times with their previous cards too. But I am not one of those computer guys who goes on a website to compare different cards to see which one has better performance than others; I'd rather just buy it and try it for myself to see if the card is good for the way I use it. I started doing this after I went from a Radeon 5770 to a GTX 460, realising that Nvidia gives better performance than some Radeons even though the Radeon was rated higher than the GTX. What I also love about the 970 is the power consumption and the single 8-pin power connector, which can also make things easier for SLI users. So even with the 500MB less I still don't see a reason why I should turn it in, you know? I'm still happy with my ASUS Strix 970 because it does more than what I want it to do anyway.

I cannot tell if you’re trolling or are serious….

I don’t work for NVIDIA, I’m not part of any coverup – just letting people know that it’s being blown out of proportion.

The issue goes beyond gaming at high resolution; it speaks to the card's longevity. People buy high-end Nvidia cards for longevity, 3-5 years of usage at least. High resolution isn't the only thing that eats up memory. Some current games, and surely future games, can eat 3.5GB+ of memory at just 1080p. That effectively makes the GTX 970 defective, because for all its performance, it will stutter like crazy in future titles that eat more than 3.5GB of RAM at 1080p. The only way to avoid this is essentially to come up with a software limit that cripples the card to 3.5GB of usage only.

So it's not just a 4K resolution problem, it's a future-proofing problem. The GTX 970 is going to be performing like a GTX 260 in future titles… all because of a stupid RAM configuration. The stuttering will basically be too unbearable to play anything.

The issue is they can't disable the last 512MB with software because of the way the hardware is designed. And since the memory controller can only access either the 3.5GB or the 512MB partition at one time, even a software workaround would cause the memory controller to drop the 3.5GB to work on the 512MB, which means the performance issue still exists.

What about when games use 4GB of RAM at 1080p? The performance issue will make the GTX 970 stutter like crazy. This means the GTX 970's longevity is SEVERELY diminished. Dying Light, for example, can use more than 4GB at 1080p.

Also, what if I want to run 8x SSAA in a game? AA requires memory. 16x anisotropic filtering requires memory. That means it's VERY easy to go over the 3.5GB limit at 1080p. This card is more powerful, so obviously people will be running titles at higher AA settings.

Nvidia's DSR feature is also important… It allows you to run 4K resolution on a 1080p monitor for a crisper image… this means the 3.5GB problem will persist for anyone trying to use this feature in any of today's titles.
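How much memory extra samples or DSR cost can be estimated from pixel counts. The Python sketch below sizes a single colour-plus-depth render target, assuming 4-byte colour and 4-byte depth/stencil formats; real engines allocate several such buffers (G-buffers, post-processing, shadow maps) on top of textures, so actual totals are a multiple of these figures. Anisotropic filtering is left out, since it mainly adds texture sampling work rather than new buffers. The names are illustrative.

```python
COLOR_BYTES = 4   # assumed RGBA8 colour format
DEPTH_BYTES = 4   # assumed 24-bit depth + 8-bit stencil
MIB = 2 ** 20


def render_target_mib(width: int, height: int, samples: int = 1) -> float:
    """Size of one colour + depth target, storing every sample separately."""
    return width * height * samples * (COLOR_BYTES + DEPTH_BYTES) / MIB


native = render_target_mib(1920, 1080)
ssaa_8x = render_target_mib(1920, 1080, samples=8)
dsr_4x = render_target_mib(3840, 2160)  # DSR 4.00x renders 4x the pixels of 1080p

print(f"1080p:          {native:6.1f} MiB")
print(f"1080p 8x SSAA:  {ssaa_8x:6.1f} MiB")
print(f"DSR 4x (2160p): {dsr_4x:6.1f} MiB")
```

Under these assumptions one target is about 16MiB at 1080p, about 127MiB with 8x SSAA and about 63MiB under DSR 4.00x; the cost scales linearly with the sample or pixel count.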

AND EXACTLY WHAT GAMES ARE YOU PLAYING ON IT? Give it 1-2 years… you'll be in a stutter fest.

Then I want to know who decided that putting in a bottleneck on purpose, instead of selling this with only 3GB, was a good idea. Heck, there were so many cheaper and better ways to fix this, so why this overcomplicated engineering? Why? From an engineering point of view or from an economic point of view, the 0.5GB doesn't make sense at all!!

In 1-2 years I’ll have an entirely new rig, so that matters none to me.

Um… If a game uses more than 4GB, then the 290X would suffer too. Hell, the 980 would suffer with its 4GB of memory too.

I run DSR on as many games as possible, the games are fine.

This is a mountain out of a molehill scenario.

Well, in a rare case I guess that would be an issue… Which game to date, though, on a single 1080p monitor will use 4GB of VRAM…

The largest game I know is X-Plane X fully cranked up, and it doesn't use more than 3GB at 1080p.

Once again, we're talking 1080p, not DSR 4.0x on a 1080p monitor. On that note, though, I used DSR 4.0x on a GTX 760 on a single 1080p monitor. To my surprise most titles worked; only Assetto Corsa has crashed on load so far.

I upgrade video cards every two and a half years, so I guess this problem doesn’t concern me as much. :v

And what exactly are you doing with that card after 1-2 years? Reselling it, right? Soo… the person who buys it gets screwed because the longevity of the card is compromised, and when they try to run the latest games, bam… stutter fest. So all that makes you is a prick selling people faulty hardware.

You're silly… A GTX 560 can still run today's games with its 1GB of RAM. The 970 is still a great card in my opinion. If you think I'd be a douchebag selling it at a steep discount to a potential buyer in 2-3 years, then you don't understand simple economics.

As a consumer I don’t feel cheated or slighted, I’ve played with my cards in 4k and had no issues.

In the end, it’s my money and not yours, so why the concern?

I can sell it, but by then they’d know it is gimped, and I wouldn’t hide that fact from them. It’d also reflect in the price I’d ask for. Prick? So extreme, you. :v

There’s also the option of putting it in an older rig for retro gaming, or I could also give it away, if I’m feeling particularly generous?

I had the 290X, but now I have a 970 G1. For me the biggest difference is that it uses a lot less power, so I didn't have to replace my power supply, and even when I'm overclocking my 970 it's still very quiet. To me those alone are very good reasons to go 970. To be fair, my 290X came with a triple cooler, but temps were still high compared to the G1 970 and the fans were still quite loud. The 970 I have is at 1531 and 3.9 gig RAM, and it blows every game out of the water at HD with ultra settings; my 290X didn't do that in all games. But I am very interested in what kind of offer they'll make, though I won't be swapping. When I get my ultrawide monitor with 3440 res, if that starts being a problem I'll take NVidia to court lol, but I doubt it will be. So far I'm more than impressed with my GTX 970 G1. Actually, the last time I felt I got a card as great as this was the Radeon 9700 in 2002, and before that the RIVA TNT2 Ultra lol, and before that the Voodoo 2 with expansion card (12MB RAM). So the 970 now takes its place in the history of my mind lol. To be fair though, some reviewers did say that you could use this card at 4K, and some even tested it; a lot of games were around 45-55 fps, which to me is pretty playable and certainly an option for some people. I'm buying an ultrawide monitor; it's just under 4K, but I have a feeling it'll be 60fps on ultra with maybe 2x AA. Again, to me that's acceptable.

Really? So playing Tomb Raider 2013, BioShock, Far Cry 4, 3, 2 and Blood Dragon, Wolfenstein and Battlefield 4 all on ultra settings at HD, most running at 130-145 fps without vsync (only Crysis 3 is at 60fps, or Tomb Raider at 87 fps if you turn her hair on lol), while running cool, silent and low-powered, and you think that's because NVidia are cheating? Then all I can say is keep cheating, please. I came from ATI cards, 10 years of them, and although their 290X is very similar to my 970, it felt like I was at an airport most of the time lol. As for image quality, well, that's subjective: you say this, I say that. Coming from a user of both cards, the image quality on both is great and there's no real difference for me. Can't really trust what you write though, because you think games running in full ultra mode at 135-145 fps is cheating lol.

Calm down, the guy didn't attack you, did he? If he sells his card that doesn't make him a prick, and if someone buys it then they should do their research and be confident in what they buy. I do hope you're wrong, because all my tests and gear show my card using 4GB (well, 4.96) when it needs to, and I don't see any slowdown in benchmarks or running multiple games. If it becomes an issue on my new monitor in 1 year I will complain too, but till that happens this is a fantastic card. I love it right now and it plays everything I have really smoothly. I think it'll be 3-4 years till we begin to see this, and even then it's not exactly gonna be an expensive second-hand card.

Haha, most people don't buy a GTX 970 without overclocking it, and a fairer comparison for the 290X would be against, say, an overclocked G1 GTX 970; that's 1531 on my rig. I owned the Sapphire 3-fan 290X and it's pretty much on par with my G1 970, but overclocking the 970 takes it slightly beyond the 290X, and it does this while using less power, staying super cool and very quiet, something my Sapphire 290X didn't do quite as well. But good try. If you want to compare a reference 970, then the 290 would have been a fair option.

It's on this page, a much fairer test of both GPUs. Remember, the 970 is like a quiet room and the 290X is like a small jet engine.

http://www.overclockers.com/forums/showthread.php/754538-Which-to-keep-Gigabyte-G1-970-vs-MSI-Lightning-290X

Did you notice how even at stock the 970 G1 beats the 290X, and not just any 290X but the best of the 290Xs? And the difference isn't exactly small. It just goes to show how deceptive it can be to put the wrong choice of cards up against each other. GOOD TRY THOUGH

So I bought a GTX 970 and I got annoying computer freezes, and so did my friend. I would like to get a deal from AMD because Nvidia sucks… But I don't even know who to contact about it.

I had an HD 6950 and it was way better than this GTX 970.

For example, CS:GO fps dropped from 250-280 to 140-210; it was kinda stable with AMD…

Don't compare Sapphire with anything 😀 Who even buys Sapphire?…

Well said………

As much as I would, I can't: my 970 is a brilliant card and I can't complain. I might not like or appreciate the spec mix-up, or lie, depending on what you think, but one thing is true: my GTX 970 G1 overclocks and beats the AMD 290X at HD res, and it does it quietly while staying super cool. For me to swap it, their cards would have to run cool, use a lot less power and stay quiet while kicking out incredible fps. But I'll be thinking twice or three times in 2 years when I upgrade again. The appropriate card to put against the 290X is an overclocked 970, so comparing a plain 970 is like comparing a plain 290. So I'm talking 970 G1 OC against 290X OC, and at higher than HD res the 290X does overtake, but I'm only gaming at HD these next couple of years. The proof is on the net, there are plenty of tests: the 970 OC beat the 290X considerably at 1920×1080.

A 290X vs a 970 isn't a balanced argument.

I think the benchmarks people do, using a variety of makes, tell the true story.

Sure. It's his evaluation of the situation that is. He doesn't seem like he's leaning towards any brand.

Ah, now I see the issue. Obviously, super slow VRAM is going to impact performance. At 1080p, which to be completely honest most people probably aren't going to use 970s for, they should be fine. I assume the only throttling issues are at 1440p/4K, or when using super high-res textures.

It’s not only about gaming. If you’re using borderless windowed with second monitors plugged in etc, Windows can use a whole lotta vRAM in itself. Especially Windows 8 and 8.1, and possibly 10. The problem is that the card DOESN’T cut its vRAM usage at 3.5GB (fast portion), and uses the slower RAM before trying to empty its memory buffer/compress what’s in there. So games see more vRAM, try to use more vRAM, and it causes stuttering/slowdowns.
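The stutter mechanism described above can be illustrated with a deliberately simple model: fill the fast segment first, put the rest in the slow one, read the whole working set once, and assume the two segments are read one after the other rather than in parallel. Caches, compression and the driver's real placement policy are all ignored, so this is a sketch of the idea rather than a performance prediction. The 196GB/s and 28GB/s figures are the per-segment bandwidths Nvidia gave; the function name is illustrative.

```python
FAST_MB, FAST_GBS = 3584, 196.0   # 3.5 GB segment
SLOW_MB, SLOW_GBS = 512, 28.0     # 0.5 GB segment


def effective_bandwidth_gbs(working_set_mb: float) -> float:
    """Average bandwidth for reading a working set once (simplified model).

    Assumes the fast segment is filled first, that the segments are read
    one after the other rather than in parallel, and ignores caches.
    """
    if not 0 < working_set_mb <= FAST_MB + SLOW_MB:
        raise ValueError("working set must be between 0 and 4096 MB")
    fast_mb = min(working_set_mb, FAST_MB)
    slow_mb = working_set_mb - fast_mb
    # Time in seconds, treating 1 GB as 1024 MB on both sides of the ratio.
    seconds = fast_mb / (FAST_GBS * 1024) + slow_mb / (SLOW_GBS * 1024)
    return working_set_mb / 1024 / seconds


for ws in (3000, 3584, 3800, 4096):
    print(f"{ws} MB working set -> ~{effective_bandwidth_gbs(ws):.0f} GB/s")
```

Up to 3.5GB the model returns the full 196GB/s; at 4096MB the 512MB slow portion takes as long to read as the whole 3.5GB fast portion, so the average falls to 112GB/s.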

nVidia could honestly probably fix it with driver updates, but who knows what they’re doing. They just decided overclocking on laptop GPUs was a “bug” and it was now “fixed”, effectively rendering their cards instantly obsolete after 1 year each.

Good luck using a graphics card for 5 years on high settings.

Are you retarded, kiddo? The GTX970 is the better card because it's an overclocking powerhouse. Any fool knows you can overclock a GTX970 and get a bona fide stock GTX980 out of your GTX970, lol. Also, a lot of us own Oculus Rifts, and the GTX series works best for that application. You know nothing, so stop pretending like you do, eh? You sound like a Yank American that knows nothing. Canada is better than the USA because we're not ignorant and don't suffer from gun violence and obesity, eh. Google once in a while, kiddo.

The GTX970 also takes less power. It's obvious you're a 14-year-old Yank that lives in a single-wide trailer.

GTX970 VS 290 IS FINE. THE OC VERSION OF THE 970 USUALLY SAYS OC AFTER IT, SO I'D SAY A 970 OC AND A 290X OC WAS A FAIR COMPARISON. Gurr, caps, soz

Their triple-fan 290X was a good card, but when I saw how much less power the 970 used and how quiet it was, I jumped ship. Sapphire did build a nice card there, though.

Fuck-Yes.

You don't have access to more than 3.5GB, and at 1440p I notice a fair number of games, such as Shadow of Mordor, Dying Light and a few others, hit it, and I get stuttering and heavy fps drops.

lol I love my 970, and yes, I chose it over the 290X, so for HD gaming going back would feel like a step backwards. I don't need a new power supply and my machine is quieter than it's been in years, and that's because AMD were always so noisy. Strangely, I'm getting insane fps and insane smoothness like I've never known; that's how good the GTX 970 G1 is, and if you told me it was really a 2GB card I still wouldn't care. Just don't do it again, NVidia.

I had the 290X and a GTX 970 G1, and I can tell you that the G1 was beating the 290X by around 15-20 fps at least in most games at HD. But like you say, it's so quiet.

Cheating with IQ lol, it's not cheating in games. Fanboy foolishness there, dude.

5 years? That's a little too long if you're a gamer; 2-3 maybe.