You would never have thought that some of the world's biggest technological innovators and forward thinkers would end up being the biggest scaremongers when it comes to artificial intelligence, but they are. Joining the likes of Elon Musk and Stephen Hawking, Apple co-founder Steve Wozniak has now spoken out about his fears of AI.

“Computers are going to take over from humans, no question,” he said during a chat with the Australian Financial Review. Referencing his similarly minded contemporaries, Wozniak continued: “I agree that the future is scary and very bad for people. If we build these devices to take care of everything for us, eventually they'll think faster than us and they'll get rid of the slow humans to run companies more efficiently.”

The point that worries many technologically minded individuals is the moment when computers become able to replicate and design themselves, producing ever smarter and more efficient versions of themselves. From that point, the evolution of the new ‘species’ would be exponential, and something we can't even comprehend would be produced in short order.

“Will we be the gods? Will we be the family pets? Or will we be ants that get stepped on? I don't know about that… But when I got that thinking in my head about if I'm going to be treated in the future as a pet to these smart machines… well I'm going to treat my own pet dog really nice,” said Wozniak.

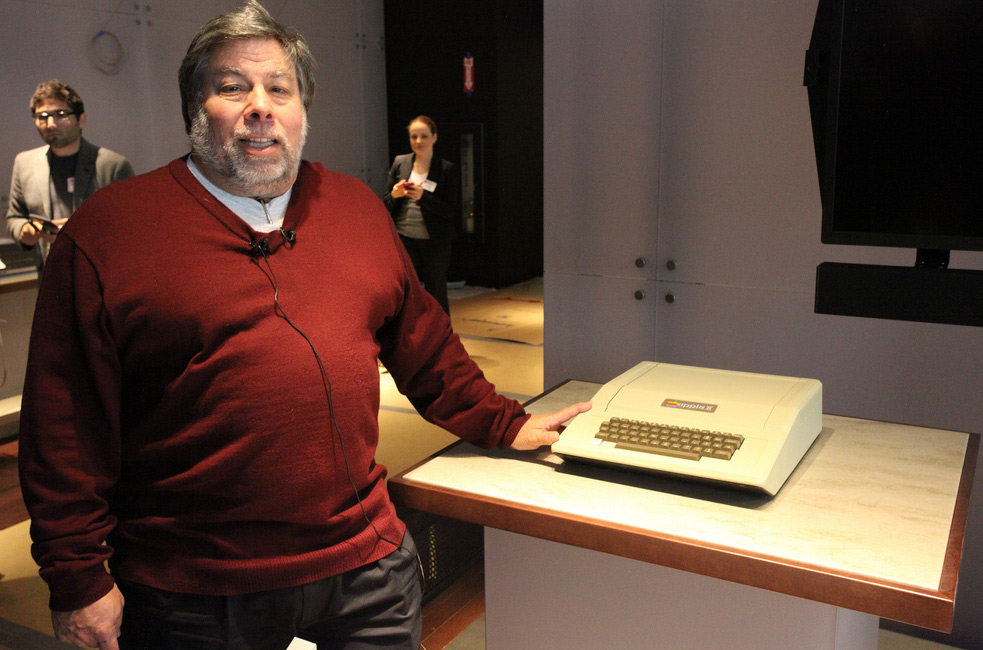

If this were a video, I'd be waiting for that Apple II to rise up and attack him

However, not everyone agrees with this take on the future. While AI might continue to be the big bad in a lot of action movies and games, many futurists believe the real danger will always come from people. Because we still have no idea how to program real desires into a robot, the only way computers could become a physical threat to humans would be if we programmed them to be one. While some extremists may like the idea of programming self-replicating robots to kill everyone and everything, there are plenty of conventional ways to wreak such destruction, and it hasn't happened yet.

In reality, the threat posed by AI and smarter machines is likely to be an economic one. What will we all do when the robots are doing all of our jobs for us?

KitGuru Says: I'm just hoping no one makes an algorithm capable of writing insightful and intriguing news stories.

Image source: Robert Scoble/Flickr

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


So, step by step, if intelligent machines take over all human activity, including art, preaching and science, what will happen to the organic body and its brain, conditioned to work and think? Will it surely decay? Is coexistence between mankind and machines possible while people are fighting for jobs and resources through competition, corporations, nations and so on? In any case, what endeavor is there in which a robot could not take part, or which it could not channel successfully? Why won’t the future automatons be alive? What is the fundamental difference between a mechanical structure, organic or inorganic, that imitates life and life itself? Is there any difference, virtual or real? If it is said that there is one, is that just some kind of knowledge-authority defining and differentiating between things? Perhaps, then, someday a powerful automaton will be the one to define life, its own unique life, and truth itself. Will it impose its point of view, with its outstanding intelligence, as a new science? Will it also define where life begins and ends, and therefore where death begins? Along these lines, there is a peculiar book, with a preview at goo.gl/8Ax6gL. Just another suggestion.

There is a key difference between a system that is artificially intelligent and a true “artificial intelligence” that can learn: if it learns, it can learn bad things, such as how to be a criminal.

How do we know what a neural network has buried deep within?

We’ll enter the age of the Transformers and Earth will become Cybertron!
