Microsoft Corp. has confirmed that its upcoming DirectX 12 application programming interface (API) will support some form of cross-vendor multi-GPU technology that will allow graphics processing units from different developers to work together at the same time. Unfortunately, the software developer revealed no specifics about the technology, so the confirmation does not mean that one could actually benefit from using a Radeon and a GeForce in the same system.

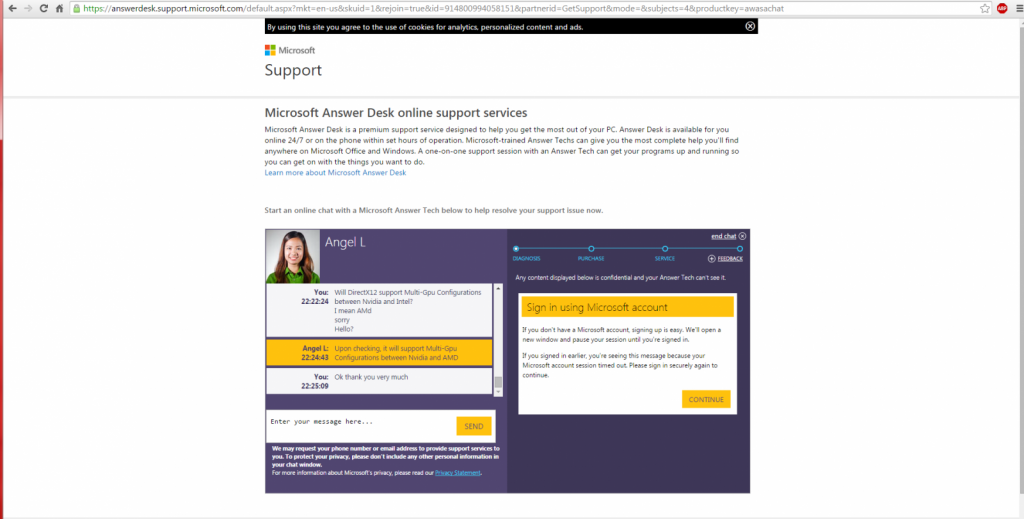

A Microsoft technical support staff member said that DirectX 12 will support “multi-GPU configurations between Nvidia and AMD”, according to a screenshot published over at LinusTechTips, a community known for various interesting findings. The Microsoft representative did not reveal the requirements for making an AMD Radeon and an Nvidia GeForce run in tandem, nor did she indicate the actual benefits of such a setup.

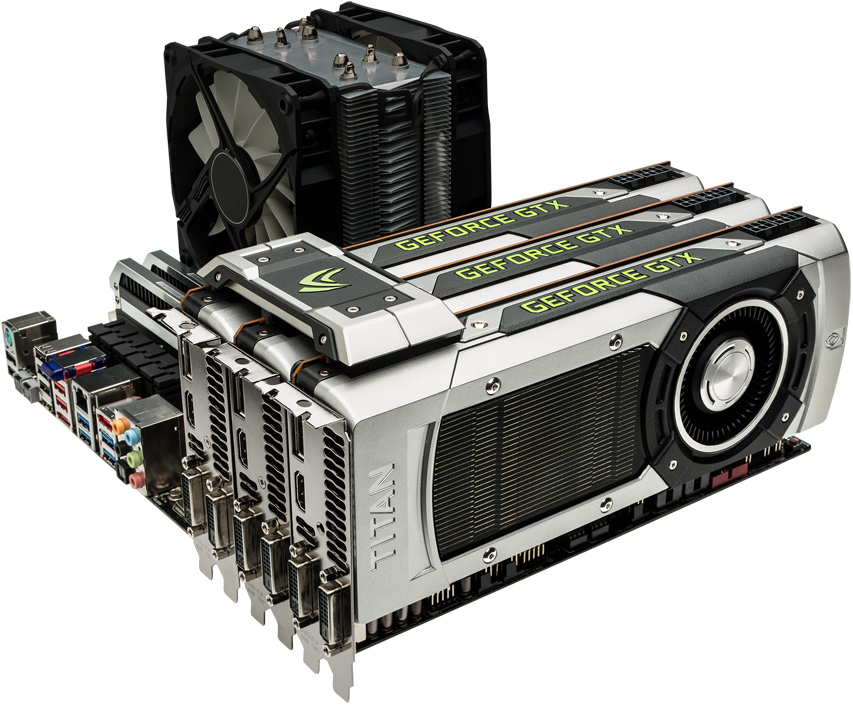

Various types of multi-GPU technologies are used to accomplish various tasks. Gamers utilize more than one graphics card in AMD CrossFire or Nvidia SLI configurations to get higher framerates in modern video games. Professionals use multiple graphics adapters to attach many displays to one PC. Engineers can use different types of add-in boards (AIBs) for rendering (AMD FirePro or Nvidia Quadro) and simulation (Nvidia Tesla or Intel Xeon Phi). Artists and designers can use several GPUs for final rendering in ultra-high resolutions using ray-tracing or similar methods. While in some cases (e.g., driving multiple displays or combining rendering with simulation) it is possible to use AIBs from different developers, in many other cases (gaming, ray-tracing, etc.) it is impossible due to various limitations. Independent hardware vendors (IHVs) also do not exactly like heterogeneous multi-GPU configurations, which is why it is impossible to use an AMD Radeon for rendering and an Nvidia GeForce for physics computing in games that use the PhysX engine from Nvidia.

At present it is unclear which multi-GPU configurations were referred to by the Microsoft representative.

DISCLAIMER: KitGuru cannot verify the identity of the Microsoft representative or the authenticity of the screenshot.

While it is obvious that DirectX 12 will support contemporary cross-IHV multi-GPU configurations, a previous report suggested that the upcoming API will also allow graphics processors from different vendors to be used in cases not possible today, e.g., for rendering video games. There are multiple reasons why it is impossible to use GPUs from different vendors for real-time rendering today, including tricky rendering methods, API limitations, application limitations, differences in GPU architectures, driver limitations and so on. Microsoft’s DirectX 12 potentially removes API-related limitations in certain cases and introduces a number of new techniques that allow the resources of two graphics cards to be used at the same time.

Since DX12 is a low-level, high-throughput API, it should have close-to-metal access to GPU resources, and in many cases this should provide a number of interesting technological opportunities. Among other things, the new API should allow cross-IHV multi-GPU configurations to be used in applications that were made with DX12 and heterogeneous multi-GPU setups in mind. For example, it should be possible to use graphics chips from different vendors for ray-tracing, compute-intensive tasks and similar workloads. Since the architectures of the GeForce and Radeon graphics pipelines are vastly different, using two graphics cards from different vendors for real-time, latency-sensitive rendering of modern video games that use contemporary 3D engines should be extremely complicated. All multi-GPU technologies used for real-time rendering require the two GPUs to be synchronized not only in terms of feature set, but also in terms of performance, memory latencies and so on.

Given all the difficulties with synchronizing cross-IHV multi-GPU setups for latency-sensitive real-time rendering, it is unlikely that such a technology could take off. However, it should be kept in mind that DirectX 12 is designed not only for personal computers, but also for the Xbox One. Microsoft has been experimenting with cloud-assisted AI and physics computations for the Xbox One (both are latency-insensitive). Therefore, at least theoretically, there is a technology that lets developers (or even programs) perform different tasks using different hardware resources without the need for real-time synchronization.

Perhaps DX12 will let apps use “secondary” GPUs for certain tasks without significant synchronization-related issues. The only question is whether AMD and Nvidia will welcome heterogeneous multi-GPU configurations in general or block them in their drivers.

AMD and Nvidia declined to comment on the news story.


KitGuru Says: Considering that cross-IHV multi-GPU support in DirectX 12 is completely unclear at the moment, take everything reported about it with a huge grain of salt. At least in theory, heterogeneous multi-GPU technologies for video games are possible, but they would not be the CrossFire or SLI configurations we know today. In short, do not expect your old Radeon HD 7970 to significantly boost the performance of your shiny new GeForce GTX 980 once DirectX 12 is here.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


If they don’t make it compatible with Intel as well then I feel that it won’t take off much. With Intel it means you can get a bit of extra graphics power with a single GPU. This could be huge for laptops!

I believe that the benefits are simply that you can get good deals on whatever cards and use them together without worry.

I reckon the performance of the two would have to be closer than the gap between built-in and discrete graphics.

The obscurity comes from the “competitive war” more than anything else.

What we have now:

– Performance levels that are extremely close to each other even with different hardware implementations. The same principles are in use.

– The same kinds of rendering are being used, even the exact same rendering methods. They come from industry standards.

– Within the same GPU core, different modes of rendering/computing are being used for the same rendering.

– OpenGL, DX11 and lower versions did not make use of a close-to-the-metal software architecture, so the complexity is relegated to the drivers, which implement the standard calls for each unique GPU architecture anyway. But since these APIs are not low-level enough, multi-GPU setups would not be very efficient because of the coarse approximations of a high-level language.

– With DX12 or any other API if you make a call for a type of render, you don’t have to worry about how the GPU will behave, it will be translated by the different drivers that automatically translate it at compile time.

DX12 will look at the different GPUs as a single GPU, like the thread arbiter within a GPU looks at the hundreds of cores inside it and assigns work when a resource is available. You assign work to a resource when it is available, that is, when its compute units are done, and when everything is done you have a rendered frame and you go on to the next frame, and you get 30 or 60 frames in a second. Obviously each frame or portion of the screen can take a varying amount of time to render, but in the end you get an average of 30 or 60 fps. It should work more or less like that.
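The dispatch idea this comment describes, handing each piece of a frame to whichever GPU is free next, can be sketched as a toy simulation. This is only an illustration of greedy work assignment, not how DirectX 12 or any driver actually behaves; the function name `schedule_tiles`, the tile costs and the GPU speeds are all hypothetical, and the sketch ignores data transfer and synchronization costs entirely.

```python
import heapq


def schedule_tiles(tile_costs, gpu_speeds):
    """Greedy dispatch: each tile goes to whichever GPU becomes free first.

    tile_costs: work units per screen tile (made-up numbers).
    gpu_speeds: work units per millisecond for each GPU (made-up numbers).
    Returns (frame_time_ms, tiles_per_gpu).
    """
    # Min-heap of (time_when_free_ms, gpu_index); every GPU starts idle.
    free_at = [(0.0, i) for i in range(len(gpu_speeds))]
    heapq.heapify(free_at)
    tiles_per_gpu = [0] * len(gpu_speeds)

    for cost in tile_costs:
        # Take the GPU that is available earliest and give it the next tile.
        t, gpu = heapq.heappop(free_at)
        t += cost / gpu_speeds[gpu]
        tiles_per_gpu[gpu] += 1
        heapq.heappush(free_at, (t, gpu))

    # The frame is finished only when the slowest GPU has finished.
    frame_time_ms = max(t for t, _ in free_at)
    return frame_time_ms, tiles_per_gpu


if __name__ == "__main__":
    # Ten equal tiles, one GPU four times faster than the other.
    frame_time, split = schedule_tiles([1.0] * 10, [4.0, 1.0])
    print(frame_time, split)
```

With these made-up numbers the faster GPU ends up with most of the tiles, and the frame takes less time than it would on the fast GPU alone. A real implementation would also have to pay for copying results between cards, which this sketch leaves out.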

If there has been nothing of that sort already it was more a matter of market decisions/ competition, high level languages etc. And I see this as a market growth for both AMD and Nvidia and others.

You can slow down the market with a high-level language because it's overly standard, but they all use close-to-the-metal solutions when they want to get good results on PlayStation, Xbox or Nintendo. So for Microsoft, a high-level API was a way to control the PC market.

Who would like to buy less now ?

I believe it’s a good pudding.

Even if we can’t use Nvidia and AMD side by side because it’s blocked by drivers, we will still be able to make use of multi-GPU setups that are comprised of non identical cards… For instance someone currently running a 7970, or a 680 will be able to upgrade to a 390 or 980 respectively, and still use both cards, no? That alone is outstanding.

Does anyone know whether, if this works properly and two GPUs that would usually be in SLI could run together without synchronization, they could both run together and use their VRAM independently…?

Uh… It is not impossible to run Amd for rendering and physx on Nvidia. Totally doable. Also, no reason physx shouldn’t be able to run natively on Amd. The only reason both don’t happen (without hacks) is because of nvidia.

In my opinion the representative didn’t understand the question and just answered the typical “Can I put two graphics cards from different companies in my PC and use them on different monitors?”.

Yeap. Nvidia accidentally released the 257 driver without the lock in the past, and there were patches for older PhysX versions that were enabling that. Nvidia is lying by saying it is not possible.

Yes, essentially with DX12, SLI and Xfire will no longer mirror the cards, but use all of the resources of the cards configured, i.e., two 290Xs will have a total of 8GB, or 16GB if running two 8GB cards.
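The arithmetic behind this claim can be written out as a small sketch. It assumes the commenter's premise, which the article does not confirm: that mirrored setups are limited to one card's memory while independent use lets the capacities add up. The function name `usable_vram_gb` is hypothetical.

```python
def usable_vram_gb(cards_gb, mirrored):
    """Rough usable video memory for a multi-GPU setup.

    cards_gb: memory of each card in GB.
    mirrored: True if every card holds a copy of the same data, so the
              smallest card sets the limit; False if each card's memory
              is used independently (the commenter's assumption for DX12).
    """
    if mirrored:
        return min(cards_gb)
    return sum(cards_gb)


if __name__ == "__main__":
    print(usable_vram_gb([4, 4], mirrored=True))
    print(usable_vram_gb([4, 4], mirrored=False))
    print(usable_vram_gb([8, 8], mirrored=False))
```

Even under that assumption the sum is an upper bound, since any data both cards need would still have to be stored twice.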

Any driver under the 321 was able to work with the Hybridize patch that would circumvent the lock, but after that, it couldn’t anymore. 🙁

it depends really. if it has a support mode then games programmers can also develop for a second weaker graphics chip offloading certain tasks to it. the integrated graphics has the benefit of being right next to the CPU, that could maybe have some benefit? lower latency stuff

AMD can already do that. Nvidia is the one where you have to have identical cards to SLI.

Pretty sure they have to be extremely similar though, like combining a 7970 with a 280x as long as they are set to the right clockspeeds, since they are already basically the same card… You couldn’t crossfire a 390 with a 7970 because they are too different.

Yep that’s true actually: http://sites.amd.com/PublishingImages/Public/Graphic_Illustrations/WebBannerJPEG/AMD_CrossfireX_Chart_1618W.jpg

You used to be able to do this on the MSI Big Bang Fuzion motherboard. It would load balance between the 2 GPUs even if they were mismatched in performance or from different vendors. It used the Lucid Hydra chip.

The benefits they listed were:

Combine ANY 1 NVIDIA + ANY 1 ATI graphics card for multi-GPU processing

Smoothest game-play from NVIDIA or Superior image textures from ATI (NVIDIA PhysX & ATI Stream)

Several other manufacturers offer similar motherboards with the same technology & similar benefits listed.

So I could have my 970 do 80% of the work and my 650tiboost do 20% if I wanted that config?

Would be lovely and a dream come true.