Advanced Micro Devices said that it will not introduce an all-new GPU micro-architecture next year, but will instead significantly improve its GCN [graphics core next] technology to boost performance and energy efficiency.

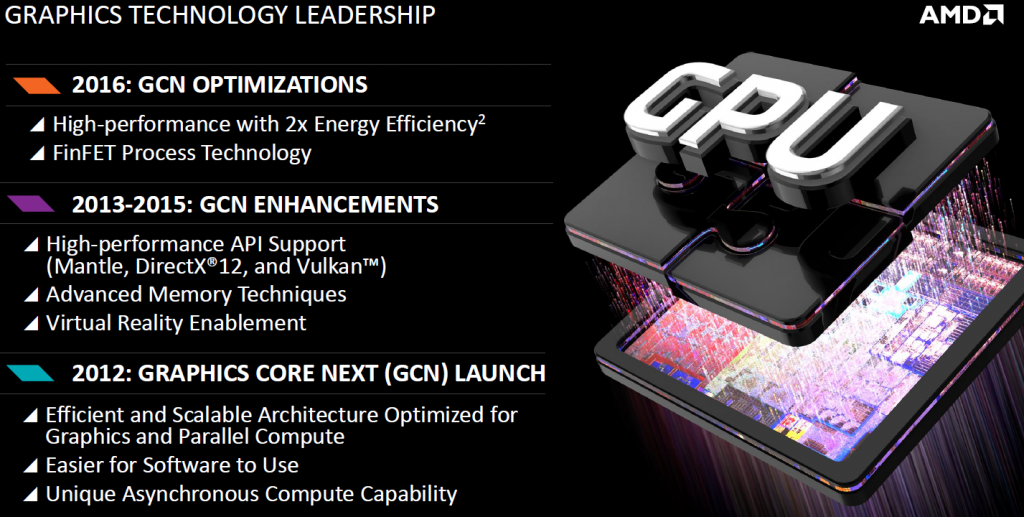

AMD originally introduced its graphics core next (GCN) architecture in late 2011 along with its Radeon HD 7970 graphics card (eventually renamed to Radeon R9 280X). The GCN architecture has gradually evolved in terms of feature set and performance since its commercial launch. This year AMD is expected to introduce the fourth iteration of GCN in the form of the code-named “Fiji” graphics processing unit. The GCN 1.3 GPUs will feature an all-new memory controller and cache sub-system along with a number of new capabilities that are supported by Microsoft Corp.’s DirectX 12 application programming interface.

Without a doubt, AMD’s graphics processing units have gained a lot since late 2011. In fact, the performance increase that the upcoming flagship Radeon R9 graphics card is expected to deliver over the original Radeon HD 7970 should be incredible. What is remarkable is that all of the performance improvements demonstrated by the latest Radeon graphics adapters should be attributed to architectural enhancements, because all GCN graphics processing units have been made using essentially the same 28nm fabrication technology.

Typically, the evolution of graphics processors is a result of architectural innovations and thinner manufacturing processes. Unfortunately, new production technologies are a luxury that graphics processing units from AMD and Nvidia have not had in recent years, since TSMC decided to focus solely on mobile systems-on-chip with its 20nm CLN20SOC process. But a lot will change next year, when both TSMC and GlobalFoundries begin to offer their 16nm and 14nm FinFET fabrication processes, which can be used to produce high-end graphics processing units.

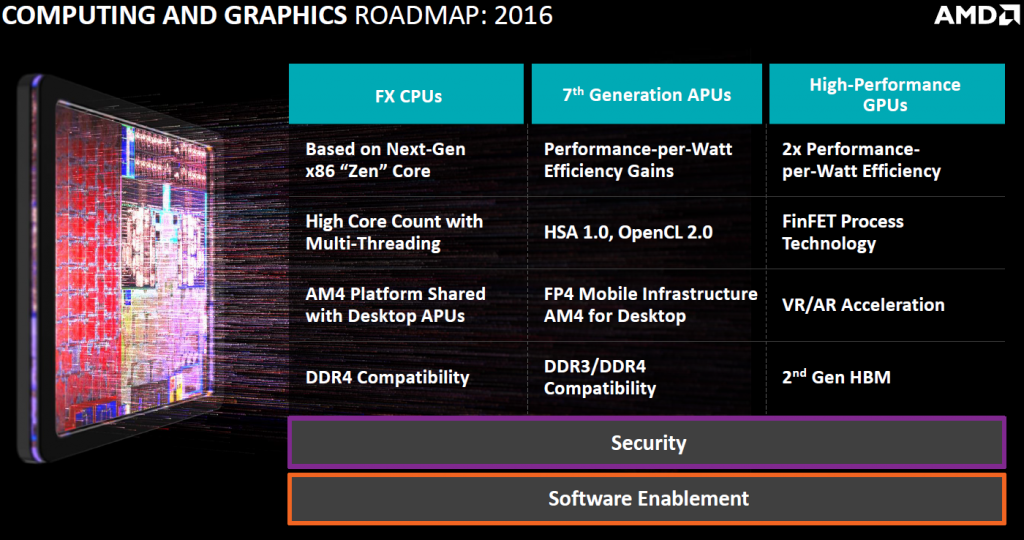

Next year AMD plans to release yet another iteration of the GCN architecture (GCN 1.4) that will be twice as energy efficient as existing GPUs and will support some new features, including those that accelerate virtual and augmented reality applications. AMD’s 2016 graphics processing units will be made using a FinFET process technology, which will let AMD fit more stream processors, along with a variety of other performance-boosting hardware, into its next-gen graphics processing units.

“Raja Koduri and his team at Graphics [unit] are not stopping, like in the case of CPU cores, we are always working on next generation,” said Mark Papermaster, chief technology officer at AMD, at the company’s financial analyst day. “We are tweaking the GCN to make it even more energy efficient. We are going to drive optimizations in workloads where we already excel with GCN, such as machine learning and a number of artificial intelligence applications. There is more innovation to come.”

While AMD has not revealed much about the performance of its next GPUs, two times higher energy efficiency could potentially translate into two times higher performance in the same power envelope. Since AMD’s future Radeon graphics chips code-named “Greenland” will use second-generation high-bandwidth memory (HBM 2), it is highly likely that their performance will not be constrained by memory bandwidth. As a result, we could see a giant leap in GPU performance next year thanks to architectural improvements, an increase in compute horsepower enabled by new process technologies, and HBM 2 memory.
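
The relationship between energy efficiency and performance mentioned above can be sketched with some simple arithmetic. This is only an illustration: the normalized efficiency figures and the 250 W power envelope are hypothetical values chosen for the example, not numbers from AMD, and real GPUs rarely scale this cleanly.

```python
def performance(perf_per_watt: float, power_watts: float) -> float:
    """Performance as efficiency (performance per watt) times power drawn."""
    return perf_per_watt * power_watts


# Hypothetical, normalized inputs (assumptions for illustration only).
POWER_ENVELOPE_W = 250.0        # assumed board power, identical for both GPUs
CURRENT_PERF_PER_WATT = 1.0     # today's GPU, normalized to 1.0
NEXT_PERF_PER_WATT = 2.0        # "two times more energy efficient"

current = performance(CURRENT_PERF_PER_WATT, POWER_ENVELOPE_W)
next_gen = performance(NEXT_PERF_PER_WATT, POWER_ENVELOPE_W)

# Same power envelope: performance scales with the efficiency ratio.
speedup = next_gen / current

# Alternatively, the same performance could be reached at a lower power draw.
power_for_same_perf = current / NEXT_PERF_PER_WATT

print(f"Speedup at {POWER_ENVELOPE_W:.0f} W: {speedup:.1f}x")
print(f"Power needed for today's performance: {power_for_same_perf:.0f} W")
```

In other words, a doubling of efficiency can be spent either on performance at the same power or on lower power at the same performance, or on some mix of the two.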


KitGuru Says: AMD will not be alone with GPU performance gains. Nvidia Corp. will also benefit from newer process technologies, next-gen architecture code-named “Pascal” and HBM2 memory. Apparently, 2016 is going to be an interesting year for graphics cards.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


All of this sounds good; my only concern is power and thermal usage. If they can fix these two then I’m sold.

You haven’t checked out how much power a 980 devours at 4K max settings?

Have you seen the 290x Sapphire Vapor that runs even cooler than a 980 and matches or beats it?

Not to mention the 295x, which is hands down one of the best GPUs for 4K gaming at half the price of a Titan X, which is even hotter than a 295x or a 290x.

http://www.tweaktown.com/reviews/7110/msi-geforce-gtx-980-gaming-4g-le-video-card-review/index11.html

People should take off their fanboy glasses and avoid paid review sites 🙂

I don’t understand the concern about power consumption from people who invest over $700 in a piece of hardware and yet can’t afford to pay $2.00-$4.00 more a month on the power bill. It’s illogical.


Some people care more about being eco friendly than being a gamer…

Sure, cause 35-70W more watts will make a huge difference on the environment, if you want to be so eco friendly, buy a laptop or a console and save your apologist be, thanks.

It’s up to them whether they want to play on desktop, laptop or console. Also it’s up to them if they care more about being green. The world doesn’t revolve around you.

Let’s use a car for example. There are some retarded people who don’t care about being green and like more expensive sports car that polluted the air. On the other hand there are people like me who like greener, cheaper and bigger car who care more about the earth.

Another option is to take public transport which is much more eco friendly than the first two. So 35-70 watts make a big difference in the long run. You need to use a bit of common sense to know how big the impact is which you lack in those areas sadly…

Don’t take stuff out of context, even if you manage to play 20 hours a week which is borderline unhealthy, 20 hours a week by .13khw per hour does not even cause a dent on the power bill no matter what. All I can see here is a kid justifying its purchase. I don’t care where the world nor your opinion. I don’t need your apologist crap, cause makes no sense buying a GTX 970 when a cheaper R9 290X is as fast on lower resolution and once you crank up the details and resolutions, can snip the heels of a Gtx 980, something that the GTX 970 can’t plus the 3.5GB issue, compiler dependency and weak GPGPU is a turn off for me. Don’t shove your statements down your throat, everyone buys whatever they want and move on, time for you to move on.
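
The power-bill arithmetic argued over in this thread can be sketched roughly as follows. The inputs are assumptions pieced together from the comments: an extra draw of 35-70 W, 20 hours of play a week, and the ".13" figure read as an electricity price of $0.13 per kWh, which is one possible interpretation rather than something the commenter states outright.

```python
WEEKS_PER_MONTH = 52 / 12  # average number of weeks in a month


def monthly_cost(extra_watts: float, hours_per_week: float,
                 price_per_kwh: float) -> float:
    """Extra monthly electricity cost, in dollars, of a higher-power card."""
    extra_kwh_per_month = (extra_watts / 1000) * hours_per_week * WEEKS_PER_MONTH
    return extra_kwh_per_month * price_per_kwh


# Assumed inputs taken loosely from the thread (not measured values).
HOURS_PER_WEEK = 20
PRICE_PER_KWH = 0.13  # dollars; hypothetical reading of ".13"

low = monthly_cost(35, HOURS_PER_WEEK, PRICE_PER_KWH)
high = monthly_cost(70, HOURS_PER_WEEK, PRICE_PER_KWH)

print(f"Extra cost per month: ${low:.2f} to ${high:.2f}")
```

Under these assumptions the difference comes to well under a dollar a month; a higher electricity price or heavier usage would scale the figure proportionally.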

You would save the environment more if you joined a not-for-profit organization that fights global warming and food waste:

“Getting food from the farm to our fork eats up 10 percent of the total U.S. energy budget, uses 50 percent of U.S. land, and swallows 80 percent of all freshwater consumed in the United States. Yet, 40 percent of food in the United States today goes uneaten. This not only means that Americans are throwing out the equivalent of $165 billion each year, but also that the uneaten food ends up rotting in landfills as the single largest component of U.S. municipal solid waste where it accounts for a large portion of U.S. methane emissions. Reducing food losses by just 15 percent would be enough food to feed more than 25 million Americans every year at a time when one in six Americans lack a secure supply of food to their tables.”

http://www.nrdc.org/food/files/wasted-food-ip.pdf

Also, you can air dry your laundry, etc. Saving 100W of power usage on your graphics card matters little when a dryer uses 3000W and a kettle uses 1500-2000W to heat water; those, or your oven heating up food, have a way bigger impact on the environment.

Perf/watt really only became the popular theme starting with Kepler. Before that, PC gamers hardly cared and NV users bought GTX275/280/285 or the entire Fermi stack in droves, that despite AMD outperforming NV in perf/watt with HD4000, 5000 and 6000 series. Also, it’s amusing when Maxwell users tout perf/watt but are running 4.5ghz+ overclocked i5/i7s and 1.5Ghz 970/980/Titan Xs and some of them have 5820, 5930 and 5960X overclocked that literally use 2x the power of an i7 4790K with hardly any more performance in games. People who care about perf/watt do not overclock, they undervolt at stock settings. If NV loses the perf/watt crown, some new metric will be used like VRAM, PhysX, CUDA, NV drivers, GameWorks, SLI, etc.

Every month I donate at least $50 to environmental and wildlife programs, and my home is full of eco-friendly products, so I’m good…

“everyone buys whatever they want and move on, time for you to move on.” That’s what I’m talking about your comment in the first place. Seems like it’s time for you to move on. Sad people are treating graphic cards like some valuable items. LOL!…

Yep, these nvidia fanboys are hilarious!

Like you for example, justifying your purchase, it was until Kepler and Maxwell that nVidia became power efficient, and now you suddenly got concerned about it, I bet that if nVidia wasn’t power efficient, you will be using the CUDA/PhysX crap to justify yourself, bravo!!!

Nope even though I worked as an auxiliary police officer a job which involved working long hours and dangerous environment but high salary I wouldn’t spend more than $150 each on new graphics card and cpu. So it seems you are talking about yourself. Bravo!…

when the hell did this become a ‘thing’ in PC gaming????

You got owned. Hard.

A console suits you well, console peasant.

If it hadn’t become a thing they wouldn’t have focused on decreasing the use of watts…

Do you really think it is necessary to spend more than $200 on any single PC part? A GPU cheaper than $150 nowadays is almost as good as the expensive ones, so why spend more? I’d rather spend my money on the new Kia 2015 model. Seriously sad life…

Sure, cause I am the one who is b!tching crying for the power bills LOL

I’m sure you don’t understand what I’m talking about. A new Gpu or Cpu which is just 20% better but $100 more expensive than the old ones. What kind of logic is that? I’m sure you are ones of the people who upgrade their GPU or Cpu almost every single year.

The dumbness is akin to eating Mcdonalds, drinking coke, smoking etc…

This is why many companies that ruin people’s lives are still active. Same goes for Intel. They make little improvements year by year but people still upgrade at a high cost. People are just dumb. So… Life is sad…

Awesome i’m running a R9 290 now very happy with it.

Cant wait for next year and VR 😉 AMD for life !!

Why does power usage bother you? It’s so extremely minor on your bill it’ll take 10 years to make a difference… I agree on thermals, although with decent airflow it shouldn’t be an issue.