Nvidia has introduced the H200, its new Hopper-based flagship GPU for AI computing platforms. Alongside it, the company announced the HGX H200, an AI platform built around the new H200 Tensor Core GPUs.

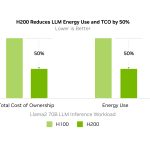

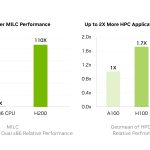

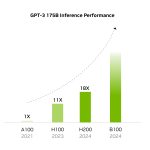

The Nvidia H200 is the first GPU to support HBM3e, a faster type of memory that accelerates generative AI and large language models (LLMs), as well as scientific computing for HPC applications. The H200 carries 141GB of HBM3e running at 4.8TB/s, about twice the capacity and 2.4x the bandwidth of the Nvidia A100. An eight-way HGX H200 built on these GPUs can deliver up to 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory.
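The board-level figures follow from simple arithmetic on the per-GPU numbers. The sketch below uses only the figures quoted above (141GB per GPU, 32 petaflops FP8 for the eight-way board) and assumes decimal units (1TB = 1000GB). The four-way capacity is derived here rather than quoted by Nvidia, and the per-GPU compute figure is just a naive even split of a peak number, not a measure of sustained throughput. The function names are illustrative only.

```python
# Figures quoted in the article (peak/spec values, not measured results).
H200_MEMORY_GB = 141       # HBM3e capacity per H200 GPU
HGX_8WAY_FP8_PFLOPS = 32   # quoted peak FP8 compute for an eight-way HGX H200


def aggregate_memory_tb(num_gpus: int, per_gpu_gb: float = H200_MEMORY_GB) -> float:
    """Total HBM3e across a board, in decimal terabytes (assumes 1 TB = 1000 GB)."""
    return num_gpus * per_gpu_gb / 1000


def per_gpu_pflops(board_pflops: float, num_gpus: int) -> float:
    """Naive even split of a board's quoted peak compute across its GPUs."""
    return board_pflops / num_gpus


if __name__ == "__main__":
    # Four-way and eight-way HGX boards, as offered per the article.
    for gpus in (4, 8):
        print(f"{gpus}-way HGX H200: {aggregate_memory_tb(gpus):.3f} TB of HBM3e")
    print(f"Per-GPU FP8 share: {per_gpu_pflops(HGX_8WAY_FP8_PFLOPS, 8):.1f} PFLOPS")
```

Eight GPUs at 141GB each give 1,128GB, which rounds to the 1.1TB Nvidia quotes; a four-way board would hold roughly half that.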

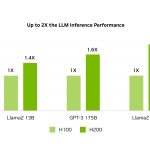

Beyond the memory upgrade, the H200 improves on its predecessor with software advancements such as Nvidia TensorRT-LLM. Together, these are expected to bring substantial performance gains, including tripling inference speed on Llama 2, a 70-billion-parameter LLM, compared with the H100.

The Nvidia H200 will be offered in four- and eight-way HGX server boards compatible with the hardware and software of older HGX systems. Moreover, it's available in the GH200 Grace Hopper Superchip with HBM3e. These options allow the H200 to be installed in any data centre, whether on-premises, cloud, hybrid-cloud, or edge.

Partners such as ASRock, Asus, Dell, Eviden, Gigabyte, HPE, Ingrasys, Lenovo, QCT, Supermicro, Wistron, and Wiwynn are expected to start launching systems with H200 GPUs in 2024. Cloud service providers such as AWS, Google Cloud, Microsoft Azure, and Oracle Cloud will also offer Nvidia H200-based instances starting next year.


KitGuru says: Nvidia's bet on hardware for AI applications is bearing fruit. The company already has a good lead in the AI market. Will the H200 cement this further?

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards