The latest CPUs and GPUs seem to run hotter with each new generation. However, researchers at the University of California, Los Angeles have been testing thermal transistors as a possible solution to this problem. While still at the experimental stage, thermal transistors could offer an attractive way to remove heat from chips, something that could interest companies such as AMD, Nvidia and Intel.

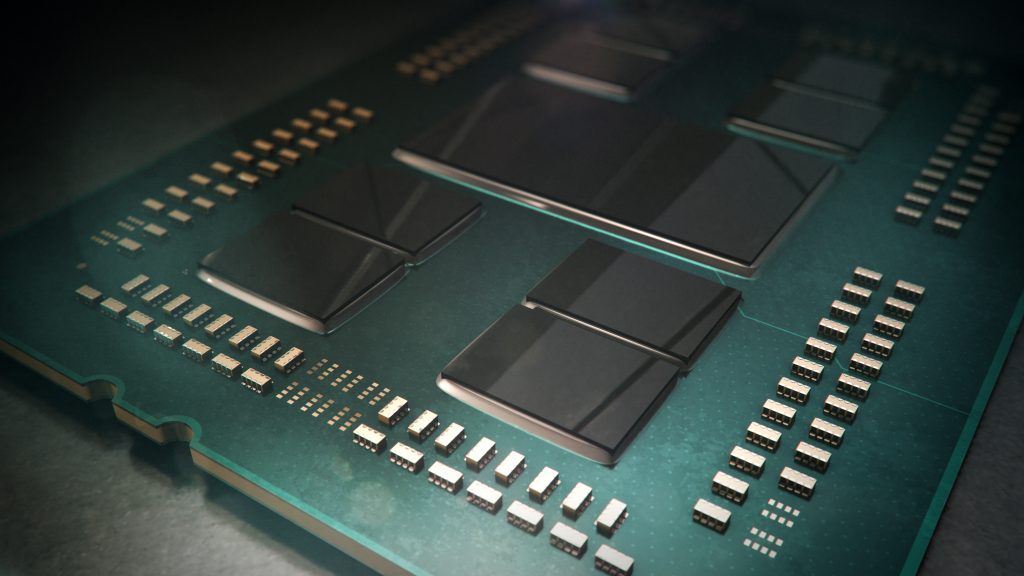

Modern semiconductor products, particularly high-end ones, have serious issues with heat. Even though processors are getting smaller, their power consumption is not falling, and since the electricity a chip consumes is converted into heat, more heat is being generated in a smaller space.

Moreover, chips built on the latest architectures usually don't heat up uniformly: certain parts of them reach higher temperatures than others. These areas, known as “hot spots”, mean that even if the average temperature of a CPU, for example, is acceptable, a specific part of it may already be too hot, preventing the chip from operating as efficiently as possible.

As first reported by IEEE Spectrum (via Tom's Hardware), a team of researchers from the University of California, Los Angeles has created thermal transistors that use an electric field to spread heat evenly across the CPU. The breakthrough that made this possible was a layer just one molecule thick that becomes thermally conductive when an electrical charge is applied.

Thermal transistors can transfer heat from a hot area, usually the cores, to a cooler area of the chip, “balancing” the temperature and eliminating the hot spot. In experiments, the thermal transistors performed 13 times better than standard cooling techniques, which is very promising for a technology still in development.

KitGuru says: Will AMD, Nvidia and Intel use these thermal transistors in future products? If so, how long do you think it will take for that to happen?

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards