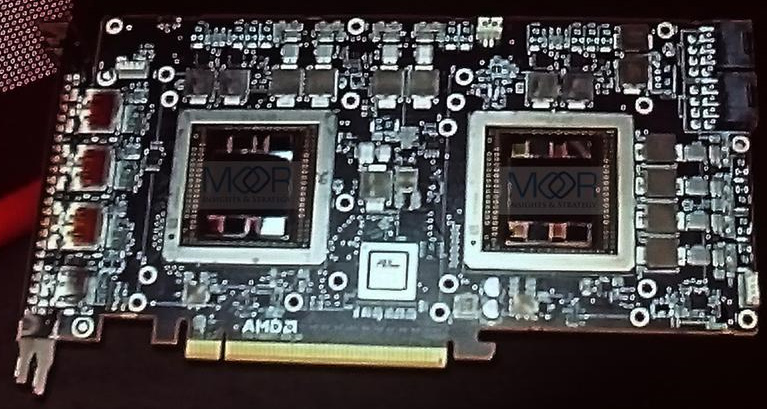

Even though AMD’s new-generation dual-chip flagship graphics card is not expected to hit the market for several months, the company is already using the card in demonstrations. The new add-in board does not resemble current-generation dual-chip graphics adapters.

The upcoming dual-chip AMD Radeon R9 Fury graphics card, which may officially be called the Radeon R9 Fury X2 or Radeon R9 Fury Maxx, features two “Fiji XT” graphics processing units, each with 4096 stream processors and 4GB of HBM memory. In total, the graphics solution features 8192 stream processors and 8GB of high-bandwidth memory. The board has two 8-pin PCI Express power connectors, which means that it can draw up to 375W within the PCI Express power specification. This may indicate that its clock rates, and therefore its power consumption, are relatively modest.
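The 375W ceiling comes from the PCI Express power limits: each 8-pin auxiliary connector is rated for 150W, and the x16 slot itself supplies up to 75W. The totals quoted above work out as follows (this is the in-spec limit only; a board can be designed to exceed it, as the Radeon R9 295X2 did):

$$
P_{\max} = 2 \times 150\,\text{W} + 75\,\text{W} = 375\,\text{W},
\qquad
2 \times 4096 = 8192 \text{ stream processors},
\qquad
2 \times 4\,\text{GB} = 8\,\text{GB HBM}.
$$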

The graphics card looks big, but not as big as current-generation dual-GPU adapters, thanks to the use of HBM memory, which sits on the GPU package rather than on the board. The board uses a PCI Express bridge from PLX, just like today’s dual-GPU graphics cards from AMD.

The upcoming Radeon R9 Fury X2 graphics adapter will use a dual-slot cooling system, but it is unclear whether the cooler will be liquid-based, like the one on the Radeon R9 295X2.

AMD did not comment on the news story.


KitGuru Says: It looks like AMD’s new dual-GPU flagship is ready. Given that AMD’s single-chip flagship, the Radeon R9 Fury X, will cost $649, the new dual-GPU product will most likely cost between $1000 and $1500.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Wow, that is a small board for a card with 2 GPUs.

The most compact Dual GPU board


This means that AMD is again going to have the fastest card on the market two years running, as this will take over the mantle from the 295X2.

Yup, with the Fury X being the fastest single GPU and the Fury X2 taking over from the 295X2 as the fastest dual GPU.

I think AMD will be doing quite well for the next fiscal year.

Only because Nvidia stopped producing X2 cards back in 2011...

Even if they still produced dual-GPU cards, they would probably be slower than the 295X2 and the upcoming Fury X2. (Not a fanboy, I currently own a 780.)

2 words. Game. Works.

Dual HBM1 4+4 GB + DirectX 12 + Windows 10 + a BIOS update for OC unlocking = you have the fastest GPU on the planet.

Honestly, they should put two Fury Nanos in a dual configuration.

add two more words. Is. Broken.

GameWorks isn’t inherently broken, but it certainly is one of the more demanding features Nvidia offers. Whether the performance sacrifice is worth it depends on the person and the hardware. I personally have two 980 Tis, so GameWorks doesn’t stress my system enough that I’ve needed to turn it off in any title yet.

Unfortunately, AMD owners don’t get to use it as often, because it is designed by Nvidia, which limits how much time AMD has to optimize it for their cards.

Seems like a dick move by Nvidia IMO. As usual, they try to force proprietary code and tech into the market; it only serves to extort more money from consumers and stunt their competitors.

lol, a custom GTX 980 Ti is faster than the 295X2 ;D

Titan Z was released in May 2013…

Says who??

GameWorks is horse shit! Maybe Nvidia should focus on using the DX12 tools currently available. That seems to be working in AMD’s favor for the time being, but Nvidia could change that.