Advanced Micro Devices this week officially unwrapped its new flagship single-chip graphics card based on the highly anticipated “Fiji” graphics processing unit. The new AMD Radeon R9 Fury X graphics adapter has a number of advantages that could make it one of the world’s highest-performing solutions. In fact, according to AMD’s own benchmarks, the new graphics card has every chance of being the world’s top single-GPU graphics card.

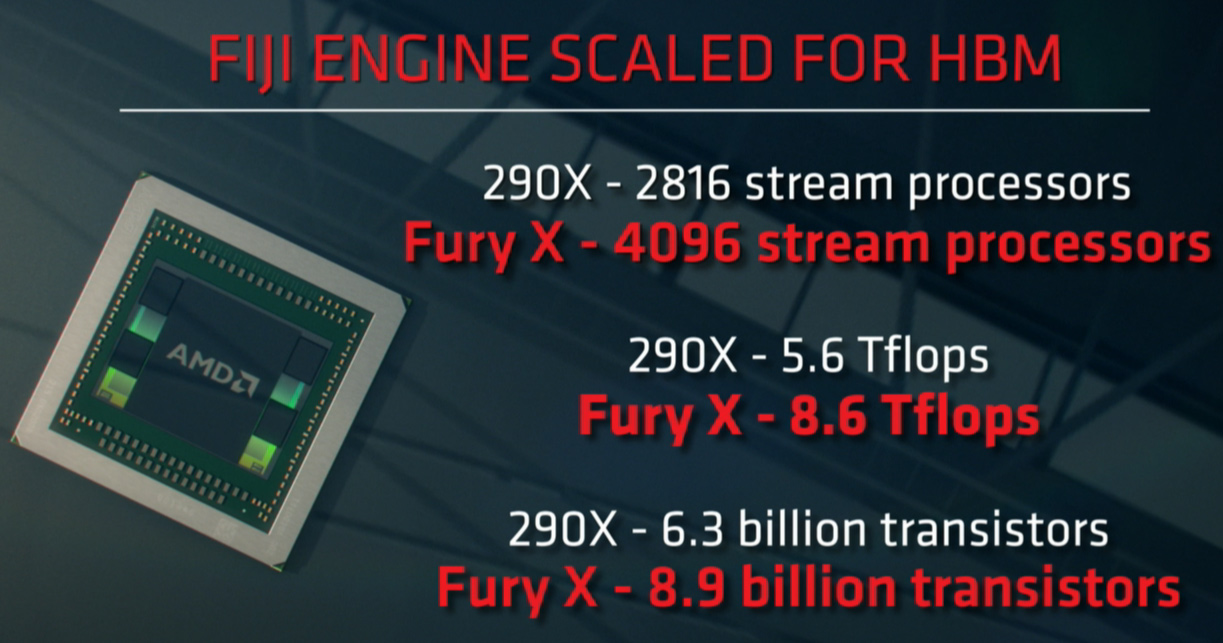

The AMD Radeon R9 Fury X graphics card is based on the “Fiji XT” graphics processing unit, which contains 8.9 billion transistors and features 4096 stream processors, 256 texture units and a 4096-bit memory bus. With 8.6 TFLOPS of compute performance and 512GB/s of memory bandwidth, the adapter is the most technologically advanced graphics card ever introduced. The only drawback of the Radeon R9 Fury X is the limited amount of high-bandwidth memory (HBM) onboard – 4GB – which may be a problem for a number of games.
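The headline figures can be cross-checked with simple arithmetic. The sketch below is only a back-of-the-envelope check: it assumes that each stream processor retires one fused multiply-add (two floating-point operations) per clock and that each memory-bus pin transfers 1 Gbit/s. Neither figure is stated in the article, so both are assumptions, and the function names are hypothetical.

```python
# Back-of-the-envelope check of the quoted Fury X figures.
# Assumptions (not from the article): 2 FLOPs per stream processor per clock
# (one fused multiply-add) and 1 Gbit/s per memory-bus pin.

STREAM_PROCESSORS = 4096      # from the article
COMPUTE_TFLOPS = 8.6          # from the article
BUS_WIDTH_BITS = 4096         # from the article
FLOPS_PER_SP_PER_CLOCK = 2    # assumption: one FMA per clock
GBIT_PER_PIN = 1.0            # assumption: per-pin data rate


def implied_clock_mhz(tflops, stream_processors, flops_per_clock):
    """Core clock in MHz implied by a peak TFLOPS figure."""
    return tflops * 1e12 / (stream_processors * flops_per_clock) / 1e6


def bandwidth_gb_s(bus_width_bits, gbit_per_pin):
    """Peak memory bandwidth in GB/s for a bus width and per-pin rate."""
    return bus_width_bits * gbit_per_pin / 8


if __name__ == "__main__":
    clock = implied_clock_mhz(COMPUTE_TFLOPS, STREAM_PROCESSORS,
                              FLOPS_PER_SP_PER_CLOCK)
    print(f"Implied core clock: {clock:.0f} MHz")
    print(f"Peak bandwidth: {bandwidth_gb_s(BUS_WIDTH_BITS, GBIT_PER_PIN):.0f} GB/s")
```

Under these assumptions the quoted 8.6 TFLOPS corresponds to a core clock of roughly 1050 MHz, and the 4096-bit bus yields the quoted 512GB/s.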

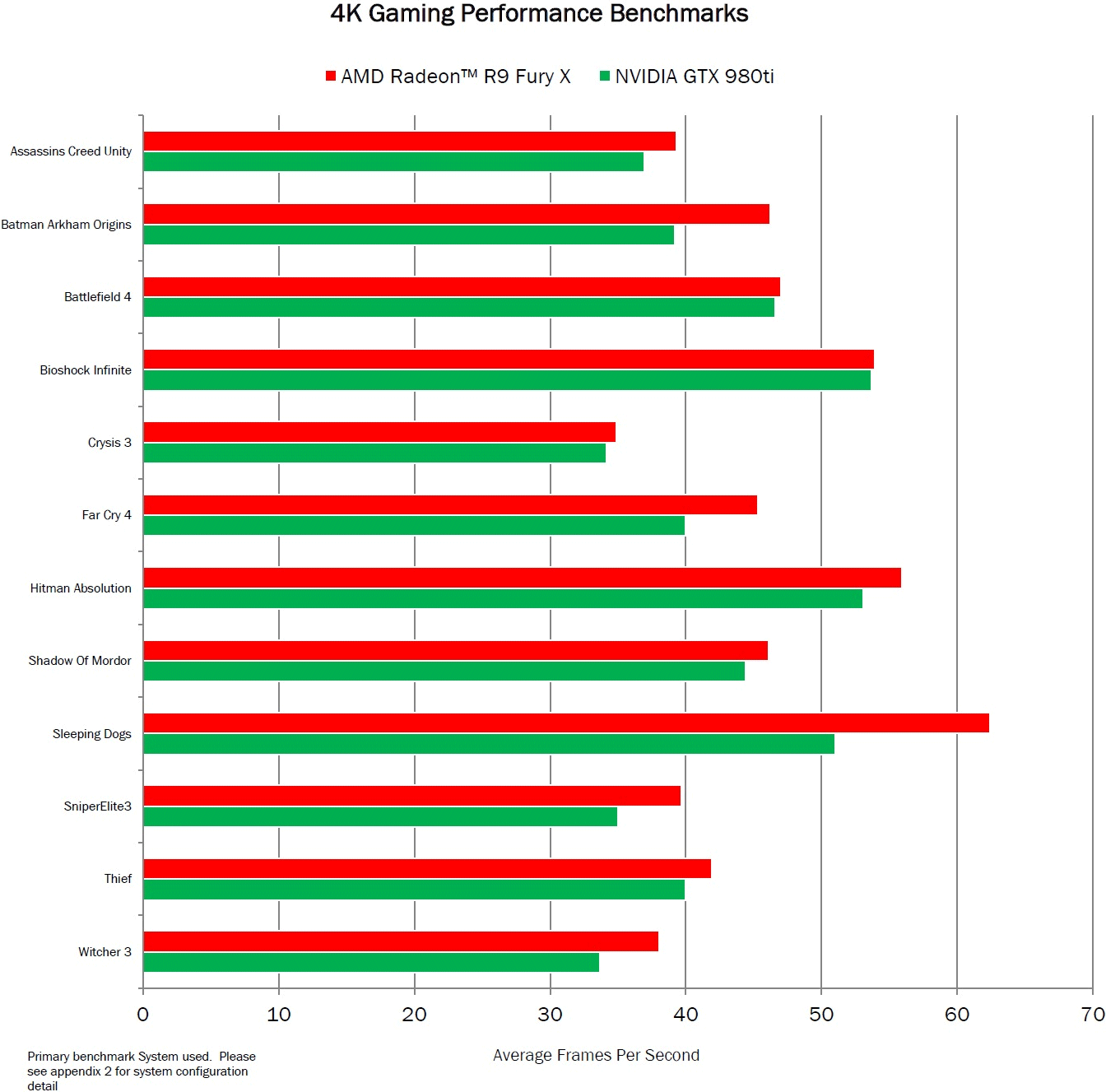

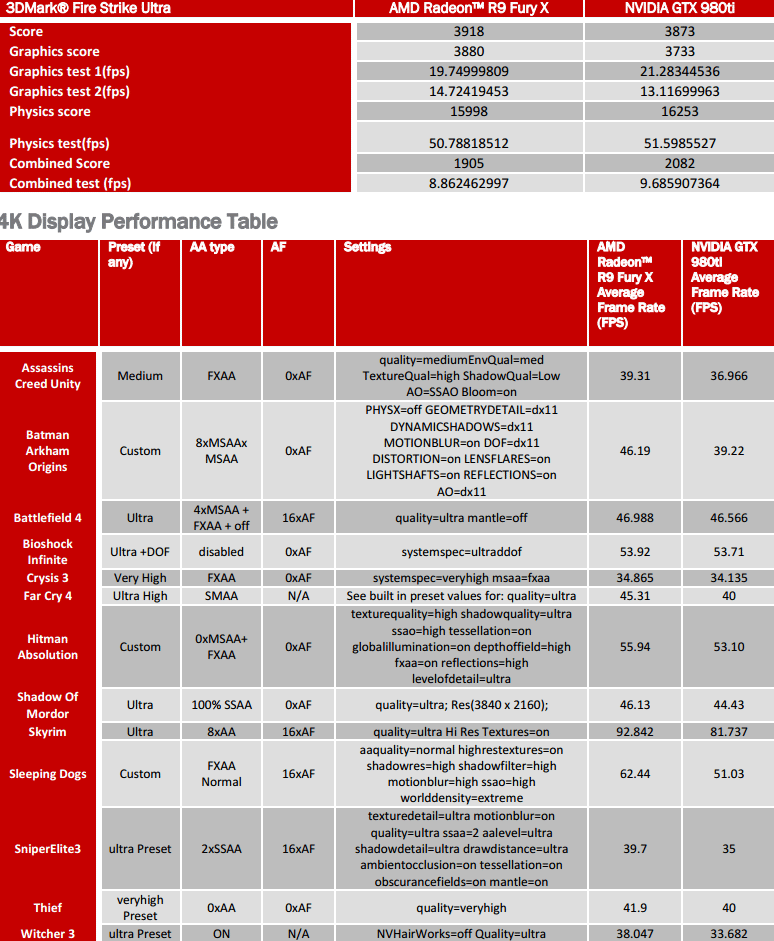

AMD officially remains silent about the performance that can be expected from its Radeon R9 Fury X, but the WccfTech website has published official performance benchmarks of the graphics board listed in the adapter’s reviewer’s guide. Such guides are written by independent hardware vendors (IHVs) to assist reviewers of their products. The documents are designed to provide reference performance numbers that show what type of performance to expect from a graphics card or a microprocessor. While reviewer’s guides are not supposed to unveil the weak points of a product, they tend to be rather accurate and faithful.

The benchmark results were obtained on an unknown test system in various games at 3840×2160 resolution with or without antialiasing. AMD’s specialists compared the Radeon R9 Fury X to Nvidia’s GeForce GTX 980 Ti, which costs the same amount of money ($649) and is only slightly behind the flagship GeForce GTX Titan X in terms of performance.

The test results of the Radeon R9 Fury X obtained by AMD show that it is faster than its rival in all cases. In some situations the difference is negligible, and the GeForce GTX Titan X would likely be faster than the Radeon R9 Fury X. However, in other situations the Radeon R9 Fury X’s lead seems to be unbeatable.

From the test results, it is unclear whether the 4GB onboard frame buffer limits the performance of the Radeon R9 Fury X, but it is clear that the card can compete against its direct rival with 6GB of memory.

The AMD Radeon R9 Fury X graphics board will be available next week for $649. In mid-July AMD will also ship the AMD Radeon R9 Fury with a basic cooling solution and lower performance for $549.

AMD did not comment on the news story.

KitGuru Says: Without any doubt, AMD’s Radeon R9 Fury X is a really fast graphics card. With further driver optimizations it may become the world’s highest-performing graphics adapter. But will it be more successful than Nvidia’s GeForce GTX 980 Ti and Titan X? Only time will tell!

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

What type of comparison is this?

0 AF and no AA in almost all comparisons, and some games aren’t even at max settings.

Not using GameWorks features I totally understand. But this is not how a man would do it!

Ridiculous AMD.

Not really interesting at all considering AMD’s terrible drivers and their low minimum FPS compared to Nvidia cards… The majority of reviews just show average FPS which doesn’t tell the whole story.
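The gap between average and minimum frame rates is easy to illustrate. The following is a minimal sketch, assuming a hypothetical list of per-frame render times in milliseconds; the sample numbers are made up for illustration and are not measurements of any card, and `fps_summary` is a hypothetical helper name.

```python
from statistics import mean


def fps_summary(frame_times_ms):
    """Return (average FPS, minimum FPS, 1% low FPS) from frame times in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    # The slowest single frame determines the minimum instantaneous FPS.
    minimum_fps = 1000.0 / max(frame_times_ms)
    # 1% low: FPS over the slowest 1% of frames (at least one frame).
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    one_percent_low = 1000.0 / mean(slowest_first[:count])
    return average_fps, minimum_fps, one_percent_low


if __name__ == "__main__":
    # Illustrative data only: 99 smooth frames at 20 ms plus one 100 ms stutter.
    sample = [20.0] * 99 + [100.0]
    avg, low, one_pct = fps_summary(sample)
    print(f"average {avg:.1f} fps, minimum {low:.1f} fps, 1% low {one_pct:.1f} fps")
```

In this made-up run the average stays near 48 fps even though the single stutter frame corresponds to 10 fps, which is why an average alone can hide the kind of dips the comment describes.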

AA isn’t really needed at 4K though

I like how for Crysis 3 they used FXAA instead of MSAA… the vRAM is the bottleneck there.

*sigh* and this is why we wait for other people to do testing.

Yup, and let’s face it: if they’re going to cost the same or nearly the same amount of money and provide equal fps, then with all the Nvidia technologies, the driver benefits and (I’m assuming) much lower power consumption and temperatures, plus much better overclocking, there is 0 reason to choose this card over a 980 Ti.

I counted 3 without AA which isn’t almost all.

Why would anyone turn it on if it makes the game unplayable?

One would think that it’s the same for both cards.

Fanboy???

On face value with pre-release drivers it seems a pretty good effort to me, as usual a multitude of reviews will reveal any deficiencies.

Power consumption, temperatures and noise, will be much better on this card than any Nvidia.

https://www.youtube.com/watch?v=agqO_vomcEY

http://www.eurogamer.net/articles/digitalfoundry-2015-amd-fury-x-the-new-leader-in-graphics-tech

Take your time.

This.

I have a 4K monitor, by the way, and there’s a difference with and without AA.

This is more related to the density of the pixels than to the resolution per se, but the same was said when we jumped to 1080p (no need for AA), and now here we are.

I feel that at the very least all of the games should be running at 16x AF with every option on ultra, turning off only any NVIDIA GameWon’tWorks features.

They customized some things that aren’t even changeable on their own in the game’s graphics menu, like Sleeping Dogs’ worlddensity=extreme. This is tricky, and if they needed to do this, they are not in a very good position.

Everybody who’s not speaking well of AMD is a FANBOY now?

Or people are just getting retarded with it?

Or maybe you are the fanboy, because you can’t accept someone who does not agree with this type of practice. Maybe you are like me: I hated it when Nvidia sold a 3.5GB card as a 4GB card, and I hate it when some games seem to be made to run well on only one architecture, like Nvidia does, while saying that the problem is with the other brands.

I have had only AMD cards all of my life; today I’m not that proud of it, and I almost bought the 980 Ti. I had faith that AMD would bring something to the table. But something is wrong: first because it’s just 4GB, then there is no HDMI 2.0, and many other things make me think that they are really just trying to catch up. I hope that DX12 helps AMD gain some points and that the 4GB will not be a problem when you can Crossfire, but it’s still not good enough for today. It’s not playable at 60fps at 4K either.

I like the Far Cry 4 and Sleeping Dogs performance, about 6 fps more in Far Cry 4. I’ve held off buying a 980 Ti for this very reason. AMD, please don’t let me down on the 24th; make sure your drivers are tested and up and running, and my money is yours.

I remember when they used to say that about 1080p, I guess in the end it depends on what size the screen is.

True, no single card can handle 4K at 60fps on ultra settings in Crysis 3, GTA 5, Witcher or Far Cry 4.

Every game they left AA off in is VERY demanding on vRAM. Every game they left AA on in either uses a form of AA with an exceedingly low vRAM impact (like FXAA and SMAA) or is known for a fact not to use 4GB of vRAM or more at 4K (like BF4, which only uses ~3-3.5GB of vRAM at 4K with 4x MSAA). If they tried a game like, say… Watch Dogs or Titanfall or something, which can use 3GB+ of vRAM at 1080p (let alone 4K), or even AC: Unity’s ultra + MSAA settings, which use 6GB at 1440p, let alone 4K? THEN we’d REALLY see some things happening. Because regardless of what anyone says… you toss two of those cards in Crossfire or two 980Ti/Titan X in SLI and you *WILL* have a good gaming experience (with AA being usable) in almost any game out there at 4K, except that AMD’s solutions will prevent you from doing so due to a vRAM bottleneck. And while I want AMD to succeed (because nVidia has been acting quite anti-consumer lately; and no, I’m not going to go into detail because I’d be typing forever), people cannot be cherry-picking benchmarks like this.

I initially thought those were AMD’s official benches, but I re-read and noticed it was from some other place… but that place is definitely cherry-picking benchmarks. I’m not saying that every game needs to be checked, or that only super demanding games need to be checked, but avoiding testing of unfavourable scenarios is a bad idea. It’s one thing to disable say… PhysX, which AMD cards (officially) cannot run, but everything else is fair game unless it cannot be turned on while using an AMD card. If a game can use nVidia PCSS shadows and AMD cards cannot use it, then you need to test with softest or whatever. If a game has HBAO which I’m sure IS usable on AMD cards, then it can be tested. And the flip side goes for TressFX, which heavily favours AMD cards.

Hello? 2006 called, it wants its shitty driver joke back.

Sorry, but it’s still valid, especially for Crossfire. I switched my 295X2 for 980s in SLI and haven’t had a tenth of the problems! Sad but true. I want AMD to do well (as I find their hardware awesome, tbh). I just get the impression that AMD hasn’t got the resources to make an impact any more (even the Fury is a re-jigged Tonga chip with HBM bolted on).

Sad but true, driver/software support counts for a LOT!

@marcelotezza:disqus Power consumption WON’T be better on Fury, but temps and noise will be, thanks to the liquid cooling. If Fury X turns out to be an anti-overclock card too, like previous Radeons, then it has barely any chance at this price.

6 years with them and 0 issues with drivers. Can you please explain what’s wrong with them? You probably don’t know but still repeat the same shit others say.

Not true, from what I read it will have big overclocking potential. That’s why it has the 2x 8-pin connectors. 275W is the standard consumption, so yeah, it will go much higher!

“AMD has targeted 275W as a typical level of power consumption under load, but electrically, the 2x eight-pin power inputs should allow for spikes of up to 375W. GPU load can be measured to a certain extent via a series of LEDs built into the board.”
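The 375W figure in that quote follows from the usual PCI Express power-delivery limits. Here is a minimal sketch, assuming 75W from the slot, 75W per 6-pin connector and 150W per 8-pin connector; those per-connector limits are not stated in the quote, so treat them as assumptions, and `board_power_limit_w` is a hypothetical helper name.

```python
# Rough board power ceiling from PCI Express power-delivery limits.
# Assumed limits (not stated in the quote): 75 W from the slot,
# 75 W per 6-pin connector, 150 W per 8-pin connector.
SLOT_LIMIT_W = 75
CONNECTOR_LIMIT_W = {"6-pin": 75, "8-pin": 150}


def board_power_limit_w(connectors):
    """Sum the slot limit and the limits of the auxiliary connectors."""
    return SLOT_LIMIT_W + sum(CONNECTOR_LIMIT_W[name] for name in connectors)


if __name__ == "__main__":
    limit = board_power_limit_w(["8-pin", "8-pin"])
    typical = 275  # typical board power from the quote
    print(f"Electrical limit: {limit} W, headroom over typical: {limit - typical} W")
```

Under these assumptions two 8-pin inputs give a 375W ceiling, about 100W above the quoted typical load. Whether the chip can actually use that headroom is a separate question that only overclocking tests can answer.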

You can add any PCI-e power connector on top of the required ones, but it doesn’t help if the chip can’t handle higher clocks. For example, reference 750 Tis don’t have external power connectors, and some brands put a 6-pin on their 750 Ti, yet you can’t overclock it further than the reference design. This applies to the GTX 960 too: the minimum required is a 6-pin, some manufacturers add an additional 6-pin, and you still can’t OC it more than the one with only one 6-pin. We’ll need to wait for hands-on reviews that test OC ability too.

You don’t need MSAA at such a high resolution… Source: own experience

Never said no… but the point is some people want to do it, and if they can’t then it automatically negates the slight FPS bonus they get by using those cards. And moreso if people buy two or three of them to use in a multi-GPU configuration where they have the raw power to churn out that smexy MSAA

Lol like nvidia doesn’t have shitty drivers…

It’s not valid; their drivers are as good as Nvidia’s. They haven’t been bad since at least the 4XXX series. They had one fuck-up and now everyone rides the bandwagon and immortalizes Nvidia’s apparently “amazing” drivers that Nvidia fanboys keep bleating on about.

AMD needs to be bought by Samsung ASAP, as I agree they haven’t been doing well for a while.

You assume too much, read more fanboy…

Really Tonga chip? Are you mad?

Come back when you’ve had recent experience of both sides and we’ll talk 🙂

In my experience, 90% of the people who claim that AMD has terrible drivers have never used an AMD graphics card (or, at minimum, haven’t used one since before AMD bought up ATi) and are, indeed, just repeating something they read other Nvidia fans say.

I used Nvidia exclusively from 2001 to 2012 when I bought my first AMD graphics card. The only AMD driver issues I’ve ever had were crashes from a too-aggressive overclock attempt. On the other hand, I could draw you an accurate picture of the “nv4_disp.dll” crash window, I saw it so often.

Plus, I’m pretty sure AMD/ATi have never released a driver that literally ruined/burned up stock, unmodified, non-overclocked graphics cards. Nvidia’s done it TWICE.

Yeah it may be, but at 4k you don’t really need Anti-aliasing, and if you need it it will be on low setting

They have to leave room for OC, you know, don’t you?

I love this card! Hbm memory is 1000 power plus vs gddr 5.

The 2x 8-pin connectors together with the suggested power usage under load suggest good OC potential.

Most people neglect the performance benefits of a wider, higher-bandwidth bus. I can’t seem to understand it. Why?

No, they used FXAA because there still isn’t a single GPU that can run Crysis 3 at 4K with MSAA. Crysis 3 uses very little vRAM compared to many games on that list.

Any low fps figures you know of would be from the 290X or 295X2, and this is neither of those cards. The 295X2 has lower minimums because all dual-GPU solutions have overhead that causes lower minimums than a single card, including Nvidia SLI.

The 980 Ti doesn’t overclock as well as everyone thinks. In all those benchmarks you see running at stock, it’s actually pegged at 1200 MHz, so when people OC to 1400 MHz it sure isn’t the 40% OC they like to claim. Not to mention Nvidia locks voltage down while AMD does not.
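The overclocking percentage depends entirely on which clock is taken as the reference. A short sketch of the arithmetic, using the 1200 MHz and 1400 MHz figures from the comment above; the 1000 MHz base clock is an assumption, chosen because it is the reference a 40% claim would imply, and `overclock_percent` is a hypothetical helper name.

```python
def overclock_percent(reference_mhz, overclocked_mhz):
    """Percentage gain of an overclock relative to a reference clock."""
    return (overclocked_mhz - reference_mhz) / reference_mhz * 100


if __name__ == "__main__":
    observed_stock_mhz = 1200   # clock the card reportedly holds at stock
    assumed_base_mhz = 1000     # assumption: base clock behind the "40%" claim
    overclocked_mhz = 1400

    print(f"vs. observed stock clock: {overclock_percent(observed_stock_mhz, overclocked_mhz):.1f}%")
    print(f"vs. assumed base clock:   {overclock_percent(assumed_base_mhz, overclocked_mhz):.1f}%")
```

Measured against the clock the card reportedly sustains at stock, the gain is about 16.7%; the 40% figure only appears when the lower base clock is used as the reference.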

You’re wrong. Every game they turned aa off on is too demanding with aa on at 4k to even get 20fps whether running fury x or titanx. Every game they turned aa on in is still playable at 4k with aa on. Sleeping dogs is one of the most demanding games around and they crush titanx and 980 ti. Amd always has better hardware than nvidia, software is amd’s problem so we will see next week how this plays out.

These are benchmarks, reviewers couldn’t give a flying fuck about the ‘experience’, just look at some entry level cards being tormented with 1440p/4K Ultra benchmarks, how the fuck is .3 fps in anyway informative?

Also, fucking LOL at 0 AF.

Power consumption probably won’t be better, I guess you’re right, my apologies.

The 980 Ti consumes about 250 to 260 watts running games.

It will not be a great difference, and if the Fury X really delivers or outperforms the 980 Ti, I will calculate the performance per watt.

Lol, I use the 290X, and when 14.12 for CCC came out my computer wouldn’t even power on. CCC also sucks for fan control, so I had to resort to MSI Afterburner, but aside from that nightmare it runs nicely now on the Omega drivers.

Well, I’ve made my point, and you can’t rebut jack. Enjoy your life :^)

OMG FINALLY THEY HAVE A VIABLE BENCHMARK AND IT IS INCREDIBLY…..meh!

correct me if I am wrong but HBM being the future of GPU’s should make the amd fury process data at the near instant speed since it is physically located right next to the amd gpu. and yet it shows barely any FPS increase at 4k. I am assuming this is non OC ( wouldn’t make much difference even 980 ti and titan x are getting a water coolers too). I would still consider some hope left if they were not benchmarking it against the younger sibling of the titan x…..plus amd has no hardware supported post processing features (gameworks, amd is still in denial) to deal with while 980 ti and titan x do them just for extra points (again I am assuming they disabled those features in nvidia for the purpose of the benchmark)!

this is a grim outcome…..I am assuming this watercooled 4000 core behemoth that can only bully the titan x 2800 core baby brother will cost as much as a 980ti (water cooler cards cost about that much). I might as well buy a titan x…..

I still think any card with less than 8GB is not ready for 4K!

That’s up to you, dude. It doesn’t mean everyone else will think as you do.

AC Unity, potentially Shadows of Mordor (most of it is really cache data) and Hitman Absolution (also with 0xMSAA) are the only games that use less than Crysis 3 at 4K on that list. And both AC:U and Hitman have settings turned down for 4K.

Besides, my point is this: What if somebody uses two cards? What if someone uses 3? What if somebody does not go single GPU for 4K because they’re not an idiot? Then you CAN turn up settings more. A lot more. And AA becomes a “I can turn this on” rather than “I’d rather be above 30fps pls”.

I’d say 6GB of vRAM can manage for 4K, but 8GB or higher should be the sweet spot right now, PURELY because of how many games are extremely lavish with their vRAM usage these days. 2014 was extremely bad with high vRAM in games as well.

Hitman Absolution was getting 55fps without AA… are you saying 2x MSAA would have sent it under 30fps?

Also, Sleeping Dogs’ max AA includes SSAA. If you were to turn on max AA in Sleeping Dogs at 4K you’d be playing at 8K, which RIGHTFULLY SHOULD crush a Titan X. Using FXAA is its only choice without SSAA, which I wholeheartedly understand. Sleeping Dogs isn’t a vRAM hog either.
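The "4K with SSAA means 8K" point is plain resolution arithmetic. A minimal sketch, assuming the supersampling factor is 2x per axis, which is what the comment implies rather than something confirmed here; the function names are hypothetical.

```python
def supersampled_resolution(width, height, factor_per_axis):
    """Internal render resolution when supersampling each axis by a factor."""
    return width * factor_per_axis, height * factor_per_axis


def pixel_count(width, height):
    """Total number of pixels rendered per frame."""
    return width * height


if __name__ == "__main__":
    native = (3840, 2160)                             # 4K output resolution
    internal = supersampled_resolution(*native, 2)    # assumption: 2x per axis
    ratio = pixel_count(*internal) / pixel_count(*native)
    print(f"Internal resolution: {internal[0]}x{internal[1]}, {ratio:.0f}x the pixels")
```

With a 2x-per-axis factor the GPU shades a 7680×4320 frame, four times the pixels of native 4K, which is why that setting is left out of single-card 4K tests.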

You clearly don’t understand HBM, so stop talking.

You clearly don’t understand vRAM. There comes a limit when you cannot compress vRAM data anymore and a limit when you cannot swap data in and out of vRAM fast enough to keep a high (or consistent) FPS. And HBM could be 1TB/s for all I care… if the data is coming from a HDD or RAM or even a SSD with a low 4K random read, you’re going to be bottlenecked SOMEWHERE swapping data in and out.

Here, educate yourself some http://linustechtips.com/main/topic/198568-the-video-ram-information-guide/

There’s no reason to read your biased comment. After this you can quit happy!

Nvidia has no HDMI 2.0 either, and it is sold as 2.0. Stop talking like Nvidia is the best.

Stay with your shitty Kepler card. When Pascal is released you will get a virtual nerf from Nvidia. Enjoy your 2-year-old card.

How retarded you are to even try to say what GPU i’m using, i talked about nvdia having hdmi 2.0?

My actual GPU is a HD4600. Please go away…

Here we go, are we starting with the insults? “I bought” means “ho comprato”, and you said “im not that proud of it i almost bought the 980Ti” mlmlmlmlmlm. Idiot.

I have both, a 970 and a 280X, and I can honestly say neither is worse than the other when it comes to drivers. Sometimes you get buggy ones, or ones that reduce performance in certain games and the like, but that’s the same on both team red and team green.

That said, over the years since my first NVidia card, the GeForce 3 Ti, I have seen far more claims of NVidia fiddling with their drivers to detect benchmarks and modify settings to get better scores. This is why I take many benchmarks that show massive differences with a pinch of salt, especially as 9 times out of 10 they are on the current “in” game. I just get the latest best-bang-for-the-buck card and that does the trick, as 12 months newer is a big performance gap in video card terms, so even if they are competing models, the newest one usually has the speed advantage.

I am more interested in DX12 performance, as that’s where we will see if HBM lives up to its hype. It was to be expected that this card would be at 980 Ti level. I guess NVidia expected that as well, which is why they rushed the cards out rather than be left lagging behind with the standard 980.

Well tbh, my main issues were to do with Crossfire and its poor support

yeah post processing needs that extra vram. isn’t that why we buy a graphic card in the first place so we can crank up the graphic setting and admire the beautiful in game vistas. 6gb manages 4k but at the cost of reducing a few settings….I call that BLASPHEMY XD

AMD’s own testing has usually been lowered by objective reviewers. Is this water cooled? Not good at all. OC’d 980tis are out and they are ALREADY 20% faster than the stock one AMD tested against.

Crysis 3 uses very little vram compared to several games on that list *sigh*

Let’s get one thing straight. This Fury X will not be ‘crushing’ a Titan or 980 Ti. These are clearly selective benchmarks. You can come back and quote me on this, but when the ‘proper’ benchies are done I expect to see it win some and lose some. No AMD card to date has consistently smashed its rival in every test.

But what to do when you realise AMD have sold you a load of selective bull. There is no way this card beats a Titan X and 980 Ti in every test. How about GTA V, Battlefield Hardline, Project Cars or Mortal Kombat X? And how about toggling Hairworks for Witcher 3? This is clearly a very good graphics card but I call bulls**t in these selective benchmarks.

I expect that too.

Not at 4K though. I can’t find it now but at 4K with like 3 Titans if you max Crysis 3 and use 4x MSAA it uses like 6GB of vRAM, which is more than most games on that list.

Well, my head hurts from all the bullshit I read today, but what you are saying is like claiming that a bottle fills and empties as fast as a bucket, so a bottle with the same capacity as a bucket delivers the same amount of water. It’s like using a bottle instead of a bucket to put out a fire, when in fact the bottle has a very narrow opening and is useless against fire because it doesn’t pour its water out as fast as the bucket.

Yes, a bigger bottle will contain more water, but by the time you empty it the fire has grown to double the size, and your house has now been burned down by an over-overclocked GTX 980 Ti, because you couldn’t get the performance you wanted from your miserable stock card and had to buy $200 of coolers and stuff, while the Fury has all of that included while being cheaper than the stock GTX 980 Ti.

someone call emergency, this guy is on fire…

Go play with your toy while the enthusiasts do their cool stuff, peasant. Even the Titan is an overpriced piece of shit: no real innovation, just pure marketing shit.

unless you have some kind of super power that allows you to see things 5 times larger than they really are, I seriously doubt you could look at 2 identical screens, one with AA and one without on a 4K monitor and tell the difference. It’s not a matter of opinion. It’s a matter of what AA does, which is smooth out ridged edges on images from the pixels being square. When you have a 4k monitor, the pixels are so small, you either need to have your nose squished up against the screen, or a magnifying glass to see individual pixels. But then again, there are the retards who run 16x AA on 1080p just to brag that they can, and then swear up and down that they can tell the difference between 16x and 4x.

They can’t sell or else they would have by now… AMD has a license agreement with Intel to make x86 chips which doesn’t transfer if the company is sold. If Samsung bought AMD, they’d have to renegotiate the contract, which wouldn’t be in Intel’s best interest, because it would just create more competition for them. Samsung(or anyone else buying AMD) would have to fork over billions of dollars, after spending hundreds of billions on purchasing AMD. There’s literally no reason for anyone to buy AMD unless AMD sold the GPU portion of the company alone, which would end up putting them completely under, since the GPU portion is the only thing keeping them alive(with the help of consoles). So, unless AMD gets so desperate they sell the company for basically nothing, you will only see them closing their doors if things don’t turn around.

Pretty sure I can tell the difference between 4x and 8x/16x in Skyrim XD.

That being said, I’m not one to judge what people have and use. And some people decide they want/need/whatever AA at 4K. YOU don’t, or YOU can’t tell the difference, or “most people” can’t tell the difference, just like most people can’t tell the difference between 60Hz and 120Hz but I can, etc etc.

The point is, if people feel the need for it and want to use it, then great. If a card can’t do it because of some limitation, it’s less attractive to them. Marketing that it is just makes them more ticked off.

HBM isn’t even 2x the bandwidth of the GDDR5 on the 980Ti/Titan X. It’s not a bottle and a bucket.

Also remember that the water filling the bucket comes from somewhere, and that source is RARELY the size of (or larger than) the intake of the “bucket”.

The Titan X is an extremely overpriced GPU and I do not care for it and wish people would not buy it just because it’s strong. There is such a thing as overpaying for things.

The point is, if you have a larger bucket and need to swap things in/out LESS, then you end up with a better chance of keeping things smooth with high vRAM counts. I’m not comparing an 8GB GDDR5 1000MHz 128-bit mem bus card to the HBM card here. I’m comparing a 6GB GDDR5 1750MHz 384-bit mem bus card WITH memory bandwidth optimizations that enable it to have somewhere around ~11% extra bandwidth over the regular mathematical calculation (proven using the CUDA benchmark that floated around for the 970 memory fiasco, with maxwell GPUs at same bus width and memory clockspeed as Kepler GPUs having faster bandwidth).

I’m not being a nVidia fanboy here. I’m simply pointing out the fact that the 4GB vRAM on those cards for a “true 4K card” etc is a limiter, regardless of AMD’s claims that “with HBM it’s not a limiting factor”.
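The bandwidth comparison in this exchange can be worked through from the numbers quoted in the thread. A hedged sketch: it assumes GDDR5 moves four bits per pin per quoted memory clock (so 1750 MHz is treated as 7 Gbit/s per pin), takes the ~11% Maxwell uplift from the comment above at face value, and uses the 512GB/s HBM figure from the article; `gddr5_bandwidth_gb_s` is a hypothetical helper name.

```python
def gddr5_bandwidth_gb_s(memory_clock_mhz, bus_width_bits, transfers_per_clock=4):
    """Peak GDDR5 bandwidth in GB/s.

    transfers_per_clock=4 is an assumption about how the quoted memory
    clock maps to the effective per-pin data rate.
    """
    return memory_clock_mhz * transfers_per_clock * bus_width_bits / 8 / 1000


if __name__ == "__main__":
    hbm_gb_s = 512.0                                  # from the article
    gddr5_gb_s = gddr5_bandwidth_gb_s(1750, 384)      # 6GB card in the comment
    gddr5_effective = gddr5_gb_s * 1.11               # commenter's ~11% claim

    print(f"GDDR5 peak: {gddr5_gb_s:.0f} GB/s")
    print(f"HBM vs GDDR5 peak: {hbm_gb_s / gddr5_gb_s:.2f}x")
    print(f"HBM vs GDDR5 with ~11% uplift: {hbm_gb_s / gddr5_effective:.2f}x")
```

Under these assumptions the 384-bit GDDR5 configuration peaks at 336GB/s, so the HBM advantage works out to roughly 1.5x, or about 1.4x if the claimed uplift holds.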

If you think you can tell the difference, it’s because it’s in your mind (the diff. in AA). I would wager a large portion of money of you or anyone else picking the 16x AA over no AA on a 4k monitor less than 60% of the time in a “blind taste test” scenario.

Except that with both SLI and Crossfire, most “support” issues result in the devs not contacting nVidia or AMD and sorting out multiGPU support in a timely manner. That simply is what it is. AMD could likely benefit from more frequent driver updates, but otherwise if multiGPU is broken in game X then it’s probably not AMD or nVidia’s fault. Also, the engine plays a large role: ID Tech 5, Unity and Unreal Engine 4 will *NEVER* support AFR-type multi-GPU, due to tech that requires knowledge of previous frame frame-buffer data (at least for ID Tech 5 and UE4. Unity just sucks as an engine for anything beyond low end titles).

4GB of RAM is more than enough for 4K gaming.

If a game developer cannot code properly then why should we be paying more for manufacturers to lump more RAM onboard?

I have seen more than one developer state that 4GB should be plenty.

Purchasing videocards on their VRAM sizes is so 1990.

I agree that optimization is needed, but that doesn’t mean it’s coming, and that’s just reality. As crappy as it sounds.

You don’t know what you are talking about. AMD’s CPU IP is extremely valuable.

A lot of companies would gladly buy AMD but they have 2 problems: US Government and Intel.

AMD’s and Nvidia’s GPUs are different, so your examples are irrelevant.

Did you even read what I wrote?

No not really, these apply to HD7850 series, to R9 270x and even some of the 280/280x has dual 8pin connectors, yet you can’t oc them higher.

This is pretty much a general fact, that more connectors doesn’t equal better potentials.

The benchmarks are already out, and there is 20% better performance over the GTX 980 Ti at 4K. Also, second-generation HBM is already under development, and that will allow for more memory, as apparently people like you are convinced that larger-capacity memory is better than faster, higher-bandwidth memory. We will see when the cards are tested by everyone, and the results will decide.

I am fully aware of HBM 2.0 and it allowing more than 4GB being out soon. But it is not out now. I do not care if you bleed AMD logos. 4GB at ~1.8x the bandwidth of 6GB or 12GB does not automagically mean memory size doesn’t matter. Or why not just make a 2GB card? It’s “fast enough” right?

I’m being wholly neutral here. AMD has benefits and downsides. nVidia has benefits and downsides. If AMD had an 8GB card, they’d have won lock, stock and barrel. No contest, especially at their price points. But they didn’t, and they made 4GB cards, and are marketing them as “true 4K cards”. If they have a limiting factor they have a limiting factor. I could not care less whether or not that limiting factor affects YOU. It is there. You can “praise be to AMD” all you want, but their cards are not perfect, and they had an opportunity to fully destroy the opposition and it was simply wasted. Whether it be an oversight for them, or it was that R&D couldn’t finish HBM 2.0 in this time window, it doesn’t matter.

If people want 4K gaming, especially in multi-GPU configs, nVidia is likely going to remain king, especially if they like multisample-type AA. It is what it is. For 1080p and 1440p? R9 Fury X all the way. Go nuts. My only point this whole time has been that 4GB would be a limiting factor at 4K, and tons of people have tried (unsuccessfully) to prove that “AA isn’t needed” or simply that “HBM is so good it doesn’t matter”. AMD made a powerful card with a slight Achilles heel. nVidia has many more Achilles heels across their entire Maxwell line, but the mass public doesn’t give a crap and will buy them anyway, even without any edge-case scenarios like the 4K one I’ve pitched. I don’t care about brand, I care about what the brands give me. You buy what you want; my points all stand, whether your preferences lie within them or not.

I want more benches to come out, from truly impartial places. Not places that are going to cherry-pick settings or games to make one card look good. These benchmarks’ trend shows that vRAM is a problem. Maybe later benches might prove me wrong, though I sincerely doubt it. If the card is good at some things and bad at others, it needs to be known. Not swept under a rug. And that goes doubly so for nVidia. I’m STILL annoyed at people defending the 970.

No, it doesn’t apply to AMD cards, you are just making stuff up.

You do realize that dual 8pins are useless for a 280/280x.

If the card is limited by default then yes adding more pins won’t help but if the card has the ability to feed more power to the gpu it will help. And that’s what they basically said about Fury. Also Fury is AMD’s flagship card, don’t compare it to low end cards like 750Ti or 960.

Of course.

What I have seen from Nvidia and AMD is that Nvidia is the overpriced, fancy bourgeoisie stuff, and AMD is the guys who get work done and make better things: GDDR5 and now HBM.

We all need to wait for the release and see more tests, but AMD has done a great job and needs support to continue. If you want to be ignorant and discard all this, you are free to, but you don’t know what you are losing.

Right. The fact that you see only bad for nVidia and only good for AMD means you’re part of the problem. Either learn to be impartial or I label you as a fanboy and proceed to disregard any input you have on any subject regarding GPUs. I have seen both the good and the bad of the tech from both green and red team. And neither side is clean. I don’t even use desktops!

nVidia has great and terrible things with their 900 series. MFAA and the tessellation improvements and how cool the cards run and the memory bandwidth improvements are all fantastic. The downsides are voltage fluctuations causing instability for the “benefit” of “reduced TDP”, and MFAA not working in SLI, and lack of CSAA support on all Maxwell GPUs, and mismatched clockspeeds in SLI causing instabilities and/or slight stuttering, etc. Their current slew of drivers is terrible for most users, causing varying amounts of crashing and I even know someone who had windows itself break on two separate computers simply by installing 353.06.

AMD has powerful cards that have fantastic crossfire scaling when drivers are doing their job, and the trend is that lack of a connecting bridge is great as it’s one less part that needs to be worried about. Also they have great driver-level MLAA solutions and their Omega drivers are pretty pro-consumer with the level of control that they offer, etc. That being said, their cards draw a ton of power and produce a ton of heat (regardless of whether or not the cooler gets rid of it, it’s being dumped into your room) and their R9 290/290X/390/390X cards have very limited overclocking room. Crossfire does not work in windowed mode AT ALL by design. The R9 Fury and Fury X cards are extremely strong for having 4GB of vRAM. And their drivers do not update nearly as fast as they could for per-game improvements.

And there’s TONS of other pluses and negatives about each team that I’m not even gonna BEGIN to explain, because I’ll make way too long a post. But YOU should go learn something about each side and make yourself impartial. I only care about what’s good and bad about each product, and I change recommendations based on that.

well thanks for the info, but you gotta admit nvidia has always been refining and making better use of current technology rather than innovating and inventing better technology. i don’t know a lot on the software side, but it’s mostly true for the hardware. the upside of nvidia is that their CUDA cores are better, and they have better support for rendering programs. it’s just like the apple of graphics: good support but much higher prices and useless stuff tagged along. and what really grinds my gears is that they get away with it and benefit from it. I mean a graphics card for $3000? was it the titan Z or something? imagine how much money they got from that?

just marketing bullshit, they ruined the image of the industry as well. unfair competition. when something is better in general people have to admit it’s better, not go around and bend their words to deliver a different image of the actual thing. if AMD is making better cards for gaming, you can’t say oh but nvidia cards are better for graphics design and video editing, and discard all the benefits of the other. that’s what the industry is like nowadays, and if we don’t support a healthy business we are contributing to breaking up the whole industry and making it a monopoly.

That’s a very accurate argument about AA on 4K monitors. You really don’t need it unless you are Ant-Man.

Yes. I’m fully aware of all the crap both companies do. But let’s not forget the $1500 R9 295X2 when the R9 290X was $500 a pop. Or the $900 AMD FX-9590 which dropped to $300 ridiculously fast after people realized they were just higher clocked FX-8350 chips.

Anything you can call out from nVidia I can counter with something from AMD, and vice versa. AMD is the one who needs the unbeatable product now. I want nVidia to lose. I want them to absolutely get their butts kicked because I hate the way nVidia has been going. Their drivers have been slipping and Maxwell’s tricks for “efficiency” in drawn TDP are nothing but a big detriment. I have had people trying to stream TW3 and getting constant crashes, and I had to have them overvolt their GPUs because the load from the game and the stream is so much that the GPU crashes the drivers because it doesn’t raise voltage fast enough to cope with the load. Because “efficiency”. Or their 970 fiasco. Or their 980Ms with missing VRMs to kill overclock potential (there’s 3 extra VRM slots on the boards just open, and without adding them, they oft overheat and black-screen the PC with overclocks), or the fact that the GTX 960 is such a pathetic card with such a high placement in the naming hierarchy.

BUT AMD doesn’t get a free pass simply because nVidia is sucking right now. I will criticize both. End of story. AMD shouldn’t be marketing the cards as they currently are and that 4GB is more than likely indeed going to be a decent bottleneck. This is an AMD story, and I’ll criticize AMD cards (and the benchmarks) here. Does not make sense to come to an AMD-praising article and bash nVidia.

well if you compare 1500 to 3000 we don’t have anything else to say here

I don’t know…

I did think 4GB was going to be a minor problem (mostly unoptimised texture packs at 4K take you over 4GB), however I just read Hardware Canucks’ review of the 390X and it didn’t seem to gain anything worth mentioning over a 980 at 4K.

Funny that a 390X has higher minimum framerates than a 980 then.

Must be those drivers again huh?

*Hardware Canucks 390X review for proof. Measured using FCAT. 🙂

Nice try though…

Yes, $1500 for two $500 cards is the same as $3000 for two $1000 cards. 50% extra price. Both cards were overpriced, both cards should never have been bought. Get off your AMD-loving horse and go impartial or, again, I label you fanboy and disregard any input on this subject.
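
For anyone who wants to check the arithmetic, here is a minimal sketch in Python using only the round prices quoted in this thread ($1500 for the R9 295X2 against two $500 R9 290X cards, $3000 for the dual-GPU card against two $1000 single cards). The `premium` helper is a made-up name for illustration, and actual street prices may well have differed.

```python
def premium(dual_price, single_price):
    """Fraction paid on top of the cost of two single-GPU cards."""
    return dual_price / (2 * single_price) - 1


# Round prices as quoted in the thread, not verified retail figures.
comparisons = [
    ("$1500 dual card vs two $500 cards", 1500, 500),
    ("$3000 dual card vs two $1000 cards", 3000, 1000),
]

for label, dual, single in comparisons:
    print(f"{label}: {premium(dual, single):.0%} premium")
```

On those figures the markup over two single cards is the same percentage in both cases, even though the absolute gap is twice as large for the more expensive card.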

it’s not amd love, in fact i love nvidia more, but amd needs some love as well, and nvidia has all the love right now, so i’m feeling bad for amd 😛

also, adding 500 dollars for all the stuff that goes on the board is reasonable, as well as a liquid cooler, but adding $1000 for an aircooled card, with about 1000 fewer cores than the former, it just doesn’t match up.

Well a 390X is simply a rebranded 290X so I would not expect anything. The Fury X however is a mostly new architecture, if power consumption to processing power ratio is anything to go by, to say nothing of the HBM integration.

That being said, I really want to see somewhere harsh and impartial. I believe one such place is HardOCP where they flat out compare card performance/vRAM usage/etc between games, and if any problems appear they note every bit of it. I haven’t seen Hardware Canucks’ review mind you, but again, an R9 390X is nothing “new”.

I can definitely see the difference in AC:Unity. So much so that I prefer 1440p with 4x FX/TXAA/8xMSAA to 2160p with no AA.

Factually speaking this is not someone with a 4K TV looking at a 40 inch UHD screen from 12 feet away, this is me looking at the 28″ screen from 1.5ft away. With a 157 PPI number, and my head only 3 inches further away than the distance Apple tested at when it decided we needed 300 PPI to ‘not see pixels’, it should have been quite easy for you to predict… even without owning your own UHD screen.
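
The 157 PPI figure is easy to reproduce. The sketch below (Python, with hypothetical helper names) computes pixel density from the resolution and diagonal, then converts it to pixels per degree of visual angle at 1.5ft (18 inches). The roughly 60 pixels per degree often quoted as the limit of 20/20 vision is a rule of thumb I am assuming here, not a number from this thread.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density from resolution and diagonal screen size."""
    return math.hypot(width_px, height_px) / diagonal_in


def pixels_per_degree(ppi, distance_in):
    """Pixels covered by one degree of visual angle at a viewing distance."""
    inches_per_degree = 2 * distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


ppi = pixels_per_inch(3840, 2160, 28)   # 28-inch UHD panel
ppd = pixels_per_degree(ppi, 18)        # viewed from 1.5 ft

print(f"{ppi:.0f} PPI, {ppd:.1f} pixels per degree")
```

That comes out at about 157 PPI and a bit under 50 pixels per degree, which is below the assumed 60 pixels per degree threshold, so seeing aliasing at that distance is at least plausible.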

Erm, sounds like Nvidia too. I have a GTX 970 that would not go past 30% utilization for like the first 4 months that I had it, despite it pushing a UHD display it seemingly hardlimited itself there. Then, it went away for maybe 3 or 4 restarts… then it came back but only around 1.5hrs into gaming sessions on games like Titanfall and Crysis 3.

Three driver cleaner safemode re-installs and lots of faffing about later and suddenly it just disappeared. It’s been months since that and it’s only happened once since… instantly repaired by driver rollback, but upon installing the driver update again it was fine and has continued to be.

And you have to use MSI Afterburner for most fans, there certainly isn’t a Fan Controller built into Nvidia Experience or Control Panel. Hasn’t been an Nvidia-made Fan Control app for a long time.

Really Kit Guru? Every other benchmark shows the Fury X behind the 980 Ti in almost every benchmark! Just because you do not want to make AMD angry again you are just going to plain lie in your review? You are truly sad Kit Guru! And you have now lost all credibility! I will no longer trust any of your reviews!

HBM uses a different technology. I’ve seen a benchmark (in game) earlier that showed the 980 Ti using about 4GB of its vRAM while the Fury X was using only 3GB for the very same application.

True, if AMD didn’t share all its technology… Nvidia would still be in the dark… The only stupid move is that AMD shares while Nvidia doesn’t.

Might just be vRAM bloating. “Use more because it’s there”.

HBM is just fast. It doesn’t compensate for memory size when memory size becomes a limiter.

lol this reminds me of so many people making brash claims about how they can feel the difference from having a mechanical keyboard or some fancy costly mouse. Sure the mouse and keyboard may be very well designed, but if you are not good at a game, buying some fancy peripheral is not going to make you better at said game. Too many egos trying to sound like they can feel the difference when really they can’t. We have people doing the same stuff with screens having pixel densities north of 576PPI on mobile phones. Unless they stuck their faces literally on the screen it is going to be very tough and stressful for the eyes to even take note of the pixels without magnification aids.

I think half of this placebo talk is coming from those longing to be as good as the pro gamers. I honestly doubt even the pro gamers can see/feel the difference. They have signed dosh-earning contracts so endorsement statements are par for the course; they just happen to be very good at the game, nothing more, simply by having quick twitch reaction times and loads of practice. The other half is coming from buyer justification: they paid a lot for it so it better feel impressive. If it doesn’t, simply state it a number of times, to the point that even the person making it up starts to believe their own claims.