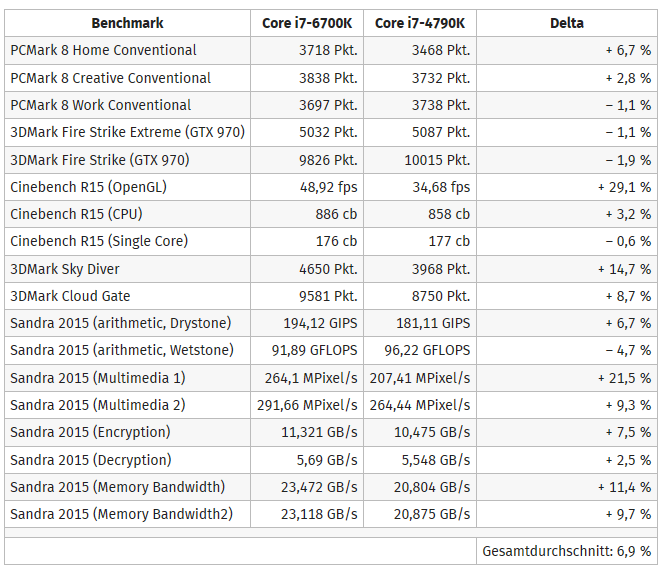

A web-site has published what it claims to be the first fully-fledged review of Intel Corp.’s as-yet unannounced Core i7-6700K central processing unit, code-named “Skylake”. Judging by that review, Intel’s forthcoming flagship chip for enthusiasts will be up to 29.1 per cent faster than the company’s current Core i7-4790K central processing unit.

The TechBang web-site has obtained a sample of the Intel Core i7-6700K processor based on the company’s latest “Skylake” micro-architecture. The chip features four cores with Hyper-Threading technology, 8MB of last-level cache, an integrated graphics core and a 95W thermal design power. The CPU operates at 4GHz and can boost itself to 4.20GHz or 4.40GHz, depending on the workload.

Based on test results obtained by the web-site, Intel’s Core i7-6700K central processing unit considerably outperforms its enthusiast-class predecessor – the Core i7-4790K based on the Haswell micro-architecture.

As reported before, Intel’s “Skylake” micro-architecture demonstrates excellent speed improvements in applications that demand multi-threaded performance. The maximum improvement is nearly 30 per cent, whereas the typical increase is close to 10 per cent. According to the cumulative table created by the Computerbase.de web-site, applications that rely on single-threaded performance run similarly on current-gen and next-gen processors.
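The gap between the “maximum” and “typical” figures comes down to how a table of per-test results is summarized. Below is a minimal sketch. It assumes placeholder new/old score ratios, not the TechBang or Computerbase.de data. It also uses the geometric mean as the “typical” figure, which is one common way to average speedup ratios but not necessarily the method those sites used. `summarize_speedups` is a hypothetical helper.

```python
from statistics import geometric_mean


def summarize_speedups(ratios: list[float]) -> tuple[float, float]:
    """Return (maximum, geometric-mean) percent improvement.

    Each ratio is new_score / old_score for one benchmark, so 1.29 means
    29% faster and 0.99 means 1% slower.
    """
    best = (max(ratios) - 1.0) * 100.0
    typical = (geometric_mean(ratios) - 1.0) * 100.0
    return best, typical


# Placeholder ratios for illustration only, not measured results
best, typical = summarize_speedups([1.29, 1.05, 1.02, 0.99, 1.08])
print(f"max {best:.1f}%, geomean {typical:.1f}%")
```

A single GPU-bound outlier sets the “maximum” on its own, while the geometric mean is pulled toward the many tests that barely moved.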

Intel did not comment on the news-story.


KitGuru Says: Given that many modern workloads are multi-threaded, the micro-architectural improvements of “Skylake” seem sensible and well targeted. The advantages of “Skylake” will be considerably more evident in server applications, where multi-threaded performance is crucial. Moreover, since the “Skylake-EP” chips will feature a different internal architecture and support AVX-3.2 512-bit instructions, expect the new server CPUs to show dramatically higher performance than today’s offerings.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


Well then… Just give me a price tag


What ~30% improvement?

You mean Iris Pro? Because the only significant differences are in 3DMark, Cinebench (OpenGL) and Sandra multimedia…

Where CPU matters, it shows weak improvement…

+29% performance, and the table gets that from an OpenGL test? Are you kidding me? That test is GPU-bound…

Looks like the CPU part is giving the minimal gains as expected…

Talk about click bait :))

Worse in some. Let’s be honest, that should not happen.

Yeah 29% faster at GPU.

CPU side it’s 99.9% Haswell.

Rofl, it’s actually slower on both Fire Strike tests paired with a GTX 970.

Does anyone even use the GPU part of these processors? I would have thought anyone getting an i7 would want a proper add-in GPU. I only ask because the improvement in the CPU part of the chip looks minimal.

Not worth upgrading 🙁

When DirectX 12 comes out with Windows 10, it can use the onboard GPU and a separate graphics card AT THE SAME TIME. So we’ll all be using the GPU part soon.

Time to upgrade from my i7 930. DirectX 12 is set to unlock even more power from these chips (and some older CPUs too), so I think it’s worth it, especially now that VR is just around the corner.

It uses the i7’s onboard GPU. Don’t forget Windows 10 can use the i7’s onboard GPU and any available graphics cards at the same time. Along with the much improved multicore efficiency of Windows 10, we should see some impressive gains when it comes out.

In your case it’s probably a good idea, but remember that this assumed typical increase of 10 per cent is measured against Haswell on the 22nm process, which is TWO (2) generations old, not against the latest Broadwell 14nm shrink….

Also, as I’ve mentioned below, Windows 10 can use the built-in GPU AND any available graphics card at the same time. This should give a noticeable boost in games, especially as my old i7 doesn’t have a built-in GPU. For example, my GTX 970 could handle the main workload of any game while the i7-6700K’s GPU handles post-processing effects on its own.

Sure, in your case, coming from the i7 930 and its board, you will see a good general improvement, so you will not suffer buyer’s remorse, although you will not see data throughput from DDR4 as good as people seem to think….

They should have scrapped antiquated DDR4 and gone directly to the Hybrid Memory Cube for its faster SerDes interconnect speeds, or even HBM with its slower parallel interconnect….

It has to be a DX12 program AND be coded to run multi-GPU. So it’s not automatic, and not likely to be common.


What “improved multicore efficiency” are you talking about? Where is everyone getting this “Win 10 and DX12 r gonna give me liek 50% moar power” thing?

If that Skylake onboard GPU is an Iris Pro, then comparing it to an HD4600 is obviously going to be a no brainer in performance. Why not grab a mobile chip with an Iris Pro 5200 like the i7-4870HQ or i7-4980HQ and test that iGPU as a comparison? It’s not like Iris Pro doesn’t already exist for both Haswell and Broadwell.

And even beyond that, Skylake is showing as little as -1.1% performance and as much as 6.7% (and yes, that is NEGATIVE performance). And Skylake is a double generation jump too; comparing Skylake to Haswell is like comparing Haswell to Sandy Bridge; which I might add is a solid 10-20% (or more) performance boost in most cases. It’s not doing very well.

Firstly, the title is grossly misleading and Kitguru has simply traded any chance at building credibility for a catchy headline… Very stupid and short sighted.

As for the subject: at best, the numbers confirm what most people already expect from Intel… that it is a non-performer unless it has competition.

The whole idea of an “Enthusiast Processor” is that any unnecessary bloat (such as a piddly integrated graphics) is discarded and the CPU is designed around maximum performance gains. We see no performance gains here – and no reason to buy this product. Intel is a hurdle to PC industry growth.

Oh please… What optimization routines are there for integrated graphic parts and external GPU’s? None whatsoever. What on earth are you jabbering about?

Some dx12 optimizations can fairly easily be patched into games.

http://www.pcgamer.com/directx-12-will-be-able-to-use-your-integrated-gpu-to-improve-performance/

The Windows 10 beta already has some DX12 features, and benchmarks show the API is MUCH more efficient. http://www.pcworld.com/article/2900814/tested-directx-12s-potential-performance-leap-is-insane.html

the 6700K’s GPU is Skylake GT2, not Iris Pro (GT3). In fact, the i7-5775C (Broadwell GT3) scores higher in Cinebench OpenGL and 3DMark.

Depends what you’re upgrading from. My Athlon 64 X2 4200+ could be replaced with nearly anything at this point, so why not grab the current latest when/if I can find the money for a new system?

You have a CPU that is 10 years old. People here are talking about whether upgrading is worthwhile for systems that are 6 years old or younger.

“Don’t forget windows 10 can use i7’s onboard gpu and any available graphics cards at the same time”

Yes, it’s possible, but honestly that feature is at the mercy of game developers, who may or may not implement it. Let’s hope game developers put the useless IGP to some use.

Even upgrading from an i7 930 would be very much worth it.

Intel 750 NVMe would be nice if he’s getting skylake.

And if you already have sandy or newer you could get an order of magnitude higher bang for your buck by putting the money for cpu+mobo upgrade to a graphics card upgrade instead. And that has been shown to actually work in real life.

Am I reading the chart right? It’s worse than before in games?

No, it’s not, unless the application is heavily AVX-optimized.

“Unlinked Explicit Multiadapter is also the bottom of three-tiers of developer hand-holding. You will not see any benefits at all, unless the game developer puts a lot of care in creating a load-balancing algorithm, and even more care in their QA department to make sure it works efficiently across arbitrary configurations.”-PCPer’s Build2015 coverage

linky: http://www.pcper.com/reviews/Graphics-Cards/BUILD-2015-Final-DirectX-12-Reveal

None of this is to say I wouldn’t welcome it, but I do NOT expect it to be patched into existing games, or come from anyone but major studios with the manpower to do the coding.
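To make the “load-balancing algorithm” point concrete, here is a minimal sketch that divides a frame’s rows between a discrete GPU and an iGPU in proportion to each adapter’s measured throughput. The `split_rows` helper and the throughput figures are hypothetical. Real DirectX 12 explicit multiadapter code would also have to handle cross-adapter copies, synchronization and per-frame re-measurement, all of which this ignores.

```python
def split_rows(total_rows: int, throughputs: dict[str, float]) -> dict[str, int]:
    """Split total_rows across adapters in proportion to their throughput.

    Hypothetical helper: throughputs are relative rates (e.g. rows per ms)
    that an engine would have to measure itself.
    """
    total_rate = sum(throughputs.values())
    shares = {
        name: int(total_rows * rate / total_rate)
        for name, rate in throughputs.items()
    }
    # int() truncates, so hand any leftover rows to the fastest adapter
    leftover = total_rows - sum(shares.values())
    fastest = max(throughputs, key=throughputs.get)
    shares[fastest] += leftover
    return shares


# Illustrative numbers only: a dGPU assumed to be 9x faster than the iGPU
print(split_rows(1080, {"dGPU": 9.0, "iGPU": 1.0}))  # {'dGPU': 972, 'iGPU': 108}
```

Even this toy version shows why the quote stresses QA: the split is only as good as the throughput estimates, which vary across every hardware combination.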

I’m sorry but game benchmarks say otherwise. The difference between a 930 and a 2600k is 15-20% on its own.

Right… And that’s a benchmark designed to push against the limits of DX11. It does not mean that Windows 10 itself will boost CPU performance, nor that every program is suddenly going to use DirectX 12. It’s a null claim at this point, unless today’s CPU-problematic games get a DX12 patch (unlikely). And even then, most CPU-problematic games today are just really, really unoptimized, since their visuals are similar to (or sometimes worse than) those of games from years ago that needed far, far less system performance.

Do a clock-for-clock performance comparison and you will realize there is not much reason to upgrade to newer Intel CPUs.

Take a look at the overclocked performance of the Core i5 750 vs the Core i5 2500K (at almost identical clocks). The performance difference between the first-gen Nehalem-based Core i5 and Sandy Bridge is not much.

http://semiaccurate.com/2011/01/02/intel-core-i7-2500k-review/
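A clock-for-clock comparison divides each benchmark score by the clock speed it was measured at. Below is a minimal sketch with a hypothetical `per_clock_gain` helper and placeholder scores, which are not taken from the linked review. The score-per-GHz ratio is only a rough proxy for IPC. It assumes the benchmark scales linearly with frequency, which memory-bound workloads do not.

```python
def per_clock_gain(score_new: float, ghz_new: float,
                   score_old: float, ghz_old: float) -> float:
    """Percent change in score per GHz (a rough IPC proxy) from old to new chip."""
    per_ghz_new = score_new / ghz_new
    per_ghz_old = score_old / ghz_old
    return (per_ghz_new / per_ghz_old - 1.0) * 100.0


# Placeholder scores: same clock, so the whole 15% gain is per-clock
print(round(per_clock_gain(115.0, 4.0, 100.0, 4.0), 1))  # 15.0
# Placeholder scores: 25% higher score at 25% higher clock, no per-clock gain
print(per_clock_gain(125.0, 5.0, 100.0, 4.0))  # 0.0
```

This is the distinction the thread argues over: a newer chip can post a higher raw score purely from clock speed while showing little or no gain per clock.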

But but 14nm?

Yes, on clock-for-clock performance. It’s about 15-20% better before Sandy Bridge’s higher clock speeds even come into play.

Also, that is the worst-case scenario of all existing comparisons. AnandTech, ExtremeTech, Tom’s Hardware and many others get much better results than that.

Lol… look at the difference between a 2005 CPU like the P4 HT and a 2009 CPU like the i7 920: the difference is huge. Now compare a 2011 2600K and a 2015 Skylake, and there’s barely any reason to upgrade. My computer is over 3 years old, running a 3570K at 4.5 GHz, and I can’t see myself replacing it until the successor to the Skylake architecture. Hell, my mother is still running a Q6600 from 2007 with 8 GB of DDR2, and the only thing she could really benefit from is an SSD.

LucidLogix has been doing it for years, what are you jabbering about?

Agreed, the title is seriously misleading. Still curious to see gaming comparisons though.

That has to be programmed by game developers. It is not an automatic benefit. Given that most developers aim for console parity, the majority of games won’t ever take advantage of the iGPU in a multi-GPU setup.

Yeah, let’s be honest here. How many developers will bother spending time writing extra code to take advantage of the iGPU just for PC gamers? None……..

How many developers will bother spending time writing extra code to take advantage of the iGPU just for PC gamers? None…….. You are talking about a moot point.

For gaming, it is much better to get a better graphics card instead. A faster CPU will only give a higher maximum fps at lower image quality, but will not help with low fps at high resolution, which is where it matters. The benefits of newer Intel processors are clear only in non-gaming tasks.

Not remotely true. If you play anything like Medieval: Total War or an MMO, where the game is very CPU-intensive (usually on one thread, but that’s a different rant), then you will see the benefit of higher CPU performance. Beyond this, the CPU has tons of number-crunching power these days, enough to assist in rendering a frame or to calculate the physics on its own. See the math immediately following.

AVX 256 lets a single instruction operate on eight 32-bit ints or floats at once, and with FMA it can fuse a multiply and an add on eight floats in one instruction, much like a GPU stream processor does. Just taking the 4790K as an example:

4 cores * 8 data/(core*op) * 2 op/cycle (FMA) * 4.2 * 10^9 cycles/second = 268.8 GFLOPS. That may seem like peanuts, but the truth is that with compute and graphics kernels fighting over the GPU, you’re actually losing efficiency. Of course, games have to be better programmed, but the point remains. The difference between Sandy Bridge and Haswell is 100% in theory, and up to 85% in reality if you have code that leverages AVX 256 and RAM with the bandwidth to handle streaming data in that manner.
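The peak-throughput arithmetic above can be checked in a few lines. This sketch reproduces the commenter’s figure, which counts one FMA unit per core. Haswell cores actually have two 256-bit FMA units, so the commonly quoted single-precision peak is twice that number. `peak_gflops` is a hypothetical helper, and the 4.2 GHz clock is the commenter’s assumption.

```python
def peak_gflops(cores: int, lanes: int, flops_per_lane: int,
                fma_units: int, ghz: float) -> float:
    """Theoretical peak GFLOPS.

    cores * SIMD lanes * FLOPs per lane per FMA (multiply + add = 2)
    * FMA units per core * clock in GHz.
    """
    return cores * lanes * flops_per_lane * fma_units * ghz


# The comment's figure: 4 cores, eight 32-bit lanes (256-bit AVX),
# FMA counted as 2 FLOPs, one FMA unit per core, 4.2 GHz
print(round(peak_gflops(4, 8, 2, 1, 4.2), 1))  # 268.8
# Same chip, counting both of Haswell's FMA units per core
print(round(peak_gflops(4, 8, 2, 2, 4.2), 1))  # 537.6
```

Sandy Bridge has no FMA, but it can issue one 256-bit add and one 256-bit multiply per cycle (16 single-precision FLOPs per core), versus 32 with Haswell’s two FMA units. That is where the “100% in theory” figure comes from. Reaching either peak requires code that actually keeps the SIMD units fed.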

And don’t forget that AI and many other things run on the CPU. As those become more advanced, the depth of the neural networks will increase, and so will the CPU load. It doesn’t pay to be ignorant. Do yourself a favor and stop talking about things you don’t understand.

And even now there are still CPU bottlenecks even on a 4.5GHz 5960X in some games at 1440p and 4K. JayzTwoCents even shows this relative to the performance his colleagues get with the same setup but a higher clocked chip.

Well, you are talking about performance records and competitive gaming, not the general situation. Of course performance will increase if a person upgrades a 3-7-year-old CPU to a Skylake i5 or i7. But by the same token, if a person has a CPU that old, it is unlikely that they need the highest performance possible. There are millions of PCs with great Intel quad-core chips running at 3GHz+ that need only a minor memory upgrade and a proper new graphics card to stay OK for gaming. The general situation is that CPUs have stopped advancing, overclocking is becoming exclusive to a few models, CPU requirements for most games are within reach of old overclocked quad-cores, and nothing magical is happening in the CPU field. And consoles have weak CPUs, so ports of their games do not need great CPUs on a desktop. So my point is: on the upgrade path, most gamers need a CPU upgrade last, after making sure the PC has enough RAM, a current GPU and an SSD.

Moore’s Law has come to an end. Experts have known this since 2007-2008. There is only so much transistor count and IPC you can add over 50 years. This is it, pals: get ready for the raw performance deceleration and stop demanding ever better performance from Intel. They WON’T deliver, because small single-CPU desktops are close to their physical limits.

While quantum computing is still far off, our only hope for improvement in raw calculation is parallelism, which will make things much more expensive than we are accustomed to.

I have a 2500K Sandy Bridge and haven’t been impressed enough by the 15% improvement from Ivy Bridge, or the 10% from Haswell, to upgrade from my Sandy Bridge. I wasn’t expecting anything better from Skylake either. Because of the Moore’s Law deceleration I mentioned before, I was expecting 5% at best. And in fact, two generations later (don’t forget about Broadwell), the improvements have barely reached 8% (29%? really, KitGuru?), which would add up to less than 40% in total over SB. Most people saying that this processor is not worth the upgrade are probably short-sighted, probably have a Haswell, and are only looking at the performance side of things.

However, the added technologies in the platform may be worth the upgrade for the first time: DDR4, USB 3.1 with the Type-C connector, PCIe 3.0, SATA Express and native NVMe support. I have none of those on my Z68 motherboard. The newer, more refined fan controls introduced with Haswell are also a plus, since I’ve been wanting to build a semi-passive PC for a while and the Qsmart fan control on my motherboard does not let the fan switch itself off automatically.

On the other hand, the gradual disappearance of DDR3 from the market would also present a problem in the next couple of years: if my computer developed memory problems and needed a replacement motherboard or DDR3, it would only create headaches. A newer platform would avoid the problems I experienced with my old but excellent Q6600. Performance-wise, that processor could still be fairly responsive as a mainstream computer today. I tried to keep using it as a download machine and server for as long as possible, but bad contacts on the motherboard and failed DDR2 modules made sustaining it impractical, as the price of low-quality replacement DDR2 (8GB) would buy the same amount of DDR3 PLUS a newer motherboard. It’s a shame that fast processors are starting to have longer lives because their performance lasts, yet are limited by their platforms being forced into obsolescence. I guess this is what capitalism is all about. If they can’t improve IPC anymore, they will still force products to be replaced after a couple of years by making other things fail or become obsolete.
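The Sandy Bridge estimate above compounds per-generation gains rather than adding them. Below is a minimal sketch using the percentages quoted in that comment: 15% for Ivy Bridge, 10% for Haswell, and 8% for Broadwell and Skylake combined. `cumulative_gain` is a hypothetical helper, and the inputs are the commenter’s figures, not measurements.

```python
from math import prod


def cumulative_gain(step_percents: list[float]) -> float:
    """Total percent gain when per-generation gains compound multiplicatively."""
    return (prod(1.0 + p / 100.0 for p in step_percents) - 1.0) * 100.0


# The comment's per-generation figures over Sandy Bridge
print(round(cumulative_gain([15, 10, 8]), 1))  # 36.6
```

Multiplying 1.15 × 1.10 × 1.08 gives about 1.366, so the result is consistent with the “less than 40% in total” estimate. Simply adding the percentages would overstate nothing here (33%) but would understate the compounded total.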

Moore’s Law is coming to an end. Experts have been predicting this since 2007. This shouldn’t be new to any of you.


However, the shift to DDR4 and the newer technologies ARE worth the upgrade IMHO, especially because I have a SB with an already aging motherboard, even though its performance is still excellent.

Because AnandTech’s and Tom’s Hardware’s tests are not reliable: they are heavily inflated by hardware companies that provide parts and even pay them to run tests. This includes Intel.

Unity. Unreal. CryEngine. Take your pick. Obviously they’ll leverage that feature or watch their product die.

Engine developers will most definitely take advantage of the feature, and seeing as DX12 and Windows 10 are coming to Xbox, it’ll be the same engine code for PC and Xbox. You seem to think all developers write their own 3D engine; I don’t know how you can play games and believe that. It’s ridiculous.

For all you idiots who think unlinked GPU mode in DirectX 12 won’t matter: it’s already in Unreal Engine 4, which powers a huge number of AAA titles. So yeah, the iGPU metrics actually do matter somewhat. http://wccftech.com/directx-12-multiadapter-technology-discrete-integrated-gpus-work-coherently-demo-shows-big-performance-gains/

People here are talking about whether upgrading is worthwhile for systems with Intel i5/i7 CPUs that are 6 years old or younger, and in reality it is not worth the money. The clock-for-clock performance improvement of newer Intel CPUs is negligible, as I mentioned earlier.

Of course you will gain some performance and better energy efficiency with a newer Intel CPU, but that doesn’t justify the money needed to upgrade. All a gamer needs is to upgrade their GPU and slightly overclock their current Core i5/i7 CPU; that does the trick. Upgrading to an entirely new Intel CPU platform for a small boost is not worth the money.

Denial doesn’t make you right.

Deym. Need an upgrade!

Hey, you forget that from 2005 to 2009 AMD was putting out some decent CPUs. Once Bulldozer came out, Intel put on cruise control and started laughing all the way to the bank.

No, benchmarks and testing make me right.

Except for games which are CPU-choked, it’s better to just get the new platform. It’s situation-dependent, not one size fits all.

Tom’s, yes; AnandTech, no. AnandTech’s numbers always match independent reviewers’ numbers dead on. That’s why AnandTech has credibility in communities like overclock.net. It has a reputation for telling the exact truth. It doesn’t matter that Anand used to work there and now works at Intel. It has been objective through and through.

CPUs never stopped advancing. Software did. If Microsoft didn’t keep Windows XP and Windows 7 alive for so long all the way back to the Pentium III in support, software studios wouldn’t be forcing so much legacy support and not allowing multiple code paths for newer instructions which are far more powerful.

SIMD is extremely magical, if you have the compiler that optimizes non-trivial code to it and you have a chip which supports it.

14nm doesn’t do anything :/

So, do you play games on the iGPU…? Because I can’t see any improvement in the CPU part over Haswell/Sandy Bridge….. only in that pathetic iGPU section….

People with a 4770K/4790K or even a 3770K don’t need to upgrade IMO, because I can’t see any noticeable improvement in the CPU section TBH, only in that pathetic, useless iGPU :/ :/

Isolated incidents of small performance gains are not a reason to upgrade.

Sorry buddy you again failed with me. I proved with benchmarks. 😉

Whether software has advanced or not, the performance gain with newer Intel CPUs is almost zero.

Well, they say it’s 30% faster in multi-threaded apps. I’ll be using it for rendering videos and some Photoshop projects.

And that’s Intel’s fault when we’re at theoretical limits for legacy instructions? I think not.

Except you didn’t provide any.

Isolated incidents? Try every MMO on the planet.

Then why not add more freaking cores? The problem here is that Intel tries to dump shit CPUs so people upgrade, and when AMD gets new tech, oops, a new Intel processor comes out that is twice as good as this one, and they will say… we forgot we had that in the closet, but here you go.

If you have a 4770/4790/4670/4690, just add a good GPU; it will do much better 🙂

Multiadapter is just one extra feature. There are many improvements in DX12. Some are apparently so low-level that even DX11 games will benefit. Benchmarks already show games running better on Win10.

Whether it’s Intel’s fault or, as you claim, software not advancing, the performance gain with newer Intel CPUs is almost zero. Just denying facts won’t turn falsity into truth.

Yes, try every “MMO” on the planet and compare clock-for-clock performance, and you’ll realize it’s close to worthless to upgrade to newer Intel CPUs.

Cry fatty cry….


Better power consumption and less heat under the same conditions.

Does it improve performance? I mean, by at least 20% over Haswell?

On the performance side, close to 5%, but you said 14nm means nothing, so I replied to that.

And anyone reading this thread will say you provided nothing of substance and only items of speculation.

No, you realize it’s very worth it.

But that performance gain, or lack thereof, depends on the software and not on Intel’s hardware efforts, which to this day still give IBM and Oracle headaches, and they’re the only supercomputing CPU makers left. Intel is pushing hard on both software and hardware. If the world doesn’t evolve, Intel can do nothing about it, and neither can AMD or Nvidia.


Whether it’s Intel’s fault or, as you claim, software not advancing, the performance gain with newer Intel CPUs is almost zero.

Bringing up supercomputers is simply your attempt to divert from the topic, which proves you are unable to disprove my point.

You already know it’s not worth it.

No. The 6700K has a 7-watt higher TDP.

I said under the same conditions, doing the same tasks, it will run much cooler and much more efficiently.

What… Hydra? It never actually worked. I’m talking about people actually getting reliable use out of what they have paid for, not wishing that it might help if I cross my fingers and am really, really lucky.

I thought perf was gonna be like a 6-core i7, but nope, looks like I’ll be hanging on to my 2600 for a while…

At this rate it might take 10nm to finally double the performance of 32nm (LGA 115x i7).
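The “double the performance” question is compounding again. At a constant per-generation gain g, performance doubles after the smallest n with (1 + g)^n ≥ 2, that is n = ⌈ln 2 / ln(1 + g)⌉. Below is a minimal sketch with a hypothetical `generations_to_double` helper. It assumes a constant gain every generation, which real CPU generations do not deliver.

```python
import math


def generations_to_double(gain_percent: float) -> int:
    """Smallest whole number of generations n with (1 + g)^n >= 2, for constant gain g."""
    g = gain_percent / 100.0
    return math.ceil(math.log(2) / math.log1p(g))


for pct in (5, 10, 20):
    print(pct, generations_to_double(pct))  # prints 5 15, then 10 8, then 20 4
```

At the 5-10% per generation discussed in this thread, doubling takes roughly 8 to 15 generations, which is why the comment expects it to take several process shrinks.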

Yeah, Moore’s Law is defs falling apart.

Not even sure it’s a worthy replacement even for the ancient Core i7 920. When overclocked, that chip is still a formidable competitor. I mean, they even have the same cache sizes, core count and thread count. Basically, what you get is 14nm and a few more instructions with a higher clock.

One would expect that latest Intel high end CPU would be at least hexa-core with HT. Not this disappointment…

I am not impressed at all, and your title is misleading. Fuck you, KitGuru.

Well, it doesn’t matter whether it’s Skylake or Broadwell, 4 cores are obsolete. Only 6+ cores for me, thank you.