Today we take a look at AMD's second graphics card based on the 28nm ‘Fiji’ silicon. The Sapphire Fury card is the first to hit our labs, and it features a new version of their highly acclaimed ‘Tri-X’ cooling solution. To cut the retail price relative to Fury X, the new (non-X) Fury model ditches the all-in-one liquid cooler and has 56 of the 64 compute units on the silicon enabled, for a total of 3,584 stream processors. At around £445 inc VAT it is priced almost identically to rival GTX980 solutions, but can it compete?

There is no doubt that Fury is an important SKU for AMD, as it is going to be priced around £80+ less than the flagship Fury X, consequently targeting a broader enthusiast audience interested in buying a modified GTX980.

The Fury X card certainly has potential (review HERE), but AMD really need to work on dropping UK prices a little: £530 to £600 (OCUK prices at time of publication) is going to be a difficult sell for them right now. Some good news for potential Fury X customers: from what we have been told, Revision 2 of this card is already hitting retail, with the reported pump and coil whine issues resolved. The Fury X walked away with our ‘Worth Considering’ award last week, and it will be interesting to see how Sapphire's Tri-X air cooler deals with the Fiji architecture.

If you want a brief recap of Fiji, the new architecture and HBM memory, visit this page.

| GPU | R9 390X | R9 290X | R9 390 | R9 290 | R9 380 | R9 285 | Fury X | Fury |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Launch | June 2015 | Oct 2013 | June 2015 | Nov 2013 | June 2015 | Sep 2014 | June 2015 | June 2015 |
| DX Support | 12 | 12 | 12 | 12 | 12 | 12 | 12 | 12 |
| Process (nm) | 28 | 28 | 28 | 28 | 28 | 28 | 28 | 28 |
| Stream Processors | 2816 | 2816 | 2560 | 2560 | 1792 | 1792 | 4096 | 3584 |
| Texture Units | 176 | 176 | 160 | 160 | 112 | 112 | 256 | 224 |
| ROPs | 64 | 64 | 64 | 64 | 32 | 32 | 64 | 64 |
| Boost GPU Clock (MHz) | 1050 | 1000 | 1000 | 947 | 970 | 918 | 1050 | 1000 |
| Memory Clock (MHz) | 6000 | 5000 | 6000 | 5000 | 5700 | 5500 | 500 | 500 |
| Memory Bus (bits) | 512 | 512 | 512 | 512 | 256 | 256 | 4096 | 4096 |
| Max Bandwidth (GB/s) | 384 | 320 | 384 | 320 | 182.4 | 176 | 512 | 512 |
| Memory Size (MB) | 8192 | 4096 | 8192 | 4096 | 4096 | 2048 | 4096 | 4096 |
| Transistors (million) | 6200 | 6200 | 6200 | 6200 | 5000 | 5000 | 8900 | 8900 |
| TDP (watts) | 275 | 290 | 275 | 275 | 190 | 190 | 275 | 275 |
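As a rough check on the Max Bandwidth row, peak bandwidth is the bus width in bytes multiplied by the per-pin data rate. This is a minimal sketch, assuming the GDDR5 ‘Memory Clock’ figures in the table are already effective data rates (MT/s) and that HBM transfers on both clock edges, so 500MHz gives 1,000 MT/s per pin. The function name is illustrative only.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_mts: float) -> float:
    """Peak bandwidth in GB/s: bytes moved per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mts / 1000  # MB/s -> GB/s (decimal units)


# (bus width in bits, effective data rate in MT/s), taken from the table above.
cards = {
    "R9 390X": (512, 6000),      # GDDR5 figure is already an effective rate
    "R9 290X": (512, 5000),
    "R9 380": (256, 5700),
    "R9 285": (256, 5500),
    "Fury X": (4096, 500 * 2),   # HBM: 500MHz clock, double data rate
    "Fury": (4096, 500 * 2),
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {peak_bandwidth_gbs(bus, rate):.1f} GB/s")
```

The very wide 4,096-bit HBM interface is what lets Fiji reach 512 GB/s despite a memory clock far lower than any of the GDDR5 cards.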

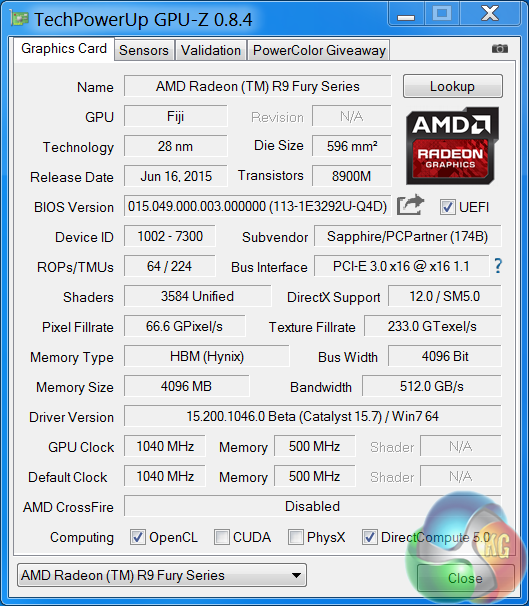

Sapphire are releasing two versions of the Tri-X Radeon R9 Fury: a standard edition with the core clock at 1,000MHz and a limited edition OC model with the BIOS set at 1,040MHz. We look at the OC model in this review.

The R9 Fury GPU is built on the 28nm process and is equipped with 64 ROPs, 224 texture units and 3,584 shaders. The more expensive Fury X has 64 ROPs, 256 texture units and 4,096 shaders. Both have 4GB of HBM (Hynix) memory across a super-wide 4,096-bit memory interface, and both are rated at 512 GB/s of bandwidth.
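The shader and texture counts follow directly from the number of enabled compute units (56 of 64 on Fury, per the introduction). This sketch assumes the standard GCN layout of 64 stream processors (four SIMD16 units) and 4 texture units per compute unit; ROPs sit outside the compute units, so both cards keep the 64 listed above. `fiji_config` is a hypothetical helper for illustration, not an AMD tool.

```python
SHADERS_PER_CU = 64        # GCN: four SIMD16 vector units per compute unit
TEXTURE_UNITS_PER_CU = 4   # GCN: four texture filter units per compute unit


def fiji_config(enabled_cus: int) -> dict:
    """Derive shader and texture counts from the enabled compute units."""
    return {
        "compute_units": enabled_cus,
        "stream_processors": enabled_cus * SHADERS_PER_CU,
        "texture_units": enabled_cus * TEXTURE_UNITS_PER_CU,
        "rops": 64,  # render back-ends are not cut along with the CUs
    }


fury_x = fiji_config(64)
fury = fiji_config(56)
print(fury_x)
print(fury)
share = fury["stream_processors"] / fury_x["stream_processors"]
print(f"Fury keeps {share:.1%} of Fury X's shaders")
```

Disabling 8 compute units therefore removes 512 shaders and 32 texture units while leaving the ROP count and the HBM configuration untouched.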

I have spent the last couple of weeks testing all our Nvidia and AMD hardware with Catalyst 15.6 beta and Forceware 353.30 drivers. A couple of days before this Fury launch, AMD emailed me to say that their latest Catalyst 15.7 beta driver was available.

As a result, I haven't had time to retest all AMD hardware with this driver; however, I did make time before launch to test Fury and Fury X with the Catalyst 15.7 beta.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

You guys should do the test with the new drivers. But it's a great review. Thanks

“but based on research and what I have read on our own Facebook page, this lack of HDMI 2.0 connectivity is a real bugbear for gamers.”

Let’s be honest – what percentage of gamers are actually using 4k televisions that only have HDMI 2.0 inputs? Is it even 1%?

What percentage of the gamers making noise about Fury’s lack of HDMI 2.0 are actually using 4k televisions that only have HDMI 2.0 inputs? More to the point, what percentage of the people complaining about it aren’t ever going to own a 4k television that only has HDMI 2.0 inputs, and are just complaining because they don’t like AMD and want something to hang their complaints on?

The number of people using 4k HDMI 2.0-only televisions for their monitors who are also building their own HTPC and will be disappointed by Fury’s lack of HDMI 2.0 is so small that I’m pretty sure AMD doesn’t care – they have a much bigger market to focus on. By the time 4k HDMI 2.0-only monitors become mainstream enough that it’s necessary (assuming that ever happens), Fury will be LONG obsolete.

Besides, HDMI is on its way out anyway.

Some DP to HDMI 2.0 adapters are going to hit the market soon, so no problem at all.

And if you buy a $600 card, I think you can afford a $10 adapter 😉

I absolutely agree. But the people complaining about Fury’s lack of HDMI 2.0 will NEVER hear that.

It’s about future-proofing, and also it’s kinda weird to leave it out.

Very nice card, but the Sapphire wording is upside down!

Also, more and more people will be using a TV, as 4K gaming is pointless on a 28″ screen; it’s suited to a big-screen experience.

“Future-proofing” isn’t an issue, because by the time a sizable enough proportion of gamers are using 4k HDMI 2.0-only televisions for their gaming that it becomes necessary, Fury will be obsolete. I don’t have the numbers handy, but I’m willing to bet a nice shiny quarter that today, and a year from now, the number of people gaming on 4k HDMI 2.0-only televisions is, and will still be, less than 1% of gamers.

I’d also be willing to bet that of the people making noise about the lack of HDMI 2.0 on Fury, 85% of them (or more) do not own a 4k HDMI 2.0 television and will not own one in the next year or two – they’re complaining because they want to complain.

There are 4K TVs with DisplayPort, so if we're talking about the future, you can buy a TV with a DP 1.3 port.

But the big problem with gaming on a TV is ghosting; many people see it even on fast gaming LCDs, and on a TV panel it will be way worse.

Not my problem… got a 28″ 1440p… I don’t really need a 4K TV, I don’t even use the one in my house…

It's probably a fairly small percentage, although we have seen quite a few people complain about a lack of HDMI 2.0 support on our Facebook page. I own a large Sony 4K TV myself and I can't really use any AMD cards to game on it, because it's limited to 30Hz. Most of the 4K TVs in the UK seem to be primarily HDMI-only, although I've seen some Panasonic models with a single DisplayPort, though I don't think some of those models have received Netflix 4K certification yet.
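The 30Hz limit mentioned above comes down to link bandwidth. A rough sketch, assuming the standard CTA-861 4K timing (4400×2250 total pixels including blanking), 8-bit RGB, the HDMI 1.4 and HDMI 2.0 maximum TMDS rates of 340MHz and 600MHz, and that the card's DisplayPort outputs run at DP 1.2 (HBR2, four lanes) rates, which is what a DP to HDMI 2.0 adapter would draw from. It ignores other timings and 4:2:0 chroma subsampling, which some TVs also accept.

```python
# CTA-861 timing for 3840x2160: active pixels plus blanking intervals.
H_TOTAL, V_TOTAL = 4400, 2250

# Maximum TMDS character rate (MHz) allowed by each HDMI version.
MAX_TMDS_MHZ = {"HDMI 1.4": 340, "HDMI 2.0": 600}

# DisplayPort 1.2: four HBR2 lanes at 5.4 Gbps each, minus 8b/10b coding overhead.
DP12_PAYLOAD_GBPS = 4 * 5.4 * 8 / 10  # about 17.28 Gbps


def pixel_clock_mhz(refresh_hz: int) -> float:
    """Pixel clock needed to drive 4K at the given refresh rate."""
    return H_TOTAL * V_TOTAL * refresh_hz / 1e6


def fits_hdmi(version: str, refresh_hz: int) -> bool:
    # For 8-bit RGB the TMDS character rate equals the pixel clock.
    return pixel_clock_mhz(refresh_hz) <= MAX_TMDS_MHZ[version]


def fits_dp12(refresh_hz: int) -> bool:
    # 24 bits per pixel of video payload (8-bit RGB), converted to Gbps.
    return pixel_clock_mhz(refresh_hz) * 24 / 1000 <= DP12_PAYLOAD_GBPS


for hz in (30, 60):
    results = {v: fits_hdmi(v, hz) for v in MAX_TMDS_MHZ}
    results["DP 1.2"] = fits_dp12(hz)
    print(f"4K @ {hz}Hz, {pixel_clock_mhz(hz):.0f} MHz pixel clock: {results}")
```

Under these assumptions 4K60 needs a 594MHz pixel clock, well beyond HDMI 1.4's 340MHz ceiling, while the roughly 14.3 Gbps payload still fits within DP 1.2.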

Sure, it's a small percentage, but I fail to see why AMD can't add an HDMI 2.0 port. Most devices you connect to a television have HDMI connectors (consoles, Sky and Virgin Media boxes, Blu-ray players etc.), so it seems unlikely HDMI will ‘die overnight’ and be replaced by DisplayPort. Nvidia have HDMI 2.0 support, so it would be good for AMD to add it.

It’ll look the right way once it's plugged into your motherboard facing outwards. 🙂

Why didn’t they test it, benchmark it with Project CARS… oh wait…

We did use the 15.7 beta drivers for Fury and Fury X. We didn't have time to test all AMD hardware again, as the 15.7 beta was only given to us a few days ago.

Ah good point, I’m using a bitfenix prodigy, so wouldn’t work in such a case!

15.7 beta? Don’t they have a WHQL version of it already?

Why do you increase the power so much but not the core voltage?

Correct me if I'm wrong, but I thought the steps were:

1. Take the core as high as you can at default settings.

2. Increase the power limit and see if there is more from the core.

3. Well, you're going to want to make sure you have a good handle on temps and stability at this point.

4. Increase core voltage and clock; you may need another power increase. So yeah, it gets a little more advanced, and you need an understanding of what tells you whether you're limited by voltage, by power, or just by the limit of the chip.
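The procedure in this comment can be sketched as a stepwise search. This is a hypothetical illustration only: `is_stable` stands in for a real stress test plus the temperature and stability check of step 3, the power-limit and voltage steps are arbitrary, and `toy_chip` is a made-up model with invented limits, not measurements from this card.

```python
from typing import Callable, Tuple

# Hypothetical stress test: True if the card runs stable (and within temperature
# limits) at core_mhz with the given power limit (%) and voltage offset (mV).
StabilityTest = Callable[[int, int, int], bool]


def max_stable_clock(is_stable: StabilityTest, start_mhz: int,
                     power_pct: int, mv: int, step_mhz: int = 10) -> int:
    """Raise the core clock in fixed steps until the next step fails."""
    clock = start_mhz  # assumed to be stable at these settings already
    while is_stable(clock + step_mhz, power_pct, mv):
        clock += step_mhz
    return clock


def tune(is_stable: StabilityTest, base_mhz: int,
         power_limits=(0, 25, 50), voltage_offsets=(0, 25, 50),
         step_mhz: int = 10) -> Tuple[int, int, int]:
    """Return (core_mhz, power_pct, mv) following the commenter's order of steps."""
    best = (base_mhz, 0, 0)
    # Steps 1-2: stock voltage; push the core at the default power limit,
    # then widen the power limit and see whether the core goes further.
    for power in power_limits:
        clock = max_stable_clock(is_stable, best[0], power, 0, step_mhz)
        if clock > best[0]:
            best = (clock, power, 0)
    # Step 4: add core voltage (at the highest power limit tried) and push again.
    top_power = power_limits[-1]
    for mv in voltage_offsets:
        if mv == 0:
            continue  # stock voltage was already covered above
        clock = max_stable_clock(is_stable, best[0], top_power, mv, step_mhz)
        if clock > best[0]:
            best = (clock, top_power, mv)
    return best


def toy_chip(core_mhz: int, power_pct: int, mv: int) -> bool:
    """Made-up silicon: extra power helps up to +25%, voltage helps a little,
    and a hard ceiling applies whatever the settings."""
    limit = min(1100, 1070 + min(power_pct, 25) + mv // 2)
    return core_mhz <= limit


print(tune(toy_chip, base_mhz=1040))
```

With the toy model, the search stalls at the chip's ceiling once extra voltage is applied, which is the situation where no combination of power and voltage settings yields more clock.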

Unless you’re referring to a different driver, the 15.7’s aren’t beta, they’re WHQL. I think that’s where the confusion is coming from.

The suggestion I’m making is that a large proportion of the quite-a-few-people complaining about a lack of HDMI 2.0 support on your Facebook page are people who wouldn’t ever buy an AMD card even if it DID have HDMI 2.0 support. They just want another reason to complain about AMD.

Everybody deciding on a purchase should consider image quality as a factor.

There is hard evidence in the following link showing how inferior the image of the Titan X is compared to the Fury X.

The somewhat blurry image of the Titan X is missing some details, like the smoke from the fire.

If the Titan X is unable to show the full detail, one can guess what other Nvidia cards are lacking.

I hope such issues will be investigated fully by reputable hardware sites, for the sake of fair comparison and to help consumers with their investments.

AMD is going to be selling DP to HDMI 2.0 adapters.

The driver we used was the one AMD sent us directly three days before this review was published, and as the GPU-Z screenshot shows, it says 15.7 beta. The WHQL driver looks identical and was released around the same time this review was published (maybe a little earlier, but certainly not in time to test both Fury cards with it).

We tried everything; the screenshot just shows one variable. Once our card hit 1,100MHz there was nothing left, regardless of settings.
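For context, the clocks quoted in this review translate into the following headroom. A minimal sketch: the 1,000MHz, 1,040MHz and 1,100MHz figures come from the review and the reply above, the constant names are illustrative, and the percentages are plain clock ratios, not performance gains.

```python
def overclock_pct(new_mhz: float, base_mhz: float) -> float:
    """Percentage increase of new_mhz over base_mhz."""
    return (new_mhz / base_mhz - 1) * 100


REFERENCE_MHZ = 1000    # standard edition core clock
SAPPHIRE_OC_MHZ = 1040  # limited edition OC BIOS
MANUAL_OC_MHZ = 1100    # highest clock reached in testing

print(f"Factory OC over reference: {overclock_pct(SAPPHIRE_OC_MHZ, REFERENCE_MHZ):.1f}%")
print(f"Manual OC over factory OC: {overclock_pct(MANUAL_OC_MHZ, SAPPHIRE_OC_MHZ):.1f}%")
print(f"Manual OC over reference: {overclock_pct(MANUAL_OC_MHZ, REFERENCE_MHZ):.1f}%")
```

In other words, the manual overclock added under 6% on top of the OC model's factory clock.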

Yes, they do now, but three days before the review was published, that was the driver AMD sent us directly. It's the same driver anyway.

I’d like to see some Fury X and Fury CrossFire tests from you guys. I know there are benchmarks out there, but I want to see you guys do it.

Is there likely to be a re-review of the top graphics cards upon the DX12 release? It’d be interesting to see how the new hardware fares with the new software.

Why are most people always complaining about the lack of HDMI 2.0 on the Fury (X)? I mean, there are tons of DisplayPort to HDMI cables on the market nowadays… It's not like people won't buy this card because of that.

Because they need a reason to complain about (and bash) an AMD product.

99% of the people complaining about HDMI 2.0 would never buy an AMD card anyway but they’ll spend hours of their day finding a reason to complain about it, and then complaining about it.

Win10 preview already has DX12 running on it and it is a free download. You can test it yourself.

more likely Nvidia fans

I just bought a Sapphire R9 Fury X 4GB HBM AIO liquid-cooled GPU for $389.99 @ newegg.com on Wed. Sept. 14th, for the Sapphire AMD reference-design board & CM AIO H2O cooler, and I couldn't give two shits about having HDMI 2.0, as I only have either a 24″ BenQ LED monitor with only DVI-D & VGA, or a 1080p Sanyo 42″ HDTV with HDMI 1.1/1.2 or whatever; it's a 5-year-old HDTV, 1080p max. But using VSR (AMD’s Virtual Super Resolution) in the Display tab of Radeon Settings, I can set my games to 3200×1800 resolution, almost 4K, might as well be considered 4K… and it looks no different to me than my friend's PC with an Nvidia GTX 1070 6GB GPU with HDMI 2.0 @ 4K 3200×1800….

YUP, I call them nShitia GPUs, cuz Nvidia cheats in everything… I used to be an Nvidia fanatic, till they destroyed 3dfx.. fuckin’ nshita bastards..

AMD needs to dominate the GPU & CPU MARKETS….

so COMPETITION can RISE / and PRICES DROP / Drastically, on both Nvidia and Intel hardware… example…

AM4 Zen 8c/16-thread CPU, theoretically at $299-$399? Intel equivalent $600-$2000..

(ME) Um… no thanks Intel, I'm with AMD on this one.. sorry, you're too expensive, is there still 24k gold in your CPUs, Intel?… (INTEL) NO? (ME) DAMN…

I wish assholes would just stop dissing AMD; one day they will dominate, and AMD haters out there will cry?? over the price they paid for their cards…….

damn
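To put the VSR comparison in numbers: VSR renders at the higher resolution and then downsamples to the display's native resolution, so a 1080p TV still shows a 1080p image. The sketch below simply compares pixel counts for the resolutions mentioned in the comment; it says nothing about perceived image quality, and the variable names are illustrative.

```python
def pixels(width: int, height: int) -> int:
    """Total pixel count for a resolution."""
    return width * height


uhd = pixels(3840, 2160)     # 4K UHD
vsr = pixels(3200, 1800)     # VSR resolution from the comment
native = pixels(1920, 1080)  # the TV's native 1080p

print(f"3200x1800: {vsr:,} px, {vsr / uhd:.0%} of 4K UHD ({uhd:,} px)")
print(f"Render load vs 1080p: {vsr / native:.2f}x (4K UHD: {uhd / native:.0f}x)")
```

So 3200×1800 carries roughly 69% of the pixels of 4K UHD, and the GPU renders about 2.78 times the pixels of native 1080p before downsampling.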

https://www.techpowerup.com/gpuz/details/844m9

I must admit I went with a GTX970, intending to move to a better card still supported by my CPU once I gather a little more money. But what I noticed almost immediately is that although its shaders are faster, it gives me poor image and lighting quality compared to my old HD6950 2GB, which is remarkable considering my old card is a lot older than this new GTX970. For some reason the market makes AMD look weak in theory, while in practice AMD cards outshine the market. I got lured in by commercials and Nvidia lovers claiming Nvidia was a lot better… but now I get the feeling that those people work for Nvidia and get money for luring consumers into their trap.