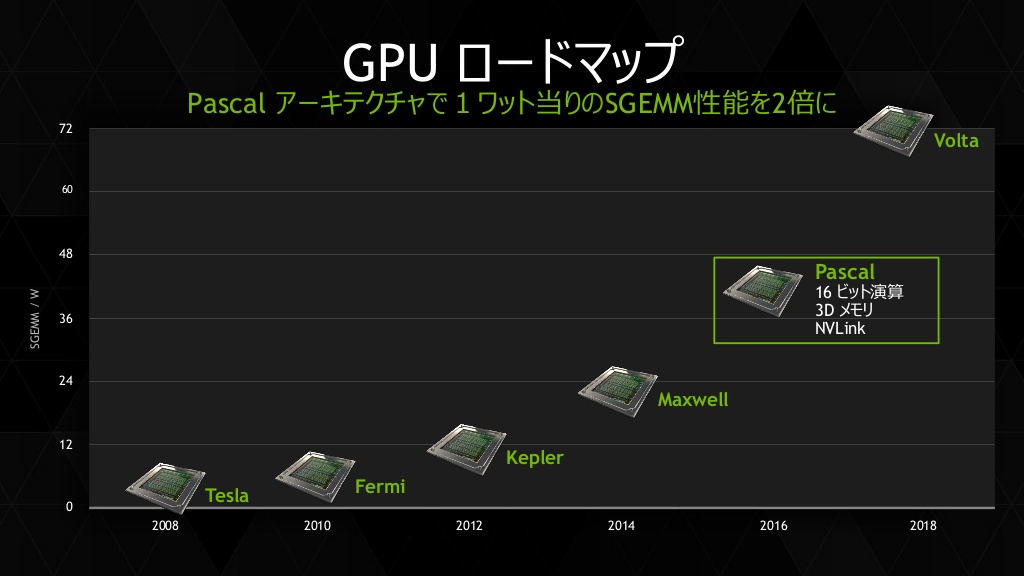

Nvidia Corp. has slightly changed its roadmap for GPU architectures. It appears that its next-generation GPUs, code-named “Pascal”, are now due in 2016, whereas their successors will only be released in 2018.

According to a new roadmap that Nvidia showcased at a tech conference in Japan, the company will release its code-named “Pascal” GPUs in 2016 and will follow up with “Volta” graphics processors in 2018. The “Pascal” chips will be made using 16nm FinFET process technology, reports WccfTech. Previously, “Volta” was expected in 2017.

Not a lot is known about the first “Pascal” GPU. Nvidia reportedly taped out its GP100 graphics processor back in June. Given the timing of the tape-out, it is highly likely that Nvidia is using TSMC’s advanced 16nm FinFET+ (CLN16FF+) manufacturing technology. Nvidia has also changed its approach to rolling out new architectures: instead of starting with small GPUs and introducing the biggest processors several quarters later, it will begin the 16nm “Pascal” roll-out with the largest chip in the family.

Nvidia did not comment on the news story.


KitGuru Says: It looks like Nvidia is pulling in “Pascal” but slightly delaying “Volta”. The reason is simple: 10nm process technology, which TSMC will only be able to offer Nvidia in 2018.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


“As it appear, its next-gen GPUs code-named “Pascal” are now due in 2016, whereas their successors will be released only in 2018.”

That has always been the case for anyone paying attention: WCCFTech is not a reliable or legitimate source.

Pascal = 2016 <– This was known at least as of March 25, 2014:

http://blogs.nvidia.com/blog/2014/03/25/gpu-roadmap-pascal/

Volta = 2018 <– This was known at least as of March 17, 2015:

http://www.extremetech.com/gaming/201417-nvidias-2016-roadmap-shows-huge-performance-gains-from-upcoming-pascal-architecture

KitGuru needs to rely less on clickbait articles from WCCFTech.

Also, Nvidia is not launching 500mm2+ Pascal dies on 16nm at launch, at least not in its gaming line. Where the hell did you hear that BS?

AMD was using 500mm2 for their Fury chip with HBM1; maybe Nvidia is using the same lithography process for their chip with HBM2.

It’s not about HBM1/2. It’s about the massive cost and reliability of such a huge die on a brand new fab. It’s also, more importantly, about business… meaning if you put out your biggest chip in your new generation, you have nothing else to release for 2 to 3 years until Volta comes out. AMD put out a 596mm2 die with the Fury X because it’s on 28nm, which is a very mature process, and also because it needed to in order to compete against Nvidia. Imagine if they’d gone with something smaller… like 500mm2. All their performance numbers would be 17% lower. Although one could argue that a smaller die = less heat = more room for higher clock speeds to make up for it…
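The 17% figure in the comment above rests on the commenter's simplifying assumption that performance scales linearly with die area. Here is a minimal Python check of that arithmetic, using the comment's own die sizes (596mm2 for the Fury X, 500mm2 for the hypothetical smaller die). The linear-scaling premise is the commenter's simplification, not a measured result:

```python
def area_scaled_drop_pct(smaller_mm2: float, larger_mm2: float) -> float:
    """Percentage performance drop if performance scaled linearly with die area.

    Assumption (the commenter's simplification): same architecture, clocks
    and memory, so performance is proportional to die area.
    """
    return (1.0 - smaller_mm2 / larger_mm2) * 100.0


drop = area_scaled_drop_pct(500.0, 596.0)
print(f"500mm2 vs 596mm2: about {drop:.1f}% lower")
```

Under that assumption the ratio works out to roughly 16%, close to the figure quoted. Going the other way, 596mm2 is about 19% more area than 500mm2.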

There’s no way they’re going to bring out 16nm on a 980 Ti/Titan X-sized die right at the start. I will take any bets on this.

I disagree about the product release: people who buy a 980/980 Ti are not interested in a 960. You can release a high-end card without cannibalizing the mid-tier market. Or are you doubting that they can produce a 16nm die mature enough to sell at the top end?

Those were the 2 points I made. One is that the 16nm fab is new… and untested… and you can’t go large and power-hungry on first-generation chips. Don’t believe how unreliable a new fab is? Look at how long it took Nvidia to choose between Samsung and TSMC before finally siding with TSMC due to their longer working history and reliability.

Second point is… they will kill their own sales by offering their best card right at the launch of the new generation. They won’t be able to give their enthusiast market any reason to upgrade within 3 years of launch. By contrast, look at Kepler: people bought the 680, then the Titan came out, then the 780, and most people who bought the 680 eventually upgraded to one of those cards. And when Maxwell launched, they started with just the 750 and 750 Ti, then released the 980, which some people bought, and finally, after a while, the Titan X and 980 Ti, which gave people (the enthusiast market, again) a reason to upgrade.

Nvidia likes incremental upgrades. They don’t want you to buy a card now, and launch another card in a year that completely destroys the card you bought. They put out one card, then 12 months later they bring out another card that is generally just 10-20% faster, so it’s better than what they offered before, but not enough to piss off anyone who bought their last gen cards. And then another 6-12 months later they put out an even better card, with 50%+ better performance than their older cards, and people start upgrading again.

If, on day one of the Pascal launch, they come out with their absolute best card, they are going to have nothing interesting to bring to market for 2 to 3 years until Volta comes out. And that would be silly, even if it were possible with the new 16nm fab. In business terms, you need to offer a product that is a bit better than your competition’s without being too much better or too costly for you. So they just need to put out a slight performance increase, sell the card on much lower power consumption, wait for AMD to release something else, then launch a bigger-die version themselves, and back and forth they go. Just a quick reference:

FERMI

GTX 580 = 520mm2

KEPLER

GTX 680 = 294mm2

GTX Titan = 551mm2

MAXWELL

GTX 750 Ti = 148mm2

GTX 980 = 398mm2

GTX Titan X = 601mm2

Do you see the pattern? Small, medium, big, restart. Don’t think about it in terms of die size, because it’s really about transistor count. Pascal at 16nm, even on a 294mm2 die like the GTX 680’s, along with HBM2, would deliver performance close to the Titan X… and perhaps even higher, since lower heat and power consumption allow higher clocks.

Hope that helps.

So that means Volta is 10nm… confirmed… as I said earlier.

They weren’t rumored. They were to be part of IBM’s supercomputers built for the Department of Energy. This is a delay.

So Pascal in 2016 and then a refresh/rebrand in 2017? Makes sense, it is what has worked three times so far.


No, there is no delay. You clearly didn’t bother reading the articles in my post:

http://www.anandtech.com/show/9088/nvidia-gtc-2015-keynote-live-blog

You also didn’t bother doing research on your own statement.

“Finally, Summit is expected to come online in 2017, with trials and qualifications leading up to the machine being opened to users in 2018. As it stands, when Summit launches it will be the most powerful supercomputer in the world. Its 150 PFLOPS lower bound being roughly 3x faster than the current record holder, China’s Xeon Phi powered Tianhe-2, and no other supercomputers have been announced (yet) that are expected to surpass that number.”

^ 2018, because 2017 is just the testing phase. Again, 2017 had nothing to do with commercial applications, which is what the KitGuru article claims. NV never intended to have consumer Volta in 2017.

http://www.anandtech.com/show/8727/nvidia-ibm-supercomputers

They are just going back to what was done years ago.

You can easily release the biggest chip first. That way, all the rejects are binned for the next tier down. It’s a common process; they all do it.

It’s stupid to release the small chip first. What do you do with the rejects? Dump them and not make any money back at all?
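The salvage argument above can be sketched with a simple Poisson defect model. A die with no defects ships as the full part, a die with a few defects ships as a cut-down part with those units disabled, and the rest are scrapped. This minimal Python sketch relies on loudly stated assumptions: the defect density, the 600mm2 die size, and the rule that each defect disables exactly one unit (with up to two disabled units still sellable) are all hypothetical numbers for illustration, not Nvidia or TSMC data.

```python
import math


def poisson_pmf(k: int, lam: float) -> float:
    """Probability of exactly k defects on a die when the mean is lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)


def bin_fractions(area_mm2: float, defects_per_cm2: float, max_disabled: int):
    """Split dies into (full, cut_down, scrap) fractions.

    Hypothetical rule: each defect kills one functional unit, and a die with
    1..max_disabled dead units can still be sold as a lower-tier part.
    """
    lam = defects_per_cm2 * area_mm2 / 100.0  # mean defects per die (100 mm2 = 1 cm2)
    full = poisson_pmf(0, lam)
    cut_down = sum(poisson_pmf(k, lam) for k in range(1, max_disabled + 1))
    scrap = 1.0 - full - cut_down
    return full, cut_down, scrap


# Hypothetical inputs: 600mm2 die, 0.2 defects/cm2, up to 2 disabled units.
full, cut_down, scrap = bin_fractions(600.0, 0.2, 2)
print(f"full: {full:.1%}  cut-down: {cut_down:.1%}  scrap: {scrap:.1%}")
```

With these made-up inputs, far more dies end up as cut-down parts than as full parts. That is why harvesting rejects matters to a big-die-first strategy.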

As for not having anything else to release, that’s also rubbish. All they do is refine what they already have, such as Maxwell, which was still on the 28nm node and still saw quite decent gains. It was even enough to get me to upgrade from 780s to 980 Tis. I originally planned to wait it out for 16nm GPUs.

Maxwell wasn’t really “refined.” They just changed it from a dual-purpose GPU die for the consumer/professional lines to a single-purpose one. No more wasted space. That’s really the only “refinement” they did. Real “refinement” is new architectures, which is what Kepler, Maxwell, Pascal, and Volta are. Stop talking out of your ass. You went from a Kepler card to a Maxwell card. A Maxwell 2.0 card, mind you. And you fail to see the irony in your own argument. If you have the largest Pascal card… then you won’t feel the need to upgrade until a large enough Volta card arrives… which is what you did… you waited for the generational change… but that seems to have gone right over your head.

Smaller chips = less damaged units = gives TSMC time to refine the process, allowing for bigger dies. Otherwise, yes, as has been the case for decades…you end up paying way too much for the dies.
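Two textbook approximations make the “smaller chips = fewer damaged units” point concrete: the Poisson yield model Y = e^(-D·A) and the common gross-dies-per-wafer estimate for a round wafer. The minimal Python sketch below uses a standard 300mm wafer. The 0.2 defects/cm2 density is a hypothetical value chosen for illustration, not a figure for TSMC’s 16nm process, and real fabs use more refined yield models.

```python
import math

WAFER_DIAMETER_MM = 300.0  # standard 300mm wafer


def gross_dies_per_wafer(die_area_mm2: float, wafer_d_mm: float = WAFER_DIAMETER_MM) -> int:
    """Common approximation: wafer area / die area minus an edge-loss term."""
    wafer_area = math.pi * (wafer_d_mm / 2.0) ** 2
    edge_loss = math.pi * wafer_d_mm / math.sqrt(2.0 * die_area_mm2)
    return int(wafer_area / die_area_mm2 - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects, Y = exp(-D * A), A converted to cm2."""
    return math.exp(-defects_per_cm2 * die_area_mm2 / 100.0)


def good_dies_per_wafer(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Expected defect-free dies per wafer."""
    return gross_dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_cm2)


D = 0.2  # hypothetical defect density for a young process, defects/cm2
for area in (300.0, 600.0):
    print(f"{area:.0f}mm2: {gross_dies_per_wafer(area)} gross, "
          f"yield {poisson_yield(area, D):.1%}, "
          f"{good_dies_per_wafer(area, D):.0f} good dies per wafer")
```

With these hypothetical numbers, doubling the die area cuts good dies per wafer by roughly a factor of four. As D falls while the process matures, the gap narrows toward the plain gross-die ratio, which is the reasoning behind waiting for the fab to improve before going big.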

You understand nothing. The only thing more impressive than your level of ignorance is your ability to fool yourself into believing you have even the slightest clue what you’re saying. Look into 16nm fab costs then come talk to me about your fantasy world where a 600mm2 die is a possibility at launch.

Pascal and Volta will likely reside on the same process, just like Kepler and Maxwell.

If Intel has delayed 10nm FinFET, so will TSMC.

That still requires having nodes built, which requires having the cards. My logic is flawless.

http://www.pcper.com/files/news/2015-03-17/GTC-36.jpg <– the roadmap is still the same as from GTC 2015. Nothing has changed, clickbait article.

I doubt it. Even Intel has problems taming 10nm. 2018 for Volta is most likely because Nvidia wants to spread out its cards a little more to properly milk them, probably pulling a Maxwell with an architectural improvement to keep things fresh and potential buyers interested. Then, once Pascal has run its course, it can introduce Volta, still on 16nm, or perhaps even 14nm?

They have a trick: back-end processing. In short, TSMC 10nm is not equal to Intel 10nm. For example, TSMC will fab some part of the transistor using older 16nm tech and the rest on 10nm. This will allow them to ramp up production quickly, shorten time to market, lower costs and get better yields, though surely with less performance than Intel 10nm. That’s why it’s possible that they can jump to 10nm in 2017.

Samsung said it will start volume production of 10nm in Q2-Q3 2016, which means Samsung may beat TSMC once again.

TSMC, Samsung and GlobalFoundries are using this back-end trick to jump quickly to the next node.

So you believe it will come to the labs in 2017?

Probably.

Erm, to have a 10nm GPU in 2018 you need volume production in 2017 and risk production by early-to-mid 2016, with first wafers by the end of 2015. It might work, but we’re talking about TSMC here, which doesn’t exactly have the most stellar track record…

Yeah, but we have yet to see ANY 16nm FF chip from TSMC, of any kind, in the wild… Let me remind you that Intel has had 14nm chips on shelves since Q4 2014, and Samsung since Q2 2015.

You are completely correct. Intel is the undisputed leader when it comes to fabs.

The Apple A9 is TSMC 16nm FF… hopefully we will see it soon.

For 10nm, I’m pretty sure Samsung will beat TSMC.

We (I) have no confirmation that any A9s are made by TSMC, as GF/Samsung were initially supposed to build them. I cannot find any confirmation one way or the other, and the chip is out in the open right now, so it is rather strange…

I mean FF… By the way, I’m pretty sure the current A9 is made by Samsung, not TSMC.

So should I sell my 970 in February/March and buy a Pascal card in May/June? Or hold onto it for another year and wait for more refined dies from TSMC? Also, TSMC isn’t manufacturing AMD’s new cards; they will be manufactured by GlobalFoundries on a 14nm FinFET+ process, as opposed to Nvidia’s 16nm process.

It really just depends on what die size they decide to put out, and at what price. And don’t worry about the 14nm/16nm aspect. TSMC’s 16nm is proven, so you don’t have to worry about any fabrication improvements arriving within a couple of years of the new GPU launch. However, architectural changes can still be made, just as happened with the Maxwell 1.0 vs. Maxwell 2.0 cards. That’s always a gamble. But it all depends on what card they end up launching, so keep an eye out. The GTX 970 is a great card, though, so I doubt you’re in much of a rush to upgrade it anyway.

Cheers.

I completely agree, however, in 2-3 years that (currently) brand new fab will be much more refined and in turn produce greater yields. Riiiiight before Volta’s launch…

To use a Pascal-based card to its fullest extent, you will have to wait a short while for motherboards to become fully compatible. For example, there’s the new 1.3 port; 1.2b has gone the way of the dodo.

Save your money for now. Your current card is more than fine. Instead of upgrading to spendy cards every year and a half, buy a BenQ G-Sync monitor. 20 fps with G-Sync is smoother than 60 fps without G-Sync. It’s an investment, but it works. Wait at least until Black Friday to upgrade. Trust me, your setup is golden.

Exactly