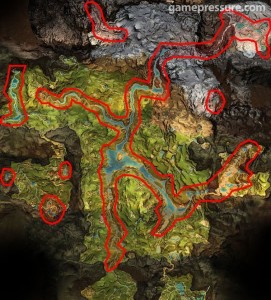

Far Cry Primal may have completely changed the setting, with the spin-off going back to prehistoric times, but that doesn't mean the world's layout has changed. A comparison image of the Far Cry Primal and Far Cry 4 maps shows that the layout is almost unchanged, with many features sitting in the same place.

GamePressure was the first to spot the similarities: mountains, rivers and lakes all remain in the same areas in both games.


Now, Primal isn't a total copy-and-paste job as far as its map goes. The flow of water is a little more connected across the map, and some things like mountains and forests are also a bit different. However, the world does seem to be built on a very familiar template, so it is possible that the Primal team worked from a basic version of Far Cry 4's world in order to get the game out.

Ubisoft may have been able to get away with this if it had set Primal in the Himalayas, just like Far Cry 4. But Primal is actually set in Europe, so the two game worlds shouldn't really be as similar as they are.

KitGuru Says: It is clear that at least a little bit of recycling has happened here to get Far Cry Primal out so soon after Far Cry 4. However, I would imagine that the new Beast Master mechanics would help freshen up the core gameplay a little bit. Have any of you played Far Cry Primal at all? Do you think it is just more of the same?

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


It’s rather shallow gameplay-wise. There’s very little depth in terms of the skill tree or weapon variety. The map, yes, it’s technically just a reskin of FC4’s terrain (mind you, only part of its terrain; nearly half the FC4 map is missing, making this game drastically smaller on a world-scale front).

It’s also lacking some of FC4’s graphical features (notably multiple AA format support: only FXAA is toggleable, with no MSAA, SMAA, TXAA or SSAA), which is confusing considering it’s a newer game on the same engine.

Added to that is the horrendous optimization. It’s very core clock dependent. My rig sits at:

5960x

32GB ddr4 @ 3200MHz

SLI Titan X

Beefy, all things considered. At stock clocks, 1440p, I can’t hold 40 fps with SLI enabled. Disable it, and I’ll hold ~50 fps wandering the open world. However, if I push my CPU to 4.6GHz, up from its base 3.0, SLI suddenly leaps ahead of single card performance, and I’ll hold 90 fps average. That said, only 2 of my CPU cores get used, core 2 and 4 in my case. Core 2 is locked at 100% usage constantly, while core 4 sits between 50 and 65%. At stock CPU clocks my GPUs sit at a measly 40% usage or so, but with the CPU at 4.6GHz they move up to 75% each.

If I go to an extreme and push my CPU to a ludicrous clock speed of 5.1GHz, I find my average fps rises again to over 100 fps @ 1440p/ultra, but my core 2 remains at 100% usage. My GPU usage again rises, to 85% or so, meaning there’s still headroom.
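For anyone who wants to sanity-check those numbers, here is a rough sketch that compares the fps gain with the clock gain between each pair of readings. It treats "can't hold 40" and "over 100" as roughly 40 and 100 fps, which is an assumption, and the `samples` list and `scaling_ratio` helper are names made up for illustration.

```python
# (CPU clock in GHz, average fps with SLI) as quoted in the comment above.
# 40 and 100 are approximations of "can't hold 40" and "over 100".
samples = [
    (3.0, 40.0),   # stock clock
    (4.6, 90.0),   # overclocked
    (5.1, 100.0),  # extreme overclock
]


def scaling_ratio(low, high):
    """Return (relative fps gain) / (relative clock gain) for two samples."""
    (clock_lo, fps_lo), (clock_hi, fps_hi) = low, high
    clock_gain = clock_hi / clock_lo - 1.0
    fps_gain = fps_hi / fps_lo - 1.0
    return fps_gain / clock_gain


# Compare each reading with the next one up.
for low, high in zip(samples, samples[1:]):
    ratio = scaling_ratio(low, high)
    print(f"{low[0]} -> {high[0]} GHz: fps gain / clock gain = {ratio:.2f}")
```

A ratio close to 1 means fps rises about in step with the clock, which is what you would expect when the CPU is the limit. With these rough figures the 4.6 to 5.1GHz step lands near 1, while the jump from stock comes out well above 1, so something other than raw clock speed (possibly SLI overhead at stock) may be in play there.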

All in all, a pretty piss poor job from Ubisoft on the optimization front. A 5960x @ 5GHz+ should never be a bottleneck in a modern game.

You must be new to Ubisoft….

Interesting, never seen a game be that core clock dependent before. My first thought was an SLI profile problem.

Looks _exactly_ like an SLI profile problem.

Now that’s what I call bone lazy.