In the early hours of this morning AMD finally unveiled its first Polaris graphics card: the RX480. Its specifications peg it as comparable in power to the R9 390 but far more efficient. AMD also paired two of the $200 (£140) cards against an Nvidia GTX 1080, and by all accounts they did rather well.

The RX480 is one of the most intriguing releases of this year so far. Unlike Nvidia, which took a traditional route with its high-performance, high-cost GTX 1080 and 1070, AMD has made its latest GPU much more affordable.

Specification-wise, it has similar hardware on board to an R9 390, though built on a 14nm FinFET process. It is vastly more efficient, at just 150W of peak power draw, and is also very affordable, with a price tag of just $200 (£140).

The purpose of this card is to make it easy for anyone to bring their PC up to spec for virtual reality. It should be more than capable of handling current-gen VR, and it may not be that weak a performer in general gaming either.

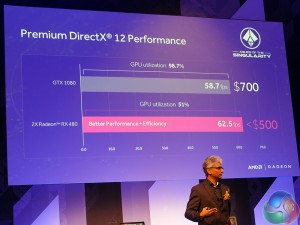

Thanks to better support for DirectX 12 and CrossFire, along with improved architectural efficiency, AMD showed a pair of RX480s beating a single GTX 1080 in a test of Ashes of the Singularity. We weren't privy to which settings were used, but cost-wise it would be far cheaper to buy a pair of RX480s than a single GTX 1080.

Our man on the scene, Leo Waldock, did report, however, that he felt the RX480s in the test system may have been modified, possibly higher-powered versions, since the cost of the pair was said to be sub-$500 (rather than $400), suggesting a few extra dollars were spent on those GPUs.
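
The arithmetic behind that observation can be sketched roughly as follows. The $200 per-card price and the sub-$500 figure come from the article; the function name and the idea of splitting any premium evenly across both cards are assumptions made purely for illustration.

```python
# Figures quoted in the article (USD).
LAUNCH_PRICE_PER_CARD = 200   # announced RX480 price
QUOTED_PAIR_CEILING = 500     # the demo pair was described as "sub-$500"


def implied_premium_per_card(pair_ceiling, base_price, cards=2):
    """Upper bound on the extra cost per card implied by a quoted pair price.

    Hypothetical helper: assumes any premium is split evenly across the cards.
    """
    base_total = base_price * cards
    return (pair_ceiling - base_total) / cards


base_total = LAUNCH_PRICE_PER_CARD * 2
premium = implied_premium_per_card(QUOTED_PAIR_CEILING, LAUNCH_PRICE_PER_CARD)
print(f"Two cards at launch price: ${base_total}")
print(f"Implied premium per card: under ${premium:.0f}")
```

In other words, a "sub-$500" pair leaves room for up to roughly $50 per card over the $200 launch price, which is consistent with the suspicion that higher-specified cards were used.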

AMD's RX480 will launch officially on the 29th June.

Discuss on our Facebook page, HERE.

KitGuru says: Although this isn't likely to get reviewers excited for the benchmarking capabilities, if AMD has managed to package R9 390 or higher performance into a single, low-power-draw card, the RX480 could well be a winner at that price point.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


AMD needs to stop using Ashes of the Singularity as the SOLE defense against Nvidia. It’s a niche game and not indicative of overall performance. Why not use something like Doom or Overwatch or, you know, something popular?

Any enthusiast would rather still have the one card though given the choice.

AoTS is a well balanced game in terms of vendor support, Nvidia has had just as much access to the source as AMD and made plenty of commits of their own. Just because AMD see a decent boost due to lower DX12 overheads doesn’t mean it is a bad performance metric.

How dare you speak with reason and sound logic! Gah…. what is this world coming to!?

This is one of the games where AMD’s architecture shines so they use it as a show case of the expected “performance”.

The truth is bitter however: Fury/Fury X already handily beat the GTX 1080 in this game which means the 480 is an underperformer … in all other games which don’t use the DX12 features which penalize the rival (Maxwell/Pascal) architecture.

The 1080 actually beats all parts in single GPU setups.

If by “well balanced” you mean developed in close cooperation with AMD to use the features which aren’t implemented on the same level in NVIDIA’s silicon, then you’re right. However, you might have noticed that pro-NVIDIA games with GameWorks (see how most reviewers show “Hairworks/HBAO+ disabled” in their graphs) are usually tested with this technology completely disabled, and magically AMD cards don’t show exceptional gains from that.

Strangely all other games which don’t favour any vendor (there are quite a lot of them actually) run a lot faster on NVIDIA’s hardware.

This is until rabid AMD fanboys from WCCFTech discover this website. Expect total mayhem then.

I think the reliance on driver profiles puts many people off having more than 1 card now. I would agree.

Do you know that AMD was using different settings? Nvidia’s settings were different in AotS, and the AMD side looked totally washed out.

left side is AMD.

It’s because AotS has been optimised for DX12, and not that many other games have yet.

In a year or two’s time we will see a big change in that, with far more games running DX12 as more systems will be using Win10.

Reviewers might not, but I am certainly excited to see the benchmarks when they arrive. No one denies the GTX1080 is an absolutely wondrous card, but this is one I could afford for my 1080p needs for a good while

They also need to stop comparing dual AMD gpus vs 1 nVidia GPU.

In my opinion the card would look more enticing if they said, “we can manage 65% of a 1080 at 35% of the price.” That WOULD look interesting, but to me, 50% utilization in CrossFire shows poor support, and something must be limiting it CPU-side. Also, the fact that TWO GPUs barely beat 1×1080 doesn’t do much for their marketing.

I wanna see what they come up with in terms of like, the RX 490, or their new RX Fury X etc

Yes, but it is half the price.

The Fury/Fury X already handily beat the GTX 1080 in AotS? Not sure what reviews you were reading, but I might suggest reviews with more scientific testing:

http://www.tomshardware.com/reviews/nvidia-geforce-gtx-1080-pascal,4572-6.html

nVidia GPUs win over AMD’s when this specific game is running DX11, so it is unbiased. Just accept nVidia’s inability to get async compute in their GPUs.

Agreed. And the CF setup was running at 51% utilisation, so practically a single 480 beat the 1080 on those different settings.

Fool. There is a 4GB Version 199

There is a 8GB Version 230

230+230 = <500

QED

You’re missing the point. Point is, 2 GPUs priced below 500$ beating a single GPU that’s 700$.

This is exactly what I thought, but some douchebag said 1080p is dead already. Not all people are playing at 1440p or above. Let’s wait for the benchmarks, but it seems like this one will be the 1080p king on price/performance ratio.

150W power requirement sounds nice, but you are asking me to run 2x of these cards, bringing requirements to 300W to match performance. A single card at 180W sure sounds more appealing.

Too bad it’s AotS and not some consistent bench-marking tool.

If you say so . lol

Again with the AoTS test. Really? How about Doom, The Division, SWBF, Dark Souls III, the new HITMAN, or even BF4. I mean seriously. AoTS is a mediocre game and is highly optimized for AMD tech. Asynchronous compute and DX12 mean very little right now. Are they somewhat exciting? Ok yes- but for next year or so.

I have about 4 or 5 games I’m playing off and on right now. None in DX12 and none use AC.

PC Gamers have had resolutions above 1080p since we had CRT monitors, 1080p is just “Hi-Res” when you’re talking about TV broadcasts and whatnot.

AMD does it all open source; Nvidia just plays dirty and uses proprietary technologies in conjunction with devs who develop for its tools alone, so of course it gets more performance, because only its products have the low-level programming to do so… But on a level playing field it doesn’t show many gains across all products, only on those that cost more than the competition, and that, of course, is expected; it would be a bad sign if it wasn’t like that…

Are you kidding? Those TWO cards are worth sub $500. That’s a $200 GAP for EQUAL performance.

AMD’s goal isn’t to provide a single card that is monstrous in performance and price; it’s to provide a cost-efficient solution, and this article is a way of seeing that goal being applied… AMD is and always was about trying to deliver more performance per dollar, and now with DX12 they have the setting to do it rather well, since CrossFire shouldn’t have much trouble working properly…

How do those 2 cards consuming 300 Watt in total compare to a single GTX 1070, which costs approx. $400 and consumes 150 Watt?

I remain sceptical. Every(!) company that intros a product only shows what it wants to show. For instance: the magic $199 that everyone is talking about is only for the 4GB model. The 8GB model is priced higher. Who will want the 4GB model, anyway? And how will the $230-250 model compare to the GTX 1060 which will appear after summer and which probably will be priced only a tad higher (while consuming a bit less)? How will factory overclocked cards compare to one-another?

BTW, with all the hype going on, how will AMD partners manage to sell the remaining 390/Fury’s in the next entire month? (Nvidia has the same problem, but reduced the period between paper launch and availability a bit.)

This may well have been mentioned previously, but did anyone notice how it’s painfully obvious that the graphics settings on the GTX 1080 gameplay are way higher than the AMD SLI setup? You can clearly see much better texture resolution and shadowing on the nVidia play. Interesting.

Dude, DX11 is old tech now and is slowly being replaced by the DX12 and Vulkan APIs, so the fact that AMD can perform Direct compute is a much more relevant fact these days.

We all know that the DX11 code path for AMD-based GPUs was badly put together and optimized, and it affected AMD-based products pretty severely. But there is no doubt that if both AMD and Nvidia GPUs had had the same level of optimization for the DX11 API, AMD’s R9 390X would likely have been more than a match for the 980 Ti, easily, even though it still competed pretty solidly at its price point.

My guess is that they were using the 8 GB version for the benchmark since the $200 price point is for the 4 GB model.

Crossfire and SLI were not used for the Ashes of the Singularity demo. They used DX12’s multi-GPU feature, which lets you pair any two GPUs together, even AMD and Nvidia ones or mismatched cards, something SLI and Crossfire do not. Also, DX12 has better multi-GPU scaling than Crossfire and SLI. And the point was to show that they do better at DX12 for less money, something that is true for more than just AotS, as has been proven with even their old cards vs Maxwell.

EDIT: Added some words to the final sentence.

Actually, it is a good performer for the price. There has never been a US$200 card with such good hardware before. They are squeezing what looks like an R9-390 into a $200 card. That is impressive, and that is only looking at the specs, which do not tell the full story, given that they ignore any potential architectural improvements.

If you want to see that kind of comparison you will have to wait for the higher end cards, possibly Vega. There is no way that either company can offer that kind of comparison in a US$200 card.

Most gamers are still using 1080p, not 1440p.

AMD don’t expect to win enthusiasts with RX-480. RX-480 is aimed at the mainstream market not the enthusiast market. Vega is AMD’s play at winning over enthusiasts.

Hopefully DX12’s and Vulkan’s multi-GPU capabilities being in the hands of developers not driver profiles will eventually change that situation.

That is TDP, not power requirement. TDP and actual power requirements don’t match up. It is even hinted at in the full name, i.e., *Thermal* Design Power. Note the key word is “thermal”, i.e., heat. In other words, it is about heat and power, not just power.

EDIT: Added a sentence.

I want the 4 GB model. 8 GB is utter overkill for me. In fact 4 GB is overkill for my purposes.

It’ll probably cost half as much, too. Good ol’ AMD.

Two cards are often cheaper than a single equivalent card; that’s because of the downside of having CrossFire/SLI: not all games will support it. Then you’re left with a lower-performing system.

You either pay more and get the single fast GPU that is always that fast in everything

Or you pay a bit less for a pair of GPUs that take a little more power overall and might be a little faster BUT won’t always give you that performance.

‘Far cheaper’ for two? That could be misleading. More like ‘around 20-30% cheaper’. And it’s still generally not as desirable to use two when one will do. And then there’s power draw. I didn’t see where that was compared. Overall this article smells a bit of some agenda, not objective comparison. Include power draw, a full set of benchmarks, and any known pitfalls of CrossFire, and that would be a better article.

Nvidia has a lot of open-sourced middleware like PhysX, VXGI and ShadowWorks. If you want them, then check Nvidia’s GitHub releases. I don’t see why that is dirty. BTW, there is more than GameWorks; you may not realise that Nvidia is boosting the entire industry, even the professional field.

Who said Nvidia’s GPU cannot do the context switching?

I agree, it’s a smart move. personally the one I’m waiting for is Vega.

And Nvidia didn’t compare GTX 1080 with FuryX using games like Batman or Assassin’s creed…

Good for you. Saves you some bucks. For other people, 8GB may become more of a requirement. There are games where you see the GTX 970 (3,5GB+0,5GB) starting to struggle. And where also the R9 290X cannot keep up with the nearly identical R9 390X. Simply because those games use more than 4GB of memory. Perhaps not much more, but enough more to get a tangible performance difference. Even at “lower” resolutions. Buying anything with less than 6GB will simply not be future proof enough, in my view. Unless the graphics horsepower is not present or you accept lowering quality settings.

Exactly, the nVidia card is running at much higher graphics settings, it’s not a straight comparison at all. It also looks in places like the nVidia setup is running at slightly higher resolution.

I noticed that as well, it is way too obvious. GTX 1080 was running on higher settings and AMD lowered the result of GTX 1080 from 66fps to 58fps, then RX480 CF won…

Well, I am far from the only gamer that does not care to push their games to the maximum graphics settings, especially with modern games that can look good even on lower settings (lower as in lower than max).

I’d be tickled pink if the article read “480 almost dethroned 1080 (or dethroned)”. Healthy competition. But many people won’t want to CFX just to match specs, or in this case beat on a benchmark that doesn’t have real-world basis, with a lone 1080, unless they are seriously strapped for cash. I’m running a mini ITX crammed into a EVGA Hadron, so 2 cards is a no-go for me. So I’m either going to keep my 970 or upgrade to another nVidia card this round. Wattage as far as I can see, 1080 (180W) is going to spank 2 of these cards (each at guestimated 150W, not yet written in stone), so again.. nVidia is a winner here. I used to be a die-hard AMD/ATI fanboy because of price, but now I’m not so sure.. Their old tech can’t even work as a space heater anymore, they’re trying to be “efficient” but slower. :-/

PhysX is open source?! You have no clue what you are talking about.

But crossfire is bad…..so in the end, only one card work most of the time. I prefer one powerful card.

AMD response here; https://www.reddit.com/r/Amd/comments/4m692q/concerning_the_aots_image_quality_controversy/

that doesn’t change the fact that two cheaper cards beat one more expensive. so yeah.

also, crossfire scales pretty well. 2x 290 scales up to ~190% performance (95% per GPU roughly)
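
The scaling figure quoted in that comment works out as follows. This is a minimal sketch using only the commenter's own ~190% number; the function name is hypothetical and the figure itself is unverified.

```python
def per_gpu_efficiency(total_scaling_pct: float, gpu_count: int) -> float:
    """Share of a single card's performance that each GPU contributes."""
    return total_scaling_pct / gpu_count


# ~190% total for two R9 290s, as claimed in the comment above (unverified).
efficiency = per_gpu_efficiency(190, 2)
print(f"Per-GPU scaling efficiency: {efficiency:.0f}%")
```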

Well, here is proof:

https://developer.nvidia.com/physx-source-github

https://github.com/NVIDIAGameWorks/PhysX-3.3

If you see a 404 error, then you need a GitHub account and to join Nvidia’s GitHub group. But that is your work to do. After following the instructions, you should be able to download PhysX’s source code and access it using Visual Studio.

I definitely know what I was talking about. If you want more of Nvidia’s source code, I can give you more links, like the ShadowWorks lib, VXGI, volumetric lighting, etc.

http://amdcrossfire.wikia.com/wiki/Crossfire_Game_Compatibility_List

How many games have CrossFire support? Not many!

And hey, you can buy a lot of cheaper cards, SLI them and beat the king too… so? Nothing new.

A single RX480 is just not powerful enough.

Nothing to see, next!

actually not the case: https://www.reddit.com/r/Amd/comments/4m692q/concerning_the_aots_image_quality_controversy/

You don’t think 2 small overclock RX480s at 50% utilization can outperform a small overclock on the 1080 at 95% utilization?

It’s a load of crap. Wait for real independent testing. Every real enthusiast knows Crossfire is the least desirable multi-GPU solution because of its interminable stuttering. http://www.pcper.com/reviews/Graphics-Cards/AMD-Radeon-Pro-Duo-Review/Grand-Theft-Auto-V

AMD got caught selling Cross fire for years when it didn’t actually work at all. SLI outsells it 10 to one but a top flight single GPU is always better.

Not with the reference single 6-pin PCIe power connector. Perhaps if AIB partners adjust it, then maybe.

Nice to see smart people on these forums. But to clarify, none of these graphics card companies refer to TDP for their wattage numbers. For instance, nVidia calls theirs as “Maximum Board Power”.

On top of that, TDP, in reference to processors, is just a category, which is why so many SKUs of differing specs can qualify for the same rating. It is disturbing how many so-called “enthusiasts” like the title applied to themselves, but don’t inform themselves of what these terms mean.

Jack, the kinds of people getting RX-480 are formerly R9-380 and GTX960 users (or R9-285 and GTX760, etc) because that’s all they paid last time and settled for that performance level at the resolution for whatever game was available back then.

You’re worrying for people that don’t care about what you’re worrying for because they never needed what you are swearing AMD made a mistake over. This means you are out of touch with the kinds of people that RX-480 is aimed at.

High-end logic is lost on midrange users, and vice versa. They can’t convince you that you paid too much for the performance, while you can’t convince them that they ought to spend more if gaming was important.

what’s DX12 aiming to optimize? crossfire!

also, out of that massive list, only 9 games don’t support cfx, so what’s yer point.

point is, for 300$ less you get same or more FPS.

and by gods, 480 is more than powerful enough. 1440p 144 hz on an nvidia-optimized game? can do!

Crossfire has very bad support, so it’s useless, DX12 or not. And where is your MASSIVE list?? And no, the 480 is not powerful enough, open your eyes!!! It’s like a video card from last year or before that. Hello?!?! AMD can do better than that!

You literally posted a list. Out of 60 games, 9 don’t have support for CFX.

This is a R9 290x Crossfire chart, in comparison to a single GPU.

You can see the scaling percentage on the right.

http://cdn.overclock.net/a/a1/500x1000px-LL-a1ada14b_cfx.jpeg

Also, again. IT RAN DOOM 4 ON 1440p 144Hz you dumbass. ON HIGHEST.

Now, if running a game in 1440p doesn’t show that the GPU is powerful, then nothing is.

Seriously, it beats GTX 980 for HALF its fucking price. And it’s not even their flagship GPU.

The GTX 980 is not a new card… compare the 480 to a 1070! My god, such a fanboy!!!

I couldn’t agree with you more, Vincent. I mean, yeah, AotS is a good game in its own right, but it’s not the kind of game that is going to motivate someone to upgrade their GPU. I need to see benchmarks of games that everybody is playing. Also, the whole async compute thing is overdone. I’m not saying it isn’t relevant, but what exactly is it doing for the gamer of today? I just keep seeing this whole “buy AMD because it will run games that come out next year better” argument. Well, by then I will be on next year’s hardware, which I am sure will smoke anything from last year.

On top of that, AMD claims it is aiming at the mainstream. I am sorry, but I don’t consider the market they are shooting for mainstream. Last year everybody I knew had 980s or at least 970s, but mostly 980s. Actually, over 50 percent of my gamer friends had the 980 Ti with massive overclocks. I only knew one person who had a Fury X. The whole time he held onto the Fury X “overclocking dream”, claiming that something would give, allowing him to achieve some overclock that would pull him ahead of my Titan X @ 1500+ MHz.

Don’t get me wrong, I am not saying AMD didn’t have some damn fine cards. Hell, if you were on a budget, that was the route to go. I am just always chasing the highest fps with the highest settings. AMD has nothing to offer me there. I always want the best, and I also enjoy overclocking my hardware. Now, I know not everyone spends the kind of money I do on hardware, but all the PC gamer friends I have spend at least a hair under $400 and wish they could have the next tier up.

That brings me to my next point: yeah, AMD might gain some market share, but I doubt they are raking in the cash at $200 a pop. I bet you dollars to donuts Nvidia is with every 1080 and 1070 they sell. Hell, they call the 1080 the enthusiast-grade card and it’s not even that, really. That would be the 1080 Ti and Titan Pascal.

Trust me, I want to see AMD do well. I would buy AMD in a heartbeat if it had the top performer. I even put AMD cards into some of the gaming rigs I build, yet that is usually when people are on a tighter budget. I just feel that they should have pushed the envelope a little farther. I mean, they are on a whole new fabrication process and they are saying, hey, we will give you last year’s performance at less power and for less money. People want more power.

I don’t think last year’s power is going to cut it anyway. I mean, I can bring my Titan X to its knees with Fallout 4 with mods. Also, I want to know how Polaris overclocks. That means a lot in today’s market, and they dropped the ball hard on the Fury X, claiming it could do what it was very weak at.

All in all, though, I am optimistic and think it could go either way with AMD. Who am I to judge what the average gamer wants? I do know this, though: AMD can’t afford to lose this battle, and Nvidia could lose the next two and be fine.

Hey, Doom was Vulkan if I am correct, and Nvidia killed it in performance. I really don’t think Nvidia is as weak at DX12 as AMD is trying to make it look. I mean, AMD may have the edge for a year or two, but they can afford that at this point. AMD has to have the edge or it could spell big trouble.

yea until the 1080 is overclocked lol.

and then nvidia will drop the 1080ti and titan.

http://www.fudzilla.com/news/graphics/40808-amd-radeon-rx-480-crossfire-rendering-differently-vs-gtx-1080

Wow only 60 !!! Check that http://www.geforce.com/games-applications/technology/sli

This is support….my god !

Why should I compare it to a GPU that costs twice as much?

Also, that list includes non-DX11 games. Which is bullshit. DX9 supports multi-GPU as much as I support American politics. I don’t.

Also, would you just STOP weakly rationalizing?

http://hexus.net/tech/news/graphics/93461-amd-exec-discusses-radeon-rx-480-ashes-singularity-demo/?utm_source=dlvr.it&utm_medium=facebook

http://videocardz.com/60860/amd-explains-image-quality-in-aots-radeon-rx-480-cf-vs-gtx-1080-demonstration

AMD’s GPUs are actually rendering more details. Color shading, for you touchy sods. Which gives a smoother feel to the image, and avoids heavy contrast where you don’t need it.

“Ashes uses procedural generation based on a randomized seed at launch. The benchmark does look slightly different every time it is run. But that, many have noted, does not fully explain the quality difference people noticed.

At present the GTX 1080 is incorrectly executing the terrain shaders responsible for populating the environment with the appropriate amount of snow. The GTX 1080 is doing less work to render AOTS than it otherwise would if the shader were being run properly.”

The radically low price of the RX 480 4GB card (if that is what it will actually retail for and it is the cost of the full version of the chip), may hurt AMD in the long run. Since AMD just makes the GPU chip and sells it at a fixed price, it is up to the Add In Board manufacturers to try to make a card that is profitable at the price that AMD has arbitrarily set for them. Mind you, no AIB has to sell their cards for AMD’s MSRP but will lose sales if they do not.

The question is, CAN the AIBs make a decent enough profit on the card? If they cannot, they simply will not make the card or will sell it in only small quantities.

A good example of this with AMD was the Fury X. The list price was rumored to be $800 but was dropped to $650 by AMD to compete with the GTX 980Ti. Another rumor was that the AIBs were very unhappy at the new price as AMD was not losing any money by dropping the price, the card manufacturers were. The result? Only Sapphire really made the card in any sort of quantity and those were quickly sold out. Other manufacturers either just did not make the card or, like MSI, charged more. The Fury X never sold well.

This is a VERY aggressive price for the RX 480 and time will tell if it was the right choice for AMD.

OK, you can STAY in “WONDERLAND” with your AMD card, and play this super fun AotS game!!

Buy two 480s to be more powerful than ONE Nvidia card! And FYI, MOST people PLAY DX9, DX10 and DX11 games right now!

So have fun with your AotS! (But DX12 is not a problem for Nvidia now.)

http://store.steampowered.com/hwsurvey/videocard/ …so many AMD….scary

Have fun on the red side!

Continue to sleep!

Yeah, I get disappointed when I see people treat TDP as power draw. That does not work. For actual power draw numbers I look at the reviews. In fact I look at multiple reviews, since there can be variances based on several factors, such as how good the motherboard and PSU are.

Yeah, you pay 400$ to beat a 700$ card, such a stupid buy, am i right?

DX9 is outdated, DX10 was shit as it actually slowed games down, and DX11 is the popular one. And if you remove the DX9 and 10 games from your list, it goes down to roughly the same amount of games. So yeah.

Also, nVidia still has problems with async shaders. So yeah. I’ll continue enjoying myself, you keep on being an idiot.

Oh and I guess I should add, none of the nVidia GF900 and below GPUs really support DX12. Performance goes down in comparison with DX11 for nVidia, and goes up for AMD. So really, there’s no real DX12 GPUs from nVidia besides, kind of, GF1000 series. While DX12 works on R9 200 series.

Judging by your poor arguments, you need pills. Go see your doctor right now!! Enjoy a card you don’t have and games you can’t play!! Oh, and I forgot the super important thing… async. Why did I forget? Because we don’t care about @ssync.

I am finished with you!! You are so boring!

The reason why nvidia runs doom better is exactly because, vulkan isn’t implemented yet, currently it runs on dx11. Vulkan is coming soon in an update: https://en.wikipedia.org/wiki/List_of_games_with_Vulkan_support

And you’re so unintelligent!

Stop poorly rationalizing still buying nVidia hardware.

I hope you do understand, Async shaders are an essential part of DX12, and soon, none of nVidia’s GPUs will be able to catch up to AMD hardware.

I’ll enjoy my RX 480 in a few months, don’t you worry 😉

Also, I’m *done* with you. I wish I could go through one week of my life without asking this question, but what the fuck am I doing here arguing with an idiot who can’t even do grammar properly?

I’m not English, you fool! 😉 In French: stay as thick as you are, and keep believing that async is a good reason to buy an AMD card. That proves to me that you’re nothing but an idiot! 🙂 Ciao, dumb@ss!

Neither am I, pederu.

Eat shit, you ill-mannered slob 😉

Read post #1208

http://www.overclock.net/t/1569897/various-ashes-of-the-singularity-dx12-benchmarks/1200

The developer responds and says Nvidia had more contact with the developers than AMD. The developers also state that Nvidia’s lack of async compute is the reason its graphics cards are weak.

Doom is running an older OpenGL version on AMD cards. The developer has yet to respond, but it may have something to do with Nvidia since they are working with the developers on the Vulkan version.

AMD cards – OpenGL 4.3

Nvidia cards – OpenGL 4.5

https://linustechtips.com/main/topic/595522-doom-4-performance-on-radeon-and-geforce/

Educate yourself, it’s not “Crossfire.” SLI and Crossfire are limited to GPU drivers in DX11; this is the new API with DX12/Vulkan. Low-level access gives developers more control, meaning multi-GPU is now in the developers’ hands.

You’re very misinformed. I can’t believe a majority of people commenting know nothing of what they speak…

SLI/Crossfire is limited to DX11 games via GPU drivers. In Vulkan/DX12, by giving developers low-level access APIs, multi-GPU is now in the direct hands of developers for more control.

1 – Both SLI and Crossfire are used by about 1-3% of PC gamers.

2 – Multi-GPU in DX11 is not supported everywhere

3 – issues are expected

4 – Multi-GPU in Vulkan and DX12 is completely different than Crossfire/SLI.

Crossfire and SLI are done via the GPU drivers provided by AMD/Nvidia. In the new APIs, Crossfire/SLI will cease to exist.

My point still stands. I don’t see how DX11 vs DX12 changes the part where multi-GPU may or may not work, leaving some games with awesome performance and some with rather lacking performance, just like DX11.

I know that! For NOW, MOST games are DX11! And when you say “in developers’ hands”, you are talking about resources, time and money. This is a new challenge for devs, so it’s not a win for NOW! So educate yourself!

PhysX is open source? Are you drunk, or do you really believe that?

Check out the link below, then persuade me it is not an open-sourced SDK.

They share source code, but not all of it, so everyone outside Nvidia (which has all the code) struggles to run it well enough to game with it. As I was saying, Nvidia never plays on level ground. For example, get the original Ageia PhysX code and run it on the two vendors; then try the code from shortly after Nvidia bought the company, and you’ll see that performance on systems other than Nvidia’s is hurt…

Code to make it run is different than code made to properly run all scenarios

dont forget dx12

For now…DX12 is nothing. I play now, not in 2 years.

I proved that PhysX is an open-sourced SDK, and there are more. I didn’t say all of Nvidia’s SDKs are or have to be open sourced. Nvidia decides to build hardware support for specific simulations or FX; AMD doesn’t. So AMD will perform badly with those FX on. There is nothing wrong with that. AMD abandoned preemption on DX11, and there are tons of people still using Windows 7. What is the difference? Why does AMD promote DX12 and Mantle without making its hardware run better on DX12?

Exactly. Those AMD fanboys buying two of these thinking they will be faster than a GTX 1080 in all their games… I’m lol-ing already.

Yeah, because it’s one game against hundreds, and by the time DX12 becomes a reality the crap RX 480 will be long gone.

Sorry, but even with async compute games like Ashes etc., if you put in two 1080s they smoke two RX 480s in CF, so…

Yeah, but this AMD idiot in the show who was proud of having 2 fps more in CF, looool. That’s arguing that two cards are better than one, or why talk about the fps then?

“AMD’s GPUs are actually rendering more details.” Loooooooooool, such an AMD idiot.

lol well said

So you have gone so low on decent arguments (not that you had any to start with) that now you resort to calling people names? My gods, didn’t know nVidia fanboys are so fucking dumb.

So you say that paying 700$ is the same as paying 400$?

Yes, it’s better to pay 700 and have better performance in hundreds of games than poor performance in hundreds of games plus a few DX12 titles.

Yes, what you’re doing now.

What am I doing? I’m not calling you any names, and I’m just pointing out that you don’t have any more arguments that you can use.

It’s better to pay 700 if you have that kind of money. But what’s the point if you can get good 4k performance on high settings for MUCH LESS MONEY.

It’s like nVidia fans have no self awareness, or money saving instincts.

To have good performance even in the numerous DX11 games where the crap AMD RX480 can’t deliver it. Maybe the next one…

Nvidia still releases pieces of shit like Optimus instead of doing it properly. Yes, AMD has inferior mobile CPUs, but its way of using AMD graphics in laptops to preserve power is vastly superior to anything that the garbage that is Optimus has achieved. Optimus drivers are buggy, and they drop models very often. AMD, on the other hand, still supports old OSes from point of sale to today, has excellent open driver specs, and beats the Optimus offerings hands down in power saving, because the Nvidia laptops never really disable the Intel GPU but rather preempt and work around it until they finally send the data back to the Intel GPU for output. It is a complete mess.

Compare it to the 1060 then, which is priced at $249 and still offers 33% better performance than the RX480, and even beats the Fury X in a lot of instances.

Yeah, pretty stupid when you can just spend $249 on one GTX 1060, which is a much better card and even outperforms the 480 in all current DX12 games but one, including Doom. And guess what: when DX12 actually becomes mainstream and relevant, Nvidia will release more performance-boosting drivers for it, keeping it far ahead of the 480… Sad, AMD really dropped the ball again on this. Hope they don’t do the same with Zen.

lol wtf are you talking about? 470 beats the 1060 in DX12/vulkan.

I mean, nVidia literally gimped their own performance in a GameWorks title, Fallout 4, and that in turn increased AMD performance by a LOT. Heck, even the R9 Nano beats the 1070 in Vulkan.

besides 480 did exactly what AMD said it’s gonna do.

LOL, wrong! Obviously you haven’t seen recent benchmarks… And of course a $1100 R9 Nano (aka a slightly lower-specced Radeon Pro Duo (aka Fury X2)) beats a $350 card; that’s like comparing a GTX Titan XP to the RX460 or pretty much ANYTHING in the AMD lineup, hahaha.

http://www.ixbt.com/short/images/2016/Jul/NVIDIA-GeForce-GTX-1060-Performance-2-680×900.jpg

http://www.techspot.com/articles-info/1209/bench/Witcher_02.png

http://cdn.mos.cms.futurecdn.net/oFpxDqBAMYm4NyD8S48fqC-650-80.png

http://i.imgur.com/kJWU4AS.png

http://i.imgur.com/HwR8IEj.png

and to be fair and unbiased i ll even throw the doom benchmark in for you .. but will also even state both cards reach over 100fps and at that point it doesnt even really matter then http://i.imgur.com/KZqCE7n.png

As you can clearly see, the GTX 1060 destroys the RX480 in literally 99.8% of all current titles and will continue to do so even after DX12 and Vulkan become mainstream, because it’s not like Nvidia is going to sit there and not release any new game-specific drivers or better-performing DX12 drivers.

http://arstechnica.com/gadgets/2016/07/nvidia-gtx-1060-review/

An EVEN better website, with benchmarks for the whole card lineup in one place, showing the 1060 outperforms the RX 480 and R9 Nano and even the Titan X in nearly ALL games and resolutions. Sorry, the RX480, which I wanted to love, is just a bad buy when you can get the 1060 for nearly the same price 😀 And if you’re going to spend the 550 or so to CF the 480, you might as well just get a 1070 and OC it, because it outperforms 480 CF, and even then you might as well spend the extra 100 and get the 1080 😛 And yes, the 480 did what they said it would do: play 1080p on high settings at acceptable frame rates, but not 1080p or higher with maxed settings at acceptable fps.