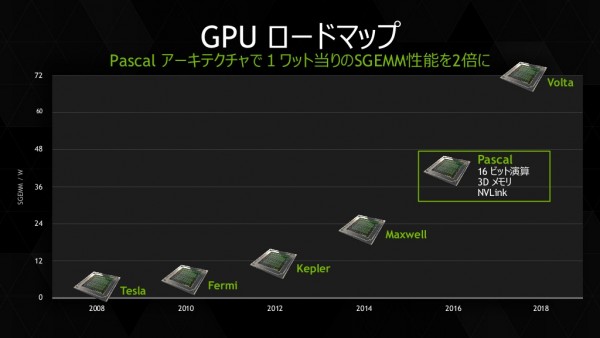

Now that Pascal is out in the open, the rumour mill has turned its attention to Volta, the next architecture on Nvidia’s roadmap. According to a report, Nvidia may be planning to stick with the same 16nm process used for Pascal for its next architecture jump.

Right now, we don’t know much about the Volta architecture, aside from the fact that it is set to launch sometime after Pascal and that it will introduce Stacked DRAM. However, according to a source speaking with Fudzilla, Volta GPUs will be using the 16nm FinFET process, rather than making a generational leap. At the same time, AMD is also said to be sticking to its current 14nm process for Vega.

This means that the jump to 10nm will be coming in a future architecture after Volta’s release. Volta will apparently bring along a significant increase in performance per watt too, which will be needed for Nvidia to keep making the most out of the 16nm process.

These are all very early rumours for now. Since we don’t know the inside source of this information, we can’t independently verify any of it, so as always, don’t consider any of this to be fact.

KitGuru Says: We are quite a while away from publicly seeing the Volta architecture, but these early rumours are still pretty interesting to follow.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Makes sense, we don’t want to wait for supply of a new node. Let 16nm mature and get everything u can out of it like they did with 28nm.

Yup, sounds good if true. Won’t be using a risky new node 🙂

That, and with the performance/power gain from 16/14nm (which use parts of 20nm) to 10nm being minimal, the big players will likely hold off for 7nm, which TSMC and GF/Samsung are heavily invested in releasing in the 2018 time frame (if not late 2017).

Rumors, however, are that 10nm will be ready for launch in 2017 and 7nm in 2018, with Samsung and IBM likely able to do this sooner via their alliance. Because of the issues Intel has had of late, others might be able to launch a smaller node before Intel takes its next step, but they also will not have the same density in that process that Intel achieves (power, performance, temps).

anyways was digging and found this, a very solid read

http://semiengineering.com/10nm-versus-7nm/

Ya nice find, so 7nm is almost as imminent as 10nm

Wonder what 7nm will be made with?

I’ll put my money on a silicon-germanium alloy. But one day we shall achieve the graphene dream

unicorn dust and snake oil 😀

Samsung is shooting to get to 7nm with 10nm FinFET ASAP, whereas TSMC, to keep costs in check, will likely be going for 10nm with 14nm FinFET (they all seem to classify full node and half node quite differently than the “industry” does). The cost of going from 28nm to 14nm is quite large, and 10-7 and below is immense. No wonder major companies have decided to stick with 28nm or “bigger” for so long: it is much easier to build for, and the processes are quite refined by now. AMD seems to take the safer approach and cram in as much as they can, so they are more workhorse style, whereas Nvidia, at least since the 400 series, have been trimming the fat, so to speak, so they can ramp clocks up even if it comes at the cost of not being able to do “advanced” DX12/Vulkan etc. type stuff.

They all build the way they believe they should. AMD for the longest time has been working towards a multithreaded type of design (seeing as they pretty much started it all), whereas Nvidia and Intel, even though they can do multithreading, are bar none more focused on getting the most IPC, single-thread style. Both have their good and bad points.

Either way, they should be building the highest quality product they can and not “skimping” to get more $$$ in their pocket especially Intel and Nvidia for how much they charge for their products.

if this helps any https://www.semiwiki.com/forum/content/5721-samsung-10nm-7nm-strategy-explained.html

the crystallized tears of people who bought founder’s edition

Nvidia knows that Samsung 14nm is not good for GPUs. RX480 vs GTX1070 performance/watt was a prime example.

… anyone who bought the founders edition knew what they were getting into

you’re comparing apples and oranges… and if that was true, how come the 14nm Apple iPhone chip is slightly more power efficient than the 16nm one?