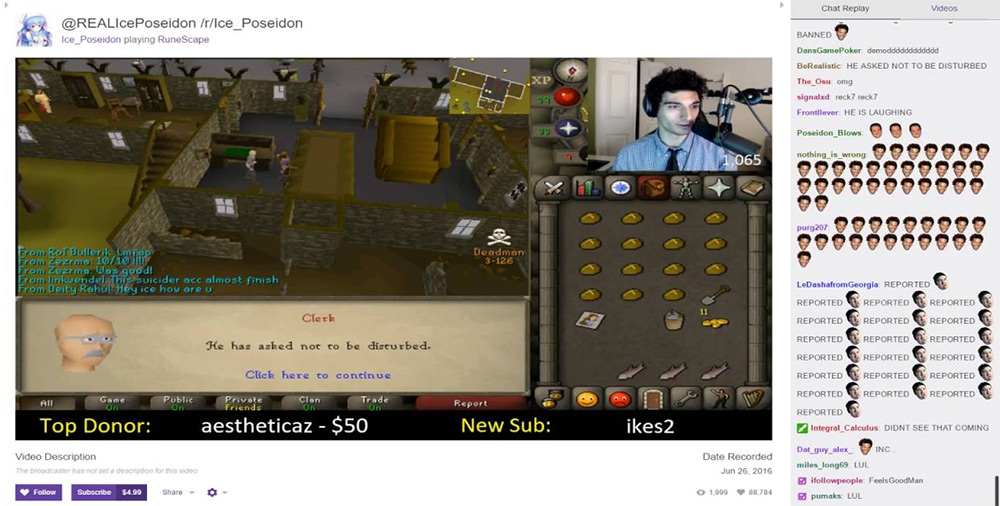

The automated moderation algorithm that game-streaming site Twitch introduced earlier this week is already paying dividends. According to a number of streamers who have traditionally had to deal with abuse or fighting in their channel's chat, the number of people posting abusive messages has fallen dramatically.

Traditionally, viewing any popular Twitch stream's chat meant you were likely to find at least a few people using the platform to be abusive or hateful. Hey, it's the internet, right? With some chats hosting tens of thousands of people at once, though, moderating them manually was nigh on impossible. Twitch's algorithmic approach seems to be working.

By flagging content so that human moderators can decide whether it is a bannable offence, AutoMod has helped clean up streams for the likes of Alex Teixeira. Kotaku quotes him as saying that it works well and has made it easier for him to clear out racist and homophobic messages, even when the text is deliberately misspelled to try to slip past the filter.

The important part, he says, is that it denies the people behind these messages the attention they want from causing problems. Better yet, he says, “nobody gets the idea to jump on the bandwagon” and join in.

Another streamer, Little Siha, has had similar success with AutoMod, we're told. Not only has it made her chat cleaner and a nicer place for non-abusive viewers, but it has also made her moderators' job far easier. They can now find most of the troublemakers in one place, rather than having to spot them in the rapidly scrolling chat stream.

The most important aspect of AutoMod, though, is that it allows streamers to set their own thresholds. They can select which words or types of content are allowed through the filter, thereby setting the tone, or at least the social bar, for what is and isn't acceptable in-stream.

Source: KingDenino/YouTube

Twitch claims that AutoMod will learn over time too, becoming better at detecting clever ways to circumvent its filters.

Currently available in English – though there are beta tools available for Arabic, French, German, Russian and more – AutoMod is likely to ruffle a few feathers during its first few weeks of use. But it's already proving effective in some circles and in time it could well become much more capable than any human moderation team could ever hope to be.

“We equip streamers with a robust set of tools and allow them to appoint trusted moderators straight from their communities to protect the integrity of their channels,” Twitch moderation lead Ryan Kennedy said (via VentureBeat). “This allows creators to focus more on producing great content and managing their communities. By combining the power of humans and machine learning, AutoMod takes that a step further. For the first time ever, we’re empowering all of our creators to establish a reliable baseline for acceptable language and around the clock chat moderation.”


KitGuru Says: Usually I'm not a fan of filtering or moderation, but I can understand the difficulties Amazon, Twitch's owner, faces here. Some streams have tens or even hundreds of thousands of simultaneous viewers, all of whom can post in chat at the same time, and that's simply impossible to moderate by hand. Something like AutoMod may be the only way to instil some base level of civility in a chat that size.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards