Pixelated images, 'potato' cameras and pictures deliberately blurred to hide people's faces could become a thing of the past thanks to Google's latest algorithm development. The company's neural network can now take an image of just eight by eight pixels and use clever guesswork to work its way back to a relatively decent quality photo.

Traditionally, blurred or pixelated images required a lot of manual attention to improve. Photoshop has some anti-camera-shake filters you can use, or you can go down to the pixel level and correct the pictures by hand. But that's massively time-consuming and no good if you don't know what the captured image is supposed to look like. With Google's system, you don't need to (thanks Guardian).

The image on the left was the original input, while those on the right show various outputs by the algorithm.

Google describes the technique as having the algorithm “hallucinate” the missing details of the image. In essence, the system takes an educated guess about what each single pixel could represent and fills in additional pixels around it. Google's system was able to take 8 x 8 pixel images and turn them into 32 x 32 pixel images.
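To get a feel for how much the system has to guess, here is a minimal sketch of the simplest possible upscaler, which just repeats each input pixel. This is not Google's method; the function name `upscale_nearest` and the test pattern are made up for illustration. It only shows the arithmetic: every known pixel has to account for 16 output pixels.

```python
def upscale_nearest(image, factor):
    """Upscale a 2D grid of pixel values by repeating each pixel
    factor x factor times (nearest-neighbour, no guessing involved)."""
    out = []
    for row in image:
        # Stretch the row horizontally.
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # Repeat the stretched row vertically.
        for _ in range(factor):
            out.append(list(wide_row))
    return out


# Hypothetical 8 x 8 greyscale test pattern (values are arbitrary).
low_res = [[(x + y) * 16 for x in range(8)] for y in range(8)]
high_res = upscale_nearest(low_res, 4)

known = len(low_res) * len(low_res[0])     # 64 input pixels
needed = len(high_res) * len(high_res[0])  # 1024 output pixels
print(known, needed, needed // known)      # 64 1024 16
```

A naive upscaler like this adds no new detail at all, which is why the output still looks blocky. A learned model instead has to invent plausible values for the 15 extra pixels per input pixel.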

Adding a little blur to the edges makes for a pretty good-looking image, considering its source.

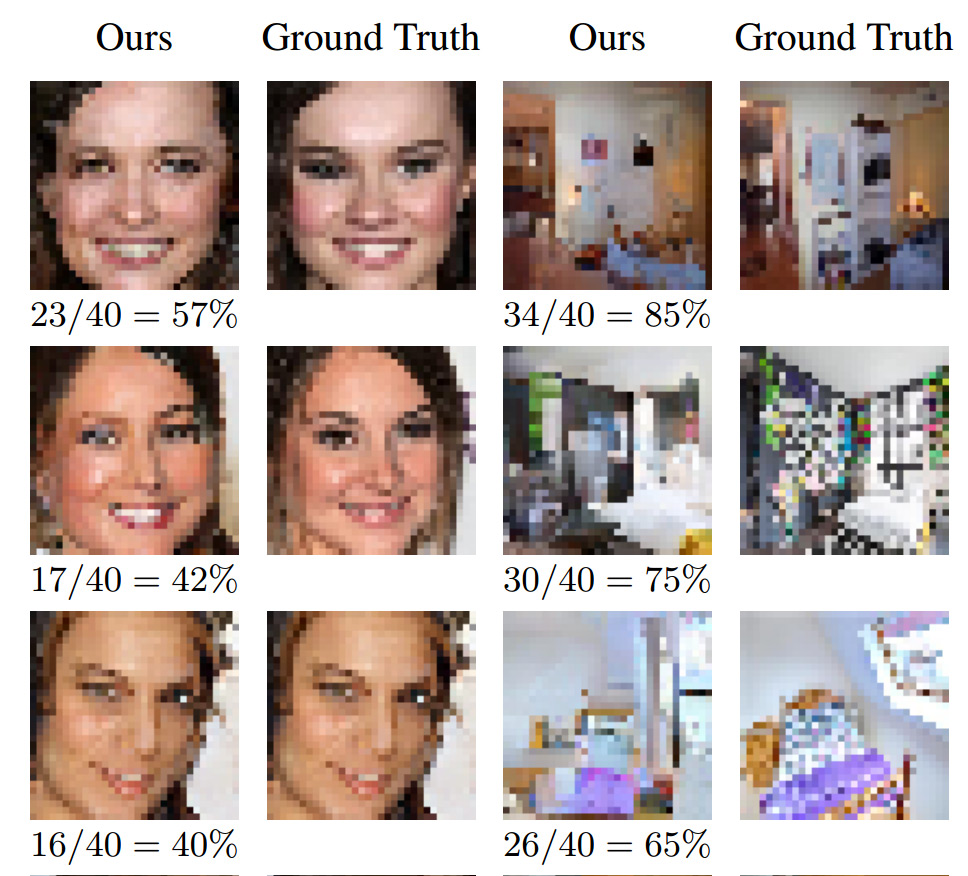

The images on the left were what Google's algorithm created, while those on the right were the original source images.

In its research paper, entitled Pixel Recursive Super Resolution, Google showed the ability to take images with no discernible details and create something that resembles a much higher quality version of the input image. This technique could theoretically be used to unblur the faces of family members in photos, automatically improve old photographs, or even help identify criminals from blurry, old security footage.

However, there are some concerns about using it for serious purposes. While the system can make educated guesses, it isn't always entirely accurate. In some cases, the faces that Google's system 'hallucinates' into existence are a little different from the people who were used for the test.
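The accuracy problem is built into the task: many different detailed images shrink down to exactly the same tiny image, so the original cannot be recovered with certainty. The sketch below illustrates this under the assumption that pixelation works by averaging 4 x 4 blocks; the function `downscale_mean` and both test images are hypothetical, and none of this is taken from Google's paper.

```python
def downscale_mean(image, factor):
    """Shrink a square 2D grid by averaging each factor x factor block."""
    size = len(image) // factor
    out = []
    for by in range(size):
        row = []
        for bx in range(size):
            block = [
                image[by * factor + dy][bx * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Two clearly different 32 x 32 images: a flat grey square
# and a fine checkerboard with the same average brightness.
flat = [[100 for _ in range(32)] for _ in range(32)]
checker = [[80 if (x + y) % 2 == 0 else 120 for x in range(32)]
           for y in range(32)]

small_flat = downscale_mean(flat, 4)
small_checker = downscale_mean(checker, 4)

print(flat == checker)              # False: the originals differ
print(small_flat == small_checker)  # True: the 8 x 8 versions are identical
```

Given only the 8 x 8 result, no algorithm can tell which of the two originals it came from, so any upscaler has to pick a plausible candidate rather than the true one.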


KitGuru Says: There's some very interesting potential here. I wonder, too, whether this sort of algorithmic method will make it possible to upscale a lot of old images in the future to something resembling high resolution.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


Here I thought we were finally going to get tech that would let us do what they always seem to be able to do in TV shows and movies, where they take a completely out-of-focus or just plain fuzzy picture and, wham bam, with a click of the mouse they clear it up so much that it is magically crystal clear, like it was a completely new picture. Which it is, but hey, that's TV and movie magic. lol


But this doesn't de-pixelate, which would imply reversing the pixelation. Obviously the resulting content is very different in meaning, even if similar elements, colors and locations are close, but this is inevitable given the method used.