Nvidia's Titan X Pascal has been dominating at the top of GPU performance charts for a little over seven months, with the gap to the Santa Clara-based GPU maker's second-in-line GTX 1080 being a sizeable one. With AMD's high-end competitors still not available for public adoption, the enthusiast market is ready for a GTX 1080 Ti GPU to offer Titan X-like performance at a price point closer to that of a high-end offering, rather than an ultra-high-end (borderline silly-money) one.

Along comes the GTX 1080 Ti. Take the GP102 GPU used in a Titan X Pascal – chop off one twelfth of certain areas, bump up the core clock speed by 63MHz and you get the GTX 1080 Ti. Oh, and let's not forget a £480 price reduction thrown in for good measure. Of course, there's more to it than that basic overview. The cooler has been tweaked, power delivery components are changed, and you now get 11 Gigabytes of faster 11Gbps GDDR5X VRAM versus the Titan X Pascal's 12 Gigs of 10Gbps GDDR5X. Reading between the lines, the expectation of slightly higher performance than a Titan X Pascal, driven by a higher core clock and faster memory, for a £699 asking fee looks like a good proposition.

With Nvidia's announcement of the GTX 1080 Ti last week came a whole round of product stack shake-ups. Titan X Pascal said so-long, GTX 1080 got a $100 price shave which also pushed down GTX 1070 fees, and new GTX 1080 and GTX 1060 models with faster VRAM were shown to be inbound. ‘What was the driving force behind this series of changes?’ I hear you asking. No, AMD hasn't released a new high-end – Vega – GPU (yet). Instead, it was the promise of Titan X-level performance from the $699 GTX 1080 Ti.

If you have been craving a true 4K60 single-GPU solution and simply could not justify spending more than £1k on a Titan X Pascal, you may want to pay attention to the £699 GTX 1080 Ti (yes, UK punters will pay a straight dollar-to-pound amount). Nvidia is claiming that the new 11GB card delivers 35% higher performance than a GTX 1080, making it the GPU vendor's biggest leap for a Ti-branded card. Single-card high-resolution and high-refresh-rate gaming looks to have been made more affordable, and that's even before AMD has entered the market with Vega competition. Who'd have thought?

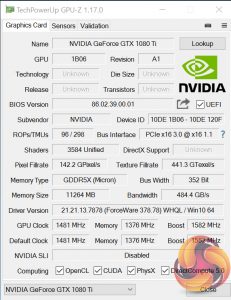

Nvidia is calling GTX 1080 Ti ‘the ultimate gamer GPU’. Substantiating those claims is the same 471mm² GP102 GPU core found on the company's Titan X Pascal flagship consumer graphics card, which is fabbed using a 16nm process. Nvidia adjusts the chip by disabling certain components, which results in a one-twelfth reduction to the number of ROPs, the amount of L2 cache, the associated memory bus width, and the quantity of VRAM. Those figures sit at 88 ROPs (vs Titan XP's 96), 2816 KB of L2 cache (vs Titan XP's 3072 KB), a 352-bit memory bus (vs Titan XP's 384-bit), and 11GB of GDDR5X VRAM (vs Titan XP's 12GB).
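The one-twelfth cut can be checked with simple arithmetic. The sketch below is illustrative only: the dictionary keys and the `cut_down` helper are hypothetical names, the inputs are the Titan XP figures quoted above, and it assumes each resource scales linearly with the fraction of the chip left enabled.

```python
from fractions import Fraction

# Full GP102 configuration as used on Titan X Pascal (figures from the text above)
TITAN_XP = {"rops": 96, "l2_kb": 3072, "bus_bits": 384, "vram_gb": 12}

# Assumption: the GTX 1080 Ti keeps exactly eleven twelfths of each resource
FRACTION_KEPT = Fraction(11, 12)


def cut_down(config, kept=FRACTION_KEPT):
    """Scale every resource by `kept`, insisting the result is a whole number."""
    result = {}
    for name, value in config.items():
        scaled = value * kept  # int * Fraction gives an exact Fraction
        if scaled.denominator != 1:
            raise ValueError(f"{name}={value} does not divide evenly")
        result[name] = int(scaled)
    return result


gtx_1080_ti = cut_down(TITAN_XP)
print(gtx_1080_ti)
```

Using `Fraction` rather than floats keeps the check exact, so any resource that did not divide cleanly by twelve would raise an error instead of silently rounding.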

With fewer on-chip components fighting for power and cooling comes headroom in the 250W TDP budget. Nvidia re-invests that TDP headroom by bumping the base clock up by 63MHz to 1480MHz, which raises the rated GPU Boost 3.0 frequency by 51MHz to 1582MHz. This takes the GTX 1080 Ti's compute power to more than 11 TFLOPS.
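The "more than 11 TFLOPS" figure can be reproduced from the core count and the rated boost clock. A minimal sketch, assuming the common convention that each CUDA core completes one fused multiply-add (counted as two floating-point operations) per clock; `fp32_tflops` is a hypothetical helper, not an Nvidia tool.

```python
def fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Peak single-precision throughput in TFLOPS.

    Assumes each CUDA core retires one fused multiply-add (two FLOPs) per clock.
    """
    flops_per_clock = 2 * cuda_cores
    return flops_per_clock * clock_mhz * 1e6 / 1e12


# Rated GPU Boost clocks from the specification table
gtx_1080_ti = fp32_tflops(3584, 1582)
titan_xp = fp32_tflops(3584, 1531)

print(f"GTX 1080 Ti: {gtx_1080_ti:.2f} TFLOPS")
print(f"Titan X Pascal: {titan_xp:.2f} TFLOPS")
```

On these assumptions the script reports about 11.34 TFLOPS for GTX 1080 Ti and 10.97 TFLOPS for Titan X Pascal; real-world throughput depends on the clock GPU Boost actually sustains.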

Faster GDDR5X memory is also used. Compared to the Titan XP and its 10Gbps VRAM, the GTX 1080 Ti uses 11Gbps GDDR5X that Nvidia says it was able to achieve by working with DRAM partners to use advanced equalisation techniques, enhance signalling quality, and refine the data channel to the DRAM. The 10% increase in memory data rate versus Titan XP more than offsets GTX 1080 Ti's 8.3% reduction in bus width, giving it slightly greater memory bandwidth: 484GB/s against Titan XP's 480GB/s.
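The bandwidth figures follow from bus width and per-pin data rate. A minimal sketch, assuming peak bandwidth is simply bytes per transfer (bus width divided by eight) multiplied by the effective data rate in Gbps; `bandwidth_gb_s` is a hypothetical helper name.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times per-pin data rate."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_gbps


gtx_1080_ti = bandwidth_gb_s(352, 11)  # 11Gbps GDDR5X on a 352-bit bus
titan_xp = bandwidth_gb_s(384, 10)     # 10Gbps GDDR5X on a 384-bit bus

# Relative changes quoted in the text
speed_gain = 11 / 10 - 1    # data rate increase
width_loss = 1 - 352 / 384  # bus width reduction (one twelfth)

print(f"GTX 1080 Ti: {gtx_1080_ti:.0f} GB/s, Titan X Pascal: {titan_xp:.0f} GB/s")
print(f"data rate +{speed_gain:.1%}, bus width -{width_loss:.1%}")
```

This prints 484 GB/s versus 480 GB/s, and +10.0% versus -8.3%, matching the figures above.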

| GPU | Nvidia GTX 1060 6GB | Nvidia GTX 1070 | Nvidia GTX 1080 | Nvidia Titan X (Pascal) | Nvidia GTX 1080 Ti |
|---|---|---|---|---|---|
| GPU Name | GP106 | GP104 | GP104 | GP102 | GP102 |
| Streaming Multiprocessors | 10 | 15 | 20 | 28 | 28 |
| GPU Cores | 1280 | 1920 | 2560 | 3584 | 3584 |
| Base Clock | 1506 MHz | 1506 MHz | 1607 MHz | 1417 MHz | 1480 MHz |
| GPU Boost Clock | 1709 MHz | 1683 MHz | 1733 MHz | 1531 MHz | 1582 MHz |
| Total Video Memory | 6GB GDDR5 | 8GB GDDR5 | 8GB GDDR5X | 12GB GDDR5X | 11GB GDDR5X |
| Texture Units | 80 | 120 | 160 | 224 | 224 |
| Texture Fill Rate | 120.5 GT/s | 180.7 GT/s | 257.1 GT/s | 317 GT/s | 331.5 GT/s |
| Memory Clock | 8 Gbps effective | 8 Gbps effective | 10 Gbps effective | 10 Gbps effective | 11 Gbps effective |
| Memory Bandwidth | 192.2 GB/s | 256.3 GB/s | 320 GB/s | 480 GB/s | 484 GB/s |
| Bus Width | 192-bit | 256-bit | 256-bit | 384-bit | 352-bit |
| ROPs | 48 | 64 | 64 | 96 | 88 |
| Manufacturing Process | 16nm | 16nm | 16nm | 16nm | 16nm |
| TDP | 120 W | 150 W | 180 W | 250 W | 250 W |
| Power Connector(s) | 1x 6-pin | 1x 8-pin | 1x 8-pin | 1x 6-pin + 1x 8-pin | 1x 6-pin + 1x 8-pin |
| Current UK Starting Price | Approx. £230 | Approx. £360 | Approx. £470 | £1,179 (End of Line) | £699 |
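The texture fill rate row appears to be texture units multiplied by base clock. The sketch below checks that reading against every column; it assumes one texel per texture unit per clock, and `texture_fill_rate` and `CARDS` are hypothetical names holding figures copied from the table.

```python
# (texture units, base clock in MHz, table value in GT/s), copied from the table
CARDS = {
    "GTX 1060 6GB": (80, 1506, 120.5),
    "GTX 1070": (120, 1506, 180.7),
    "GTX 1080": (160, 1607, 257.1),
    "Titan X (Pascal)": (224, 1417, 317.0),
    "GTX 1080 Ti": (224, 1480, 331.5),
}


def texture_fill_rate(texture_units: int, clock_mhz: int) -> float:
    """Gigatexels per second, assuming one texel per texture unit per clock."""
    return texture_units * clock_mhz / 1000


for name, (units, base_mhz, table_value) in CARDS.items():
    computed = texture_fill_rate(units, base_mhz)
    # The table rounds to one decimal place (Titan X Pascal to a whole number)
    print(f"{name}: computed {computed:.2f} GT/s, table {table_value} GT/s")
```

Every computed value lands within 0.5 GT/s of the table entry, which supports the base-clock reading; a boost-clock calculation would give noticeably higher numbers.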

Compared to Titan XP, the GP102 implementation for GTX 1080 Ti retains the 28 Streaming Multiprocessors (SMs), 3584 CUDA cores, and 224 Texture Units. It is a 12-billion-transistor, 471mm² GPU fabbed on TSMC's 16nm process technology. The 250W TDP calls for 6-pin plus 8-pin PCIe power connectors and a cooling solution that can keep pace up to GPU Boost 3.0's default (user-adjustable) thermal throttling point of 83°C (the GPU's thermal threshold is 91°C).

GTX 1080 Ti isn't quite GTX 1080-and-a-half, as Titan X Pascal could crudely be described, but it isn't far off that level of raw numerical data on the specification sheet, either. In return for a 70W (39%) increase in TDP versus GTX 1080 and a ~£230 higher price tag, adopters get the promise of 35% higher performance at the GTX 1080 Ti Founders Edition's operating specifications.
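The TDP and pricing trade-off above can be expressed as ratios. This is a back-of-the-envelope sketch: it takes Nvidia's 35% performance claim at face value and uses the approximate UK prices from the specification table, so the per-watt and per-pound figures are indicative only.

```python
# Figures from the specification table (UK prices are approximate starting prices)
GTX_1080 = {"tdp_w": 180, "price_gbp": 470}
GTX_1080_TI = {"tdp_w": 250, "price_gbp": 699}

# Nvidia's claimed performance uplift of the GTX 1080 Ti over the GTX 1080
CLAIMED_UPLIFT = 0.35

tdp_delta_w = GTX_1080_TI["tdp_w"] - GTX_1080["tdp_w"]
tdp_increase = GTX_1080_TI["tdp_w"] / GTX_1080["tdp_w"] - 1
price_delta_gbp = GTX_1080_TI["price_gbp"] - GTX_1080["price_gbp"]
price_increase = GTX_1080_TI["price_gbp"] / GTX_1080["price_gbp"] - 1

# Relative performance per watt and per pound, taking the 35% claim at face value
perf_per_watt = (1 + CLAIMED_UPLIFT) / (1 + tdp_increase)
perf_per_pound = (1 + CLAIMED_UPLIFT) / (1 + price_increase)

print(f"TDP: +{tdp_delta_w} W ({tdp_increase:.1%})")
print(f"Price: +£{price_delta_gbp} ({price_increase:.1%})")
print(f"Performance per watt vs GTX 1080: {perf_per_watt:.2f}x")
print(f"Performance per pound vs GTX 1080: {perf_per_pound:.2f}x")
```

With these inputs, performance per watt comes out at roughly 0.97x and performance per pound at roughly 0.91x that of GTX 1080, i.e. the extra performance is bought at a slightly worse rate on both counts.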

For more details on the technologies found in Nvidia's Pascal-based graphics cards, please read our launch review of the GTX 1080.

Nvidia rates the GTX 1080 Ti Founders Edition Boost frequency at 1582MHz. During our testing, we observed GPU Boost 3.0 taking the core speed as high as around 1860MHz before either power- or thermal-induced throttling kicked in. We would not be surprised to see after-market, custom-cooled, factory-overclocked cards from board partners hitting more than 1900MHz in gaming usage out of the box.
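To put the observed clocks in context, the headroom over the rated boost clock is a one-line calculation. A minimal sketch: `headroom` is a hypothetical helper, 1860MHz is the approximate peak observed in testing, and 1900MHz is the speculative partner-card figure from the paragraph above, not a measured result.

```python
RATED_BOOST_MHZ = 1582  # Nvidia's rated GPU Boost 3.0 clock for the Founders Edition


def headroom(mhz: int, rated: int = RATED_BOOST_MHZ) -> tuple[int, float]:
    """Return (absolute MHz, fractional) headroom over the rated boost clock."""
    delta = mhz - rated
    return delta, delta / rated


clocks_mhz = {
    "observed peak (Founders Edition)": 1860,
    "speculative partner-card figure": 1900,
}

for label, mhz in clocks_mhz.items():
    delta, fraction = headroom(mhz)
    print(f"{label}: {mhz} MHz, +{delta} MHz ({fraction:.1%}) over rated boost")
```

The observed peak works out to about 17.6% above the rated boost clock, and 1900MHz would be about 20.1% above it.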

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Thanks for the review. In the concluding remarks you state “it should suffice for 5K usage”. Well, older cards also suffice for 5K usage, just not 5K gaming. I would be very grateful if you could expand on your thoughts in a meaningful way. Why aren’t there any 5K gaming tests? There have been 5K monitors for several years now, yet powerful graphics cards are regularly tested with 1080p monitors, as if that means something to anybody.

1080p monitors are the most widely used by an enthusiast audience, and for those moving to a higher resolution, 4K seems the way to go, as a single card can now hold a 60fps minimum with high image quality settings. 5K would push the demand higher, and it's still very niche. I agree though, I'd like to see a few 5K tests just for shits and giggles.

Well, I would only partially agree, and with the more obvious part. Over the past ~2 years we have regularly seen 4K gaming tests even though 4K60 was nowhere to be seen, for example. And if a technology is trying to break some barriers, why not test it in quite an obvious way? 5K monitors are great for gaming: no lag, no 5000€ price, etc.

Hmmm

Given that NVidia has had almost a year to come out with this “New” card, and given its still sky-high price of $700 (700 bucks is only “cheap” to the people selling these cards...), it is an underwhelming release at best. And take a look at the 250 Watt power demand, which will surely surge past 300 watts when overclocked. How quickly the NVidia fanboys (and, if the 10 reviews I have seen thus far are any indication, the online PC reviewer establishment as well) forget the endless whining and complaining about the R9 290X's power and heat performance. In this regard the 1080 Ti is a giant step backward.

So basically, if you have a GTX 1080 right now and are playing at 1080p, there really isn’t any compelling reason to shell out $700 to get a 15% bump in performance. Not to mention you may have to upgrade your power supply and case cooling. And if you look at the performance delta in aggregate, even at 1440p the cost/benefit isn’t stellar either.

These numbers look eerily similar to AMD’s Ryzen 7 gaming numbers. Things don’t get really interesting until you get to 4K. However, right now, if 4K is where you play, this is the best card on the market, at least until Vega shows up. But the fact remains that the 1080 Ti is nothing more than year-old technology in the form of a cut-down Titan. We really need AMD to hit Vega out of the park to get NVidia off its butt……

I’m an Nvidia guy; I bleed green for sure now that the Shield TV is a thing. Back in the days of the 7970, I was all about AMD. We need Vega to be a hit, for Nvidia fans and for AMD. If it can compete with Nvidia's high-end offerings, it will make competition between the two companies fierce, and in a world where the consumer is king, that is a good thing.

Thank you for turning AA off in your 4K benchmarks, which is something most sites don’t do. It's annoying when they run benchmarks with things like MSAA on at 4K when it isn’t needed, and you can’t get an accurate representation of real-world 4K performance.

Could be worse, they could be telling you 20fps is all you need as it's ‘super smooth’, as eteknix just did today in their GTX 1080 Ti ‘CPU’ ‘editorial’. Biggest AMD shill piece on Ryzen I have ever read. Last time I am going there!

Thanks for pointing that out. By ‘usage’ I meant ‘gaming’, and the text has now been updated. With that said, multi-monitor 5K work can command a lot of VRAM too if you use programs such as Photoshop and Lightroom. I haven’t tested with a card other than the 6GB GTX 980 Ti, though, and I haven’t tested whether it’s a case of Adobe simply caching data into spare VRAM capacity rather than a genuine performance enhancement.

Unfortunately, I had very little time to test this card due to shipping delays. So I wasn’t able to gather data for 5K gaming. Also, the limited appeal (to gamers) of the current handful of 5K monitors meant that it wasn’t a worthwhile compromise to sacrifice the gathering of other performance data in favour of 5K tests.

“AMD Graphics cards were benchmarked with the AMD Crimson Display Driver 16.11.4.”

Uhhhh, why were the AMD cards using drivers from 4 months ago?

I would imagine time is a problem, as Luke already said in another post. There is quite a lot of work involved in these, and I noticed he has also been doing Ryzen reviews over the last 2 weeks. Guess the guy needs to sleep sometime. Has anyone ever told you your avatar looks like a mafia don?

I’m sorry but that’s how nvidia is rolling

https://www.youtube.com/watch?v=YzSUzMGSMEQ

I don't see your 35% gain. In some games the frame rate gain is 4 fps and in some 15 to 20 frames; that amounts to 2 to 20%, not the 35% you are saying.

OK, OK, I have to admit it is a very fast card; it just squeaks by as a good 4K gaming card, which is pretty impressive. I still say they held onto the card for too long, probably only because, when I had the money to buy it, they were thinking ‘when the heck is AMD gonna release that Vega thing so we can start cashing in on Ti goodness’…lol

No, no one ever did. Maybe because the ladies like this picture? Anyway, I turned out to be the darkest of the family, considering my brother is blond and my sister is blond with blue eyes. So considering I’ve been called Turkish before, being called south Italian is probably an improvement, since I’m Portuguese. And I look just like my father, except there’s a picture of him when he was 8 and blond, while I was never blond.

Umm, it means something to me. I much prefer maxing out my games at 1080p 144Hz+, so I’m thankful for 1080p benchmarks with super powerful cards.

Of course, but isn’t your 144Hz+ gaming limited by the CPU? But yes, my post was over the top, no doubt. I wanted to make the point that we regularly read about Titan X, 10-core i7 CPUs and similar tech with pleasure, because we’re enthusiasts. So even if I am not going to buy it because of its price/performance, I like to see a review. 5K gaming, on the other hand, isn’t Martian Hyperdrive, yet it is easily dismissed because it is not ‘enthusiast’ but ‘niche’, although there really is nothing wrong with high resolution; those are wonderful panels. However, Titan X or a 10-core Intel CPU are not regarded as ‘niche’, they are ‘enthusiast’... by now you certainly get my point 🙂

Hi.

Which games are you referring to? The gains tend to be more visible at higher resolutions (due to reduced CPU bottlenecking), and at 4K they are more than 30% in Deus Ex, GoW4, GTA V, Metro: LL, ROTTR, Witcher 3, and Total War: Warhammer.
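Much of the disagreement in this thread comes down to absolute frame-rate deltas versus relative gains. The sketch below uses made-up frame rates (not results from this review) to show that the same +4 fps can be a 20% or a 2.5% uplift depending on the baseline; `uplift` is a hypothetical helper name.

```python
def uplift(new_fps: float, old_fps: float) -> float:
    """Relative performance gain of the new card over the old one."""
    return new_fps / old_fps - 1


# Hypothetical (new, old) frame rates, chosen only to show why fps deltas mislead
examples = [
    (24.0, 20.0),    # +4 fps at a low frame rate
    (164.0, 160.0),  # +4 fps at a high frame rate
    (54.0, 40.0),    # +14 fps
]

for new, old in examples:
    print(f"{old:.0f} -> {new:.0f} fps: +{new - old:.0f} fps = {uplift(new, old):.1%}")
```

Because the gain is measured relative to the baseline, a card can add few frames per second in a CPU-limited, high-fps game and still post a large percentage gain where frame rates are low, such as at 4K.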

The bulk of the data was gathered at that time, hence why the Nvidia cards (except GTX 1080 Ti, due to its later launch) also use a driver of the same period. We did some internal re-testing of the new Nvidia driver with the Titan X Pascal and found its performance changes to be relatively minor except in Ashes of the Singularity at 4K. So we decided that it was best to retain the older data for comparison as I wasn’t given enough time with the card to do full re-testing of a stack of GPUs.

AMD has been destroyed completely.

Ryzen is not a good processor for gaming and they have no answer to Nvidia.

Total AMD failure!

I really do hope AMD Radeon are able to pull it out of the bag with the RX Vega later this year, as from the limited releases we have seen so far it looks promising. Now if they can also replicate the same power savings they have achieved with their latest hardware, then Intel AND Nvidia will seriously need to rethink their own tech and pricing structure. It will be a much-needed boost to the desktop market in my opinion.

Sorry, a question, and sorry for my bad English: for The Witcher it says AA (above), but above it says nothing about AA.

I JUST SAW A REAL REVIEW on YouTube that rated this card at real numbers, and they are 21.5% over the 1080, not the lies about 35%. They said you can only get 35% by overclocking to the max, no other way.

Stock speeds are 21.5% faster, still good, but not the lies that NVidia is saying.

I hope so too, but look at the facts: AMD likes to hype. Ryzen is a failure at gaming. I have one, and overclocking is a no-go: I tried it, it crashes after one or two days, and when you reboot it says that overclocking has failed. I hope it's just a BIOS fix. In video encoding I'm seeing it run 50% slower than the 7700 Kaby Lake. From what I know about AMD, this card will be 80% of a 1080.

The 1080 Ti is 21 to 35% faster than the 1080; I don’t see AMD matching anywhere near this.

The Fury is 50% of the 1080. The most they can do, I think, will be 30 to 40% better, and that will still be slower than the 1080. I'm only guessing.

I understand your frustration. IPC for the Ryzen chips is comparable to Intel's, and OC aside I think they will and do provide great value for money in today’s market. The BIOS updates you mention will come thick and fast very soon, improving stability and performance. Gaming is an issue for many, but not the issue that the media would have you believe, as a very good FPS is still there, especially in 4K. The problem we have at the moment is that the vast majority of code favours the Intel platform, and while updates will be forthcoming, I trust that newer games will be able to utilise the undisputed abilities of the Ryzen chips off the bat. Now as for RX Vega: AMD advertised a 40% uplift on their CPUs and gave us 52%, and IF the same were true for Vega then we are already on to a winner of a GPU. I also believe AMD have always given more “bang for your buck”, so fingers crossed we shall see exactly that later this year.

Hi. The settings screenshots above The Witcher 3 charts show that AA is set to ‘on’ (the third screenshot). The ‘above’ text refers to the tested settings being shown in screenshots above the charts.

Oh, yes, I didn't see them, thanks.

Is your mobo made by Asus?

I can't believe none of those cards can maintain a minimum of 60fps at 1080p at all times…