While AMD's Ryzen has disrupted Intel's long-running hegemony in the CPU market, AMD has been relatively subdued in the GPU market of late, resulting in Nvidia's continuing dominance of market share, particularly at the mid-range and beyond. AMD has today taken steps to change this with two “new” GPUs for its RX 500 series, the RX 570 and RX 580, hoping to attract new customers in the mid-range market.

Enthusiasts expecting to see a brand new graphics architecture (or product) may be disappointed, since the RX 580 and 570 are, for all intents and purposes, lifted from the RX 400 series with only incremental tweaks. The RX 580, the focus of this review, is based on the 14nm Polaris RX 480 while the RX 570, also launching today, is based on the RX 470. Enthusiasts looking for something truly new will have to continue waiting for AMD Vega, which is expected at some point later this year.

This launch bears striking similarities to AMD's release of the R9 390X and R9 390 back in 2015, which were based on the R9 290X and R9 290, respectively. AMD faced criticism at the time for the move since it had been very active in rebranding and re-releasing existing GPUs as new products. AMD waved away criticism of the R9 390X and R9 390, stating the GPUs were new given the frequency increases, increased video memory and power management tweaks.

The RX 580 and RX 570 are new insofar as they are built on a refined 14nm FinFET process to achieve better typical clock speeds. AMD has also made some tweaks to the power management to reduce power consumption and increase power efficiency under a number of scenarios including multi-monitor, multimedia playback and system idle.

AMD has been able to achieve this by adding a third, intermediate memory state, which sits alongside the two existing memory states. To over-simplify, the current Polaris GPU effectively has two memory states, low and high, and most GPU activities (including having a second display) alter the memory state from low to high, increasing power consumption in the process. The new intermediate memory state means the refined Polaris GPU has low, medium and high states. In many cases a load activity can now raise it to medium rather than straight to high, resulting in lower overall power draw.
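To make the two-state versus three-state idea concrete, here is a minimal sketch of the selection logic described above. It is not AMD's implementation: the `demand` measure, both thresholds and every name in it are hypothetical, chosen only to show how an intermediate state lets a light load avoid the high state.

```python
from enum import Enum


class MemState(Enum):
    LOW = 0
    MEDIUM = 1
    HIGH = 2


def pick_state(demand, has_medium, medium_threshold=0.1, high_threshold=0.5):
    """Choose a memory state for a hypothetical 0..1 bandwidth demand.

    The thresholds are illustrative assumptions, not published figures.
    """
    if demand >= high_threshold:
        return MemState.HIGH
    if demand >= medium_threshold:
        # Without an intermediate state, any non-trivial load jumps to HIGH.
        return MemState.MEDIUM if has_medium else MemState.HIGH
    return MemState.LOW


# A light load such as a second display (assumed demand of 0.2):
print(pick_state(0.2, has_medium=False))  # two-state behaviour
print(pick_state(0.2, has_medium=True))   # three-state behaviour
```

Under these assumed numbers the same light load lands in the high state on the two-state design and in the medium state on the three-state design, which is the power saving the paragraph describes.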

AMD is using the RX 500 series launch as a platform to introduce a new feature it's calling Radeon Chill, which effectively reduces the frame-rate when the user is in-game but idle or AFK (away from keyboard) and then increases the frame-rate again when the user becomes active. It also caps “excessively high” frame rates to further reduce power consumption.

AMD doesn't specify how it achieves the reductions in frame-rate; presumably it does this through a clock speed reduction, but the end result is still lower power consumption. The setting can be turned on or off from the AMD Settings included in the driver package, and a long list of games is initially supported, including Counter Strike: Global Offensive, League of Legends and Dota 2.
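Since AMD does not document the mechanism, the following is only a sketch of the behaviour as described: a lower frame-rate target while the player is idle, and a ceiling on "excessively high" frame rates while active. The function names, the 40 and 144 fps limits and the two-second idle timeout are all assumptions made for illustration.

```python
def chill_target_fps(seconds_since_input, min_fps=40, max_fps=144, idle_after=2.0):
    """Return the frame-rate cap for the current activity level.

    All default values are hypothetical, not AMD's settings.
    """
    if seconds_since_input >= idle_after:
        return min_fps  # player idle or AFK: drop to the floor
    return max_fps      # player active: only cap excessive frame rates


def capped_fps(uncapped_fps, seconds_since_input):
    """Frame rate actually rendered once the cap is applied."""
    return min(uncapped_fps, chill_target_fps(seconds_since_input))


print(capped_fps(300, seconds_since_input=0.1))   # active, very high fps
print(capped_fps(300, seconds_since_input=10.0))  # idle
print(capped_fps(90, seconds_since_input=0.1))    # active, already below the cap
```

The cap only ever lowers the frame rate, so a game that already runs below the target is unaffected.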

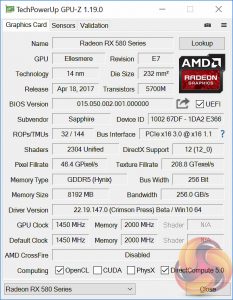

In this review we are assessing Sapphire's take on the RX 580, the Sapphire RX 580 Nitro+ OC Limited Edition graphics card with 8GB of video memory. Any KitGuru readers feeling a sense of déjà vu right now can refer back to our previous review of the Sapphire RX 480 Nitro+ OC graphics card to confirm their suspicions.

Sapphire has left its previous design mostly unchanged with the release of the RX 580 Nitro+ Limited Edition. That is no bad thing for prospective customers, since the Nitro+ cooler was already effective, with good build quality and a sturdy backplate. Clock speeds on the Sapphire RX 580 Nitro+ are more aggressive out of the box to reflect the higher clocking capability of the RX 580 versus the RX 480, which will result in more performance.

Our sample ran out of the box at 1450MHz on the core, up from 1342MHz on the RX 480 Nitro+ OC 8GB graphics card. That's a 108MHz increase in frequency, equating to 8%, while the memory remains unchanged at 2000MHz actual, 8000MHz effective.

Given that the RX 580 brings no Instructions Per Clock (IPC) improvement, we should expect the only additional performance the RX 580 brings over the RX 480 to stem from its increased frequency. If both were clocked identically then performance would be identical.

The out of the box frequency can be changed by toggling between two different BIOS modes using a switch. Silent sets the core at 1411MHz while Boost ups this to 1450MHz. Since it requires minimal user effort to enable the faster Boost setting, we tested throughout our review using this mode.
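The clock figures quoted above can be checked with a few lines of arithmetic. This sketch uses only the frequencies stated in the text (1342MHz for the RX 480 Nitro+ OC, 1411MHz and 1450MHz for the two RX 580 BIOS modes); the function name is our own.

```python
from typing import Tuple


def clock_uplift(old_mhz: float, new_mhz: float) -> Tuple[float, float]:
    """Return (absolute MHz gain, percentage gain) from old to new clock."""
    delta = new_mhz - old_mhz
    return delta, delta / old_mhz * 100.0


rx480_nitro = 1342
for mode, mhz in {"Silent": 1411, "Boost": 1450}.items():
    delta, pct = clock_uplift(rx480_nitro, mhz)
    print(f"{mode}: +{delta:.0f} MHz ({pct:.1f}%)")
```

With no IPC change, the Boost figure of roughly 8% is a sensible upper bound on the performance gain to expect over the RX 480 Nitro+ OC, and Silent mode gives up a little of that.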

| GPU | AMD RX 480 | AMD RX 580 | AMD RX 470 | AMD RX 570 | AMD R9 390 | Nvidia GTX 1050 Ti | Nvidia GTX 1060 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Streaming Multiprocessors / Compute Units | 36 | 36 | 32 | 32 | 40 | 6 | 10 |
| GPU Cores | 2304 | 2304 | 2048 | 2048 | 2560 | 768 | 1280 |
| Texture Units | 144 | 144 | 128 | 128 | 160 | 48 | 80 |
| ROPs | 32 | 32 | 32 | 32 | 64 | 32 | 48 |
| Base Clock | 1120 MHz | 1257 MHz | 926 MHz | 1168 MHz | Up to 1000 MHz | 1290 MHz | 1506 MHz |
| GPU Boost Clock | 1266 MHz | 1340 MHz | 1206 MHz | 1244 MHz | Up to 1000 MHz | 1392 MHz | 1708 MHz |
| Total Video Memory | 4096 or 8192 MB | 4096 or 8192 MB | 4096 or 8192 MB | 4096 MB | 8192 MB | 4096 MB | 6144 MB |
| Memory Clock (Effective) | 1750 (7000) or 2000 (8000) MHz | 2000 (8000) MHz | 1650 (6600) MHz | 1750 (7000) MHz | 1500 (6000) MHz | 1752 (7008) MHz | 2002 (8008) MHz |
| Memory Bandwidth | 224 or 256 GB/s | 256 GB/s | 211 GB/s | 224 GB/s | 384 GB/s | 112 GB/s | 192 GB/s |
| Bus Width | 256-bit | 256-bit | 256-bit | 256-bit | 512-bit | 128-bit | 192-bit |
| Manufacturing Process | 14nm | 14nm | 14nm | 14nm | 28nm | 16nm | 16nm |
| TDP | 150 W | 185 W | 120 W | 150 W | 275 W | 75 W | 120 W |
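The memory bandwidth figures in the table follow directly from the effective memory clock and the bus width: transfers per second multiplied by bytes per transfer. The short sketch below reproduces them from the table's own numbers; the function and variable names are ours.

```python
def memory_bandwidth_gbs(effective_mhz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s from effective clock and bus width."""
    bytes_per_transfer = bus_width_bits / 8
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9


# (effective memory clock in MHz, bus width in bits), taken from the table
cards = {
    "RX 580": (8000, 256),
    "RX 570": (7000, 256),
    "RX 470": (6600, 256),
    "R9 390": (6000, 512),
    "GTX 1050 Ti": (7008, 128),
    "GTX 1060": (8008, 192),
}

for name, (mhz, bits) in cards.items():
    print(f"{name}: {memory_bandwidth_gbs(mhz, bits):.0f} GB/s")
```

This also shows why the older R9 390 still leads on raw bandwidth: its 512-bit bus more than offsets its slower memory.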

In terms of positioning, nothing has changed for AMD: the RX 580, just like the RX 480, goes after the GTX 1060, with the smaller RX 570, like the RX 470 before it, going against the GTX 1050 Ti.

AMD has not been clear about whether or not the RX 480 and RX 470 will be officially discontinued from today. However, we imagine this will be the case, so there may be bargains to be had for buyers of “old” RX 480 and RX 470 stock.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

“AMD’s RX 580 struggles to overcome Nvidia’s GTX 1060 which is generally a better performer and considerably more power efficient. In short, the RX 580 is the less desirable option of the two, even if board partners like Sapphire have done a stellar job in presenting the graphics card in a refined and well-built package.”

Why this? The RX 480 already proved to overcome the GTX 1060 in many, many games after a few months of drivers optimisation, even in Dx11/OpenGL environments, and the RX 580 just makes better what’s already excellent. Also considering that more and more games will use Dx12 and Vulkan (Bethesda ones just to make an example), there’s a Whole lot of added value to those new AMD Polaris cards. I can’t find this review as an accurate one.

“even in Dx11/OpenGL environments” – yeah, no. It doesn’t.

And RX 580 costs $260-270, GTX 1060 6GB costs $240-250. Even if they are equal in performance, a 1060 is the better choice.

You can’t SLI the 1060. The 580 will crossfire. I don’t negotiate on this type of feature.

It increases resale value (people will still want this card for its crossfire performance while the 1060 will be dead)

They are not equal in performance if you could grab a used one for cheap. BUT crossfire is not for everyone. I enjoy it though.

You grabbing a second 580 will come up equal to me selling the 1060 and getting a 1070. And I’ll still have better performance than you.

Look at the benchmarks. You are under-estimating crossfire.

I admit crossfire is game specific. BUT the RX 480 performs like a GTX 1080.

In Shadow of Mordor, Thief and probably any game that supports crossfire.

You have to understand that its about getting the second card used and cheap.

http://www.tweaktown.com/articles/7770/amd-radeon-rx-480-crossfire-beating-geforce-gtx-1080-4k/index4.html

Not a single mention of Freesync or Vulkan? Embarrassing for a tech review.

Everybody who reads these articles already knows that RX 480 > GTX 1060. Only the non-readers (80 percent of buyers) think otherwise. But the point then is: why try to fool us?

Yeah, I was wondering why the 580 numbers were so far below the 1060 when we already knew the 480 was able to get very, very close to, match or beat the 1060. I noticed this on a few reviews and was like, WTH. I have found 2 reviews so far that have the Red Devil 580 beating the EVGA 1060 SSC or matching its frame rates, with maybe 1 slight loss. So I am wondering why the review numbers are so all over the place, like they were with the Ryzen CPUs.

I get that reviewers are bored with reviewing these cards because of the very small performance difference. But it seems a lot of sites' numbers are off or suspect. It also depends on the games the reviewer uses; some work better on one platform than they do on the other, and vice versa. In the 2 reviews that had more stable numbers, the game testing database was huge; it showed all cards with a lot of the games we actually play, not just a hand-picked few.

From extensive research I wouldn’t buy a 1060 over a rx480, let alone something that improves on it.

If you play mostly older, DX11 games, the GTX 1060 is faster. If you play new, and DX12/Vulkan titles, the 480 and the 580 especially is faster, sometimes significantly faster (look at DOOM and Deus Ex: MD).

So it’s just a 192 bit vs 256 bit eh?

The RX 580 is beating the GTX 1060 in DX12. Also, if you have an unlocked Haswell or newer i5 or i7, clock the CPU to 4.5 GHz and the RX 580 will start to be on par with the GTX 1060 in DX11 games.

Basically, in DX11 AMD GPUs create a CPU bottleneck. Check the Digital Foundry videos and you will see the RX 580 having higher FPS; the reason is what I have mentioned. AMD cards can handle more AA and AF than Nvidia cards.

I can only assess based on the results I have achieved; I have no interest in pandering to AMD or Nvidia. You can see our test system configuration, the driver versions and the tests we are using. For whatever reason, Nvidia’s GTX 1060 (a stock card, I might add) was a solid performer throughout our testing in its stock configuration, while the AMD RX 580 was heavily overclocked. Perhaps with a different selection of games and tests the result may be slightly different. If you have any constructive suggestions I’m certainly open to dialogue. Thanks, Ryan.

I’m not trying to fool anyone; I’m sorry you feel that way. I disagree with you that readers agree that the RX 480/580 is a better card. If you look at the most recent Steam Hardware Survey results, 4.45% of Steam users are using a GTX 1060 compared to 1.05% using an RX 480. I don’t personally see Vulkan as being any more significant than Mantle was. Ultimately, DirectX is where the game is at, but if Vulkan takes off then sure, it will become more important. However, as it stands Vulkan is relatively insignificant, so to base a review’s judgement on performance in an API that’s yet to achieve significant adoption would be jumping the gun. No, I didn’t mention FreeSync, but what’s changed? It supports FreeSync just like the RX 480, and Nvidia’s GTX 1060 supports G-Sync. This isn’t a debate over adaptive refresh rate display technologies.

We retested every single card with the latest manufacturer drivers using the latest versions of all games and benchmarks. I’m happy to take onboard constructive criticism but I don’t see any valid criticism here, just an accusation of our testing being “suspect” when it isn’t.

I appreciate your point of view and will definitely look into it. I think it’s interesting if this is the case, but not all gamers have crazy fast CPUs, so I believe our CPU is fairly representative of, if not better than, what an average user will have. We tested a number of DX12 titles and the gap between the GTX 1060 (stock) and this heavily overclocked RX 580 wasn’t that large.

Just an observation. But tbh the FPS figures vary widely for this same GPU across all the reviews around. It seemed to me this was the only issue.

Yeah, it may have come off as bad; it was not meant to be. The numbers from site to site are all over the place, is what I meant. We need review sites to develop a standard for testing, with a set of guidelines that are followed, so the numbers match up more closely from site to site. Of course a different CPU or memory will change the scores either up or down, but the standing of each card would be about the same, just with more or less FPS. I think testing also needs to have Max, Avg and Min, and of course the new set of numbers as well; I don’t remember what they are called as I do not pay attention to those at all myself.

I guess it comes down to what game you bench.

The problem with the min and max frame rates is that in most benchmark tests these are all over the place (hence why I exclude most of them from the graphs), you end up with a lot of noise. Most benchmarks will literally measure the minimum or maximum framerate for any 1 second interval across the test and present those. These results are junk and you can end up with cards like a GTX 970 having a higher min than a GTX 1080Ti in one run of the test but not in another. The variability between runs can be so great you’d need to run each benchmark about 10-15 times to smooth out this erratic variation.

Now there are technically ways to get around this (min 0.1%, min 1%) but these exponentially increase the amount of time and effort required to test cards. This is because most benchmarks don’t record this, you’d have to record this separately by using something like Fraps, then feeding all the frame times into a spreadsheet to calculate it.
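For readers curious what that spreadsheet step involves, here is a rough sketch of computing the average frame rate and the "1% low" and "0.1% low" figures from a list of per-frame times. It assumes the frame times have already been exported as milliseconds (the export format is not covered here), and it uses one common definition of the lows, the average of the slowest 1% or 0.1% of frames; other sites define these metrics differently.

```python
from statistics import mean


def frame_stats(frame_times_ms):
    """Summarise per-frame render times given in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    slowest_first = sorted(frame_times_ms, reverse=True)

    def low_fps(divisor):
        # Average the slowest 1/divisor of frames (at least one frame).
        count = max(1, len(slowest_first) // divisor)
        return 1000.0 / mean(slowest_first[:count])

    total_seconds = sum(frame_times_ms) / 1000.0
    return {
        "avg_fps": len(frame_times_ms) / total_seconds,
        "low_1pct_fps": low_fps(100),
        "low_0.1pct_fps": low_fps(1000),
    }


# Synthetic run: 990 smooth frames at 10 ms plus 10 stutters at 50 ms.
sample = [10.0] * 990 + [50.0] * 10
for name, value in frame_stats(sample).items():
    print(f"{name}: {value:.1f}")
```

In this synthetic run the average works out to about 96 fps while the 1% low is 20 fps, which illustrates how a handful of stutters can hide behind a healthy average.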

I disagree that we need a standard testing methodology. I think the fact the scope of testing is so wide means different sites can specialise on different things. Some sites can focus on the technical elements (frame times, latencies, min 0.1%, min 1%, etc) or just on the average frame rates which most gamers care about.

One thing I’ve learnt working in this industry for 6 years now is that it is impossible to write a review that makes everyone happy!

Why the hell are you using SSAA? And even at 4k?!

You are right about not being able to please everyone. I have seen reviewers go out of their way to try, but there is always someone who says, oh, you should have used x hardware or run at y MHz, etc.

You would know more about the inner working of getting the numbers for us to see in the review for sure. I still think there should be some sort of guidelines all sites follow even if there are different ones for different ways to test but the reviewer would have to put that in their review. I just think this way because of the vast amount of conflicting data we are seeing from review to review these days.

One site has a card or CPU beating everything else, then on the next site that same card or CPU is behind because different games were used, or it ran at a different clock speed, or the competing hardware was clocked to its max while the review hardware was at stock speed. I know closing the door somewhat on the way testing is done kind of restricts how tests are run, but in the end it gives a clear picture of how the reviewed hardware performs, and we don’t get the extreme random numbers we see from site to site like we do now.

I like reading reviews on tech; it gets me more informed so I can help my customers choose their new hardware when they get upgrades or a new system. Again, I did not mean to come off negative in my first post. I know you guys do a lot of work on your reviews, and they are great and a very nice read. Thanks

But FreeSync monitors are not expensive, not even close to G-Sync monitors. You can buy an Asus VG245H for $179, or find some other for less. The RX 580 is a way more future-proof purchase than the GTX 1060, because of DX12, Vulkan, and cheap FreeSync monitors.

Why lie? The price for the 580 is $200 for 4gb and $240 for 8gb.

Also, this release has pushed the price of the 480 way down. I’ve seen the 480 8GB for as low as $160. So, if you’re judging by performance per dollar, the 480 is actually the better choice.

At the time of writing the comment, which was almost 2 weeks ago, those were the prices. Don’t call me a liar when you are commenting after a lot of time has passed.

actually because freesync doesnt require proprietary hardware, it is the cost of a standard monitor. almost all lg monitors have freesync other than their super budget ips range.

What’s the difference with using Nvidia graphics cards with FreeSync? I’m a newbie silver surfer, so I’m trying to catch up. I’ve got a Sapphire RX 480 Nitro OC card and I was thinking of upgrading it. The more I look, the more confused I get. I’m going into gaming, WW1/2 ones. Would like some opinions. Thank you.