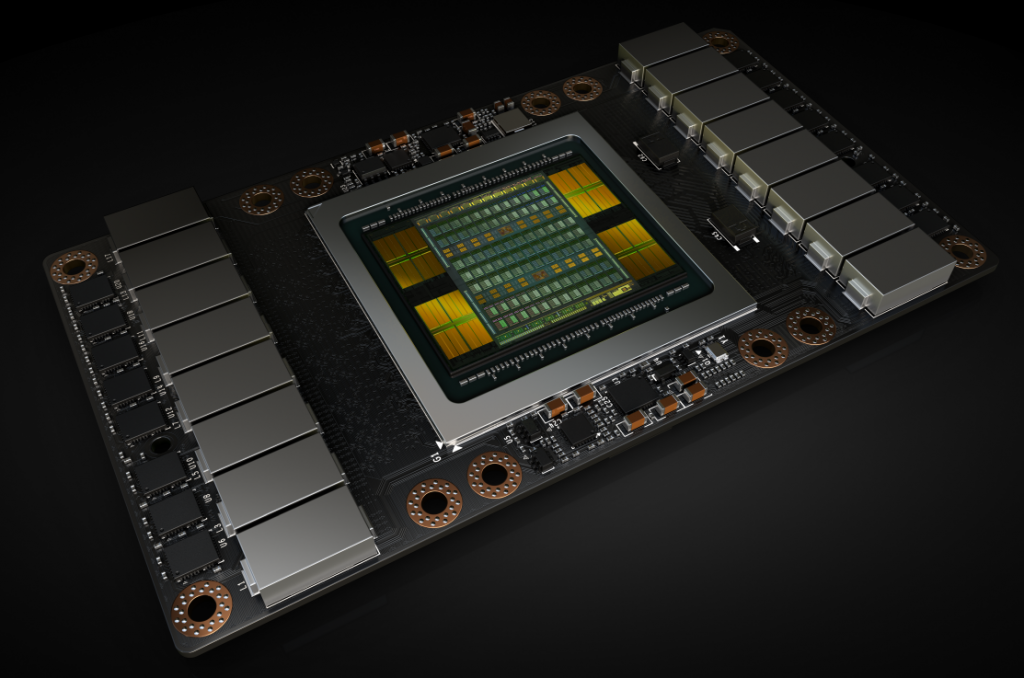

We've already had a peek at Nvidia's Volta architecture with the launch of the mega-expensive professional Tesla cards, but gamers are still waiting for this new tech to trickle down to consumer graphics cards. This week, TSMC, Nvidia's silicon partner, announced that it will be kicking off production of its 12nm FinFET chips later this year, making Volta-based GeForce graphics cards possible as soon as early 2018.

The first Volta GPU to make it out into the wild was the V100, which is squarely aimed at professionals. While it has been out since May, this GPU will differ from what we will see in GeForce cards next year. Given that there is a Volta-based GPU out already, this won't be the first time TSMC has produced 12nm FinFET chips. However, starting in Q4, volume will be ramped up to serve the consumer market.

It's worth noting that Nvidia CEO, Jensen Huang, recently told investors during an earnings call that gamers should not expect Volta before the end of 2017, stating that for the foreseeable future “Pascal is just unbeatable”. You can find the full quote below:

“Volta for gaming, we haven't announced anything. And all I can say is that our pipeline is filled with some exciting new toys for the gamers, and we have some really exciting new technology to offer them in the pipeline. But for the holiday season for the foreseeable future, I think Pascal is just unbeatable.”

So we won't be seeing any GeForce-series Volta graphics cards in time for the holiday season, but with production of 12nm FinFET ramping up, we could see something in the first half of next year, potentially even before the end of March 2018, though that is speculation at this time.

KitGuru Says: The ball rests firmly in Nvidia's court, and with such a shift in architecture for both AMD's Vega and Nvidia's Volta cards, the GPU market looks quite interesting right now. Do you plan on upgrading anytime soon? What would be your next card?

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

I wish they would finally bring out a new Shield Android console with a new Tegra chip…

As much as gamers would like to see Volta this year, there is really no reason for Nvidia to rush it, especially knowing that, unlike last generation, AMD's Vega has strictly nothing to offer against the 1080Ti, and where it does offer something against the 1080 and 1070, it does so with a much more power-hungry envelope at the same or higher price. This is a no-brainer for Nvidia.

On the other hand, Jensen speaks about exciting toys and new technologies to offer gamers with Volta, and this is good to know because, sadly, unlike others, Nvidia delivers!

It’s a pity really, because we will have to buy another gpu for 4k 60fps :/

God damnit !

The 1080Ti is already enough, but also expensive for some people, so yeah... Hopefully Volta will offer 4K 60fps (or better) at a lower price tag.

512 bit is nothing ye XD

Nvidia has the ball in their court easily.

The GTX 1080Ti can hardly handle newly released titles, and even some older ones, at 4K on high or ultra settings while maintaining a 60fps average, not counting the 1% lows. What is the point of playing at 4K if you have to use medium or high settings?

Not enough, mate. It struggles to get 50fps in lots of new releases with everything maxed out.

While it mostly depends on how well the game is optimized, more power would go a long way toward getting 60fps.

Really? A custom 1080Ti struggles to get 50fps (let alone 60) in new releases?

Which ones, for example?

Really? Well, that's not what I see here: http://www.guru3d.com/articles_pages/msi_gtx_1080_ti_gaming_x_review,12.html

The 1080Ti can easily do 60fps, or way above 60fps, at 4K ultra in a huge majority of the games they tested there, except Deus Ex, Ghost Recon and Watch Dogs 2. Now, if some games are badly optimised, there is little GPU manufacturers can do. There are always going to be games that run at lower FPS than they should given the hardware.

The 1080Ti is definitely plenty capable of 4K 60fps ultra. That's a fact!!!

Problems:

#1 You are only going off one set of benchmarks.

#2 Doom is a good example of how frame rates and frame times will vary throughout the game. Just because you hit 80fps or higher during one part of the game does not mean your frame rate will be that high for the whole game.

#3 Half of the games on that list are just at 60 to 65fps or are under 60fps. What do you think the frame rates will be in upcoming games that will be out at the end of 2017, or games coming out in 2018? If games from 2015 to 2016 are already pushing a GTX 1080Ti down to 50fps-65fps, do you think future games are not going to hammer those numbers even further?

#4 When your GPU running at 99% usage can only maintain 55fps-60fps, the 1% lows are likely going to make the game unplayable during critical moments in competitive games. If the goal is 60fps, then the GPU needs to be able to run present and past games at 15fps or higher with 1% low drops of no more than 30% of the frame rate, or you will be upgrading that GPU within 1 to 2 years, and the GTX 1080Ti cannot even maintain 60fps in many present games.

Answer below

You guys need to understand something.

First, you need to take into account the oc you will be able to sustain with your gpu, which depends on your luck in the silicon lottery.

Also, depending on your cooling solution, you may not be able to sustain your highest oc because your gpu will suffer from downclocking.

Big oc means big voltage, which means your oc will not be stable and you will experience fps drops while gaming.

It is good for benchmarking but not so much for gaming; fyi, a lot of us run our oc at 0.9V-ish.

So our oc will not be as high as it could be, thus even though we will have a stable gaming experience we won’t reach the highest fps we can (but again, stable gaming experience).

Also benchmarking is different from actual gaming, by a fair margin.

So those benchmarks are all well and good, but running my gpu at 1974MHz (a 60MHz difference from their oc gpu), I can tell you that I am nowhere near the values they give (and 60MHz is not that much of a difference anyway).

65 fps rise of the tomb raider? You wish, more like 55-60fps with drops to 40-45.

55fps for Ghost Recon sounds fair, but they disabled the most beautiful features. If you run the game on ultra you will have an average of 45-48fps, far from 60.

BF1 at 78fps? YEAH, IF YOU PLAY THE SOLO CAMPAIGN!

This benchmark is a goddamn joke. There is no way to get 78fps during a 32 vs 32 operation, not even close!

Don’t get me wrong, a lot of games are fully playable even though they don’t run at 60fps, but having only one gpu is not future proof.

Some releases in 2018 will demand even more power from the gpu, and seeing some games barely run at 50fps, I am afraid that we will fall as low as an average of 40 :/

#1 Yes, I know this is only one set of benchmarks, but Guru3D is well known for quality benchmarks, and honestly, do you really think I was going to post every single 1080Ti benchmark since its release?

#2 Variation in frame rate throughout a game is well known; this is why benchmarks generally give you an average value. How is this supposed to make a point?

#3 There are only 3 games running under 60fps. New games are always going to demand more and more horsepower, so saying the 1080Ti is not going to be able to drive future games at 60fps 4K ultra is a truism. This is why you have new GPUs almost every year!

The 1080Ti is the first single GPU able to drive a majority of AAA games at 4K ultra 60fps; that's a fact. Again, we are talking about 4K ultra here. Move this to 4K high (the graphical difference between high and ultra in most games, if not every game, is laughable) and you have a GPU that can EASILY push way above 60fps at 4K in every damn game that exists.
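The disagreement above over averages versus 1% lows can be made concrete with a small sketch. It assumes a list of per-frame render times in milliseconds and defines the "1% low" as the mean frame rate over the slowest 1% of frames; benchmarking tools differ on the exact definition, so treat that choice, the function name `fps_summary` and the sample data as illustrative assumptions rather than how any review site computes its numbers.

```python
def fps_summary(frame_times_ms):
    """Return (average_fps, one_percent_low_fps) from frame times in ms."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average FPS: total frames divided by total elapsed seconds.
    total_seconds = sum(frame_times_ms) / 1000.0
    average_fps = len(frame_times_ms) / total_seconds
    # Assumed definition of "1% low": mean FPS over the slowest 1% of
    # frames (always at least one frame).
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst_seconds = sum(slowest_first[:count]) / 1000.0
    one_percent_low_fps = count / worst_seconds
    return average_fps, one_percent_low_fps


# Hypothetical run: 990 smooth frames at 16 ms plus 10 stutters at 40 ms.
frame_times = [16.0] * 990 + [40.0] * 10
avg, low = fps_summary(frame_times)
print(f"average: {avg:.1f} fps, 1% low: {low:.1f} fps")
```

With this made-up data the average lands just above 60 fps while the 1% low is 25 fps, which is why a run can look fine on an averages chart and still stutter in play.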

Do you realize that 4K is four 1080p displays? All with massive AA needs, because as the size doubles, smoothing becomes exponentially harder.

So you cannot just say 4K 60fps, as that is seriously night-and-day different with 8x SSAA vs 2x MSAA, for instance. Now, if shadows are set to ultra, forget about it, and if the game has a lot of reflections, it's double the workload again.
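The "four 1080p displays" figure is plain pixel arithmetic, which a short sketch can check. The resolution table and helper names below are illustrative assumptions; the sketch only counts pixels and says nothing about how anti-aliasing, shadows or reflections scale the real workload.

```python
# Standard 16:9 resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Pixels in a single frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixels_per_second(name, fps):
    """Pixels that must be produced each second at a given frame rate."""
    return pixel_count(name) * fps


ratio = pixel_count("4K") / pixel_count("1080p")
print(f"4K has {ratio:.0f}x the pixels of 1080p")
print(f"4K at 60 fps: {pixels_per_second('4K', 60):,} pixels per second")
```

A 4K frame holds 8,294,400 pixels against 2,073,600 for 1080p, so the GPU fills exactly four times as many pixels per frame before any per-pixel effects are added.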

Guys are so wet on the 1080 Ti, but they don't really know how it works, just that it is the big boy for now, and they are told that it is all you would ever need.

What's funny is the 1080 Ti doesn't even pay for itself, and yet guys are obsessed with it even though most will never be able to buy one.

Luckily, I'm obsessed with what I can afford, the 1060-1070-Vega 56 region, and it just so happens Vega 56 is by far the best bang for the buck right now, and the only card that I predict will gain more than 8% after it's launched, because gaming devs will learn how to properly abuse HBM.

I mean, look at Dirt 4: the HBM loved the CMAA and it was 24% faster than the 1070, but with 8x MSAA it was only 8% faster. Point is, when they really tune it for games it will be an animal. A simple BIOS tweak gives the Vega 56 5% more FPS across the board, and adding another 70W unleashes it. It's not bound by thermals but by power.