Over the last year, ‘fake news’ has become a hot-button issue on social media, with politicians calling for the likes of Facebook, Twitter and Google to combat the spread of false information. Now Facebook is stepping up to the plate with ‘trust indicators’, intended to identify and label trusted news sources.

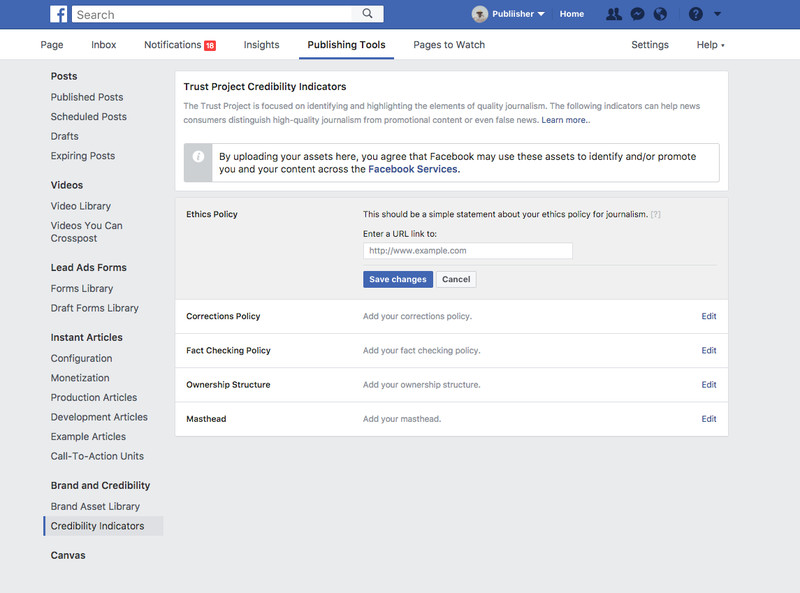

The ‘trust indicator’ will appear as an icon on articles shared across Facebook. The indicators will also let users see specific information about publishers, including their policies on corrections and fact-checking. Publishers can also choose to add details on their ‘ownership structure’ and to appoint a ‘masthead’ who would be responsible for overseeing content.

In a statement given to The Verge, Facebook explains the thought process behind trust indicators: “We believe that helping people access this important contextual information can help them evaluate if articles are from a publisher they trust, and if the story itself is credible. This step is part of our larger efforts to combat false news and misinformation on Facebook — providing people with more context to help them make more informed decisions, advance news literacy and education, and working to reinforce indicators of publisher integrity on our platform.”

Facebook will begin rolling out trust indicators to a small group of publishers on the platform. The rollout will expand over time and should eventually include all news publishers sharing on the platform.

KitGuru Says: Facebook already has a ‘verified page’ icon, but given how quickly false information can spread, even big sources can fall into the trap from time to time. This new indicator may help combat that, particularly if it gives readers confidence that any mistakes will be corrected.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards
