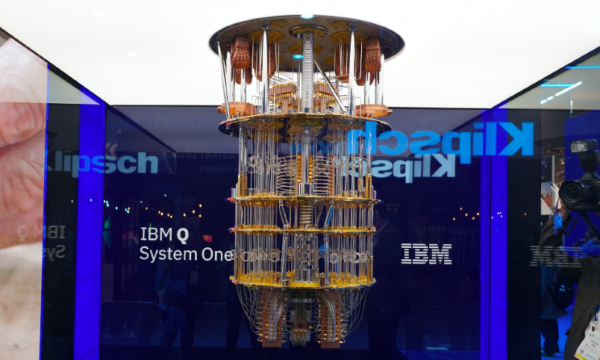

This week, IBM continued to push its quantum computing efforts by announcing a 53-qubit quantum computer. That's a big jump from IBM's Q System One, which debuted in January with 20 qubits of processing power.

For anyone who doesn't know, a qubit is a quantum bit, the quantum computing equivalent of the binary digit, or bit, in traditional computing. Just as a ‘bit’ is the basic unit of information in a traditional computer, a qubit is the basic unit of information in a quantum computer.

Unlike classical computers, which store data as bits that are either zero or one, quantum computers use quantum-mechanical phenomena such as superposition and entanglement to represent and process information. "The new quantum system is important because it offers a larger lattice and gives users the ability to run even more complex entanglement and connectivity experiments," said Dario Gil, director of IBM Research, in a press statement.

For certain kinds of problems, quantum computers could process data far faster than traditional computers, which would make it practical to tackle large, complex problems. Keeping qubits stable is not an easy feat: the hardware has to operate at super-cooled temperatures of around 10 millikelvin (-273.14˚C).

IBM isn't the only company investing in quantum computing. Volkswagen, the German car manufacturer, hopes it will help predict future traffic volumes and demand for transport. Competitors such as Google and Microsoft are also researching quantum computing, and, as reported by the Financial Times, a paper by Google researchers claimed to have reached the major milestone of quantum supremacy.

IBM has been offering quantum computing via the cloud since 2016 and has opened a new quantum computing centre in New York that houses 10 quantum computing machines. IBM's new 53-qubit monster will be available to IBM Q Network clients starting in October.

KitGuru says: Quantum computing is still a relatively new technology and may never feature in home systems due to its size and noise output. However, once perfected, the underlying technology could change the way computers work forever.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards