AMD has announced that the Nvidia DGX A100, the third generation of Nvidia's DGX system and “the world’s most advanced AI system”, is powered by 2nd Gen AMD EPYC processors with a combined 128 cores.

The AI system from Nvidia is capable of delivering 5 petaflops of computational performance and is designed to handle “diverse AI workloads such as data analytics, training, and inference.” The Nvidia DGX A100 is equipped with “128 cores, DDR4-3200MHz and PCIe 4 support from two AMD EPYC 7742 processors running at speeds up to 3.4 GHz.”

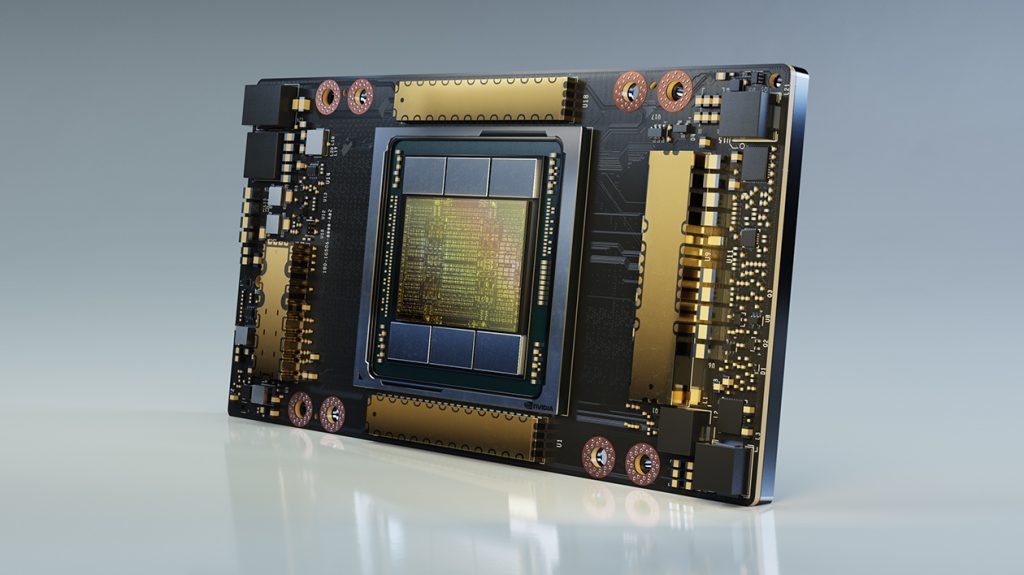

Image credit: Nvidia

According to AMD, 2nd Gen EPYC processors are currently the only x86 server processors that support PCIe 4, which doubles the per-lane bandwidth of PCIe 3.0. This high-bandwidth I/O is essential for fast connections between the CPU and other devices such as GPUs.

“Only 2nd Gen AMD EPYC processors can provide up to 64 cores and 128 lanes of PCIe 4 interconnectivity in a single x86 data center processor, and we’re excited to see how the power of the NVIDIA DGX A100 system enables the I/O bandwidth to be effectively doubled,” said Raghu Nambiar, corporate vice president, data center ecosystems and application engineering, AMD.

“The NVIDIA DGX A100 delivers a tremendous leap in performance and capabilities,” said Charlie Boyle, vice president and general manager, DGX systems at NVIDIA.

The full press release from AMD can be read HERE.


KitGuru says: Are you interested in AI infrastructure? What do you think of AMD and Nvidia's collaboration on this 5-petaflop AI system?

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards
