In a Micron Tech Brief, the company has confirmed the launch of the successor to HBM2E, named HBMnext. This next evolution of High-Bandwidth Memory is expected to arrive in 2022.

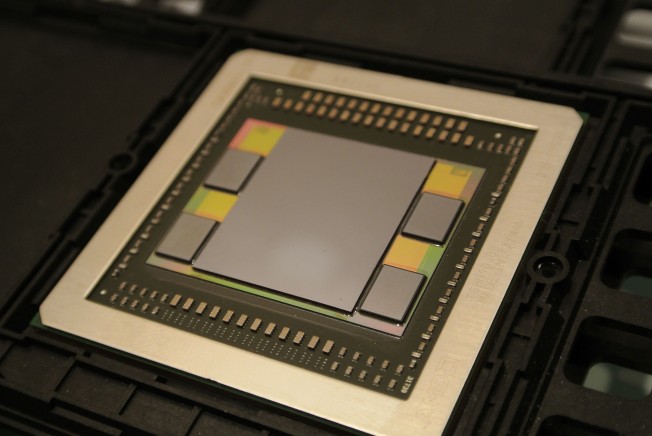

HBM (high-bandwidth memory) was used in GPUs for the first time by AMD in 2015. Back then, AMD used SK Hynix HBM on AMD Fiji GPUs, more specifically, in the R9 Fury, R9 Nano, and R9 Fury X graphics cards. HBM is generally more efficient and faster than GDDR5, but those benefits come with an extra cost.

In 2016, Samsung announced the mass production of HBM2, with SK Hynix following suit by announcing that it was also mass-producing HBM2. Two years later, the HBM2 JEDEC specification was updated to increase bandwidth and capacity, creating HBM2E, which has also been produced by Samsung and SK Hynix.

Micron began catching up with SK Hynix and Samsung in 2020, when it started producing HBM2. By the end of this year, Micron wants to bring its JEDEC-compliant HBM2E to market, available in “4H/8GB and 8H/16GB densities”, with data transfer speeds of 3.2Gb/s or more. As a comparison, the Nvidia A100 GPU uses HBM2E at 2.4Gb/s.
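To put those per-pin speeds in perspective, here is a rough back-of-the-envelope sketch of peak bandwidth per memory stack. It assumes the 1024-bit interface per stack defined for HBM2-class memory; that width is not stated in the Micron brief quoted here, and the function name is purely illustrative.

```python
# Assumption: one HBM2/HBM2E stack has a 1024-bit wide interface.
# This figure is not taken from the article itself.
HBM2_BUS_WIDTH_BITS = 1024


def stack_bandwidth_gb_per_s(pin_rate_gbit_per_s, bus_width_bits=HBM2_BUS_WIDTH_BITS):
    """Return the theoretical peak bandwidth of one stack in GB/s.

    pin_rate_gbit_per_s: data rate per pin in gigabits per second.
    Dividing by 8 converts gigabits to gigabytes.
    """
    return pin_rate_gbit_per_s * bus_width_bits / 8


if __name__ == "__main__":
    # Per-pin rates quoted in the article.
    for label, rate in [("Micron HBM2E target", 3.2), ("Nvidia A100 memory", 2.4)]:
        bandwidth = stack_bandwidth_gb_per_s(rate)
        print(f"{label}: {rate}Gb/s per pin is about {bandwidth:.1f} GB/s per stack")
```

These are theoretical peaks for a single stack. A graphics card's total memory bandwidth also depends on how many stacks it carries.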

To further solidify its presence in the market, Micron has announced HBMnext, with an expected release date at the end of 2022. Micron “is fully involved in the ongoing JEDEC standardisation” and believes that as data consumption grows, “HBM-like products will thrive and drive larger and larger volumes”.

If you want to know more, you can check out the Micron Tech Brief HERE. Discuss on our Facebook page, HERE.

KitGuru says: How do you think tech companies will use HBM2E and HBMnext? Will it be viable to use them in gaming-oriented graphics cards?

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards
