AI Power Profiles

Before diving into the testing results, it's worth quickly going over Gigabyte's AI system, as this essentially boils down to different modes which enable varying power limits for the CPU and GPU. The first stage is choosing the ‘Power Gear’, which gives a choice between Power Saving, Balance or Performance profiles, though do note that switching to a new mode here requires a restart.

The AI Boost feature is more interesting, as this offers a bunch of profiles that are tailored to different tasks. You can enable these manually, but if you simply engage the AI Boost feature, the system will automatically switch profiles when it detects certain software being used. Cinebench, for instance, switched to the Creator profile, while Cyberpunk 2077 engaged the Gaming profile.

Personally, I think calling this ‘AI’ is somewhat of a stretch, but it is at least a semi-useful system that will funnel more power to either the CPU or GPU, depending on what you are doing.
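To illustrate why ‘AI’ feels like a stretch, the behaviour I observed could in principle be reproduced with a simple lookup from the running application to a profile. The sketch below is purely hypothetical: Gigabyte has not documented how detection works, the process names are my own assumptions, and only the two app-to-profile pairings come from what I saw in testing.

```python
from typing import Optional

# Hypothetical lookup table. Only the pairings (Cinebench -> Creator,
# Cyberpunk 2077 -> Gaming) were observed in testing; the process names
# themselves are assumed for illustration.
PROFILE_BY_PROCESS = {
    "cinebench.exe": "Creator",
    "cyberpunk2077.exe": "Gaming",
}


def pick_profile(process_name: str) -> Optional[str]:
    """Return the profile for a detected process, or None to leave the
    current profile unchanged when the process is not recognised."""
    return PROFILE_BY_PROCESS.get(process_name.lower())
```

Calling `pick_profile("Cinebench.exe")` returns `"Creator"`, while an unrecognised process returns `None`. The real system may well do something more sophisticated than this.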

For today's testing, I ran three different configurations. The first used the Balanced power option with AI off – the default, out of the box experience. I then tested again using the same Balanced power mode, but this time with AI Boost on, so we can see how the different profiles affect performance. Finally, as an all-out test, I ran the Performance power mode with the Turbo profile manually selected, to give an indication of the best performance this machine is capable of, though the noise levels were extreme, as we will get to!

In terms of behaviour then…

- The Balanced power setting with no AI saw the P-cores boost to 3.6-3.7GHz initially, with power around 120W. After 40 seconds, the power dropped to 85-95W, with P-cores clocking at 3.2-3.3GHz. In Cyberpunk 2077, the GPU clocked around 2370MHz, with power reported at about 95W.

- The Balanced power mode but with AI enabled saw the P-cores boost to 3.9-4GHz initially, with power around 135W. After 40 seconds, the power dropped to about 110W, with P-cores clocking at 3.5-3.6GHz. In Cyberpunk 2077, the GPU clocked around 2565MHz, with power reported at about 110W.

- The Performance power mode using the Turbo profile saw the P-cores boost to 4-4.1GHz initially, with power around 150-160W. After 40 seconds, the power dropped to about 120W, with P-cores clocking at 3.7-3.8GHz. In Cyberpunk 2077, the GPU clocked around 2565MHz, with power reported at about 115W.
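To put those figures side by side, here is a rough Python sketch that works out the percentage change in sustained CPU power, GPU clock and GPU power relative to the default configuration. The numbers are taken from the observations above, but where I quoted a range (85-95W) the midpoint is used as a simplifying assumption, and the dictionary keys and function names are just labels I made up for this example.

```python
# Observed figures from the three configurations. Ranges are represented
# by their midpoint (e.g. 85-95W -> 90W), which is an approximation.
CONFIGS = {
    "Balanced, AI off": {"cpu_sustained_w": 90.0, "gpu_mhz": 2370.0, "gpu_w": 95.0},
    "Balanced, AI Boost": {"cpu_sustained_w": 110.0, "gpu_mhz": 2565.0, "gpu_w": 110.0},
    "Performance, Turbo": {"cpu_sustained_w": 120.0, "gpu_mhz": 2565.0, "gpu_w": 115.0},
}


def pct_change(new: float, base: float) -> float:
    """Percentage change of `new` relative to `base`."""
    return (new - base) / base * 100.0


def uplift_vs_baseline(configs: dict, baseline: str = "Balanced, AI off") -> dict:
    """Percentage change of every metric against the baseline config,
    rounded to one decimal place."""
    base = configs[baseline]
    return {
        name: {metric: round(pct_change(value, base[metric]), 1)
               for metric, value in metrics.items()}
        for name, metrics in configs.items()
        if name != baseline
    }


if __name__ == "__main__":
    for name, deltas in uplift_vs_baseline(CONFIGS).items():
        print(name, deltas)
```

On these numbers, the AI Boost and Turbo configurations run the GPU at the same clock in Cyberpunk 2077, so most of the extra headroom in Turbo goes to the CPU.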

Testing

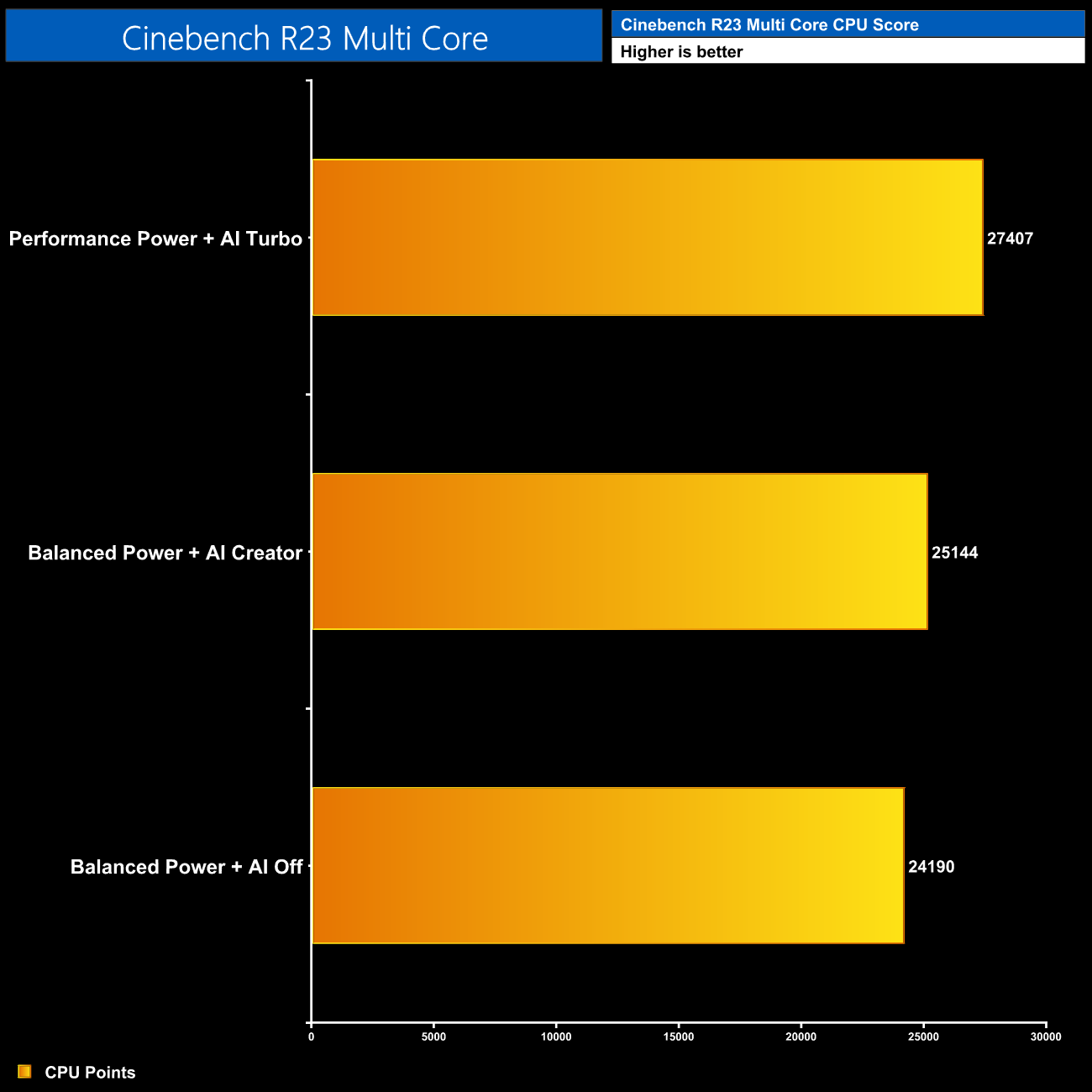

Naturally in Cinebench Multi Core, the higher the power the better the score will be, with our Turbo testing indicating performance can be improved by about 13% compared to the default, out of the box experience.

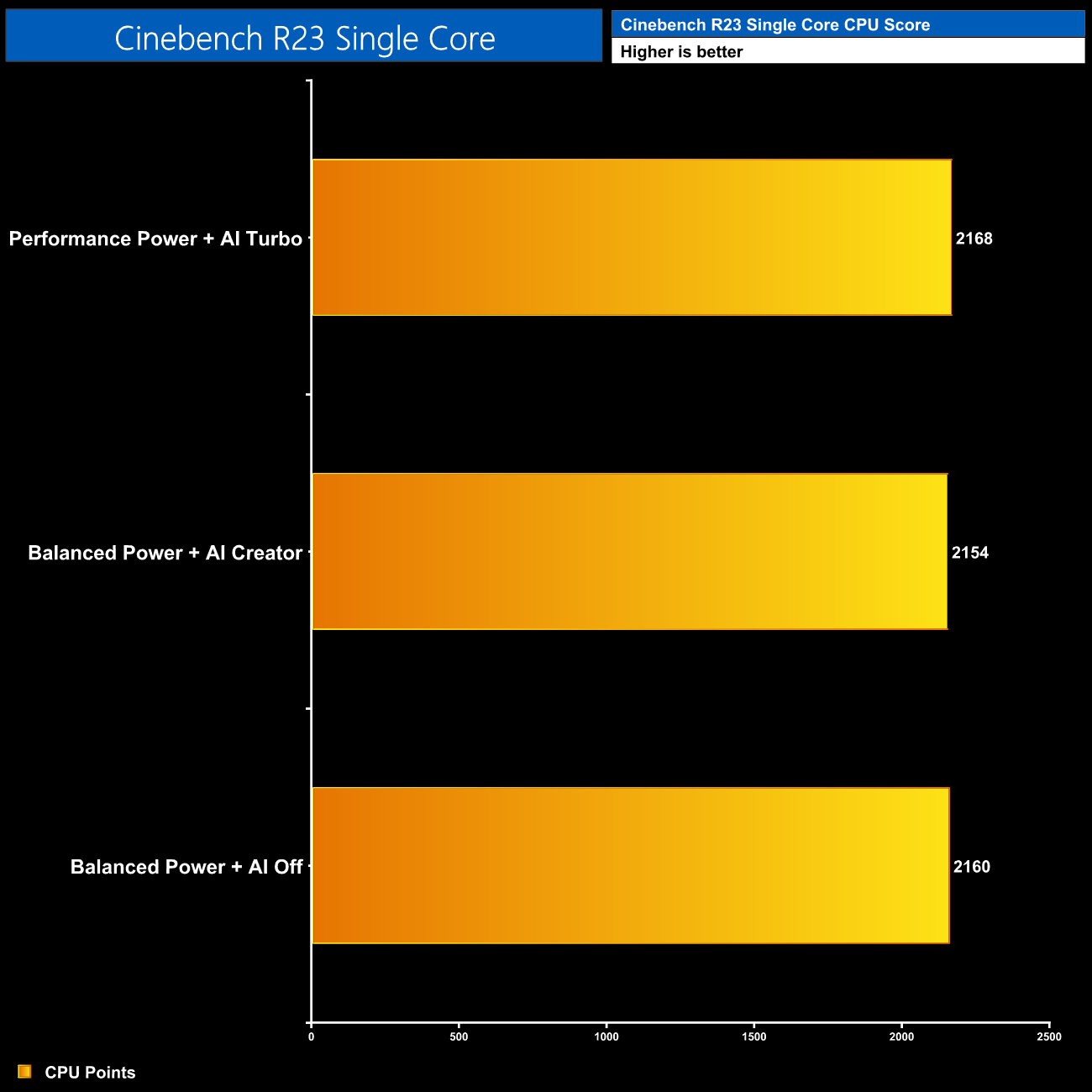

The single core testing saw no real difference in performance, however, as the power limit has very little bearing on this metric.

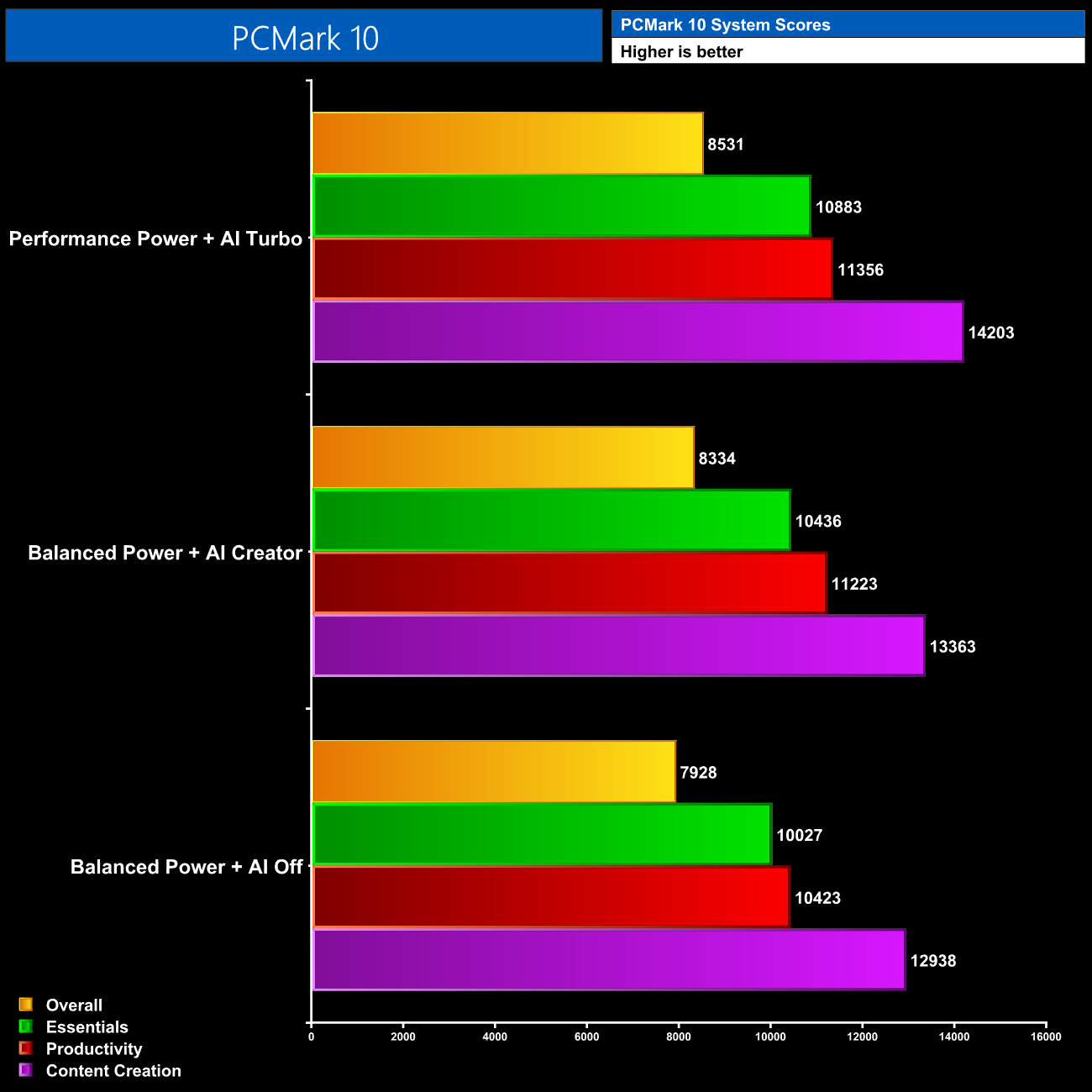

PCMark 10 again shows the benefit of the higher power modes, with scores increasing every time we bumped up the profiles tested. Overall, the Turbo mode is 10% faster than the results with no AI enabled.

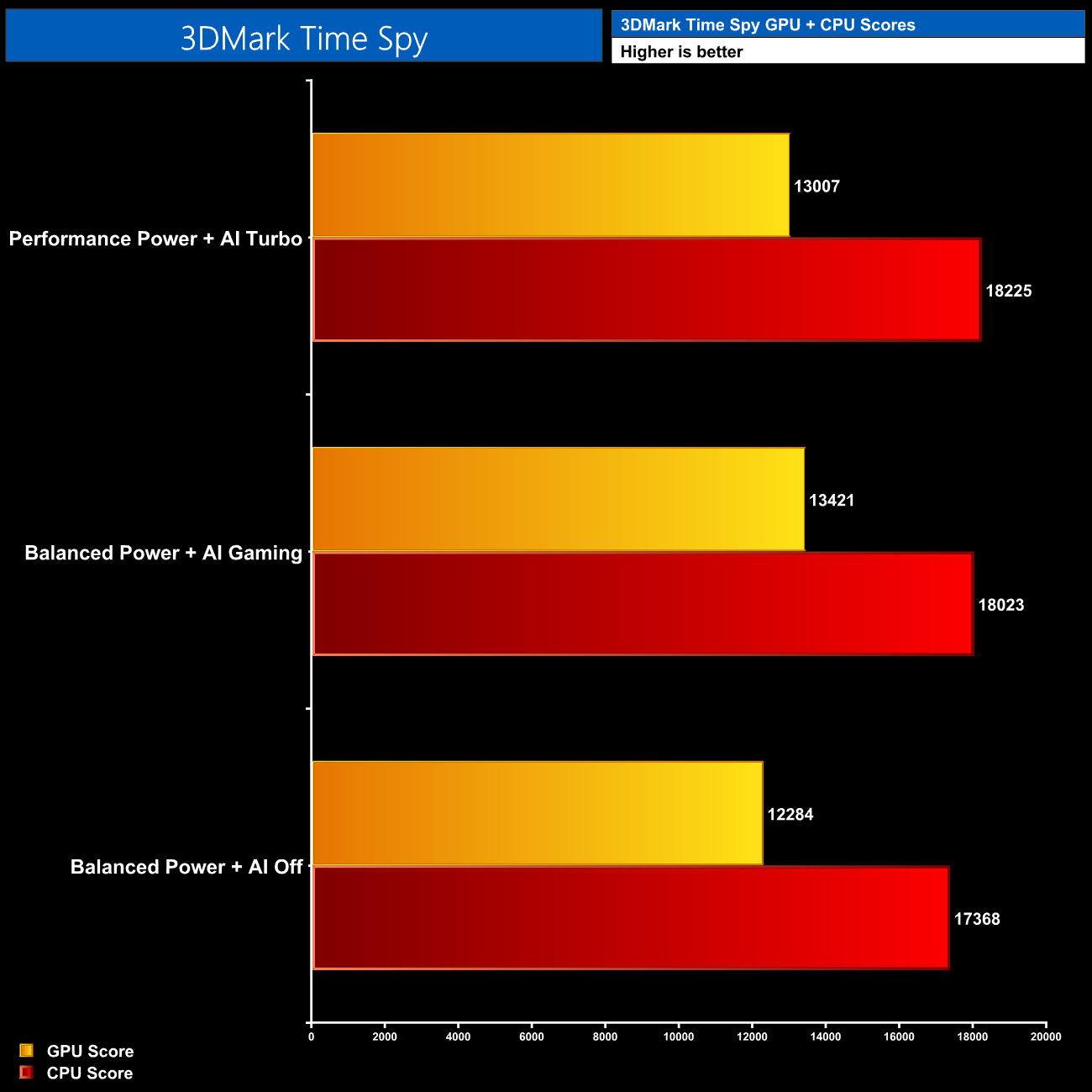

We see the same performance scaling in 3DMark when looking at the CPU score, but interestingly the Turbo GPU score was slightly worse than the Balanced + AI Gaming result. This behaviour cropped up a few times in our game testing too, and it appears to be down to the fact that the Turbo mode can sometimes reduce GPU power and clocks compared to the AI Gaming mode, in exchange for higher CPU power.

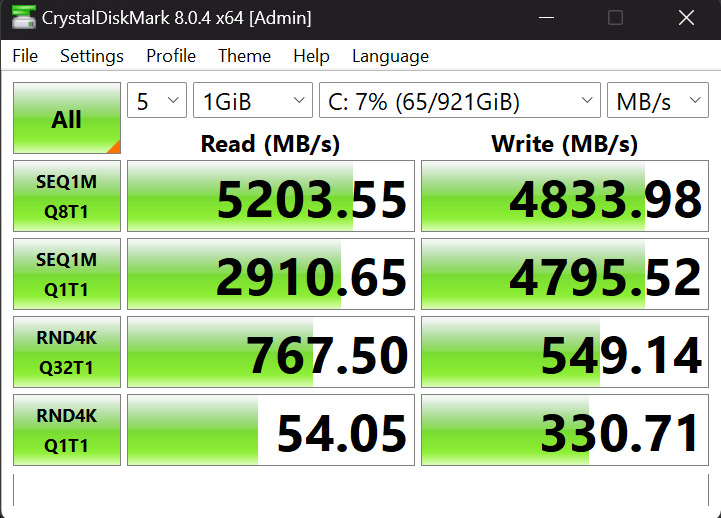

The pair of Aorus Gen4 5000 SSDs used here live up to their name, with sequential reads hitting 5.2GB/s, while sequential writes hit 4.8GB/s. As both SSDs are the same model, performance is identical between the two.

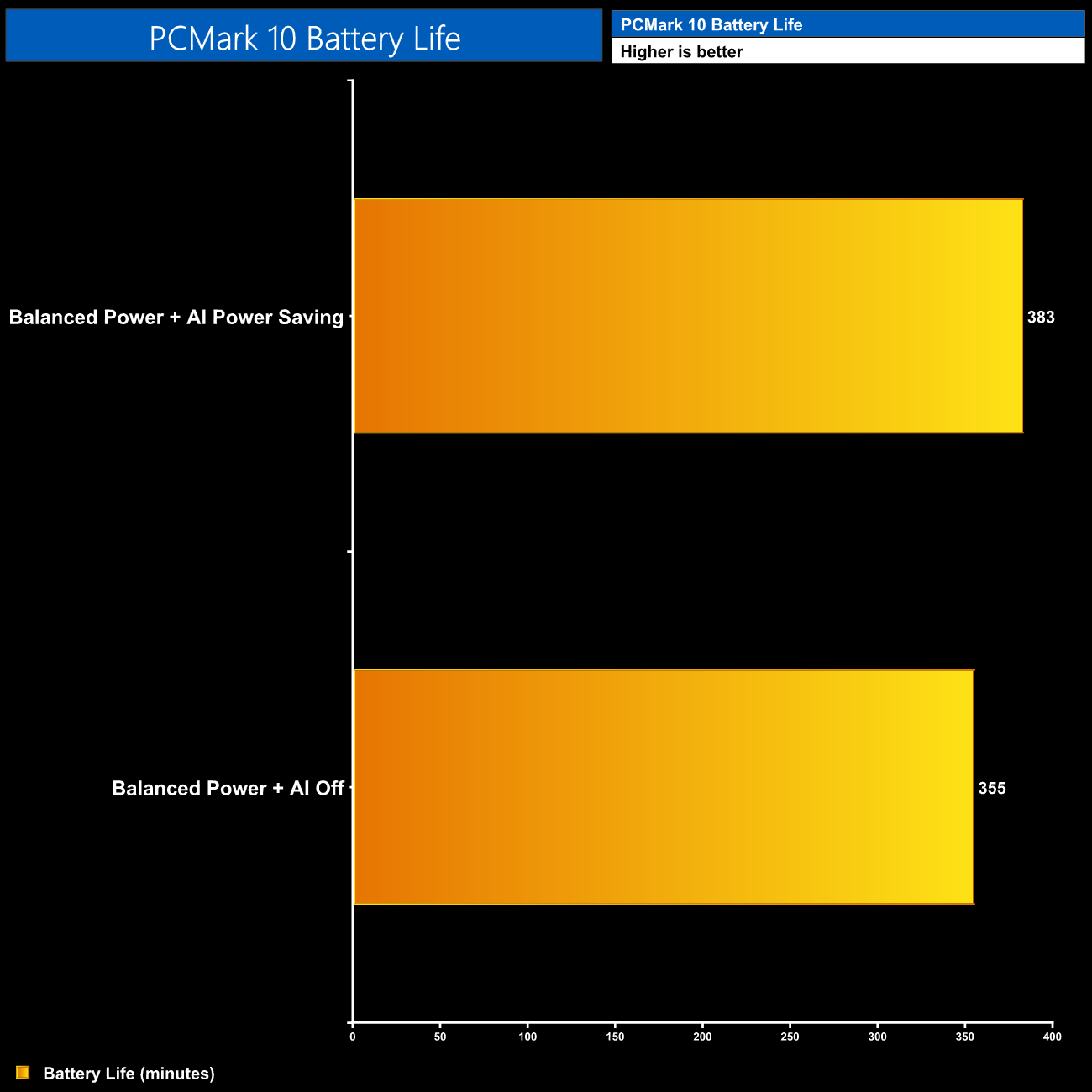

Finally, we tested battery life using the PCMark 10 Modern Office test, using the Balanced profile with no AI, and then again with the AI Power Saving mode engaged. This didn't make much difference, but both results offered about seven hours of battery life for light tasks, which I was pretty happy with considering the beefy hardware in this laptop.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards
