Fallout 4 has been out in the wild for one week now and we have been hearing a lot about the PC port. We already took a quick look at it on launch day, using a GTX 980Ti to analyse the port and seek out any issues. Now, we have decided to throw a couple of other popular graphics cards into the mix, so you can see how the game runs on a wider range of hardware.

We have received a lot of positive feedback from readers on our past PC game performance reports, and while I don't review graphics cards for KitGuru, the hardware team sent me a couple of extra cards last week to add to these tests: the MSI GTX 970 and the Sapphire Vapor X AMD R9 290. These extra cards, along with my GTX 980Ti, were tested in a system using an Intel Core i7 5820K, 16GB of Crucial Ballistix 2400MHz RAM, an MSI X99S SLI PLUS motherboard and a 240GB Kingston V300 SSD.

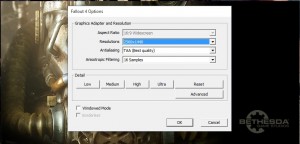

Fallout 4 is running on an updated version of the Creation Engine, which was also used in Skyrim back in 2011. The game has V-Sync switched on by default but there is no frame rate cap, meaning those with high refresh rate monitors won't be bottlenecked. This does bring some issues to light, though: the engine wasn't designed to run at frame rates higher than 60fps, so the game will be noticeably buggier if you go above 60Hz.

In my initial report last week, I pointed out that exiting terminals would often cause my character to get stuck in place, unable to move. I also encountered a bug with the power armour animation. Both can be avoided by capping your refresh rate at 60Hz.

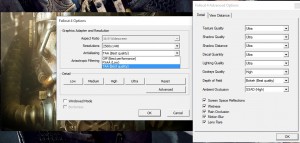

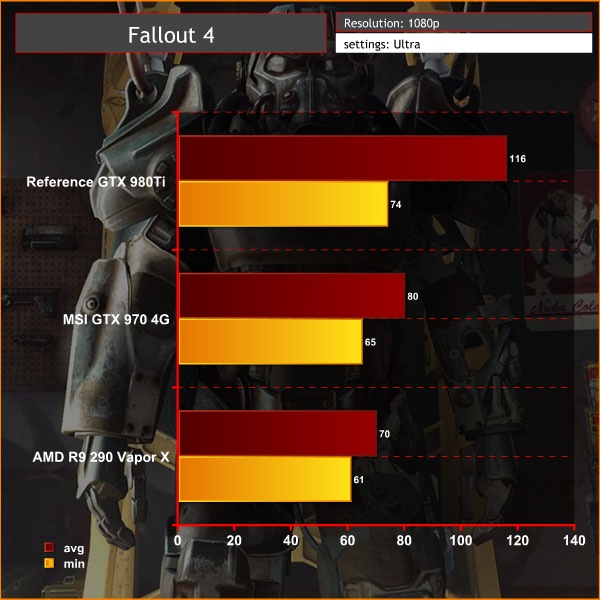

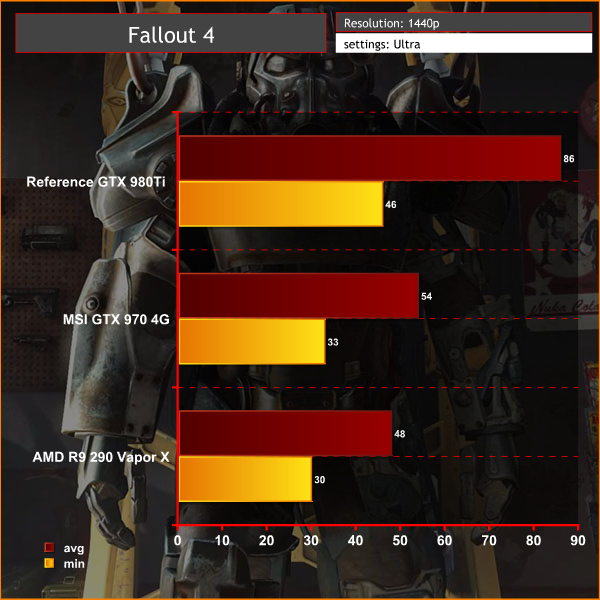

Now with that all out of the way, let's get down to performance. Fallout 4 isn't a graphically groundbreaking game, so in theory it should run fairly well. I tested the game at both 1440p and 1080p using the Ultra preset on the GTX 980Ti, GTX 970 and R9 290 in order to see how the game runs while looking its absolute best. On the AMD side, the latest Catalyst 15.11.1 beta driver was used. For Nvidia, we used the Game Ready driver 358.91.

In the GPU-Z screenshots above you can see the specifications and clock speeds of the hardware. None of the cards were overclocked for this test.

The graphs above show average and minimum frame rates for each of our three GPUs. No matter which card we were using, we found that frame rates can vary dramatically, seemingly at random. We would often get huge spikes in frame rate too, resulting in a pretty disappointing experience overall, as gameplay doesn't stay smooth for very long.

On the GTX 970 and R9 290 at 1440p, frame rates would often dip into the low 30s but would also occasionally rise as high as 80 to 90 frames per second at points in the Wasteland. At 1080p, both cards are able to keep things above 60 frames per second more often than not, but there are still plenty of huge spikes and dips.

Given that the engine isn't designed to run above 60 frames per second without issues, I also ran the game at 60Hz and still found that the frame rate would cut itself in half at points.

We aren't alone with these frame rate issues on the PC. There have been plenty of reports of the console versions of Fallout 4 struggling too, so it seems Bethesda may have dropped the ball a bit there. There is a beta patch available on Steam right now, but it doesn't seem to make much of a difference, and you will still find performance dipping by as much as 50% in some cases.

There are reports that lowering the Shadow Distance setting to medium can help, but I did not find this to be the case here. Still, it is worth trying as you may have different results.

As for the actual game, it plays very similarly to Fallout 3, with an engaging story and a huge world. The shooting mechanics are also much better, so much so that VATS doesn't need to be relied on nearly as much. Plenty of you are already playing Fallout 4 and many will likely look past the issues and push on with the story. However, if you are particularly sensitive to frame rate instability, then you may want to wait for a performance patch.

KitGuru Says: Bethesda has a long history of supporting its games post-launch, so we will likely see the first patch within a few weeks. Hopefully when it does arrive, we will see performance across all platforms stabilize a bit. Have you been playing Fallout 4 this week? Are you satisfied with the performance?

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


Still pretty high-end cards, overall.

Have you not got any 750 Ti cards knocking about? 560 Ti? Any AMD R7 series cards?

Maybe I’m not particularly sensitive to frame rate instability, but I’ve been playing this at 1080p on an i5-4690K, 8GB of DDR3-1866 and a Gigabyte R9-290, and the game seems to run really, really well. Nice and smooth, pretty much constantly. I’ve been really impressed, and I can’t think of any moments yet where my frame rate has suffered.

(I do turn off a couple of graphics options, not because they don’t run well, but because I find them annoying. I turn off motion blur because it gives me motion sickness. I turn down AO because it’s generally too bright and makes it hard to see. And I’m using MSAA instead of TAA because TAA makes everything blurry.)

Motion blur and TAA have quite an impact (especially the AA), but I suppose you’re not very sensitive to frame drops, because a frame rate varying between 30 and 48 should result in erratic smoothness and/or tearing if v-sync is off.

Lucky you ^^

30-48 fps is on the 1440p graph. I’m running 1080p, which on this graph is in the 61-70 fps range for the 290.

Not very happy with the graphics quality: the frame rate issues and hitching are incredibly annoying. I did find that running in borderless windowed mode helped a bit, but for whatever reason I have a column of pixels in column 0 which shows my desktop background. It’s playable, but I’m not too impressed.

I’m running an EVGA 980Ti SC and a pretty powerful 8-core setup – this setup played day-1 Batman Arkham Knight without any significant issues, and that game was visually stunning – it makes Fallout 4 look like something designed by people that just didn’t care about anything but the money. Since this is my first foray into the Fallout franchise, this makes me very apprehensive about buying any DLC for Fallout 4, or wasting any money or time on Fallout 5 should one ever see the light of day.

My bad.

Do you use a 60Hz display, and have you enabled v-sync (either in the game or in the driver settings)?

If yes, that explains it. Enabling v-sync forces the game to render no more than 60 frames per second, hence preventing frame drops and a lack of smoothness.

My display is, in fact, 60Hz. (Actually, Windows sees it as having a 75Hz max refresh rate but I don’t think I’ve seen it actually do it.) My driver is set to use application settings for v-sync, so if Fallout 4 is enabling it by default, then yes, v-sync is on.

But either my frame rate doesn’t drop enough for v-sync to switch to 30fps, or my eyesight isn’t strong enough to recognize the difference between 60fps and 30fps and the switch between them.

“At 1080p, both cards are able to keep things above 60 frames per second more often than not but there are still plenty of huge spikes and dips. Given that the engine isn’t designed to run above 60 frames per second without issues, I also ran the game at 60Hz and still found that the frame rate would cut itself in half at points.”

That’s the thing that either I don’t experience, or I don’t notice happening. (I’ll freely admit that I might just be not-noticing it. I have a tendency to get really, really immersed in Fallout games. lol)

What v-sync does is match the rate at which images are sent to the display to the refresh rate of your display (hence preventing tearing).

It doesn’t “switch” to 30fps. If the game drops below 60fps, some images might get displayed twice in a row (because the next one is not ready yet).

It is a little more complicated than that (there are also buffers, etc.), but that’s the gist of it.

I suspect you’re not seeing it because you don’t have those frame rate fluctuations (being limited to a refresh rate lower than the “dips”).

I know it doesn’t “switch” to 30fps, but without triple buffering, if the game drops below 60fps, you’re pretty effectively getting 30fps.

http://www.tweakguides.com/Graphics_9.html

That’s the page that primarily shapes my understanding of v-sync.

“If at any time your FPS falls just below your refresh rate, each frame starts taking your graphics card longer to draw than the time it takes for your monitor to refresh itself. So every 2nd refresh, your graphics card just misses completing a new whole frame in time. This means that both its primary and secondary frame buffers are filled, it has nowhere to put any new information, so it has to sit idle and wait for the next refresh to come around before it can unload its recently completed frame, and start work on a new one in the newly cleared secondary buffer. This results in exactly half the framerate of the refresh rate whenever your FPS falls below the refresh rate.”
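The halving described in that quote can be reproduced with a small simulation. This is a minimal sketch, assuming a constant per-frame render time, a fixed refresh rate and plain double buffering (the GPU cannot start the next frame until the finished one has been flipped on a refresh); the function name `double_buffered_fps` is hypothetical and not taken from any driver or engine.

```python
import math
from fractions import Fraction


def double_buffered_fps(render_ms, refresh_hz=60):
    """Count frames flipped to the screen in one second under double-buffered v-sync.

    Simplified model (an assumption, not any driver's actual code): every frame
    takes exactly `render_ms` to draw, and the GPU sits idle after finishing a
    frame until the next vertical refresh flips it to the front buffer.
    """
    interval = Fraction(1000, refresh_hz)  # ms between refreshes, kept exact
    render = Fraction(render_ms)
    t = Fraction(0)  # time at which the GPU starts drawing the current frame
    flips = 0
    while True:
        done = t + render
        # The finished frame waits for the first refresh at or after `done`.
        vblank = math.ceil(done / interval) * interval
        if vblank > 1000:
            break
        flips += 1
        t = vblank  # the back buffer only frees up once the flip happens


    return flips


for ms in (10, 16, 17, 25, 40):
    print(f"{ms} ms per frame -> {double_buffered_fps(ms)} fps on a 60Hz display")
```

In this model a card needing 17 ms per frame (roughly 59 fps uncapped) ends up at the same 30 fps as one needing 25 ms (40 fps), which is why frame rates hovering just under the refresh rate seem to collapse to half of it.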

It’s not shadow distance that affects FPS, it’s shadow quality and god rays.

That’s partially true.

Displaying every image twice will obviously result in a drop to 30 fps, but that doesn’t mean every image in the same second will need to be displayed twice.

Either the frame rate might drop for only part of the second (common with unexpected shader compilation and the like), or your card might be able to render 45 fps, which means only some images need to be displayed twice; at a steady 45 fps on a 60Hz display, that is one image in three: 1-1-2-3-4-4-5-6 (triple buffering).
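The triple-buffering case can be sketched the same way. This assumes a perfectly steady render rate, that frame 1 is ready at the very first refresh, and that each refresh shows the newest completed frame; `triple_buffered_sequence` is a hypothetical helper, not part of any real API. At a steady 45 fps on a 60Hz display, three new frames arrive for every four refreshes, so one frame in three is shown twice.

```python
def triple_buffered_sequence(render_fps, refresh_hz, refreshes):
    """Return which frame number appears on screen at each refresh.

    Simplified model (an assumption): frames finish at a constant rate, the GPU
    never waits thanks to the spare third buffer, and every refresh shows the
    newest frame completed so far. Integer maths avoids rounding errors.
    """
    # Frame k (counting from 0) finishes at k / render_fps seconds and refresh n
    # happens at n / refresh_hz seconds, so the newest finished frame at refresh n
    # is floor(n * render_fps / refresh_hz). Adding 1 numbers frames from 1.
    return [n * render_fps // refresh_hz + 1 for n in range(refreshes)]


print(triple_buffered_sequence(45, 60, 8))  # [1, 1, 2, 3, 4, 4, 5, 6]
print(triple_buffered_sequence(30, 60, 8))  # [1, 1, 2, 2, 3, 3, 4, 4]
```

At 30 fps every frame is held for exactly two refreshes, so pacing stays even; at 45 fps some frames stay up for two refreshes and others for one, which is the kind of uneven pacing that shows up as stutter.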

Obviously, displaying images at a frame rate that doesn’t divide evenly into the refresh rate induces stutter.

Oh I know. All I’m saying is, I know it doesn’t literally “switch” from 60 to 30 fps and back again, but for the purposes of the discussion (and for the purposes of my comments) it’s a close enough description of the phenomenon to be understood, and I’m not experiencing the dips and spikes and judders that the article’s author apparently experienced with the same GPU (on a similar board) and a much more powerful CPU/RAM combo.

I have that exact 970 card and I don’t average 80 fps. Is it time to change my i5-4670K 3.40 GHz processor for future games already? :S

I agree, it would be a lot more helpful if they added other 9 series Nvidia cards along with a couple of older AMD cards like the 290X etc. But one way to find out if you can run it is to go to this website and enter your specs.

http://www.game-debate.com/games/index.php?g_id=5013&game=Fallout%204

It will give you a rough idea of how you will perform and a much better idea if you create an account (which is free).

I’m running the 560 Ti, so I went into the game thinking it was going to be like playing Tomb Raider 1 again.

I was pleasantly surprised though, as I was getting 60FPS at 1080p in some areas, and I haven’t seen it dip below 45FPS yet.

Using an i7 and an Nvidia 980 with 16GB of memory on Ultra settings: apart from falling through the frame once, no problems at all.

With the level of polygon count, character animations, physics, AI, textures this game has, it should be running at 60 fps on a GTX680.

Ah yeah, ’cause graphics are the only thing that makes a game worth playing, obviously. Sarcasm aside, Arkham Knight’s release was so bad they stopped selling it, but hey, it looked pretty on your PC, right?

Fallout 4 has enough content to last you months. Arkham Knight, a week or so. Fallout 4 has sidequests with more characters, environments, action, atmosphere, story and epicness than Arkham Knight offers in the entire game.

And it’s a wasteland with disgusting mutated animals and ghouls, so obviously it won’t shine like Batman does. If you need shiny muscular tough guys who talk like they have throat cancer, then you also need to come out of that closet, Tom. Or just pop in a movie, since gameplay isn’t your thing.

And stay away from the Fallout franchise; people are having fun with it, don’t ruin it for them.

Same here, with the previous drivers. Instead of the 290 I have the 390, but that’s almost the same, right?

I only noticed drops when I first entered Diamond City, for 2 seconds tops, so no biggie.

I run the game with TAA and motion blur. I’m turning motion blur off next time though; annoying indeed.

Yes it does. At least nowadays it does. In Black Ops 3 multiplayer with cinematic SMAA x2, performance dropped far below 60 every now and then, resulting in a fixed 30fps until performance came back up to 60.

Fallout 4 doesn’t work well on my configuration; it doesn’t run even close to smoothly. It hitches a lot, which is rather unenjoyable, and it can be difficult to fight when this happens. This is a very common complaint, so it’s not just some whiny child, it’s a lot of people across all age groups.

I am well versed in Arkham Knight’s problems, but even though people had a tough time with them, I didn’t have the same issues. My point was that, considering how much more involved and detailed its graphics were, with more environmental effects and so on, my card handled Arkham Knight spectacularly, even before the patches started rolling out.

The graphics in Fallout 4 are not the issue, it’s the code driving them.

I know Fallout 4 will receive some patches to improve graphics performance, reduce hitching, etc. As someone who is directly involved in quality control, it saddens me to see a product pushed out with such low coding quality, so low that it’s affecting multiple platforms. I was not impressed to hear other people’s experiences with Arkham Knight on the PC, so there are certainly parallels here.

Anyone buying this game should be prepared for either a great experience, or a terrible experience – initially. It’s not ruining it for them to talk about the problems. It’s caveat emptor.

Fixed 30fps? Does Black Ops 3 lack triple buffering support, or does it have a dynamically capped frame rate feature?

Are those the latest drivers from AMD? They just released some new drivers and the version is different from what your GPU-Z screenshot is showing. The latest should read as 15.201.1151.1010, which actually has FO4 optimizations. OC3D had a post comparing old benchmark numbers with new ones using the optimized drivers, and the Fury X got a boost of around 18% on average across 4K, 1440p and 1080p.

Yeah, I get your point. Sorry for the cynicism. I’m sometimes baffled too by how one game performs like a dream with a minimal amount of resources used, while other games that look mediocre run like shit.

I’ve never worked at a game developer, but it seems they have their priorities wrong.

For me, after Fallout 3, I knew what to expect. I think that’s a large factor in your disappointment. Fallout 3 had an even buggier release, with some game-breaking story and quest bugs.

But somehow, for me at least, it seems to fit the game. It gives it a unique style that reminds you of the old days, with all the perks and pros of modern video games.

Dynamic/adaptive v-sync, does that exist? It popped up in my head, so I assume it comes from somewhere.

Dynamic v-sync exists, but it only disables v-sync as soon as fps drop below the refresh rate, to lessen lag at the price of tearing.
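The trade-off between plain and adaptive v-sync can be summarised in a few lines. This is a simplified sketch, assuming a steady whole-number render rate and a fixed refresh rate; `displayed_fps` and its `adaptive` flag are hypothetical names, not a real driver setting. With double-buffered v-sync, a frame that misses a refresh waits for the next one, so the output snaps to 60/2, 60/3 and so on; with adaptive v-sync, v-sync is switched off below the refresh rate, so the card’s own frame rate gets through at the cost of possible tearing.

```python
def displayed_fps(render_fps, refresh_hz=60, adaptive=False):
    """Rough frames per second reaching the screen for a steady render rate.

    Simplified model (an assumption): with v-sync active, every frame occupies
    a whole number of refresh intervals; adaptive v-sync turns v-sync off
    whenever the render rate falls below the refresh rate.
    """
    if render_fps >= refresh_hz:
        return refresh_hz  # v-sync caps output at the refresh rate either way
    if adaptive:
        return render_fps  # v-sync off: full render rate, but tearing is possible
    # Double-buffered v-sync: each frame is held for ceil(refresh / fps) refreshes.
    refreshes_per_frame = -(-refresh_hz // render_fps)  # integer ceiling division
    return refresh_hz / refreshes_per_frame


for fps in (75, 59, 45, 25):
    print(fps, displayed_fps(fps), displayed_fps(fps, adaptive=True))
```

The sketch ignores partial-second dips and triple buffering; it only contrasts the two policies at a constant render rate.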

I don’t have Black Ops 3, but that choice (dynamically capping the frame rate) is very puzzling.

Yeah, it’s not a big problem if your settings are right, but a really weird choice from the developers.

I’m running an i7 920 with GTX 480 SLI and I have only occasional dips in frame rate at Ultra Quality. Totally playable and enjoyable!
