Marvel's Guardians of the Galaxy hit shelves earlier this week and we've been hard at work to bring you this extensive performance analysis. If you want to know exactly how well this game performs, we've tested over 25 graphics cards across three resolutions, and we also take a look at the game's ray tracing and DLSS features.

Eidos Montreal is the team behind Marvel's Guardians of the Galaxy, with the developer opting to use its Dawn engine for this title – the same engine that powered Deus Ex: Mankind Divided. It's a DX12-only title, with a choice of five different graphical presets. We did all of our benchmarks using the Ultra preset, but we take a look at preset scaling in the video.

Somewhat frustratingly, the game's display settings menu is home to a refresh rate slider that only goes up to 144Hz. The refresh rate set here also appears to act as an in-game frame rate limiter, so it's not possible to get more than 144FPS. Based on my testing with ultra settings, that's only going to be an issue if you're using an RTX 3090 or RX 6900 XT at 1080p (itself something of a waste of those cards), but I still don't like to see a frame rate cap at all and would hope Eidos Montreal can remove it in a future patch.

Driver Notes

- AMD GPUs were benchmarked with the 21.10.2 driver.

- Nvidia GPUs were benchmarked with the 496.49 driver.

We didn't use AMD's latest 'game ready' 21.10.3 driver for our testing, opting for 21.10.2 instead. The reason is simple: with the 21.10.3 driver, shadows are very glitchy in the game. They seem to pop out as you move over or next to them, which is very distracting (as shown in the video). Reverting to 21.10.2 completely removes the issue, so I'm not sure what went wrong there, but for now we think the older driver is really the only way to play the game, as the glitchy shadows are a noticeable and immersion-breaking issue.

Test System

We test using a custom-built system from PCSpecialist, based on Intel's Comet Lake-S platform. You can read more about it HERE, and configure your own system from PCSpecialist HERE.

| Component | Specification |
| --- | --- |
| CPU | Intel Core i9-10900K, overclocked to 5.1GHz on all cores |
| Motherboard | ASUS ROG Maximus XII Hero Wi-Fi |
| Memory | Corsair Vengeance DDR4 3600MHz (4 x 8GB), CL 18-22-22-42 |
| Graphics Card | Varies |
| System Drive | 500GB Samsung 970 Evo Plus M.2 |
| Games Drive | 2TB Samsung 860 QVO 2.5″ SSD |
| Chassis | Fractal Meshify S2 Blackout Tempered Glass |
| CPU Cooler | Corsair H115i RGB Platinum Hydro Series |
| Power Supply | Corsair 1200W HX Series Modular 80 Plus Platinum |
| Operating System | Windows 10 21H1 |

Built-in Benchmark vs Gameplay

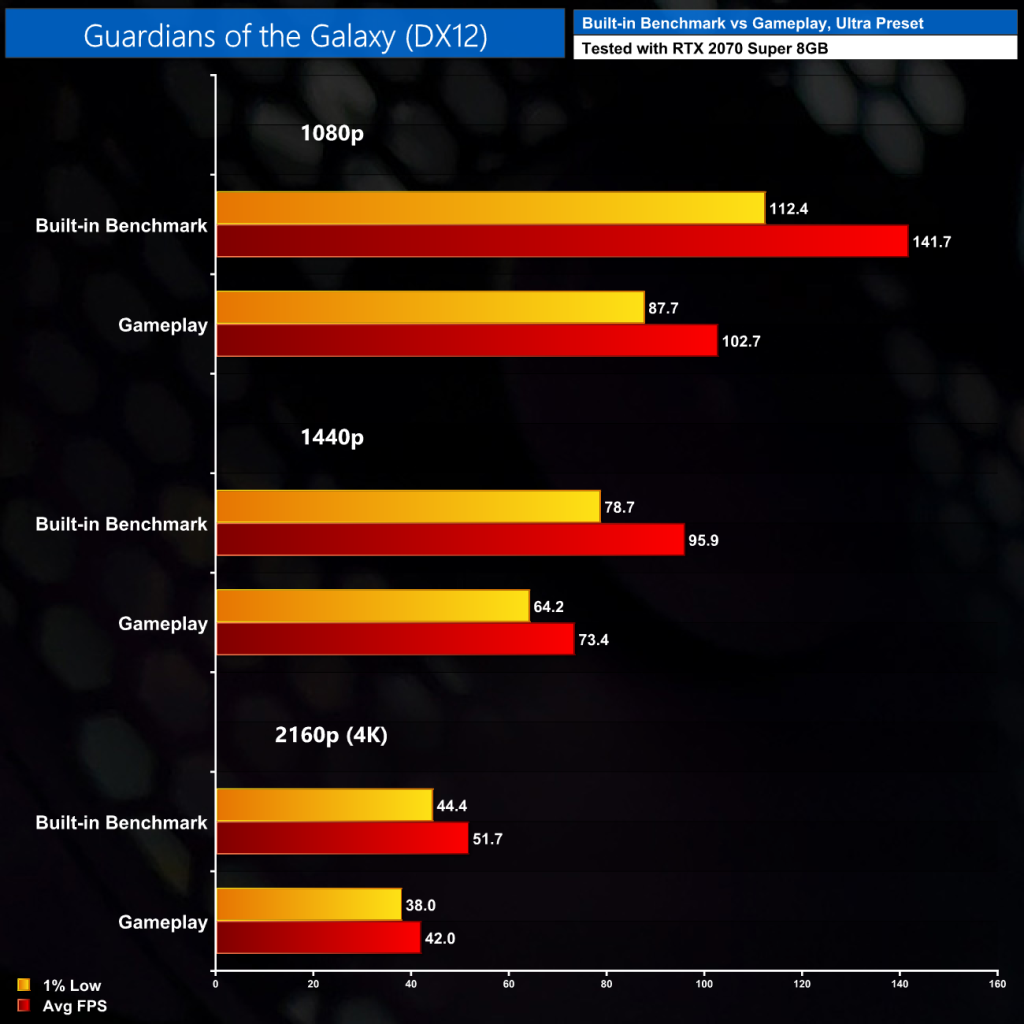

Before getting to the benchmark data for every single GPU, it's worth noting that we didn't use the game's built-in benchmark for our testing today. As you can see above, the built-in benchmark delivers frame rates up to 38% higher than what we saw while actually playing the game. Instead, for this analysis we benchmarked a section from the game's first chapter, which delivered data much more representative of what I saw over the two hours or so that I played the game.

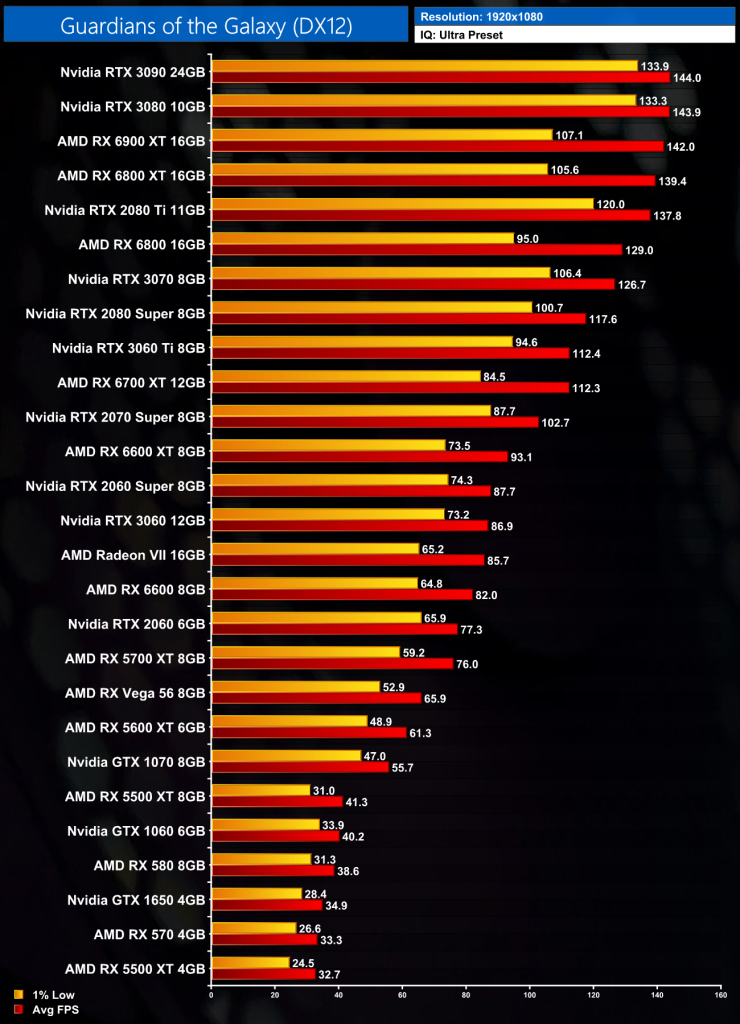

1080p Benchmarks

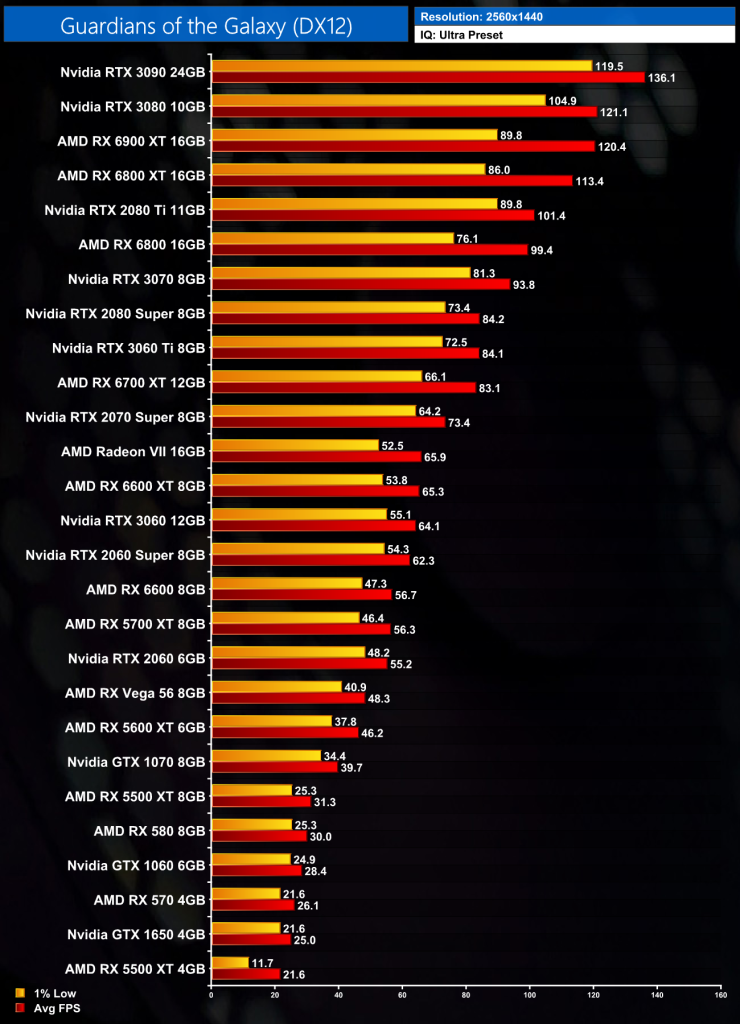

1440p Benchmarks

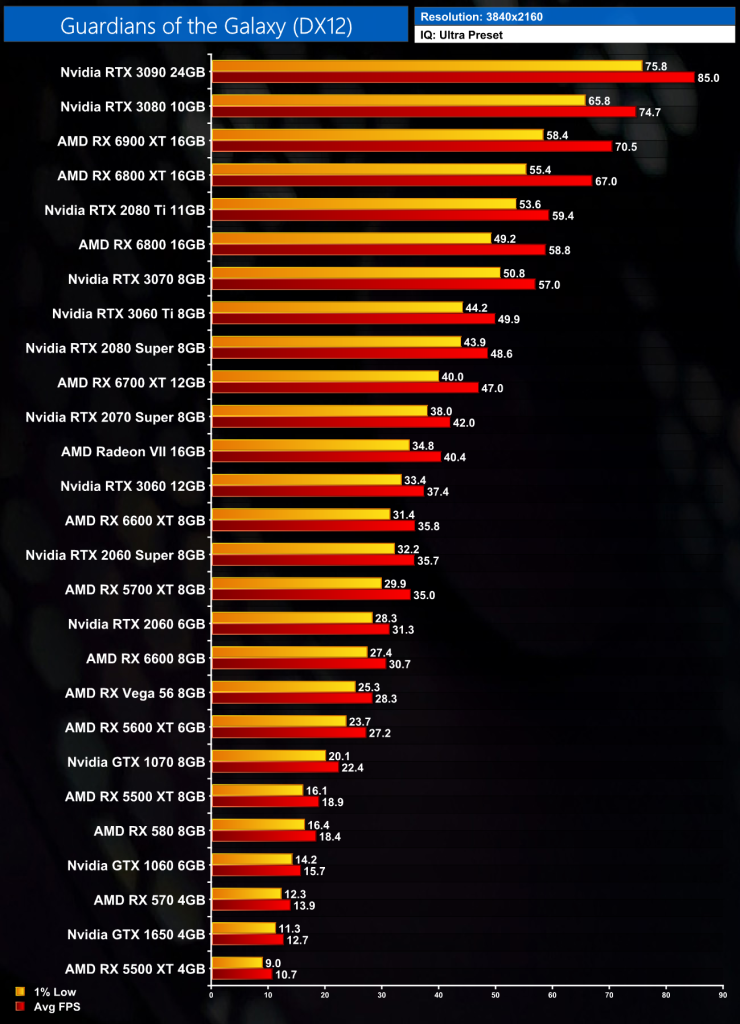

2160p (4K) Benchmarks

Preset Scaling

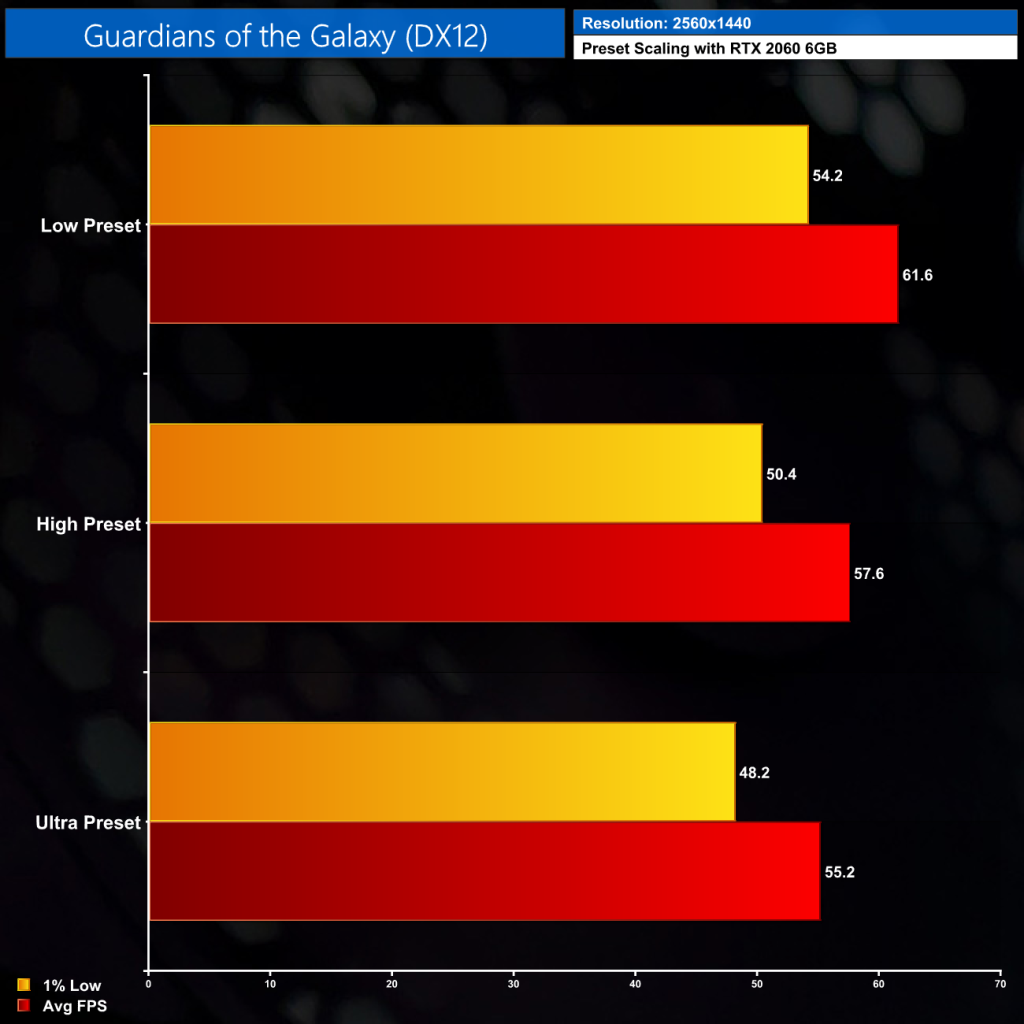

We benchmarked the ultra preset on every single GPU we tested, but how much performance can you gain by dropping down a preset or two? The answer is barely anything: even going from the ultra preset to the low preset resulted in a difference of less than 7FPS. This is pretty shocking in my view, and yes, I did try this with various GPUs, but the scaling was consistent across the board.

I think this is a consequence of the game having only a handful of meaningful image quality settings. Comparing the visuals at ultra versus low settings doesn't show much difference at all, as you can see in the video. However, it does mean that owners of lower-end GPUs may struggle even at low settings, so I really would have liked to see more work done to improve scalability.

VRAM Allocation

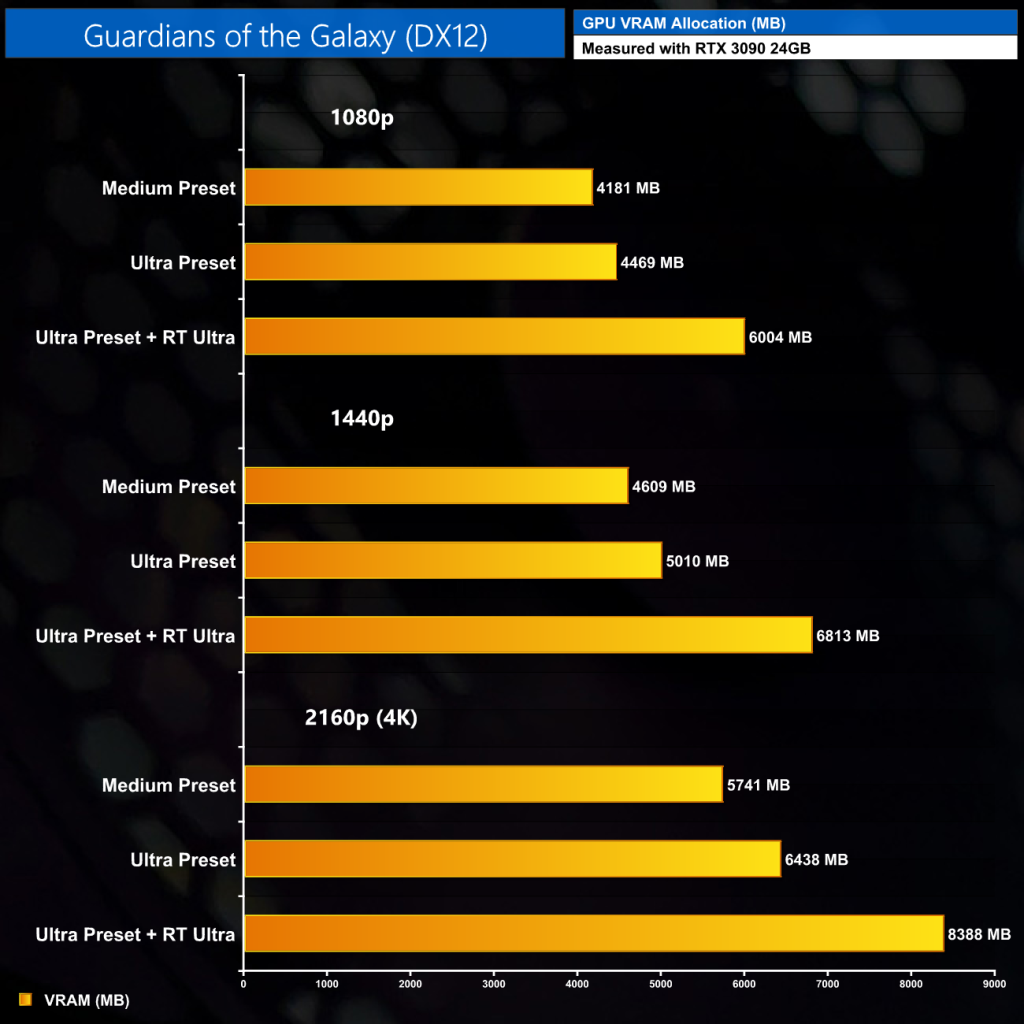

VRAM allocation isn't too high for a modern title. At 1080p ultra, our RTX 3090 allocated almost 4.5GB of VRAM, which could explain why the 4GB GPUs we tested all suffered at 1080p. Allocation rises to around 5GB at 1440p, which isn't so bad, and to around 6.5GB at 4K. Ray tracing, of course, increases VRAM requirements by a significant amount at every resolution.

Closing Thoughts

Marvel's Guardians of the Galaxy is a great-looking game with a fantastic art style. It's not without its fair share of issues, though. The essentially non-existent scaling between presets is chief among them, and I hope it can be fixed with a future update, along with the annoying 144FPS cap that seems to be imposed for no reason. I also noticed a couple of random bugs during my playthrough, including the Star-Lord logo just hanging in mid-air, while AMD's latest driver actually breaks shadows in the game.

Despite those issues, I've been pretty impressed with the overall frame rates and stability of the engine. I didn't notice any stuttering during my time in the game, and while AMD's GPUs do tend to have slightly more erratic frame times and 1% lows, they still perform well, even on the older 21.10.2 driver.

Nvidia's Ampere GPUs do take the upper hand, however, with the RTX 3080 and RTX 3090 leading the pack at every resolution tested. The RX 5700 XT is a particularly poor performer, coming in closer to the RTX 2060 than the RTX 2070 Super, but again, we hope a proper game-ready driver that actually works can help restore some performance there.

The one performance caveat concerns 4GB GPUs. When testing 1080p ultra settings with an RTX 3090, we saw nearly 4.5GB of VRAM allocated, and that could explain why the 4GB cards we tested all struggled to hit even 30FPS. The 4GB RX 5500 XT, for instance, is significantly slower than its 8GB counterpart, so do be wary.

That said, the game's official system requirements list the GTX 1060 6GB as the minimum, and at 1080p ultra settings that card delivered 40FPS on average, so you don't need a beast of a GPU to get a playable frame rate. Particularly low-end cards with less VRAM, however, may struggle.

On the whole, I had a great time playing this game and will definitely be finishing it off this weekend. There are some bugs and issues that need to be ironed out, but considering the visual quality on show, I am pretty happy with what Guardians of the Galaxy can offer.

Update 29/10/21: Shortly after publishing this article, AMD released the Adrenalin 21.10.4 driver, specifically addressing the lighting/shadow issues we pointed out with the 21.10.3 driver. This should help improve performance on AMD GPUs. You can download 21.10.4 HERE.

Discuss on our Facebook page HERE.

KitGuru says: It's a beautiful game with a lovely art style, and it runs pretty well on a wide range of GPUs, too.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards