Adobe Media Encoder CC 2017

Like 3D rendering, video encoding is a task that now takes full advantage of multi-core processors. Although a lot of reviews focus on open-source encoders such as HandBrake, this is a review of professional applications, so we have chosen Adobe Media Encoder CC 2017 (AME) for our test bed instead. You can download a trial of this software from Adobe. For an encoding source, we used the 4K UHD (3,840 x 2,160) version of the Blender Mango Project movie Tears of Steel.

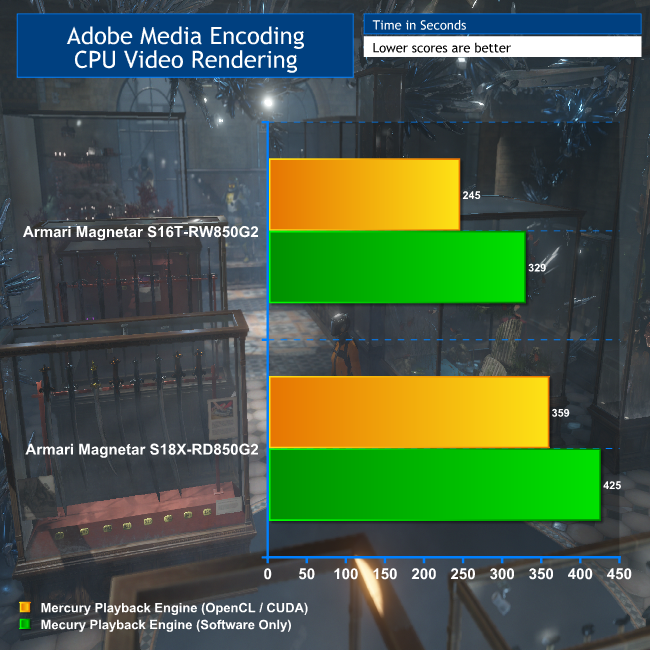

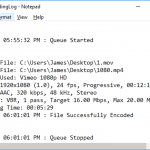

We encoded with the Vimeo 1080p HD preset in AME. This is an MP4 H.264 preset, using High Profile and Level 4.2. There are two modes available for the AME rendering engine (called the Mercury Playback Engine, presumably because it's smooth and, erm, shiny). One uses software only, so it will employ just the CPU. But you can also call in CUDA on NVIDIA graphics or OpenCL on AMD graphics. We tried both GPU-accelerated and CPU-only options on both systems.
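For readers without AME who want to try a roughly comparable CPU-only encode, here is a minimal sketch that builds an FFmpeg command line with the same headline settings (1080p output, H.264, High Profile, Level 4.2). This is not the AME Vimeo preset and not how we ran the test. The file names are hypothetical, and bitrate and audio settings are left at the libx264 and AAC encoder defaults as an assumption.

```python
import shlex


def build_h264_command(src: str, dst: str) -> list[str]:
    """Build an FFmpeg command approximating a 1080p H.264 High@4.2 encode.

    Assumption: this only mirrors the profile, level and frame size named in
    the review; it does not reproduce Adobe's preset.
    """
    return [
        "ffmpeg",
        "-i", src,                 # 4K UHD source file (hypothetical name)
        "-vf", "scale=1920:1080",  # downscale to 1080p
        "-c:v", "libx264",         # software (CPU-only) H.264 encoder
        "-profile:v", "high",      # H.264 High Profile
        "-level:v", "4.2",         # H.264 Level 4.2
        "-c:a", "aac",             # AAC audio for the MP4 container
        dst,
    ]


# Hypothetical file names, for illustration only.
cmd = build_h264_command("tears_of_steel_4k.mov", "tears_of_steel_1080p.mp4")
print(shlex.join(cmd))
```

The script only prints the command, so it runs without FFmpeg installed. Because libx264 runs on the CPU, this is closest in spirit to the Software Only mode described above.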

This is perhaps the most surprising result of all. With the Mercury Playback Engine in Software Only mode, the AMD Ryzen Threadripper was 29 per cent faster than the Intel Core i9. Bring in the GPU, and the difference is even more pronounced, with the AMD system providing 47 per cent faster encoding.
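As a note on the arithmetic, "X per cent faster" can be derived from two encode times in more than one way. The sketch below shows the throughput-based reading next to the "time saved" reading. The encode times are hypothetical values chosen only to illustrate the calculation; they are not our measured results.

```python
def percent_faster(slow_seconds: float, fast_seconds: float) -> float:
    """Throughput gain of the faster system, as a percentage."""
    return (slow_seconds / fast_seconds - 1.0) * 100.0


def percent_time_saved(slow_seconds: float, fast_seconds: float) -> float:
    """Reduction in encode time on the faster system, as a percentage."""
    return (1.0 - fast_seconds / slow_seconds) * 100.0


# Hypothetical encode times in seconds, not taken from the review.
slow, fast = 129.0, 100.0

print(f"{percent_faster(slow, fast):.1f}% faster")
print(f"{percent_time_saved(slow, fast):.1f}% less time")
```

With these made-up numbers, the same pair of results reads as 29.0% faster or as 22.5% less time, so it is worth checking which convention a benchmark chart uses.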

We were definitely not expecting the AMD-AMD option to be supreme in this test over Intel-NVIDIA. If you are video editing with Adobe applications, it looks like AMD is not just value for money, but actually the best choice for performance as well. Again, optimisation is likely a factor, but these are real results with real production software that is widely used in industry.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Fake benchmarks. Sorry, but this isn't possible.

Then please, go ahead and post your own benchmarks of the two systems. Oh wait, you can’t. Keep your tin-hat “fake news” screaming to yourself.

Why does no one put a Blu-ray player into their high-end machines for these tests (or at all; even boutique companies make you add it as an upgrade)? If you have it hooked up over HDMI to a monitor that also acts as your TV screen, then why not make it your multimedia center, like the PS3, PS4 and Xbox One were used for?

AMD releases Threadripper, Intel releases Core i9, AMD will release new 12nm Ryzens. It seems like the competition and leapfrogging is back, which is great for customers.

Intel was the king, then AMD was the king, then Intel was the king..

One thing is for sure: there is no longer a single king, and that’s good because prices are going to go down in all categories. No more monopolistic price hikes.

AMD is back. Kudos, AMD!

If you think about it, it isn’t a fair benchmark.

It should be every component at stock values. No OC.

It seems like the ideal mix might be the Threadripper with the Titan XP.

LOL, yeah, that’s what all the reviewers did with the Ryzen 1600 vs 8400: a no-OC comparison, then they say they will have OC results later.

There is another AMD product that NVIDIA can’t compete with: the Radeon Pro SSG, which has 16GB of memory plus a 2TB SSD tightly integrated with the GPU.

It can handle 8K, VR, 360 video stitching and other highly demanding graphics workloads like butter, while the NVIDIA GPUs either crash with big data or just can’t perform smoothly.

It is an $8,000 GPU card, but if you want the best processing power for large data, the high-end AMD Radeon Pro SSG is the only option.

The CPU is not key here, since most of these applications are GPU-intensive. The CPU part is very secondary, and the difference is negligible for these kinds of tasks when you have a good enough CPU from AMD or Intel.

AMD is coming back to its old glory.

Thanks for this nice review! I’m building a Threadripper and RX Vega multi-GPU system myself, so this is interesting.

I would like to point out that Armari could have read the QVL for the ASRock Taichi and chosen G.Skill Flare-X memory, which works better on the TR4 platform. It does provide a benefit. 64GB at 2,933MHz CL14 is not impressive for a finely tuned system. It could be considered sloppy.

Also, selecting the Fatal1ty X399 motherboard instead would give you 10Gbit LAN.

AMD hasn’t been the king of CPUs since the Athlon, around 2003. And I don’t think they were ever the king of GPUs (nor was ATI).

Phew, I’d like to know how many of these monsters they manufactured,

The 5850/5870 were, for a while, when Nvidia had to postpone the 470/480.

Possibly because people might just see optical drives as obsolete.

I know I have for about 14 years now, and I haven’t really used a DVD or a Blu-ray in that time.

There are storage limitations to consider with optical disks vs massive SSDs and HDDs, plus the fact that it’s easier to damage your optical drives, whereas it’s a lot cheaper to just get an external HDD (even portable ones top out today at 4TB) and put loads of stuff there.

Also, most movies and shows can easily have Blu-ray quality without requiring tons of space thanks to superior compression algorithms.

Fake benchmarks. Sorry, but this isn't possible.

Why? These are two production systems you can buy with three-year warranties. Armari will sell you precisely these systems.

Fake account…

Thanks! The Fatal1ty version of the board is quite a bit more expensive for just 10Gbit LAN, and although I’ve tested this in a previous ASRock board – it’s very quick – most people don’t have 10Gbit LANs so it’s a bit of a waste of money.

Where the RAM is concerned, there is a bit of a RAM shortage in the UK right now. I’m absolutely certain Armari read the QVL. They have a direct line to ASRock and their suggestions for BIOS improvements are generally implemented immediately. But after testing, this was the best RAM that would work in the board with the BIOS at the time. During building, it appeared that you could either have faster RAM or a processor overclock, but not both at the same time. I’ve managed to run the system solidly at 3,066MHz, but it doesn’t make any difference to benchmark results. At 3,200MHz, it’s not entirely stable.

Also, I just did a bit of research and G.Skill doesn’t have a Flare-X 3,200MHz kit as 4 x 16GB, only 2,933MHz, so I very much doubt it would be better than the Corsair memory used for this review.

Well, G.Skill Flare-X is supposed to be guaranteed Samsung B-die, which means that on TR4 you can push it higher. 3.8GHz on the CPU with RAM at 3,200MHz and CL14-13-13-28-42-1T is not unheard of (4 x 16GB Samsung B-die DIMMs).

http://cdn.overclock.net/d/d5/d50fd86a_HCI2.jpeg

Corsair can and do change suppliers as they see fit. A certain version of the RAM corresponds to Samsung B-die, but it is never specified beforehand on the order or merchandise. Only when you open the box and check the Samsung B-die lists around the web do you know whether you got Hynix or Samsung E-die, which can’t be pushed well on the TR4 platform.

AMD is still the absolute king here. Offering performance that high at so much lower a price makes it the clear winner for me, hands down. Intel wants $2,600 for that processor here in Canada, while I can get the top-of-the-line Threadripper for $1,200. More than double the price for marginal differences? No thanks.

This screenshot is not from my system but from a well-working Prime/Blender crunching system by chew.

I would think 3.8GHz with 3,200MHz CL14-13-13 fares better in performance than +200MHz on the core. The Infinity Fabric depends entirely on your RAM timings and frequency, so what we usually find is that increasing RAM frequency with lower timings on TR4 does more than a simple core clock increase.

I know Ryzen gets more benefit from faster RAM than Intel appears to, but I’d love to see the benchmarks to prove what you are saying. We have found the opposite of what you are claiming. When I set the RAM on the Threadripper system to 3,066MHz and left the CPU at the same 4GHz, the Blender benchmark was EXACTLY THE SAME. And, I mean, to within a second. I’ll believe what you’re saying if you can show me benchmarks. Until then it’s uncorroborated theory.

That makes no sense as both systems were tested with their off-the-shelf settings.

Because it’s cheaper to buy a standalone Blu-ray player for a TV that includes the correct codecs. Windows 10 doesn’t ship with the ones needed to natively play Blu-ray discs.

Good points, Petar, though I am worried about the FCC deregulating internet access, where internet companies that don’t enjoy competition and love profits (like Comcast) will now put in paywalls for video websites (as Comcast already did with Netflix, making the company pay more so Comcast wouldn’t slow down its customers’ connection to the website). If Ajit V. Pai gets his way, we will have to pay internet companies twice (or maybe more than one company): one price for access to the internet and another price (or prices) to be permitted full-speed access to the websites! I’d rather have a Blu-ray player on my computer for purchasing both games and movies, given the probable situation of Ajit V. Pai putting the profits of cable (and other internet access) companies over consumers’ wishes for net neutrality, and making me pay more to my internet company for access to websites I have already paid both of them to access!

They’re roughly the same price, mazty, for either external or internal (sometimes the internal is cheaper because the HDMI connection and other things an external Blu-ray player contains [like on the PlayStation 3/4, for example] are already integrated into the computer), and the software to play Blu-ray discs comes with the drives. So why aren’t these companies putting a $70 – $80 Blu-ray player (as opposed to $25 – $50 for a DVD-RW) inside a machine costing $3,000 to possibly as much as $10,000?!

I don’t like competition in general, as it brings out the worst possible qualities.

Also, it effectively makes companies come out with products that are basically identical to each other except with differences in features.

Collaboration on the other hand from companies or even people at large to create highly innovative technologies and content by freely sharing ideas and removing planned obsolescence would be better.

Couple it with recycling to harvest raw materials from older technology so you can make new products (and eliminate the need for resource harvesting from the environment) and just put out the BEST that is possible using the latest science.

Current consumer technology is DECADES behind the latest scientific knowledge, and ‘competition’ is keeping us there artificially.

Look at all the wonderful patents from several decades ago that we had the ability to turn into usable technology a LONG time ago.

Instead, you mainly see people ‘protecting’ their intellectual rights as if their lifeline depends on it… and that’s the problem with the socio-economic system we live in, because it generates mistrust, competition and the worst qualities in humans, coupled with artificially induced scarcity.

Profit and indefinite growth on a finite planet is the reason we are in this mess.

Think of how much more we can achieve through free exchange of ideas, resources, etc… not for profit or competition but for preservation and restoration of the environment, advancement of the whole human species combined with increasing the living standard for everyone (not just select few).

Until we change the current socio-economic system, the outdated rules in place will continue to exist and slow us down at every conceivable turn.

As for people being forced to pay more to the ISP… that’s already happening as is.

It’s a systemic issue at large that leads to these conditions, not lack of Blu-Ray or optical drives in general.

Fact is, it is a lot more effective, faster and just better overall to store content on HDDs, for example, because of their storage capacity, the fact that the data is harder to damage, etc.

Well, damaging the data can be relative depending on the situation… you can hit the computer or magnetize it if you want to, but most people don’t do that to their optical discs, let alone HDDs.

Hawaii, among many others, would like to say hi (290/290X). Might wanna study up on GPU history a little more. It beat the crap outta Kepler in 2012, and nowadays Big Kepler (780 Ti) isn’t anywhere even freaking close (I’m talking entire tiers apart). Heck, Hawaii nearly managed to go toe to toe with the majorly revamped and drastically improved Maxwell 2 (GTX 980; Hawaii thrashed the 970) the year later with pretty much no changes whatsoever. I dunno if I can name another GPU arch in history that has aged as wonderfully as Hawaii. It just keeps kicking arse, year after year after year, while other cards fall by the wayside.

And that’s far from the only one. People get serious selective amnesia when thinking about Nvidia vs AMD/ATI.

The thing is, at the end of the day it does not really matter what came before. It may sway your preference, but what always matters most is which products are out now or in the near future, and who can deliver the best product for YOU regardless of their past failures or successes.

Marginal? Most games only use 1-2 cores. Some AAA games are optimized to use up to 4 cores, but not more than that. The CSS renderer in your browser uses only 1 core (Firefox 57 is about to release the world’s first multithreaded CSS renderer, but it’s not out yet at the time of writing), and as a programmer, I can assure you that optimizing programs for multiple cores is very difficult and hardly ever worth it. Thus, for the vast majority of programs, it doesn’t matter how many cores you

*are manufacturing.

This is an active production product, with very hefty and growing enterprise demand (for a uniquely niche, $8,000 professional GPU, that is). You might be thinking of its Fiji-based (Fury/Fury X/Nano) predecessor, which had the same Radeon Pro SSG name as the Vega model that replaced it. As far as that card goes, iirc AMD never released it into open sale, so examples in the wild are few and far between. It was more of a “selectively sampled proof of concept” that let major scientists and companies test and confirm the idea’s potential, to drum up demand in advance of its much more refined and more widely available successor. By all accounts, that appears to have worked exactly how AMD/RTG planned (for many uber memory-intensive workloads like editing and scrubbing 8K video footage, it’s literally the only GPU on the market that is able to handle them).