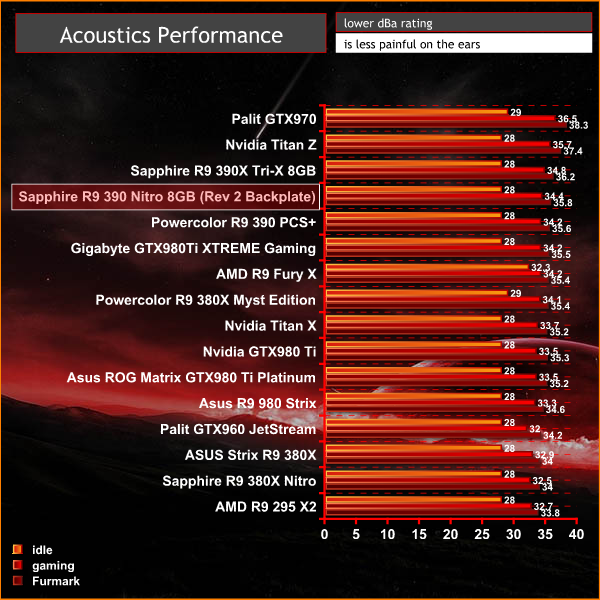

We have built a system inside a Lian Li chassis with no case fans and have used a fanless cooler on our CPU. The motherboard is also passively cooled. This gives us a build with almost completely passive cooling, which means we can measure the noise of just the graphics card inside the system when we run looped 3DMark tests.

We measure from a distance of around 1 meter from the closed chassis and 4 feet from the ground to mirror a real-world situation. Ambient noise in the room measures 28dBA, close to the limits of our sound meter. Why do this? It means we can eliminate secondary noise pollution in the test room and concentrate on only the video card. It also brings us slightly closer to industry standards, such as DIN 45635.

KitGuru noise guide

10dBA – Normal Breathing/Rustling Leaves

20-25dBA – Whisper

30dBA – High Quality Computer fan

40dBA – A Bubbling Brook, or a Refrigerator

50dBA – Normal Conversation

60dBA – Laughter

70dBA – Vacuum Cleaner or Hairdryer

80dBA – City Traffic or a Garbage Disposal

90dBA – Motorcycle or Lawnmower

100dBA – MP3 player at maximum output

110dBA – Orchestra

120dBA – Front row rock concert/Jet Engine

130dBA – Threshold of Pain

140dBA – Military Jet takeoff/Gunshot (close range)

160dBA – Instant Perforation of eardrum

The card is quiet under gaming load, and barely audible next to a case fan. The triple fans spin to produce a fair bit of air, but their pitch is quite low so it is not really that audible. The card exhibits no coil whine under synthetic stress situations.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


It may be faster than the 970GTX, but I’d hardly say they cost the same. With the added power consumption, this baby will certainly cost you a lot more in the end.

Only if you have the card for 5+ years, iirc. Even a gamer’s PC spends most of its time off or idle, so it will have a minimal effect on your power bill.

A 100 watt difference will have a lot more impact than that. Even with the idle time (during which the 970 actually consumes more) it’ll cost you a few quid each year. After five years, it could easily run upwards of 10% of the price you paid, and you don’t have to game a lot to get there.

100W for a few hours every now and then, not really. And the point is it only starts becoming an amount you notice after 5 years, have you ever used a single video card for 5 years?

Assuming an average of 5 hours of game play per day, every day for a month, 100 watts equates to 15 kWh more per month. In the US the average cost per kWh is around $0.12, so this would come out to $1.80 per month, or less than $24 per year in total energy cost increase.

For that minor extra monthly cost you’re getting better performance and a much more robust memory system, which could mean further gains as modern games use more memory.
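The estimate above can be checked with a short sketch. The function name `extra_energy_cost` is hypothetical, and the inputs (100 W extra draw, 5 hours a day, a 30-day month, $0.12 per kWh) are just the assumptions stated in the comment, not measured figures.

```python
def extra_energy_cost(extra_watts, hours_per_day, days, price_per_kwh):
    """Return (extra kWh, extra cost) for a given extra power draw.

    All inputs are assumptions taken from the comment above,
    not measurements from the review.
    """
    extra_kwh = extra_watts / 1000 * hours_per_day * days
    return extra_kwh, extra_kwh * price_per_kwh


# 100 W extra, 5 hours per day, 30-day month, $0.12 per kWh (assumed)
monthly_kwh, monthly_cost = extra_energy_cost(100, 5, 30, 0.12)
yearly_cost = monthly_cost * 12

print(f"{monthly_kwh:.1f} kWh/month, ${monthly_cost:.2f}/month, ${yearly_cost:.2f}/year")
# prints: 15.0 kWh/month, $1.80/month, $21.60/year
```

Changing the hours per day or the price per kWh shows how sensitive the yearly figure is to usage and local electricity rates.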

5 hours a day every day for a year? Someone needs to get outside.

Which means a normal gamer would never notice the difference. That being said, a gamer wouldn’t notice the 8GB of memory either, as the card starts to dip in performance before the memory is used. If the Fury or 980 Ti had 8GB of memory, that’d be different.

MSRP of the 390, according to this article, is about 270 quid.

If your estimate of power cost is true, then after 5 years, at a power cost of “upwards of 10% of the price you paid,” that leads to 27 quid.

Just to be nice to your theory, let’s round that up to 30 quid. Also rounds out the math nicely.

So over 5 years, you’ve spent 30 quid more on running the R9-390 than you would running the GTX 970. That’s 6 quid per year, or 0.50/month.

My questions for you, then:

One, does the R9-390 outperform the GTX 970 to such a degree that the 0.50/month is worth it?

Two, how long would one have to keep using the R9-390 in order for the power cost (at 0.50/month) to make up for the difference in purchase price between the two cards?

Three, be completely honest now, how badly will an extra 0.50/month on your electric bill mess with your monthly budget? Seriously?

Four, how long do you plan to keep that R9-390 or GTX 970? Will it be anywhere near as long as it would take to make up the difference in purchase price?

Five, do you actually care that much about power usage and power cost? Or are you grasping at any reason you can find to justify (to yourself) the brand preference you have chosen?

Wow, to be up there in GTA at 1080p (no AA?), and you couldn’t offer numbers for AoS at 1440p?

That Palit GTX 970 appears to be the “Jetstream from September 23rd, 2014” (is that what was used, and then supposedly downclocked to reference?), and that card normally comes with a 1304MHz boost! I’d bet that if GTA5 got a little AA, the 970 would drop more than this 390 Nitro. The sad part is that the Jetstream is a mixed-bag card, built on a reference “smurf” PCB; it boosts well past the others, so it is like getting an uber card with a non-beefy cooler. It might well run fine out of the box, but looking at what you get, it’s terrible value. I mean, for not even 5% more you get this beautiful Sapphire Nitro that exudes quality and value.

And OMG, if you buy this much card and are worrying about the power you’re using, do yourself a favor and just stop gaming altogether. While you sit there wasting time gaming and adding to global warming, give it up and do something useful for mankind.

While you raise valid points (except for 5 obviously, but I’ll ignore that one), these points are always valid for every minor increase in your energy bill. So you’re right, the price is probably not relevant for this one product. So in that sense, the price difference is irrelevant.

However, I am very meticulous about my energy bill (utilities in general actually), possibly too much so. If it’s fine for one product to spend a bit more, it could be fine for all products, resulting in an overall higher energy bill (and not unimportantly, environmental cost). And if you add 50 pence for a few dozen products, it becomes a lot quickly. Which I guess actually does address the first part of question five.

Yes actually 😀 which is why I’m looking for a new one 😉

So, here the 390 is slower than the 970 at 1080p on every game.

https://www.youtube.com/watch?v=x_5EbUL3BmY

In most cases, yes, the graphics card would run out of horses before VRAM, but it did a great job in Black Ops 3 even when using 7.8GB of VRAM.

Anyone who complains about video card power use but hasn’t replaced all incandescent bulbs with LED light bulbs is a hypocrite. Save 100 watts for $5.