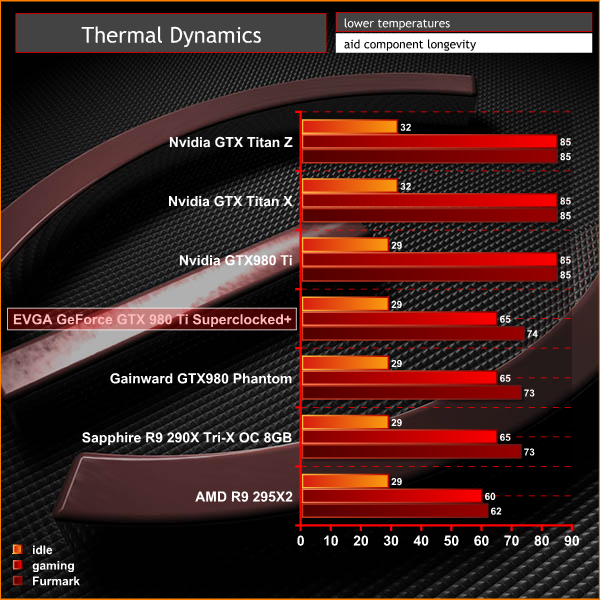

The tests were performed in a controlled, air-conditioned room with the temperature held at a constant 23°C, a comfortable environment for the majority of people reading this. Idle temperatures were measured after sitting at the desktop for 30 minutes. Load measurements were acquired by playing Crysis Warhead for 30 minutes and recording the peak temperature. We have also included Furmark results, recording the maximum temperature throughout a 30-minute stress test. All fan settings were left on automatic.
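
The method above boils down to two rules. The idle figure is the reading taken once the 30-minute settle period has passed. The load figure is the highest reading logged during the 30-minute run. The Python sketch below shows that reduction under some assumptions that are ours, not KitGuru's stated tooling: a single GPU, readings taken one per line from `nvidia-smi --query-gpu=temperature.gpu --format=csv,noheader,nounits -l 1`, and timestamps inferred from the nominal polling interval. The function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List

THIRTY_MINUTES_S = 30 * 60


@dataclass
class Sample:
    t_s: float     # seconds since logging started
    temp_c: float  # GPU temperature in °C


def parse_nvidia_smi_log(lines: Iterable[str], interval_s: float = 1.0) -> List[Sample]:
    """Turn one-reading-per-line output into timestamped samples.

    Assumes a single GPU and a fixed polling interval, so the n-th
    non-blank reading is stamped n * interval_s seconds after the start.
    """
    readings = [line.strip() for line in lines if line.strip()]
    return [Sample(i * interval_s, float(r)) for i, r in enumerate(readings)]


def peak_temperature(samples: List[Sample], duration_s: float = THIRTY_MINUTES_S) -> float:
    """Load figure: the maximum reading inside the test window."""
    in_window = [s.temp_c for s in samples if 0 <= s.t_s <= duration_s]
    if not in_window:
        raise ValueError("no samples inside the test window")
    return max(in_window)


def idle_temperature(samples: List[Sample], settle_s: float = THIRTY_MINUTES_S) -> float:
    """Idle figure: the first reading taken once the settle period has elapsed."""
    settled = [s for s in samples if s.t_s >= settle_s]
    if not settled:
        raise ValueError("logging stopped before the settle period ended")
    return min(settled, key=lambda s: s.t_s).temp_c
```

For example, a load log sampled every 600 seconds that reads 40, 65, 72 and 70 gives a peak of 72°C over the 30-minute window.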

Temperatures are improved over the reference card by 11°C under load.

The GPU uses Nvidia’s GPU Boost 2.0, which dynamically adjusts clock speed and voltage and takes GPU temperature into account.
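
Nvidia does not publish the exact control logic of GPU Boost 2.0, so what follows is only a simplified, hypothetical model of a temperature-target governor. In each control step it raises the clock by one bin while the GPU is below its temperature target and drops it by one bin when the GPU is above it. The result is clamped between the base and maximum boost clocks. The 13 MHz bin size, the example clocks and the 83°C target are illustrative assumptions, not this card's specifications. The sketch also ignores the power limit and the voltage that real GPU Boost adjusts along with the clock.

```python
from dataclasses import dataclass


@dataclass
class BoostGovernor:
    """Hypothetical temperature-target boost model (not Nvidia's algorithm)."""
    base_mhz: int         # floor clock the governor never drops below
    max_boost_mhz: int    # highest clock the governor may select
    temp_target_c: float  # assumed temperature target
    bin_mhz: int = 13     # assumed size of one clock step

    def step(self, current_mhz: int, gpu_temp_c: float) -> int:
        """Return the clock for the next control interval."""
        if gpu_temp_c > self.temp_target_c:
            proposed = current_mhz - self.bin_mhz  # too hot: back off one bin
        elif gpu_temp_c < self.temp_target_c:
            proposed = current_mhz + self.bin_mhz  # thermal headroom: boost one bin
        else:
            proposed = current_mhz                 # exactly on target: hold
        # Clamp between the base clock and the maximum boost clock.
        return max(self.base_mhz, min(self.max_boost_mhz, proposed))


# Hypothetical clocks and target, chosen only to exercise the model.
gov = BoostGovernor(base_mhz=1000, max_boost_mhz=1075, temp_target_c=83.0)
clock = 1000
for temp in (60.0, 70.0, 80.0, 85.0):
    clock = gov.step(clock, temp)
    print(f"{temp:.0f} C -> {clock} MHz")
```

In this model, a cooler that keeps the GPU further below its temperature target leaves the governor free to hold higher boost bins for longer.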

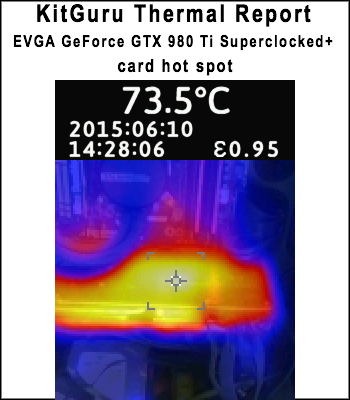

We install the graphics card into our system and measure temperatures on the back of the PCB with our Fluke Visual IR Thermometer/Infrared Thermal Camera. This is a real-world running environment.

Details are shown below.

EVGA have added a backplate to this card, and temperatures on the rear of the PCB are much more evenly distributed than on the reference board. The hottest part is the reverse side of the GPU core area, where we recorded a peak of 73.5°C after 30 minutes of Furmark. This is strictly a worst-case scenario. It is around 10°C lower than the Nvidia reference card and will help improve the lifespan of the hardware.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Sweet jeeeeesus. Incredible performance, really didn’t expect the Ti to be as good as it obviously is!

Kinda sucks it can’t do silent idle 🙁 That’s something the Gaming/Strix have in their favour.

Uh, it idles at 29°C. That’s silent idle, since it’s below 60°C.

This review sucks.

I was curious about this card. It’s nice to see that it’s got the goods to keep cool and efficient, but I’ll be more interested to see the prices after AMD brings the Fury into play. I’m hoping to see this card for around $550; it’s currently $700 in the US.

Why do reviews at 4K with things like 4x MSAA enabled in GTA 5 (when it’s known that MSAA is a performance killer and isn’t needed at 4K) and HairWorks on in Witcher 3?

Do tests using the more reasonable settings that people are actually going to play the damn game with.

What the hell happened to the R9 295X2 in The Witcher III??

No Crossfire support, I assume.

So how is it that Nvidia manages to get drivers ready for the newest games and release them on day one, but AMD has to wait a week or so before their drivers are released? Are Nvidia paying for early code access, I wonder??

Actually, no. The card is just terrible in some games; I just sold one for this exact reason. The Witcher runs fine in some areas, while other areas give you 12 fps. Crap card.

Not really, they just produce better hardware. Look at Dirt Rally, for example. It’s an AMD-sponsored game, and yet if you own an AMD GPU you’re stuck with dotted transparent textures. Team Red just can’t get their driver team to produce decent drivers. Project Cars, Witcher, Dirt Rally: most new titles have serious issues with CrossFire and the 200-series cards.

The reason for this is terrible VRAM. Yes, it has a 512-bit interface and the 980 Ti has only a 384-bit interface, but Delta Colour Compression (third-generation DCC) helps massively.

I agree with you. I am 100% Nvidia and always will be until AMD starts to sort out their drivers. Trouble is, every time I mention this to AMD (or on any AMD-related article) I get nothing but abuse from over-zealous fanboys who can’t see the true facts because of the rose-tinted glasses they seem to view everything through…

EVGA’s ACX 2.0+ fan turns off below 60°C, generating 0dB of noise.
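
The semi-passive behaviour described here, which the idle-noise comments above argue about, can be modelled as a small state machine. In the hypothetical Python sketch below, only the 60°C stop threshold comes from the text. Everything else is our assumption rather than EVGA’s published fan curve: the 65°C restart point (hysteresis, so the fan does not toggle on and off around a single threshold), the 30% minimum duty, and the linear ramp to 100% at 90°C.

```python
from typing import Tuple


def fan_duty(temp_c: float, fan_on: bool,
             stop_below_c: float = 60.0,  # from the text: fan stops below 60°C
             start_at_c: float = 65.0,    # assumed restart point (hysteresis)
             min_duty: float = 30.0,      # assumed minimum spinning duty, %
             full_speed_c: float = 90.0,  # assumed temperature for 100% duty
             ) -> Tuple[float, bool]:
    """Return (duty %, fan_on) for a hypothetical semi-passive fan curve."""
    if fan_on and temp_c < stop_below_c:
        fan_on = False  # cool enough: stop the fan (0 dB)
    elif not fan_on and temp_c >= start_at_c:
        fan_on = True   # warm enough: spin up again
    if not fan_on:
        return 0.0, False
    # Linear ramp from min_duty at the stop threshold to 100% at full_speed_c.
    frac = (temp_c - stop_below_c) / (full_speed_c - stop_below_c)
    frac = min(max(frac, 0.0), 1.0)
    return min_duty + frac * (100.0 - min_duty), True
```

With these assumed values, a card idling at 29°C reports 0% duty, while at 75°C with the fan already spinning it reports 65% duty. Between 60°C and 65°C the result depends on whether the fan was already running, which is the point of the hysteresis band.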

Suck my ass OK.

The results shown here are the same as the 290X, which is what happens when AMD doesn’t have a crossfire driver ready.

Yeah, exactly what I was thinking. And why no 1440p benchmarks instead of 1600p? Who the hell uses a 1600p monitor???

Really? 65°C in game? Mmmm.

If you spend $500 on a card you want max settings on everything.

Nope, if you want max settings you’ll need two cards at $1,200. That’s the price for max settings.

If you spend $400 on a PS4, you’ll only get 1080p.

I’m trolling, but today developers have high-end PCs with tri- or quad-SLI and professional graphics cards to develop games, because if you buy quad SLI, you want to be able to use it at full power, not just 20% of that fabulous power!

For me, I want my card to be fully used at this price!

AMD sucks, that’s all. If AMD made great processors and graphics cards, AMD/ATI would be number one in the market. You want performance? Intel/Nvidia!

We just replaced three 980 STRIX cards with two of these. At +150 core, +75 memory and a 102 power target, they beat our far more heavily overclocked 3-way SLI setup. These cards are actually okay. Take a look at them here; there are some pictures, as well as our 3DMark results for comparison. http://computer.bazoom.com/dk/galleri/8503-stationaer-asus-rog-blackredmetal-extreme