For the last 14 days we have been testing and retesting all the video cards in this review with the latest 15.6 Catalyst and 353.30 Forceware drivers. We have also selected some new game sections to benchmark during our ‘real world runs’.

If you want to read more about our test system, or are interested in buying the same KitGuru Test Rig, check out our article with links on this page. We are using an Asus PB287Q 4K monitor and an Apple 30-inch Cinema HD monitor for this review.

Due to reader feedback we have changed the 1600p tests to 1440p, and we have also disabled Nvidia-specific features such as HairWorks in The Witcher 3: Wild Hunt, as they can have such a negative impact on partnering hardware.

Anti-aliasing is also now disabled in our tests at Ultra HD 4K, as readers have indicated they don’t need it at such a high resolution.

If you have other suggestions please email me directly at zardon(at)kitguru.net.

Cards on test:

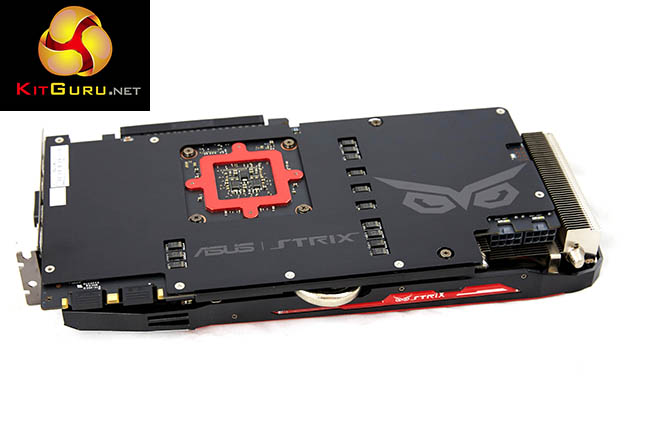

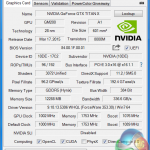

ASUS STRIX Gaming GTX 980 Ti DirectCU 3 (1,216 MHz core / 1,800 MHz memory)

Visiontek Radeon R9 Fury X 4GB (1,050 MHz core / 500 MHz memory) & (1,130 MHz core)

Sapphire R9 295X2 (1,018 MHz core / 1,250 MHz memory)

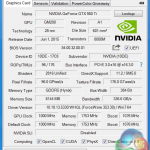

Nvidia Titan Z (706 MHz core / 1,753 MHz memory)

Gigabyte GTX 980 Ti G1 Gaming (1,152 MHz core / 1,753 MHz memory)

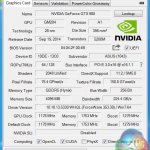

Nvidia Titan X (1,002 MHz core / 1,753 MHz memory)

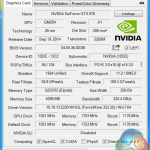

Nvidia GTX 980 Ti (1,000 MHz core / 1,753 MHz memory)

Asus GTX 980 Strix (1,178 MHz core / 1,753 MHz memory)

Sapphire R9 390 X 8GB (1,055 MHz core / 1,500 MHz memory) & (1,144 MHz core / 1,631 MHz memory)

Sapphire R9 390 Nitro 8GB (1,010 MHz core / 1,500 MHz memory) & (1,125 MHz core / 1,637 MHz memory)

Sapphire R9 290 X 8GB (1,020 MHz core / 1,375 MHz memory)

Asus R9 290 Direct CU II (1,000 MHz core / 1,250 MHz memory)

Asus R9 285 Strix (954 MHz core / 1,375 MHz memory)

Palit GTX 970 (1,051 MHz core / 1,753 MHz memory)

Software:

Windows 7 Enterprise 64 bit

Unigine Heaven Benchmark

Unigine Valley Benchmark

3DMark Vantage

3DMark 11

3DMark

Fraps Professional

Steam Client

FurMark

Games:

GRID Autosport

Tomb Raider

Grand Theft Auto 5

The Witcher 3: Wild Hunt

Metro: Last Light Redux

We test under real-world conditions, meaning KitGuru runs each game section five times, keeps the runs closely matched, and then averages the results to get an accurate figure. If we use scripted benchmarks, they are mentioned on the relevant page.
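As a rough illustration of how five closely matched runs can be reduced to a single figure, here is a minimal Python sketch. The function name `summarise_runs` and the frame-rate values are hypothetical and are not taken from our results; the sketch simply reports the mean alongside the median and the spread, so a run that was not closely matched is easy to spot.

```python
from statistics import mean, median


def summarise_runs(fps_runs):
    """Return (mean, median, spread) for a list of per-run average frame rates."""
    if not fps_runs:
        raise ValueError("need at least one run")
    # Spread is the gap between the fastest and slowest run.
    spread = max(fps_runs) - min(fps_runs)
    return mean(fps_runs), median(fps_runs), spread


# Hypothetical average-fps figures from five manual runs (illustrative only).
runs = [61.0, 60.0, 62.0, 59.0, 63.0]
avg, mid, spread = summarise_runs(runs)
print(f"mean {avg:.1f} fps, median {mid:.1f} fps, spread {spread:.1f} fps")
```

When the runs are closely matched, the mean and median land very near each other; a large spread suggests a run should be repeated.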

Game descriptions edited with courtesy from Wikipedia.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


still laughing at the R9 295X2 performance on the Witcher 3 hahahahahahahahaha (sorry).

Well I suspect that if AMD were to ever work out their driver-level Crossfire support for W3, the 295X2 would likely at least trade blows with the Titan Z. As it is, it looks just like I would expect a single reference 290X to look, because as far as W3 is concerned, that’s what it is.

It’s an Nvidia showcase title, I don’t expect any better.

Amazing card.

Good deal faster than Fury X on 4K, smokes it on 1440p which is my preferred resolution, good price, premium quality components used throughout the card, more quiet than Fury X.

Good test KitGuru.

They sacrificed some temps to make it more quiet. I fully support that !

i just agree with kitguru.

it’s a dual chip card (so basically crossfire) and it can’t even run past 45 fps on 1440p, that is piss-poor performance, even their single chip cards beat it

i am getting this and a hyper 612 pwm to upgrade my currently crippled rig

i7 2600

GTX 560 from msi that broke so gt 730 1 gb ddr3 64 bit from msi is the temp. gpu

16GB ram

TBs of HDD

etc

having put up with infernal stock and laptop coolers running at 6000 rpm (100rps or 10ms/rev)

i won’t mind the extra fan speed for cooling… 3000 rpm seems to be the max i can tolerate a non optimised fan design but with this i think i can go to hell with the fans… although 2-3000 rpm at max should be enough that should be like 50 degrees right

SIDENOTE: because i have a micro atx mb it does mean the graphics card can only use the top pci slot so it means the 612 would almost touch the asus but hey more cooling right…

cool the cpu and backside of the gpu with 1 fan (+case)

in another review the temps were 80 ish ‘stock’ and 70 ish oc with a more aggressive fan

so that means it would be well under 65 with ‘stock’ clocks and more fan speed

40 fps @1440p is disastrous for that card, AMD are slowly killing themselves because of poor drivers and optimisation. If you look at Tomb Raider, which is an AMD showcase title (TressFX’s first outing), Nvidia perform very well on there. If Nvidia can get their cards to perform well on AMD-biased titles then why can’t AMD do the same on Nvidia-biased titles?

Because tech like TressFX is made open, deliberately, by AMD. When Tomb Raider came out and debuted TressFX, for a week or two Nvidia fans were screaming and moaning that it didn’t work properly on their cards, until Nvidia fixed it in drivers, and lo and behold, it suddenly worked BETTER on Nvidia cards. It was easy for them because TressFX is open, Nvidia picked up the base code and fixed it up in their drivers.

AMD can’t do that with Nvidia-biased titles because Gameworks features (such as Hairworks) are a black-box. Nvidia doesn’t open that stuff up, they lock it up.

By the way, the new Catalyst driver adds Crossfire support for Witcher 3, so I would expect the R9 295X2 to start kicking ass again at that game.

I’m definitely getting two of these. Nice review and great looking/performing card.

Either AMD need to start shutting Nvidia out or Nvidia need to start sharing more……it is unfair on the gamers.

This is what AMD fans have been saying for a while now. AMD simply can’t afford to try to shut Nvidia out, even if they wanted to: one failed attempt could be disastrously expensive for them. And their open approach tends to benefit all gamers when it’s successful. Nvidia on the other hand has piles of money to spend when Huang’s not swimming around in it like Scrooge McDuck, and a veritable army of devotees who would rather a feature not exist if it’s not an Nvidia exclusive. In my opinion, TressFX is superior to HairWorks (which is just “tessellate the **** out of it” written into code) and is advantageous because it works really, really well on all platforms, but Nvidia has 75% (ish) of the market, so game companies tend to do (and use) what they say.

why 4 gigabytes for the gpu? the gtx 980 ti should get 6 gigabytes, right?

Yes 1440p is great – I have a triple 1440p setup – Dual 980ti Strixii 🙂

Me too – They are in the post (hopefully)