We have been retesting a slew of graphics cards over the last 10 days, focusing on the latest Nvidia and AMD drivers. We have also selected some new game sections to benchmark during our ‘real world runs’.

Additionally, even though it is not close to being finished, we wanted to include some findings from an early access build of Stardock's DirectX 12 capable (Windows 10) Ashes of the Singularity, which uses the Nitrous game engine. We are confident this is not an indication of how the game will run when it reaches retail, but it is still interesting to showcase today. The early access build is available to buy on Steam.

If you want to read more about our test system, or are interested in buying the same KitGuru Test Rig, check out our dedicated article. We are using an Asus PB287Q 4K monitor and an Apple 30 inch Cinema HD monitor for this review today.

Due to reader feedback, we have changed the 1600p tests to 1440p. Anti-aliasing is also now disabled in our tests at Ultra HD 4K resolution, as readers have indicated they don’t need it at such a high resolution.

If you have other suggestions please email me directly at zardon(at)kitguru.net.

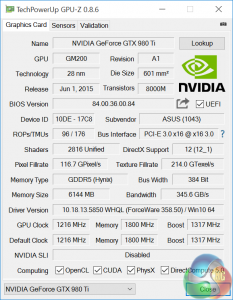

Asus ROG Matrix GTX 980 Ti Platinum Edition (1,216 MHz core / 1,800 MHz memory)

Comparison Cards on test:

Sapphire R9 295X2 (1,018 MHz core / 1,250 MHz memory)

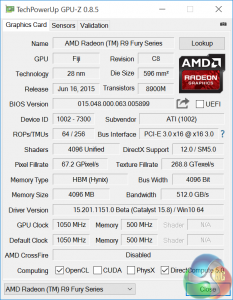

AMD Fury X (1,050 MHz core / 500 MHz memory)

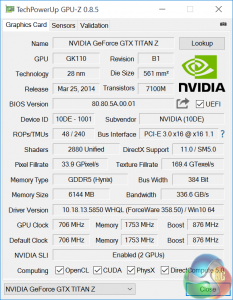

Nvidia GTX Titan Z (706 MHz core / 1,753 MHz memory)

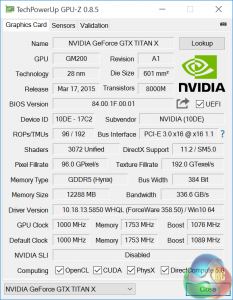

Nvidia GTX Titan X (1,000 MHz core / 1,753 MHz memory)

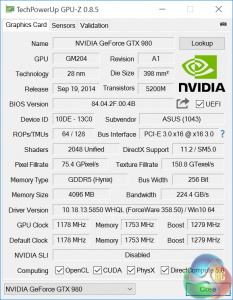

Asus GTX 980 Strix (1,178 MHz core / 1,753 MHz memory)

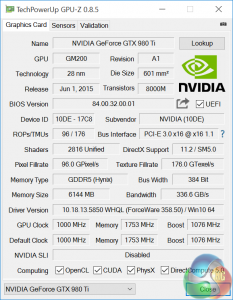

Nvidia GTX 980 Ti (1,000 MHz core / 1,753 MHz memory)

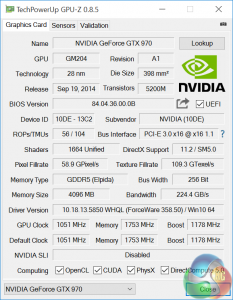

Palit GTX 970 (1,051 MHz core / 1,753 MHz memory)

Sapphire R9 390X Tri-X 8GB (1,055 MHz core / 1,500 MHz memory)

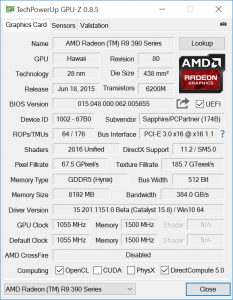

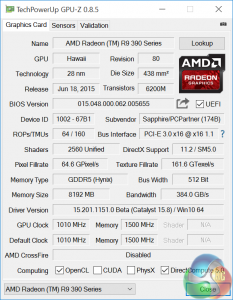

Sapphire R9 390 Nitro 8GB (1,010 MHz core / 1,500 MHz memory)

Software:

Windows 10 64-bit

Unigine Heaven Benchmark

3DMark 11

3DMark

Fraps Professional

Steam Client

FurMark

Games:

Ashes of the Singularity (early access build)

GRID Autosport

Tomb Raider

Grand Theft Auto 5

Metro 2033 Redux

We test under real world conditions, meaning KitGuru plays through each game section across five closely matched runs and then averages the results to get an accurate figure. If we use scripted benchmarks, they are mentioned on the relevant page.
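As a rough illustration of the averaging step, here is a minimal Python sketch that summarises five runs of one game section. The frame rates are hypothetical placeholders, not results from this review, and the helper name `summarise_runs` is likewise hypothetical. It reports both the mean and the median, since the two can differ slightly for the same set of runs.

```python
from statistics import mean, median


def summarise_runs(fps_runs):
    """Return (mean, median) of the average-FPS figures from a set of benchmark runs."""
    if len(fps_runs) == 0:
        raise ValueError("need at least one run")
    return mean(fps_runs), median(fps_runs)


# Hypothetical average-FPS figures from five closely matched runs (illustrative only).
runs = [61.2, 60.8, 62.0, 61.5, 60.9]
avg, mid = summarise_runs(runs)
print(f"mean: {avg:.2f} fps, median: {mid:.2f} fps")
```

With closely matched runs the mean and median land very close together; a single outlier run would pull the mean further than the median.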

Game descriptions edited courtesy of Wikipedia.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


So how does this card compare to the MSI Lightning GTX 980 Ti, and the EVGA Kingpin 980 Ti? Are there significant differences, or is it too close to call?

We haven’t tested the Kingpin, but we did test the XLR8 model, which is a little lower in the chain: http://www.kitguru.net/components/graphic-cards/zardon/pny-gtx980-ti-xlr8-oc/ My colleague Luke tested the Lightning, but I haven’t seen it myself: http://www.kitguru.net/components/graphic-cards/luke-hill/msi-gtx-980-ti-lightning-6gb-review/

Nice card, but I really wouldn’t want to be spending £650 on a Maxwell card so late in its life cycle. Pascal cards costing half as much will have roughly the same performance in 6-8 months.

So is this a 2.5 slot or 3 slot design? I keep being told it's 3 slot.

Then high-end Pascal in Q2 2017.

Allan, is this 2.5 slot or 3 slot? I keep being told it is 3 slot.

Why do you keep changing your GPU tests? It makes it harder to compare results across different setups. Stick to one method, or else retest all the GTX 980 Ti cards. I would like to see a comparison of the GTX 980 Ti Lightning and the Asus GTX 980 Ti Matrix.

It’s 2.5 slot.

Source? Even if true, the GTX 680 outperformed the GTX 580 by 35% at 1440p on day one of release, and had a better feature set and driver support. That means in 2016 we should have a 16nm $500-600 card from AMD/NV that is 30-40% faster than the 980 Ti. It won’t be double the performance, but it’ll likely have 8GB of memory, a better feature set and, most importantly, the latest driver focus (*Kepler gimping* ahem).

First, the 16nm fab is new and untested, and you can’t go large and power hungry on first generation chips. Don’t believe how unreliable a new fab can be? Look at how long it took Nvidia to choose between Samsung and TSMC before finally siding with TSMC due to their longer working history and reliability.

Second, they would kill their own sales by offering their best card right at the launch of the new generation. They would have nothing to give their enthusiast market as a reason to upgrade within 3 years of launch. Look at Kepler: people bought the 680, then the Titan came out, then the 780, and most people who bought the 680 eventually upgraded to one of those cards. Then when Maxwell launched, they started with just the 750 and 750 Ti. Next they released the 980, which some bought, and finally, after a while, the Titan X and 980 Ti, which gave people (the enthusiast market, again) a reason to upgrade.

Nvidia likes incremental upgrades. They don’t want you to buy a card now and then launch another card a year later that completely destroys the card you bought. They put out one card, then 12 months later they bring out another card that is generally just 10-20% faster, so it’s better than what they offered before, but not enough to piss off anyone who bought their last gen cards. Then another 6-12 months later they put out an even better card, with 50%+ better performance than their older cards, and people start upgrading again.

If they come out with their absolute best card on day one of the Pascal launch, they are going to have nothing interesting to bring to market for 2 to 3 years until Volta comes out, and that would be silly, even if it were possible with the new 16nm fab. In business terms, you need to offer a product that is a bit better than your competition's without being too much better or too costly for you. So they just need to put out a slight performance increase, sell the card on much lower power consumption, wait for AMD to release something else, then launch a bigger die version themselves, and back and forth they go. Just a quick reference:

FERMI

GTX 580 = 520mm²

KEPLER

GTX 680 = 294mm²

GTX Titan = 561mm²

MAXWELL

GTX 750 Ti = 148mm²

GTX 980 = 398mm²

GTX Titan X = 601mm²

Do you see the pattern? Small, Medium, Big, restart. Don’t think about it purely in terms of die size, because it’s really about transistor count. At 16nm, even a Pascal die the size of the GTX 680's (294mm²), along with HBM2, would result in performance close to the Titan X, and perhaps even higher, since lower heat and power consumption allow higher clocks.

Hope that helps.

Also, Nvidia hasn't even released the GTX 990, so why would high-end Pascal come out in Q1 2016? No one would buy the GTX 990.

Also, do you really think it will be 30 percent? Node shrinks do not increase performance; it will be the same performance in games. And we are comparing against the GTX 980 Ti, not the GTX 980. The GTX 1080 will have the same performance as the GTX 980 Ti.

https://www.youtube.com/watch?v=Lqq6gJlKvtk

There you go, all you need to know 🙂