It's strange to think it has now been a year – almost to the day – since Intel launched the A750 and A770 GPUs. Stranger still is the fact that the A580 was also announced alongside those graphics cards, yet it went completely unheard of for months on end. That changes today, however, as the A580 has finally landed, with Intel targeting a $179 MSRP. We put it through its paces and find out how it stacks up against the competition.

When I reviewed both the Intel A750 and A770 this time last year, my main takeaway was how Intel's Alchemist silicon showed promise, but the driver side was really holding things back. Thankfully, Intel has been working continuously to improve things, and when I revisited the A770 earlier this year, it was impressive just how far things had come.

Now, with the launch of the Intel Arc A580, it's time to see if Alchemist has what it takes to compete at the $179 price point, with the likes of the RX 6600 and RTX 3050 currently occupying this market segment. This review also marks Sparkle's return to our pages, with the former Nvidia partner back in the business making Intel-based graphics cards. Let's find out what it can bring to the party…


| | Arc A770 | Arc A750 | Arc A580 | Arc A380 |
| --- | --- | --- | --- | --- |
| Silicon | ACM-G10 | ACM-G10 | ACM-G10 | ACM-G11 |
| Process | TSMC N6 | TSMC N6 | TSMC N6 | TSMC N6 |
| Render Slices | 8 | 7 | 6 | 2 |
| Xe Cores | 32 | 28 | 24 | 8 |
| Shaders | 4096 | 3584 | 3072 | 1024 |
| XMX Engines | 512 | 448 | 384 | 128 |
| RT Units | 32 | 28 | 24 | 8 |
| Texture Units | 256 | 224 | 192 | 64 |
| ROPs | 128 | 112 | 96 | 32 |
| Graphics Clock | 2100 MHz | 2050 MHz | 1700 MHz | 2000 MHz |
| Memory Config | 8/16GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 | 6GB GDDR6 |
| Memory Data Rate | 17.5 Gbps | 16 Gbps | 16 Gbps | 15.5 Gbps |
| Memory Interface | 256-bit | 256-bit | 256-bit | 96-bit |
| Memory Bandwidth | 560 GB/s | 512 GB/s | 512 GB/s | 186 GB/s |
| PCIe Interface | Gen 4 x16 | Gen 4 x16 | Gen 4 x16 | Gen 4 x8 |
| TBP | 225W | 225W | 185W | 75W |

First, it's worth recapping the specs here. With the A580, Intel is continuing to use its ACM-G10 silicon – the GPU that was the basis of both the A750 and A770. This time, the A580 is cut down and uses just 6 render slices, or 24 Xe cores. Each Xe core offers 16 vector engines, with each vector engine housing eight FP32 ALUs, for a grand total of 3072. Each Xe core is accompanied by a Ray Tracing Unit, while we also find 192 TMUs and 96 ROPs.

The memory subsystem, meanwhile, is identical to the A750. That means we find 8GB of GDDR6 operating at 16Gbps, with a 256-bit memory interface, giving a total memory bandwidth of 512 GB/s.
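For anyone who wants to check the arithmetic behind those headline figures, here is a minimal Python sketch. It uses only the numbers quoted above; the constant names are my own and purely illustrative.

```python
# Shader (FP32 ALU) count: Xe cores x vector engines per core x ALUs per engine.
XE_CORES = 24
VECTOR_ENGINES_PER_CORE = 16
FP32_ALUS_PER_ENGINE = 8

shaders = XE_CORES * VECTOR_ENGINES_PER_CORE * FP32_ALUS_PER_ENGINE

# Memory bandwidth: per-pin data rate (Gbps) x bus width (bits) / 8 bits per byte.
DATA_RATE_GBPS = 16
BUS_WIDTH_BITS = 256

bandwidth_gb_s = DATA_RATE_GBPS * BUS_WIDTH_BITS / 8

print(shaders)         # 3072
print(bandwidth_gb_s)  # 512.0
```

The same bandwidth formula reproduces the other columns in the spec table, for example 15.5 Gbps over a 96-bit bus works out to 186 GB/s for the A380.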

Clock speed has been cut down for the A580 however, with a 1.7GHz reference clock, but as we shall see, Sparkle has increased this to 2GHz via a factory overclock.

Lastly, total board power is rated at 185W, but this has also been increased by Sparkle, something we look at closely in this review using our in-depth power testing methodology.

The Sparkle Arc A580 Orc ships in a dark blue box, with an image of the graphics card taking up most of the front. On the back, Sparkle highlights a few key features of the card and the cooler.

Inside, there's no quick start guide or anything like that – the only accessory is a promo code for two free games (Gotham Knights and Ghostbusters: Spirits Unleashed) based on the current campaign Intel is running.

As for the card itself… it's very blue. To be honest, I am not a fan of the design at all. To me it looks quite dated, and a blue graphics card surely limits the overall appeal – what if you already have a colour-coordinated build? Even the PCB is blue, which is certainly a bold choice!

That said, while the shroud is made of plastic, it feels reassuringly sturdy in the hand and there is a black metal backplate as we will see below. We can also note the two fans, each measuring in at 90mm diameter.

The Orc is relatively compact too, measuring in at 222 x 101 x 40.9mm, so it's only just thicker than two PCIe slots. I also weighed it at 734g on my scales.

The front side is home to the Sparkle logo (the only LED lighting zone on the card) and the Intel Arc branding printed in white text.

We can also see the full-length metal backplate with the blue PCB clearly visible underneath. Strangely, there are a few cut-outs in the backplate which would normally allow air to pass through the heatsink and out the back of the card… except the PCB is blocking the cut-outs anyway, so I'm not too sure what this achieves.

Back to that LED zone however, this uses what Sparkle describes as its patent-pending ThermalSync technology. Essentially the Sparkle logo changes colour based on the temperature of the GPU – it shows blue when idling, and changes to yellow when gaming (I saw it change when GPU temperatures exceeded 64C). I didn't see it change to red to indicate an even higher temperature, though of course this will depend on your case's airflow setup and ambient conditions.

Finally, power is delivered by two 8-pin inputs – for reference, the A750 Limited Edition uses one 8-pin and one 6-pin. We can also note three DisplayPort 2.0 video outputs, and one HDMI 2.1 connector.

Driver Notes

- AMD GPUs were benchmarked with the Adrenalin 23.9.3 driver.

- All Nvidia GPUs were benchmarked with the 537.42 driver.

- Intel Arc A750 was benchmarked with the 31.0.101.4885 driver.

- Intel Arc A580 was benchmarked with the 31.0.101.4830 driver supplied to press.

Results are only directly comparable where this exact configuration has been used.

Test System:

We test using a custom built system from PCSpecialist, based on Intel’s Raptor Lake platform.

| Component | Specification |
| --- | --- |
| CPU | Intel Core i9-13900KS |
| Motherboard | Gigabyte Z790 Gaming X AX |
| Memory | 32GB (2x16GB) Corsair Dominator Platinum RGB DDR5 6000MHz |
| Graphics Card | Varies |
| SSD | 4TB Seagate Firecuda 530 Gen 4 PCIe NVMe |
| Chassis | Corsair 5000D Airflow Tempered Glass Gaming Case |
| CPU Cooler | Corsair iCUE H150i Elite RGB High Performance CPU Cooler |
| Power Supply | Corsair 1600W Pro Series Titanium AX1600i Digital Modular PSU |
| Operating System | Windows 11 22H2 |
| Monitor | MSI Optix MPG321UR-QD |
| Resizable BAR | Enabled for all supported GPUs |

Comparison Graphics Cards List

- MSI Ventus RTX 4060 8GB

- Palit RTX 3060 StormX 12GB

- Palit RTX 3050 StormX 8GB

- Nvidia RTX 2060 FE 6GB

- Nvidia GTX 1060 FE 6GB

- Sapphire RX 7600 Pulse 8GB

- Gigabyte RX 6600 Eagle 8GB

- Gigabyte RX 6500 XT Eagle 4GB

- Intel Arc A750 LE 8GB

- Sparkle Arc A580 Orc 8GB

All cards, except the Sparkle Arc A580 Orc that is the subject of this review, were tested at reference specifications.

Software and Games List

- 3DMark Fire Strike & Fire Strike Ultra (DX11 Synthetic)

- 3DMark Time Spy (DX12 Synthetic)

- 3DMark DirectX Raytracing feature test (DXR Synthetic)

- Assassin's Creed Valhalla (DX12)

- Cyberpunk 2077 (DX12)

- Forza Horizon 5 (DX12)

- God of War (DX11)

- Horizon Zero Dawn (DX12)

- The Last of Us Part 1 (DX12)

- A Plague Tale: Requiem (DX12)

- Ratchet and Clank: Rift Apart (DX12)

- Red Dead Redemption 2 (DX12)

- Resident Evil 4 (DX12)

- Returnal (DX12)

- Shadow of the Tomb Raider (DX12)

- Total War: Warhammer III (DX11)

We run each benchmark/game three times, and present mean averages in our graphs. We use FrameView to measure average frame rates as well as 1% low values across our three runs.
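To illustrate how three runs can be boiled down to one average and one 1% low figure, here is a rough Python sketch. It is not FrameView's implementation: the function names are hypothetical, the frame times are made-up sample data, and the 1% low is assumed to mean the average frame rate over the slowest 1% of frames, which is one common definition but may differ from what FrameView reports.

```python
from statistics import mean

def average_fps(frame_times_ms):
    """Average FPS over a run: frames rendered divided by elapsed seconds."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

def one_percent_low_fps(frame_times_ms):
    """Assumed definition: average FPS over the slowest 1% of frames."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    return 1000.0 * count / sum(slowest_first[:count])

def summarise(runs):
    """Mean of the per-run figures across all runs (three in this review)."""
    return (
        mean(average_fps(r) for r in runs),
        mean(one_percent_low_fps(r) for r in runs),
    )

# Hypothetical frame times in milliseconds, not measured data.
runs = [
    [10.0] * 99 + [20.0],
    [10.0] * 99 + [25.0],
    [10.0] * 99 + [40.0],
]
avg, low = summarise(runs)
print(f"Average: {avg:.1f} FPS, 1% low: {low:.1f} FPS")
```

With the sample data above, a single slow frame barely moves the average but dominates the 1% low, which is why both figures are worth reporting.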

Before we dive into the benchmarks, we should clarify how we've tested the Sparkle Arc A580 Orc today. Usually, we test all of our comparison GPUs at reference specifications for these reviews – that's easier when we get the Nvidia Founders Edition, or Intel Limited Edition boards for review. The Sparkle Orc is an AIB card and not only has it been factory overclocked from 1.7GHz up to 2GHz, but it quickly became clear from our testing that Sparkle has significantly increased the power draw – Intel claims the A580 should hit 185W, but we saw 215W power draw from the Orc.

While Intel's tuning tool doesn't allow you to lower clock speed, we did reduce the power limit of the Orc, setting a 132W chip-only limit, which resulted in as close to 185W total board power as we could get.

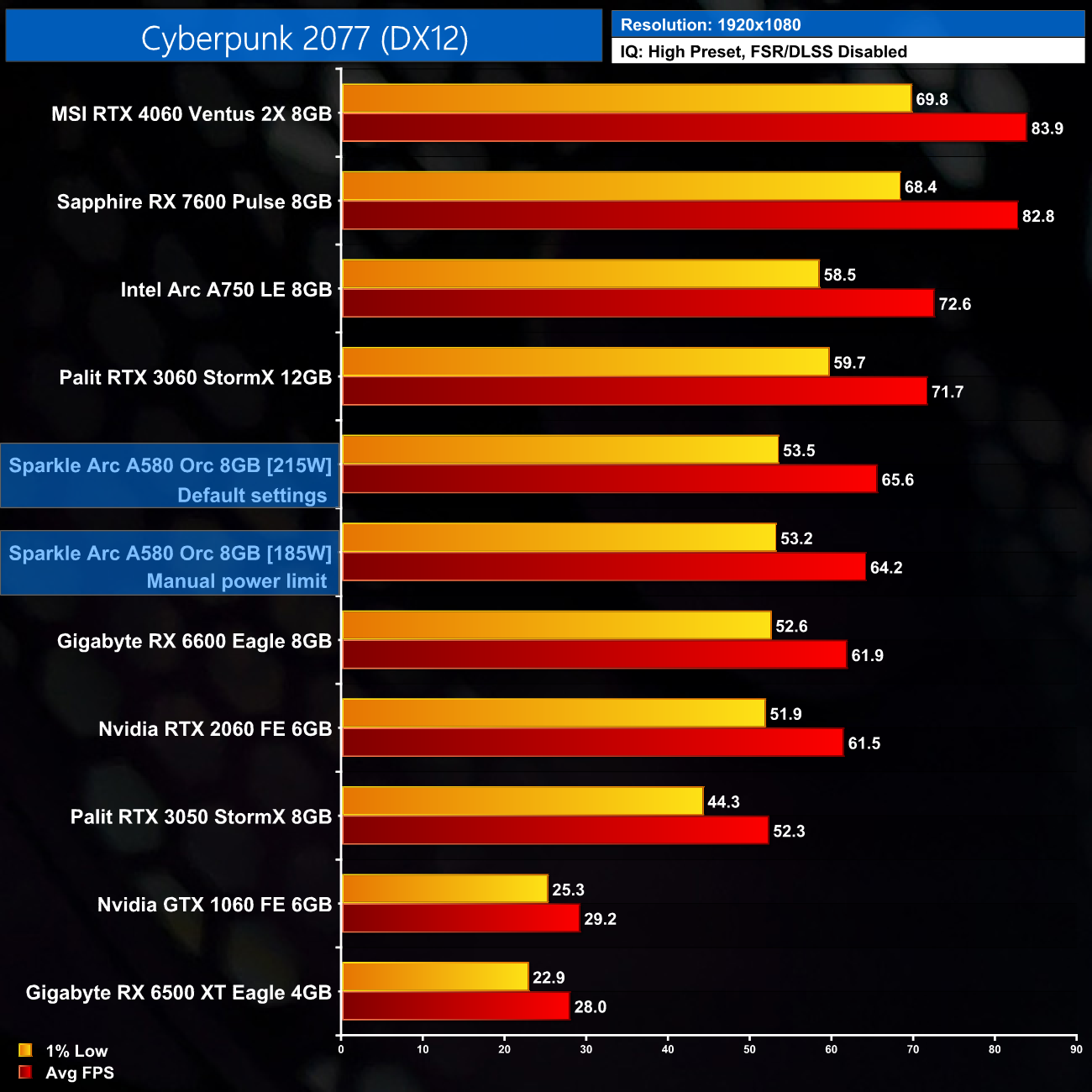

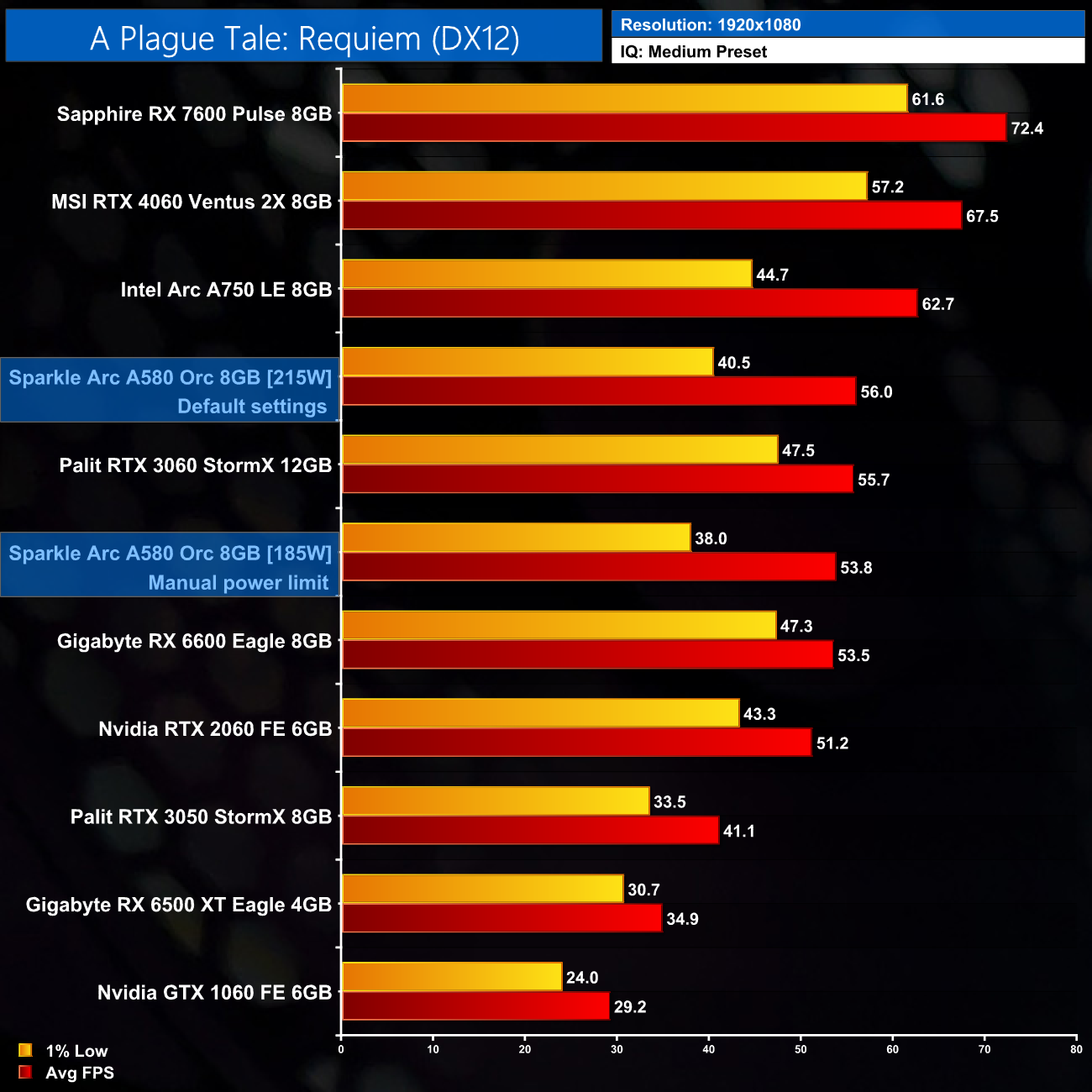

Doing so did reduce performance, but not by a whole lot – by 2% in Cyberpunk 2077 and 4% in A Plague Tale: Requiem. This is just something to bear in mind across this review – we tested the Sparkle in its default, out of the box configuration, so it will run a small bit faster than a reference clocked card.
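To put that trade-off into numbers, the sketch below estimates how much performance per watt changes when moving from the default configuration to the 185W tune. It is only a back-of-the-envelope calculation: it assumes the card draws the full 215W and 185W in these two games, which will not hold exactly, and the function name is my own.

```python
DEFAULT_POWER_W = 215.0  # observed board power in the default configuration
TUNED_POWER_W = 185.0    # approximate board power after lowering the limit

def efficiency_gain(perf_loss_fraction):
    """Relative change in performance per watt after tuning down to 185W."""
    perf_ratio = 1.0 - perf_loss_fraction
    power_ratio = TUNED_POWER_W / DEFAULT_POWER_W
    return perf_ratio / power_ratio - 1.0

power_saving = 1.0 - TUNED_POWER_W / DEFAULT_POWER_W
print(f"Power saving: {power_saving:.1%}")
for game, loss in [("Cyberpunk 2077", 0.02), ("A Plague Tale: Requiem", 0.04)]:
    print(f"{game}: {efficiency_gain(loss):+.1%} performance per watt")
```

Under those assumptions, giving up 2-4% of the frame rate buys somewhere in the region of 12-14% better efficiency.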

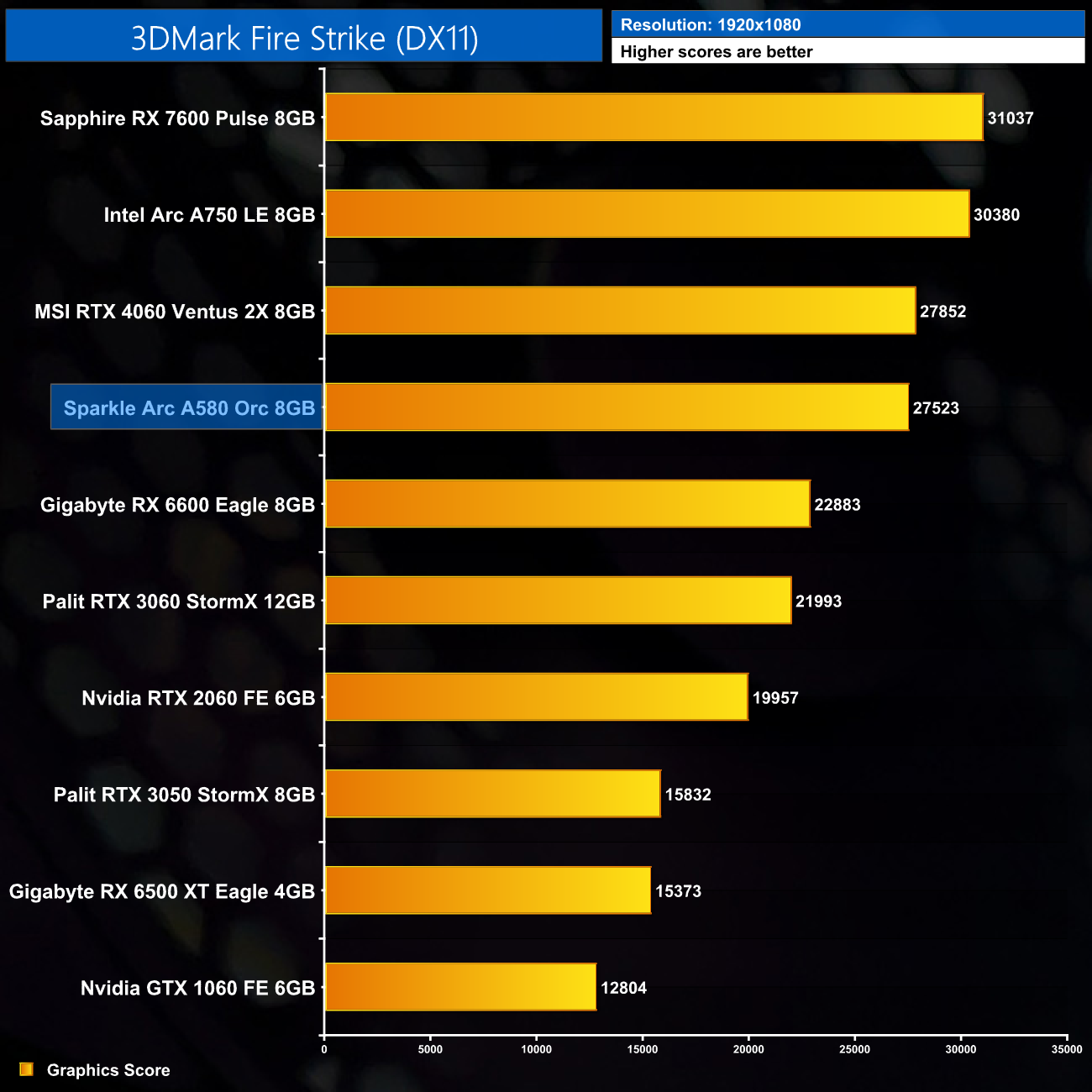

Fire Strike is a showcase DirectX 11 benchmark for modern gaming PCs. Its ambitious real-time graphics are rendered with detail and complexity far beyond other DirectX 11 benchmarks and games. Fire Strike includes two graphics tests, a physics test and a combined test that stresses the CPU and GPU. (UL).

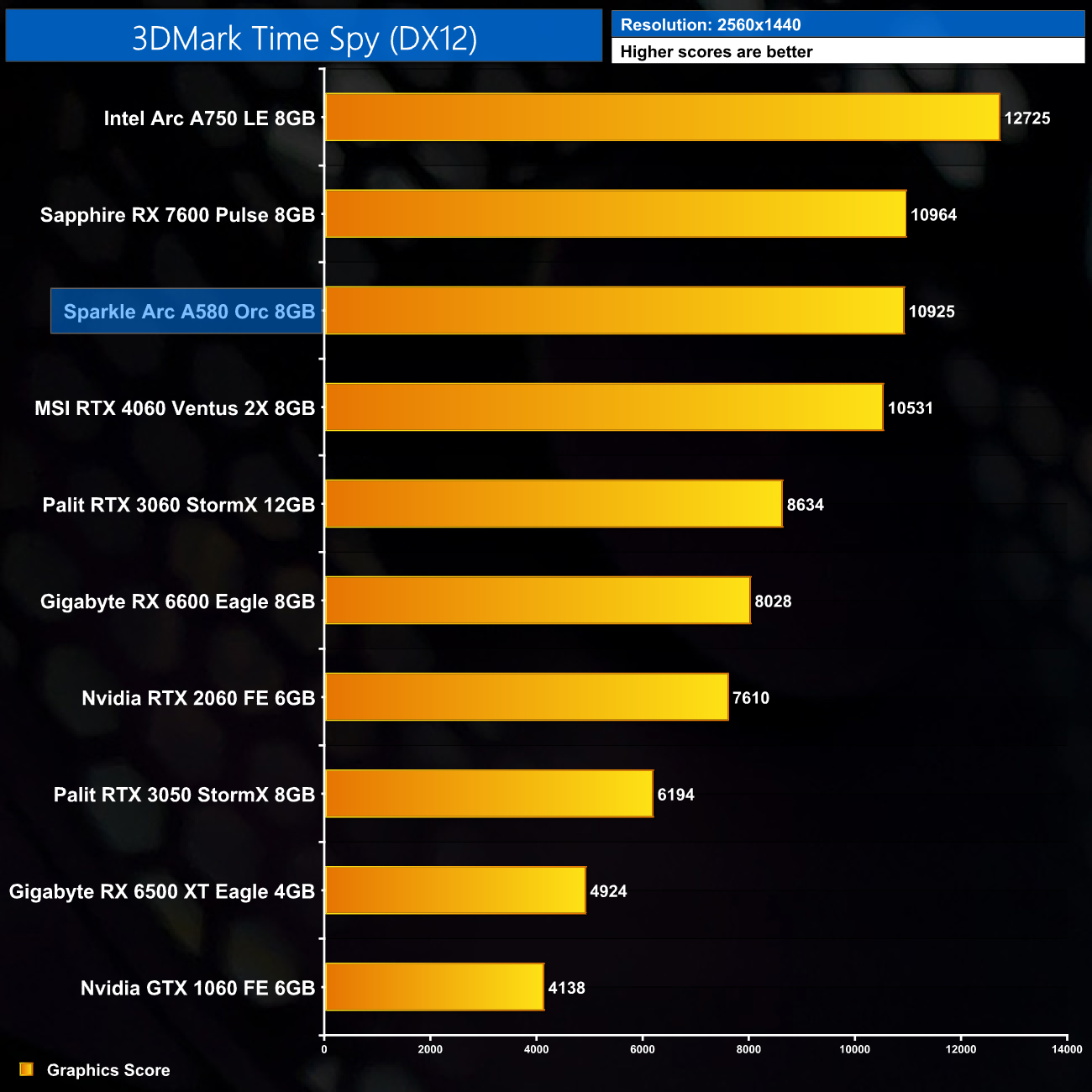

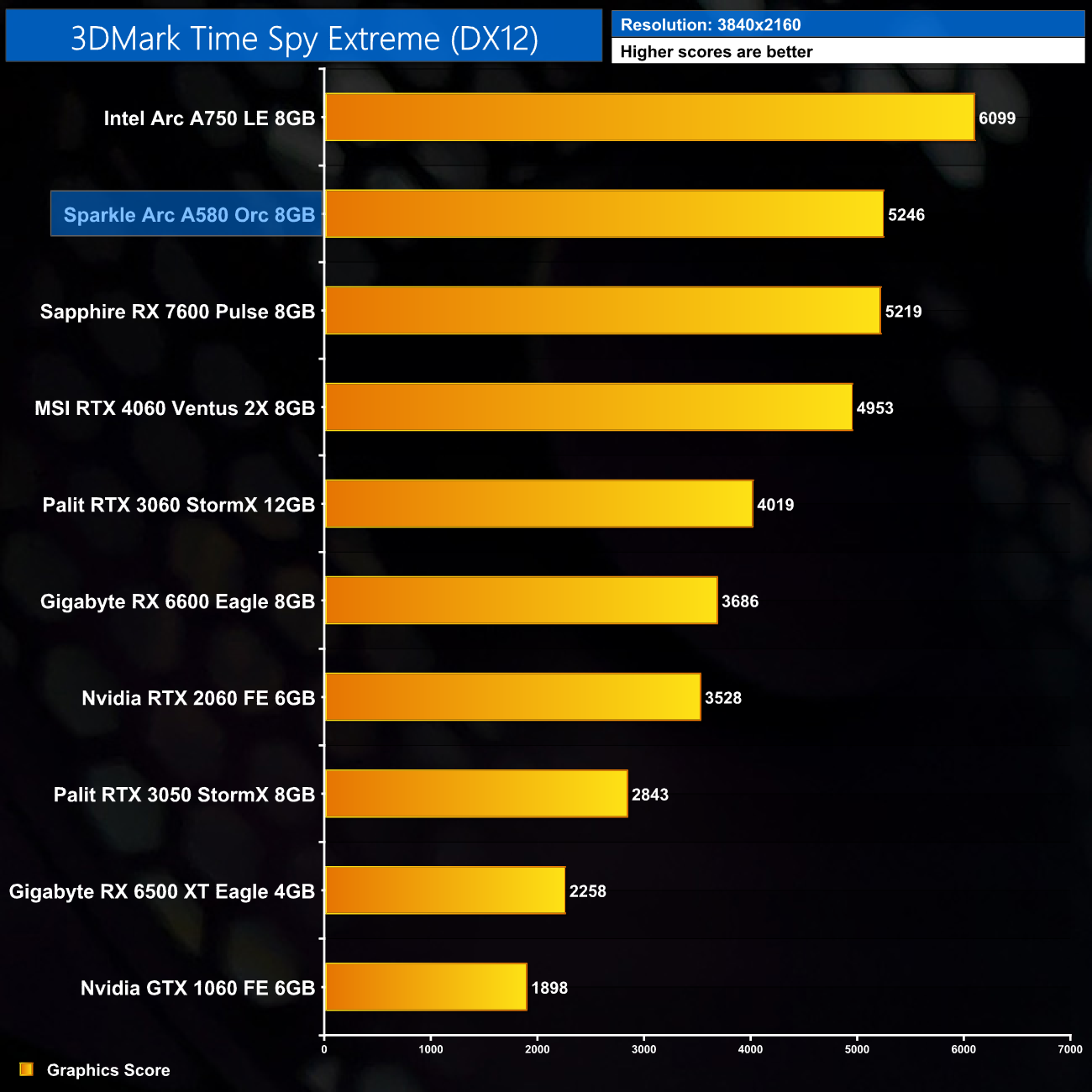

3DMark Time Spy is a DirectX 12 benchmark test for Windows 10 gaming PCs. Time Spy is one of the first DirectX 12 apps to be built the right way from the ground up to fully realize the performance gains that the new API offers. With its pure DirectX 12 engine, which supports new API features like asynchronous compute, explicit multi-adapter, and multi-threading, Time Spy is the ideal test for benchmarking the latest graphics cards. (UL).

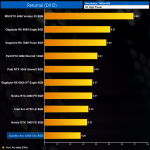

We get our first look at performance indicators in 3DMark, where the A580 scales very well – it's 20% faster than the RX 6600 in Fire Strike, but we'll have to see if that plays out in our game benchmarks.

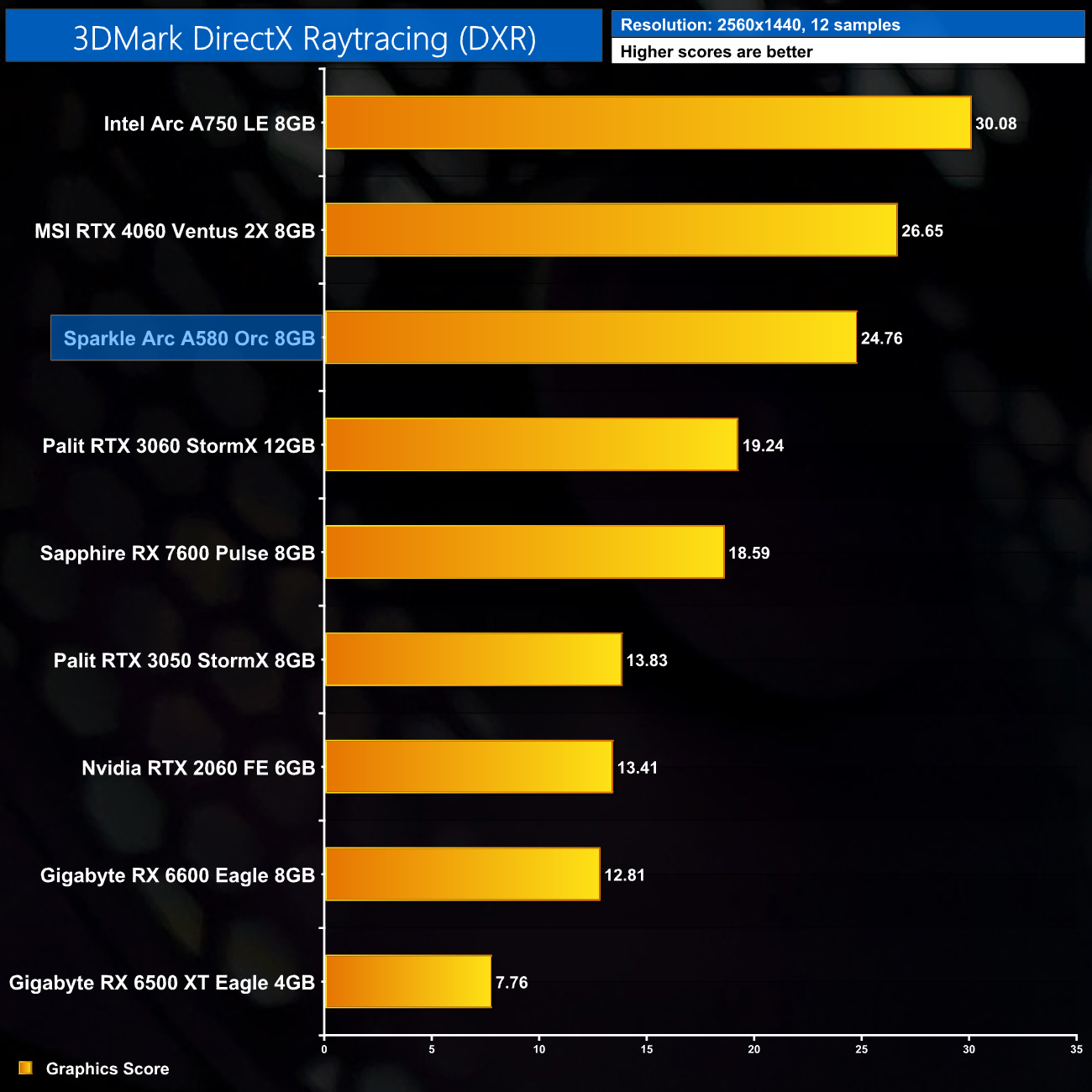

Real-time ray tracing is incredibly demanding. The latest graphics cards have dedicated hardware that’s optimized for ray-tracing. The 3DMark DirectX Raytracing feature test measures the performance of this dedicated hardware. Instead of using traditional rendering techniques, the whole scene is ray-traced and drawn in one pass. The result of the test depends entirely on ray-tracing performance. (UL).

3DMark's DXR feature test also shows very promising performance, with the A580 only a couple of frames behind the RTX 4060, while it's well clear of the RTX 3060.

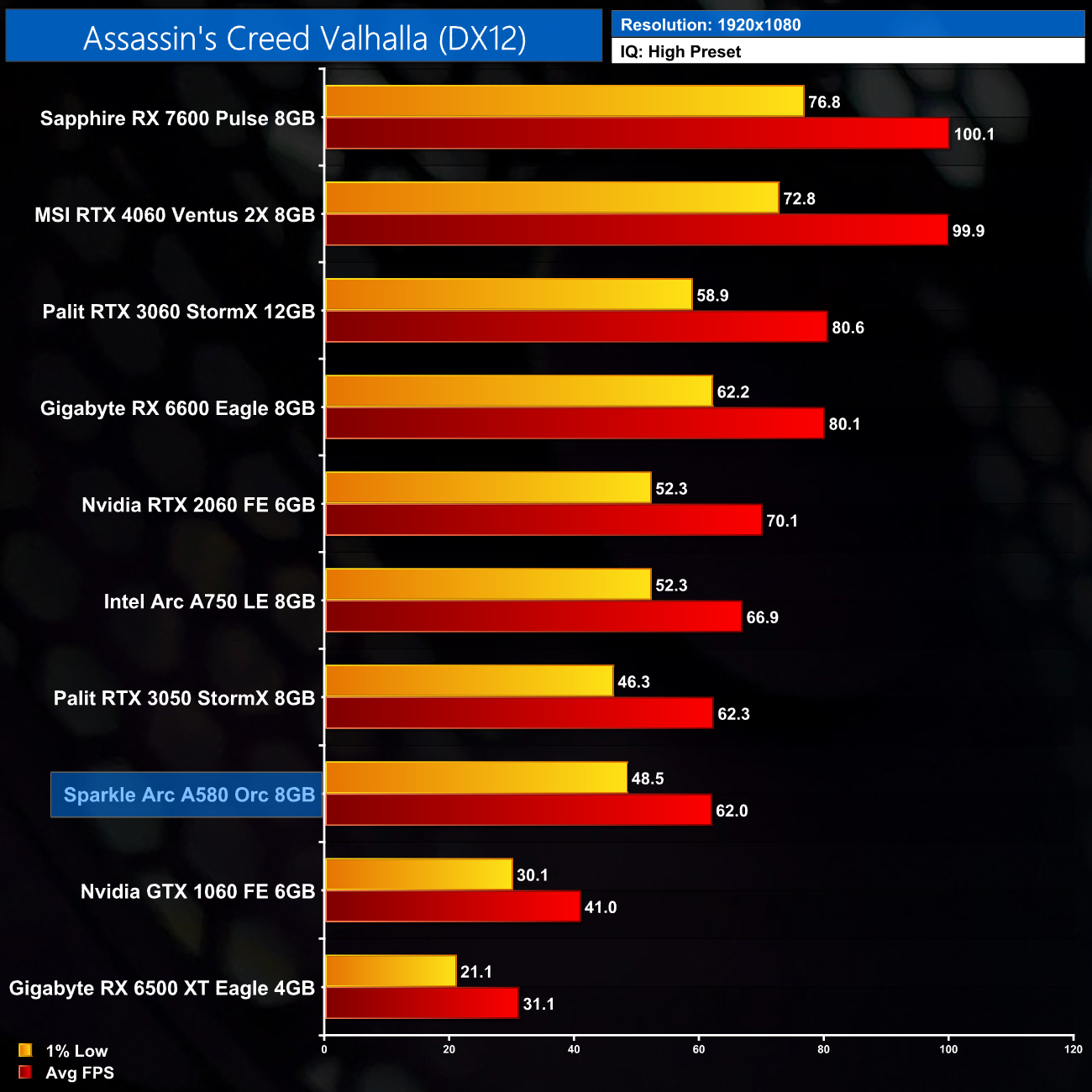

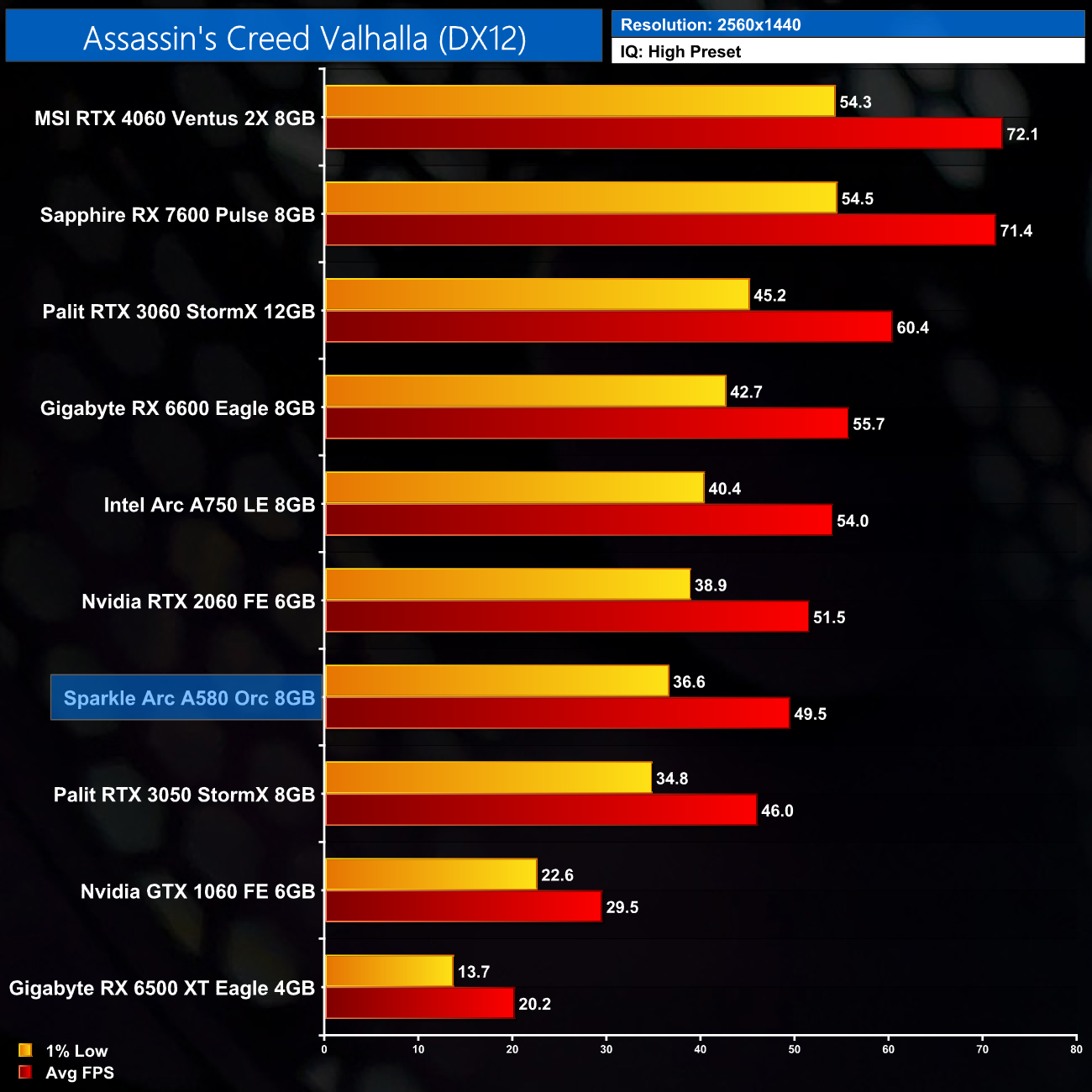

Assassin's Creed Valhalla is an action role-playing video game developed by Ubisoft Montreal and published by Ubisoft. It is the twelfth major instalment and the twenty-second release in the Assassin's Creed series, and a successor to 2018's Assassin's Creed Odyssey. The game was released on November 10, 2020, for Microsoft Windows, PlayStation 4, Xbox One, Xbox Series X and Series S, and Stadia, while the PlayStation 5 version was released on November 12. (Wikipedia).

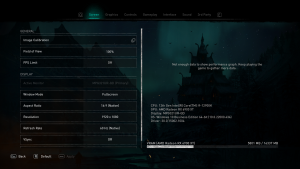

Engine: AnvilNext 2.0. We test using the High preset, DX12 API.

Kicking things off with Assassin's Creed Valhalla, it's not the best start for the A580, pushing out a little over 60FPS at 1080p. That puts it dead level with the RTX 3050, though with slightly better 1% lows, but it's a hefty 23% slower than the RX 6600. There's not much of a difference versus the A750 though, as the A580 is just 7% behind.

We also test 1440p and the A580 does scale a touch better, now coming in slightly faster than the RTX 3050, while it's 11% behind the RX 6600. Against the A750, the A580 is now 8% slower, so that's not much of a gap at all between the two Arc GPUs.

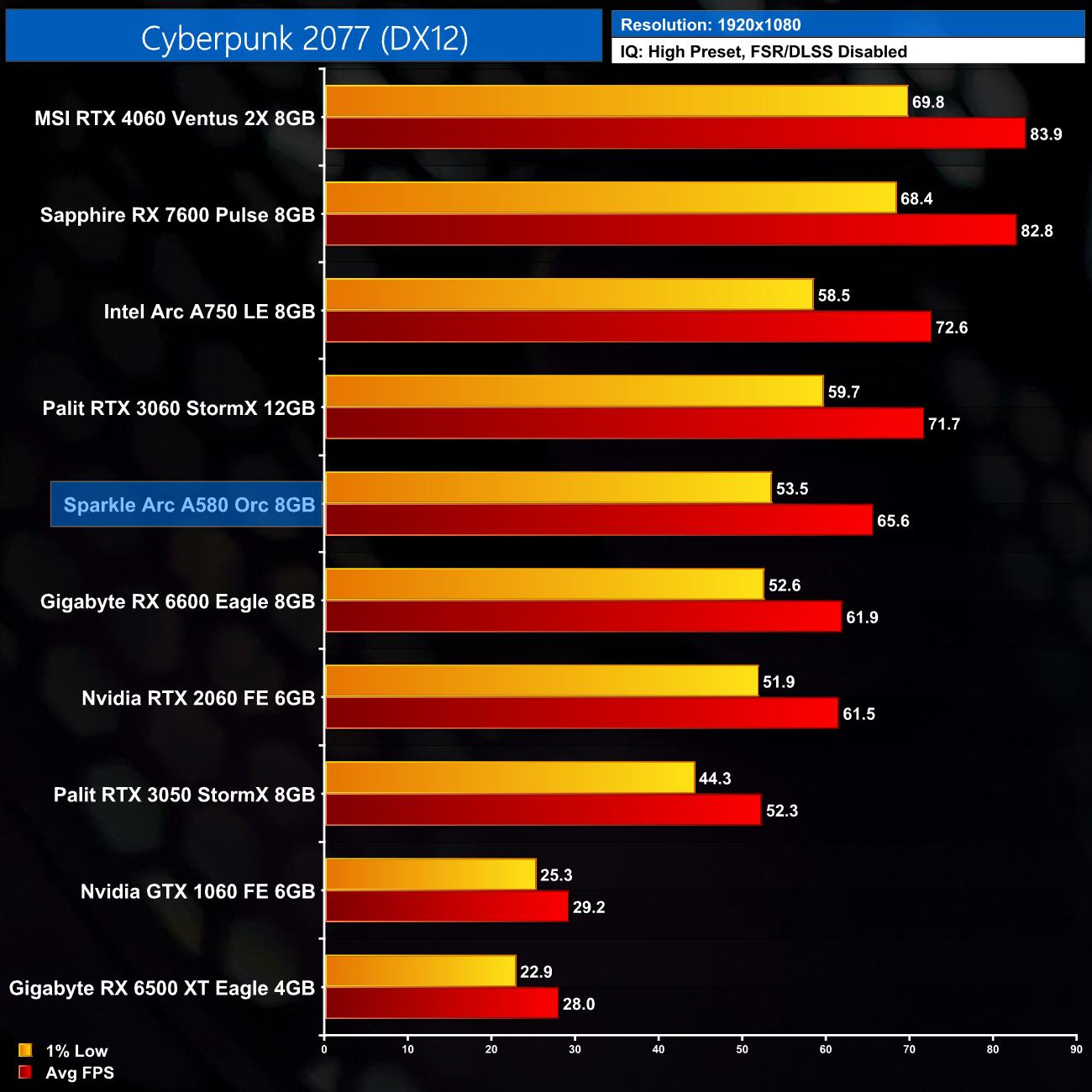

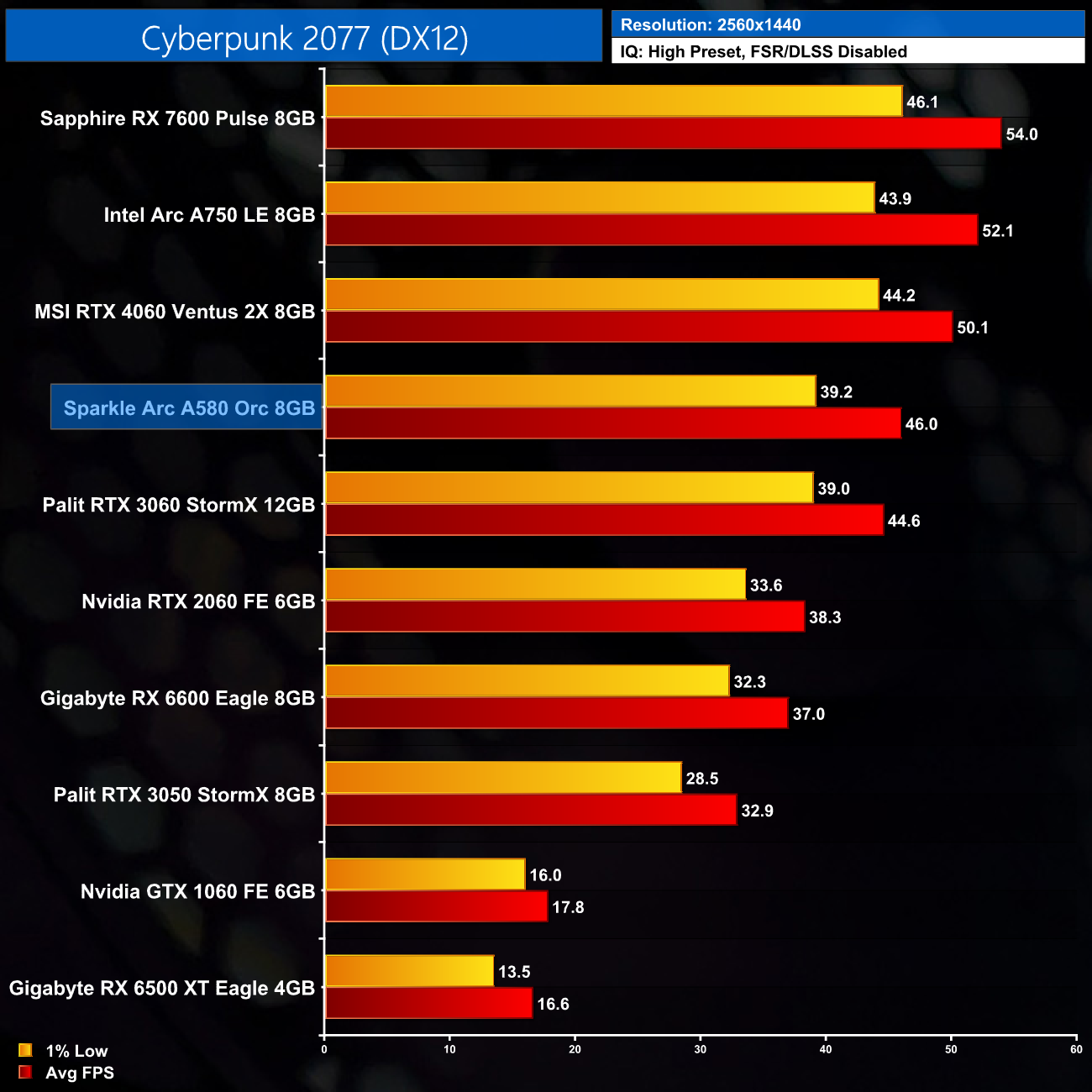

Cyberpunk 2077 is a 2020 action role-playing video game developed and published by CD Projekt. The story takes place in Night City, an open world set in the Cyberpunk universe. Players assume the first-person perspective of a customisable mercenary known as V, who can acquire skills in hacking and machinery with options for melee and ranged combat. Cyberpunk 2077 was released for Microsoft Windows, PlayStation 4, Stadia, and Xbox One on 10 December 2020. (Wikipedia).

Engine: REDengine 4. We test using the High preset, FSR disabled, DX12 API.

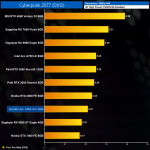

As for Cyberpunk 2077 using the new 2.0 update, the A580 looks better here, delivering 66FPS on average and holding a 6% lead over the RX 6600, while it's in a different league to the RTX 3050.

At 1440p it's even faster than the RTX 3060 and isn't far off the RTX 4060. This time we're also looking at a 12% deficit versus the A750, which still isn't a particularly large gap between these two Intel cards.

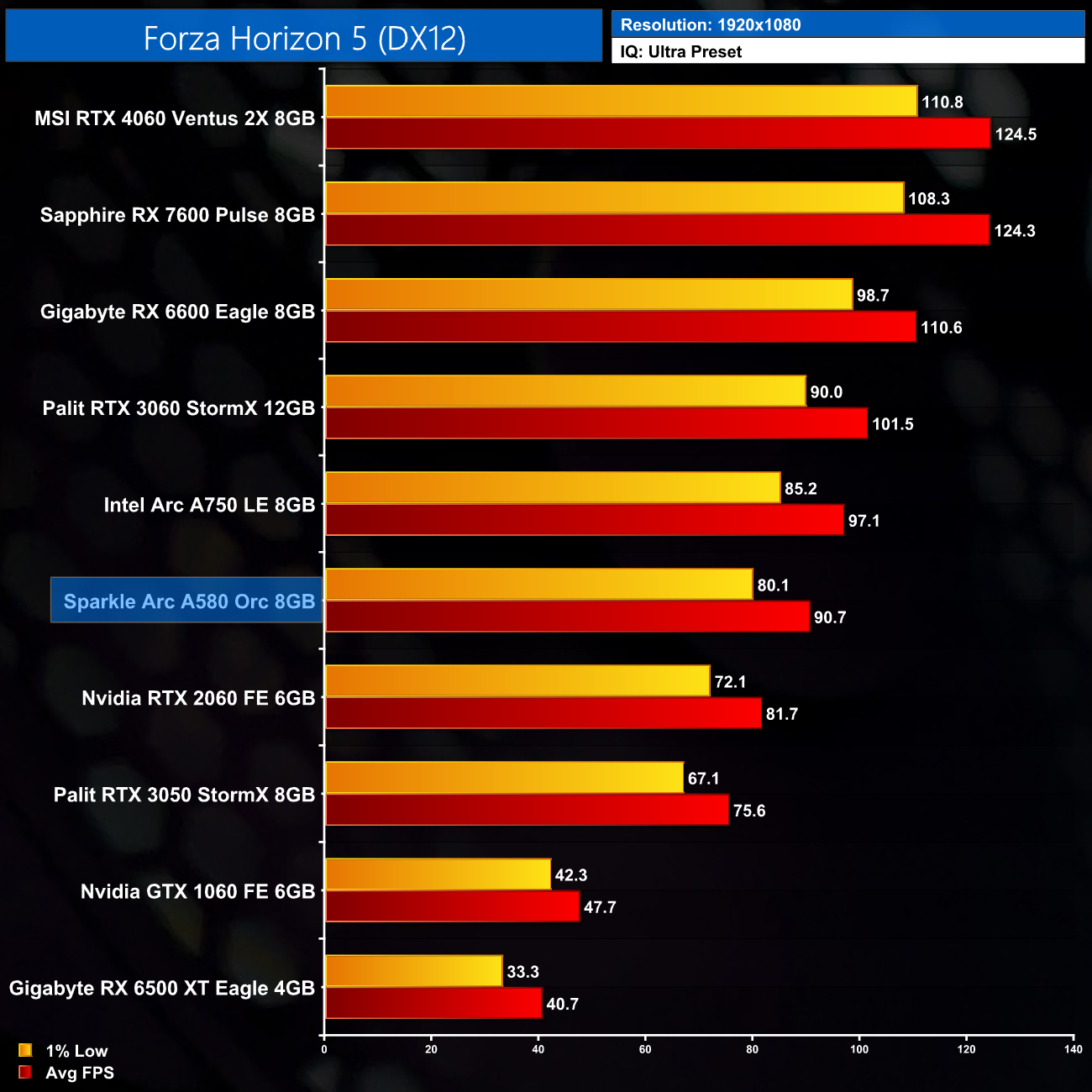

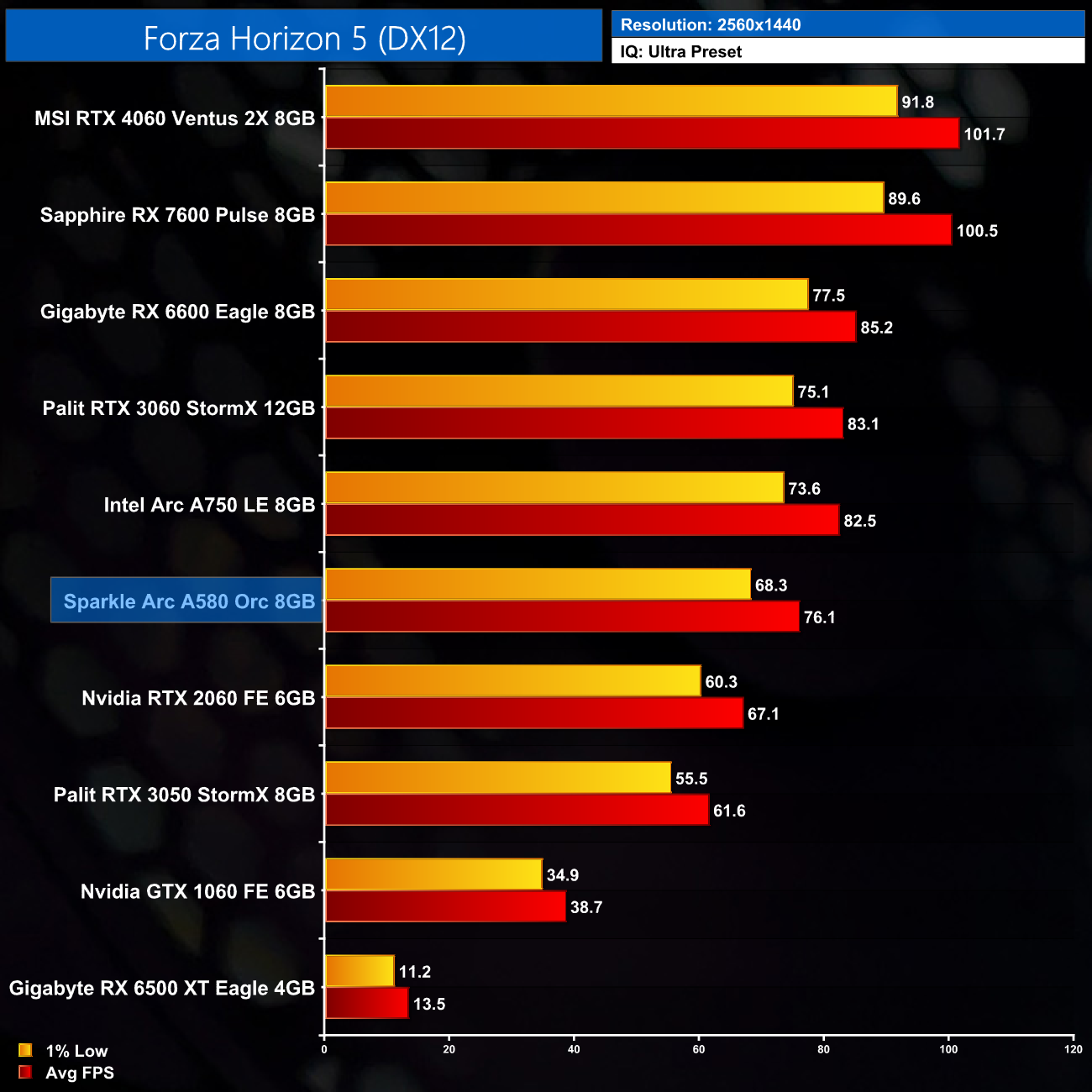

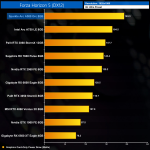

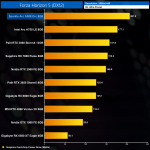

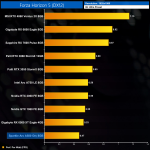

Forza Horizon 5 is a 2021 racing video game developed by Playground Games and published by Xbox Game Studios. The twelfth main instalment of the Forza series, the game is set in a fictionalised representation of Mexico. It was released on 9 November 2021 for Microsoft Windows, Xbox One, and Xbox Series X/S. (Wikipedia).

Engine: ForzaTech. We test using the Ultra preset, DX12 API.

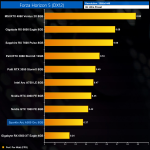

Forza Horizon 5 sees the A580 take a backward step, relatively speaking. It's still very playable, hitting 91FPS at 1080p, but it's 18% slower than the RX 6600. Versus Nvidia though, the A580 is 20% faster than the RTX 3050 and only 11% behind the RTX 3060.

1440p is still a smooth experience too, hitting 76FPS, though that's only just behind the A750 – but do remember the A580 is factory overclocked.

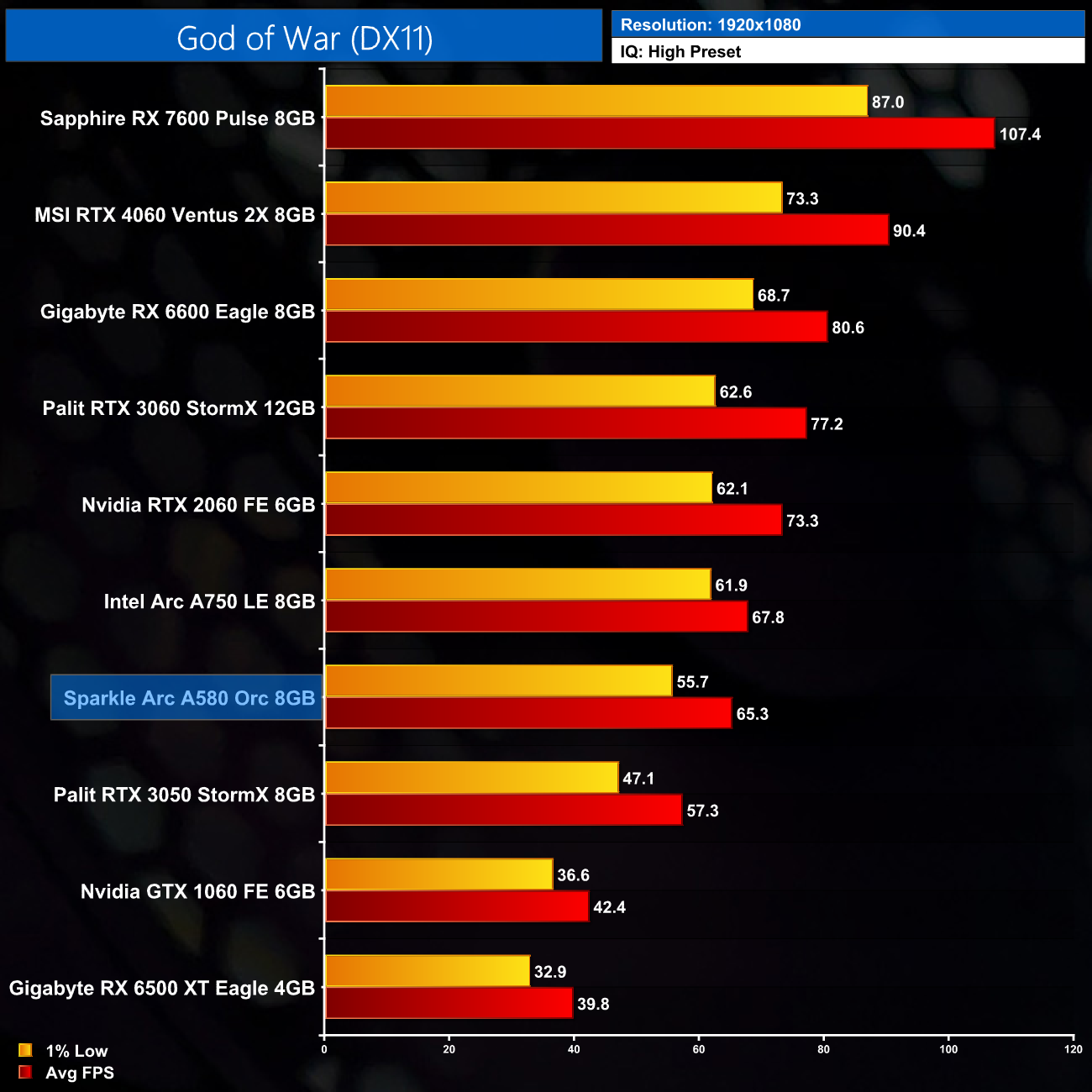

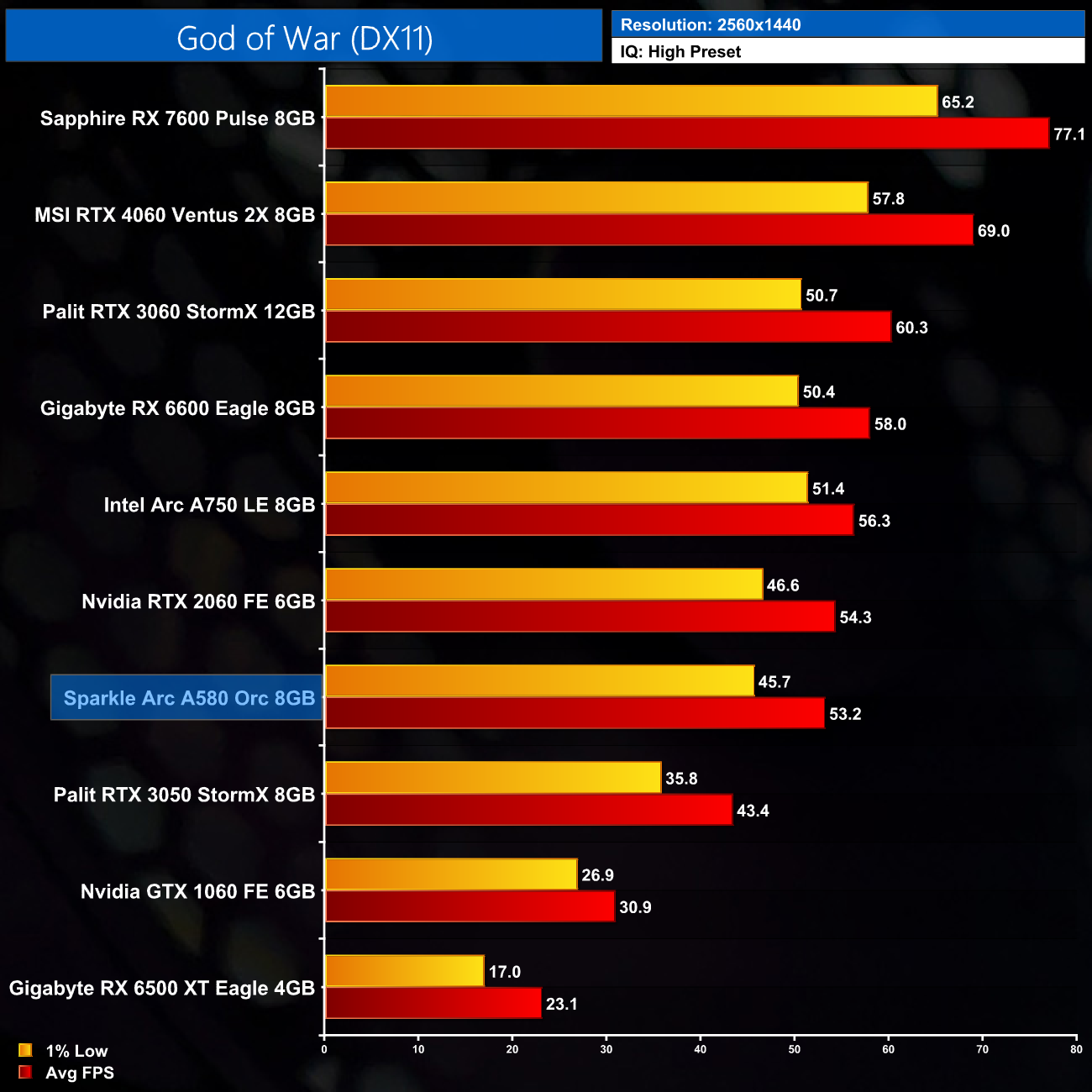

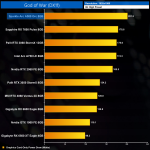

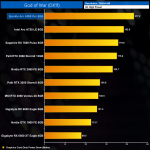

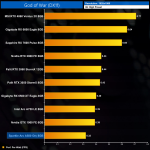

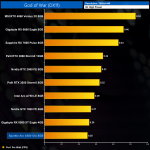

God of War is an action-adventure game developed by Santa Monica Studio and published by Sony Interactive Entertainment (SIE). It was released worldwide on April 20, 2018, for the PlayStation 4 with a Microsoft Windows version released on January 14, 2022. (Wikipedia).

Engine: Sony Santa Monica in-house engine. We test using the High preset, DX11 API.

God of War shows particularly narrow scaling for the A580 versus the A750, as there's just a 4% gap between the two GPUs at 1080p. The A580 is 19% slower than the RX 6600, but 14% faster than the RTX 3050.

The A580 does scale better at 1440p though, where it's now 23% faster than the RTX 3050 and actually on par with the RTX 2060. It also comes in just 8% behind the RX 6600.

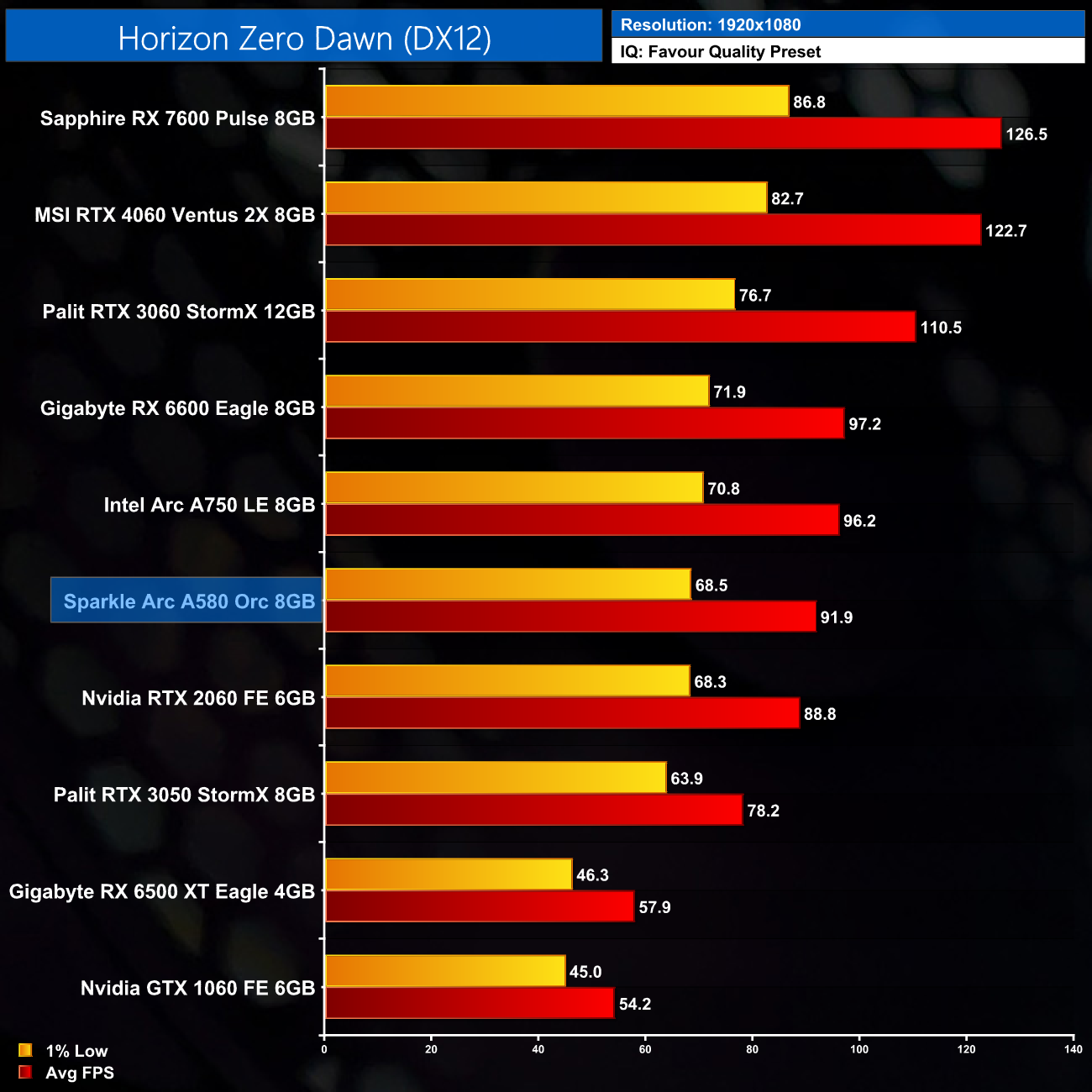

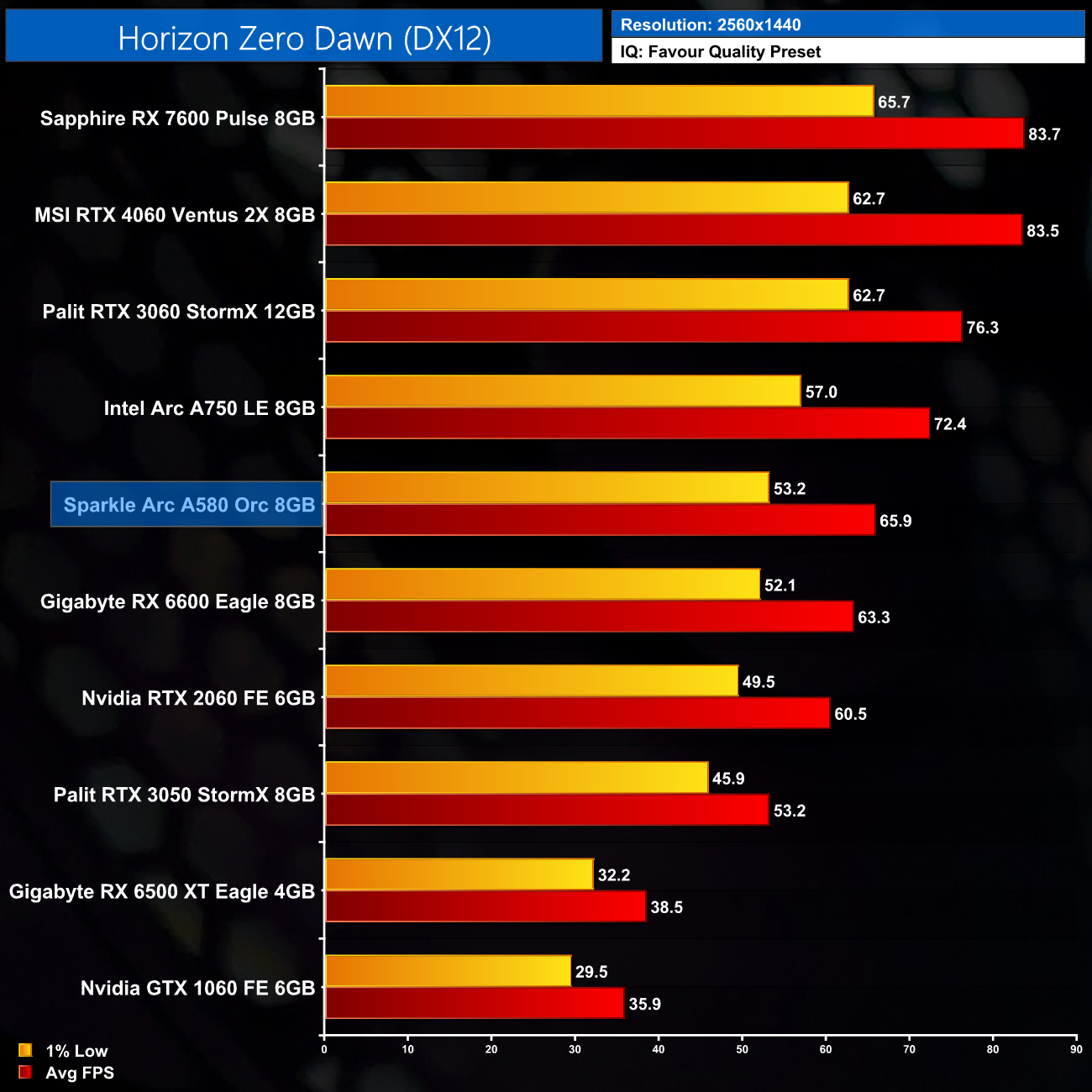

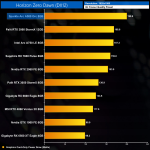

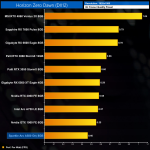

Horizon Zero Dawn is an action role-playing game developed by Guerrilla Games and published by Sony Interactive Entertainment. The plot follows Aloy, a hunter in a world overrun by machines, who sets out to uncover her past. It was released for the PlayStation 4 in 2017 and Microsoft Windows in 2020. (Wikipedia).

Engine: Decima. We test using the Favor Quality preset, DX12 API.

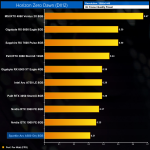

Next up is Horizon Zero Dawn, where at 1080p the A580 is delivering very similar performance to the RTX 2060. It's still only 5% slower than the A750, but it's also only 6% behind the RX 6600 which isn't too bad considering what we saw in Forza and God of War.

In fact, at 1440p it even edges out the RX 6600, likely due to its wider memory bus and increased memory bandwidth, delivering 66FPS on average.

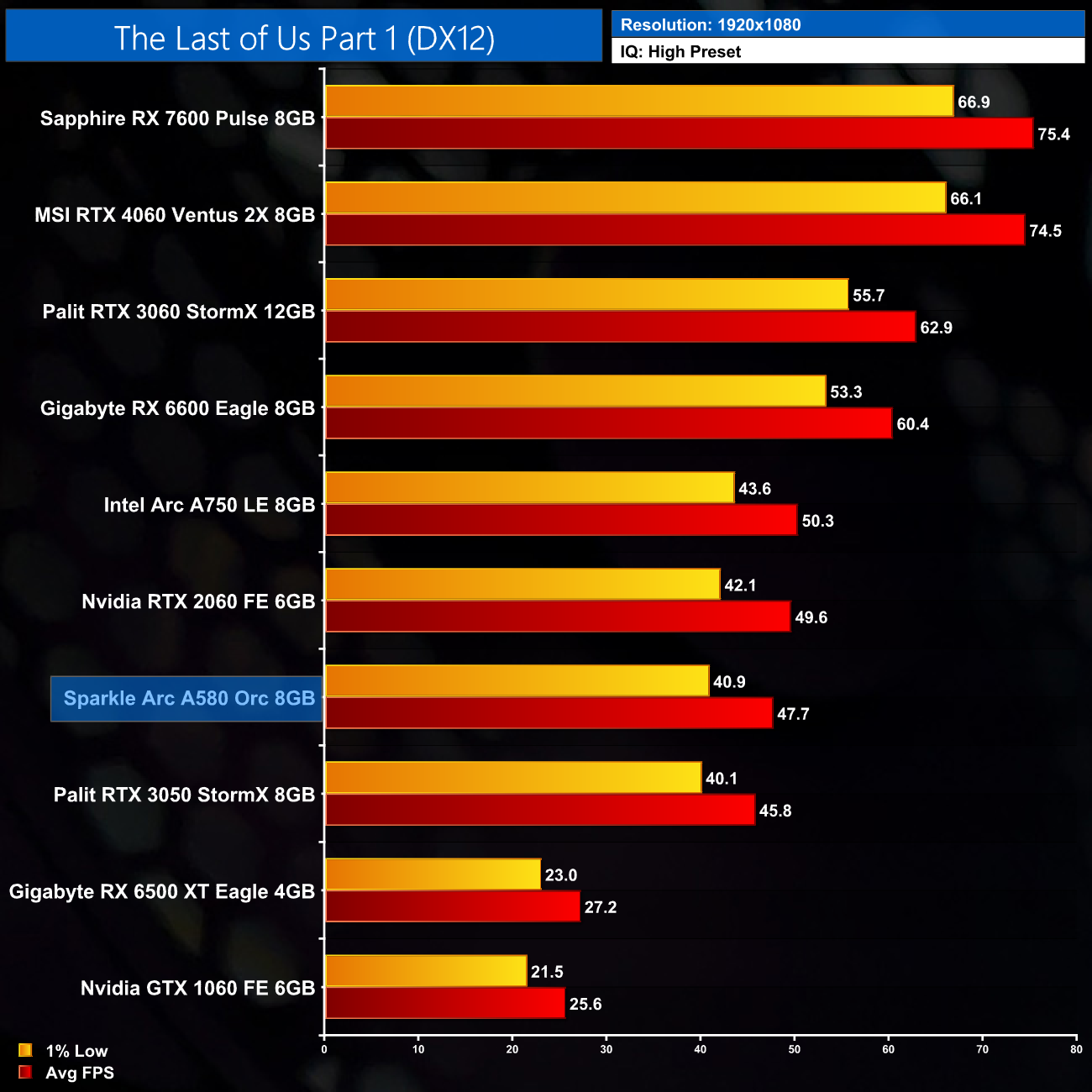

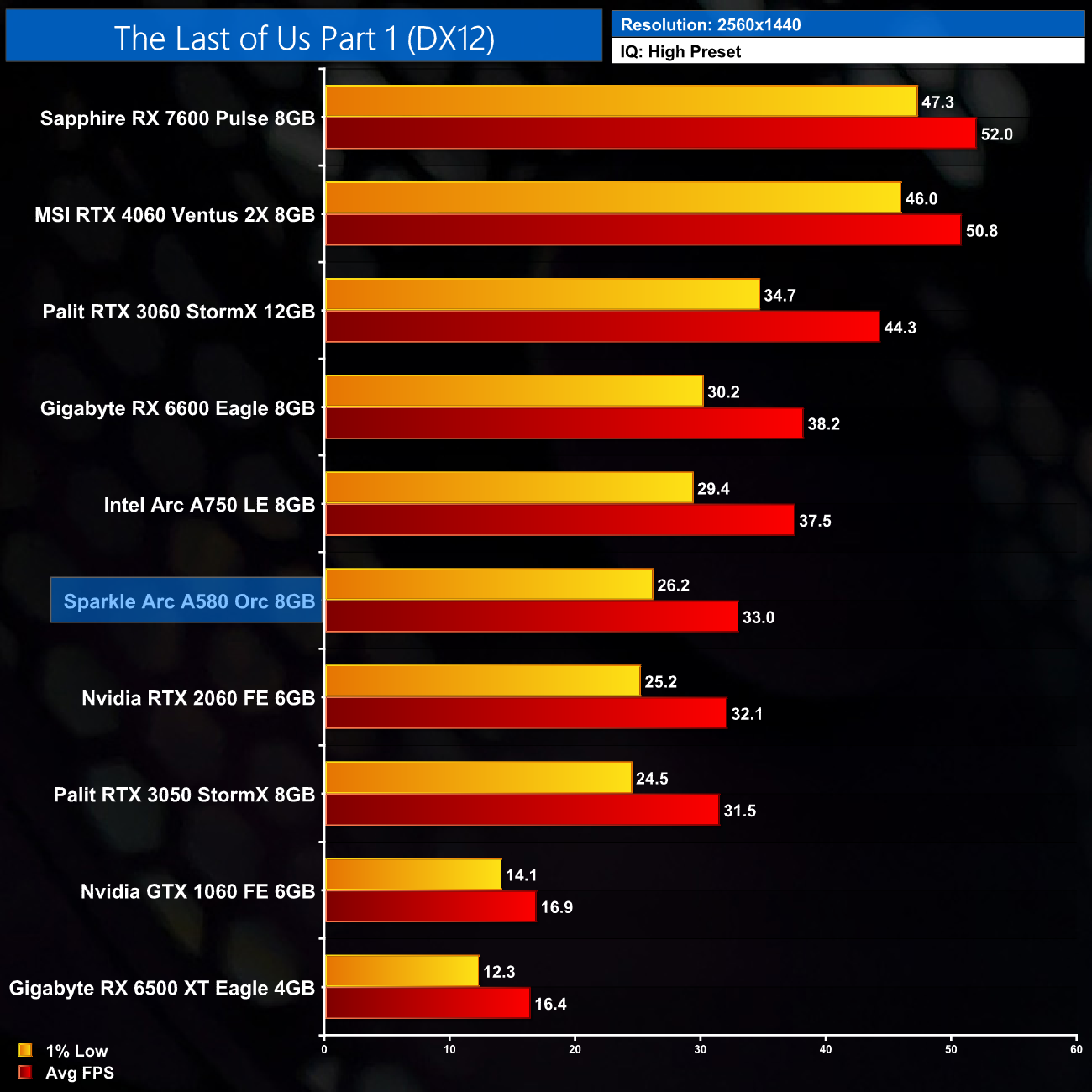

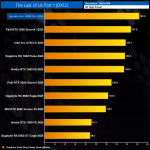

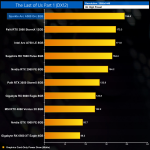

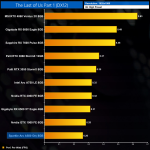

The Last of Us Part I is a 2022 action-adventure game developed by Naughty Dog and published by Sony Interactive Entertainment. A remake of the 2013 game The Last of Us, it features revised gameplay, including enhanced combat and exploration, and expanded accessibility options. It was released for Microsoft Windows in March 2023. (Wikipedia).

Engine: Naughty Dog in-house engine. We test using the High preset, DX12 API.

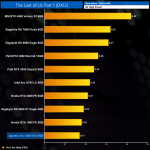

Things aren't so pretty for the A580 in The Last of Us Part 1, however. At 1080p it's within 4% of the RTX 3050, and that makes it 21% slower than the RX 6600. The A750 doesn't do very well in this title either, so it seems a bit problematic for Intel Arc.

At 1440p things do improve slightly, with the A580 now just 14% behind the RX 6600, but its 1% lows drop down to 26FPS, so it's not a playable experience using the High preset.

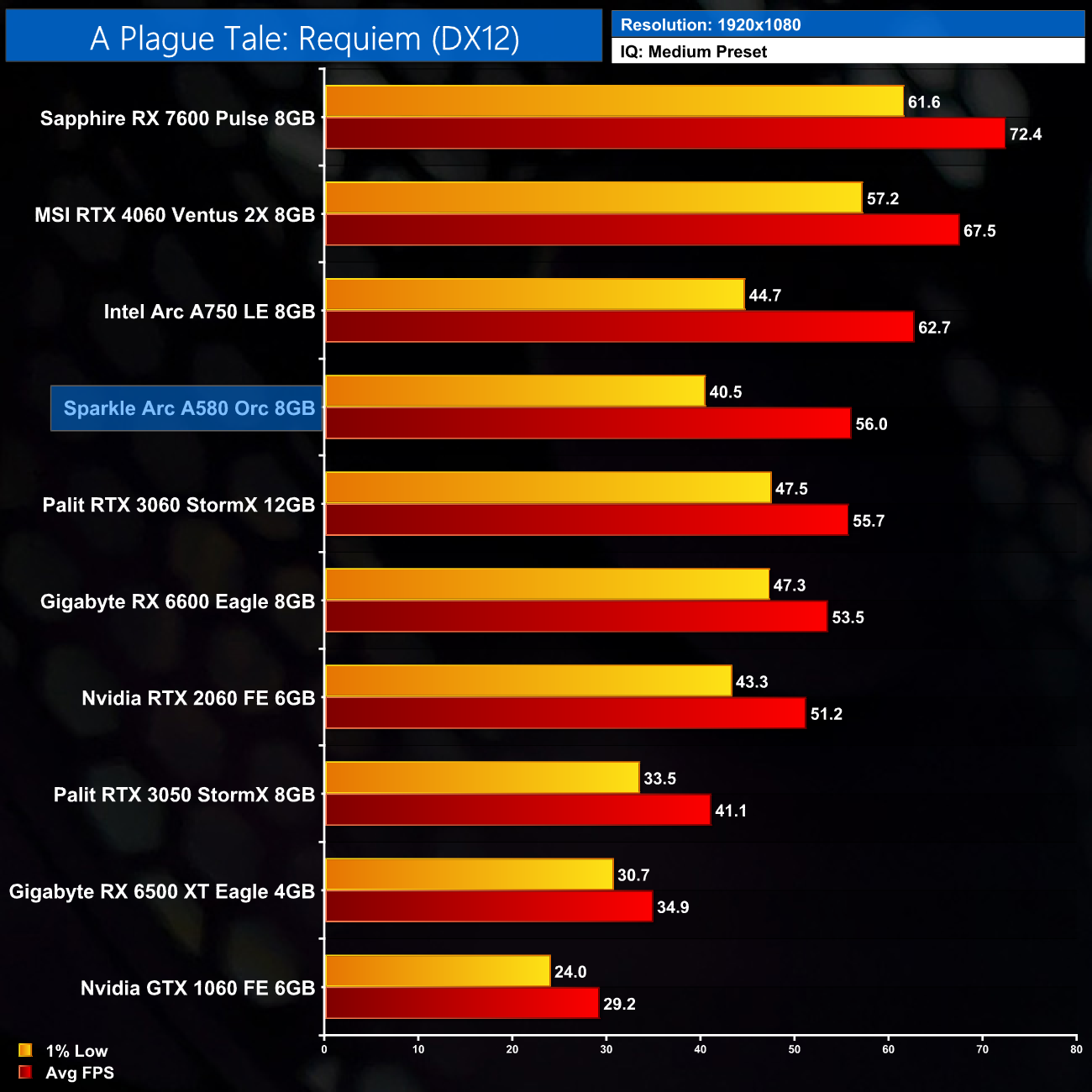

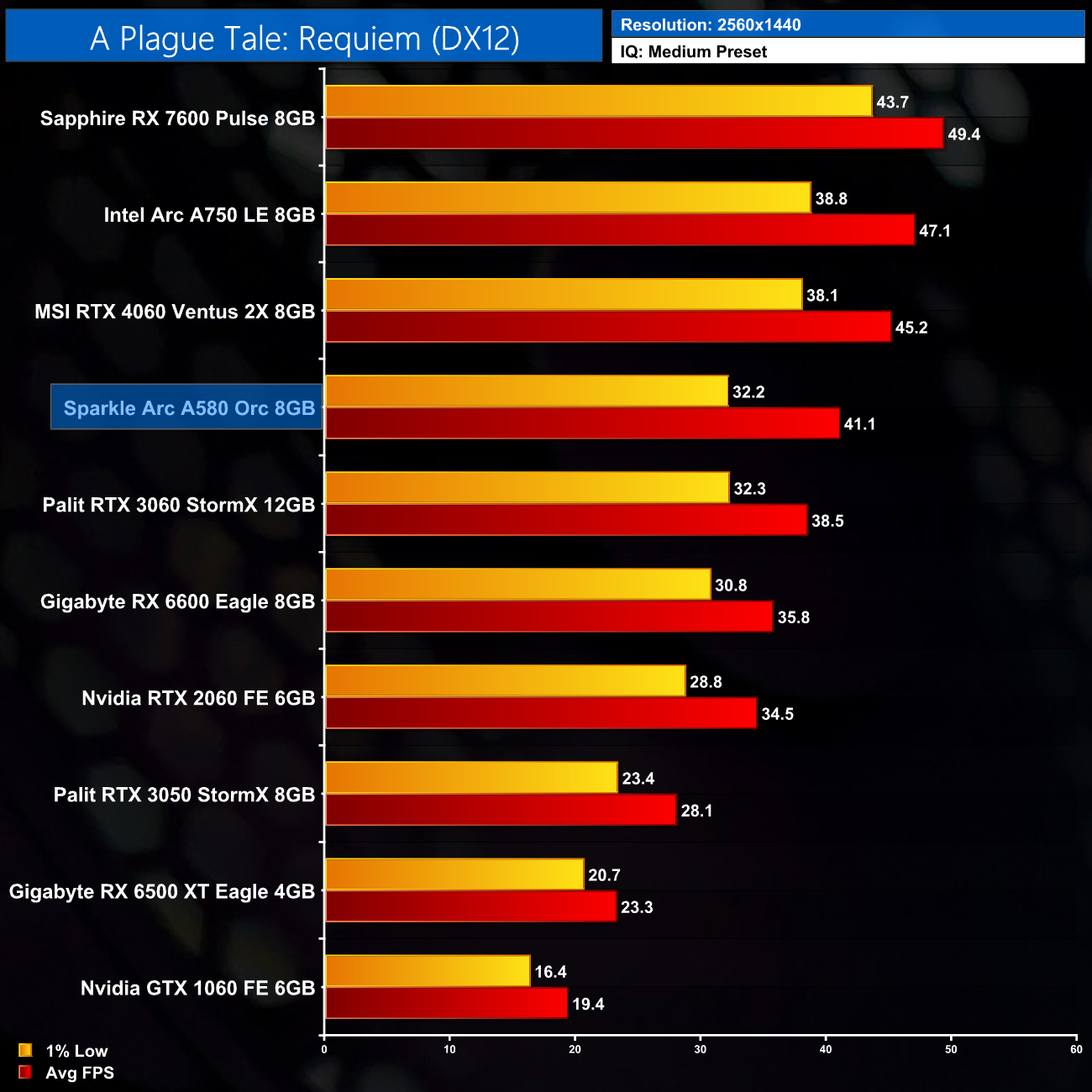

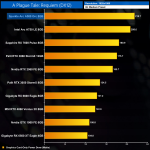

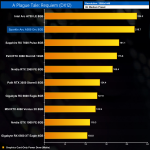

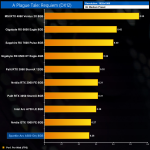

A Plague Tale: Requiem is an action-adventure stealth game developed by Asobo Studio and published by Focus Entertainment. It is the sequel to A Plague Tale: Innocence (2019), and follows siblings Amicia and Hugo de Rune who must look for a cure to Hugo's blood disease in Southern France while fleeing from soldiers of the Inquisition and hordes of rats that are spreading the black plague. The game was released for Nintendo Switch, PlayStation 5, Windows, and Xbox Series X/S on 18 October 2022. (Wikipedia).

Engine: Asobo Studio in-house engine. We test using the Medium preset, DX12 API.

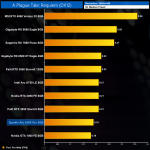

As for A Plague Tale: Requiem, the A580 scales much better here, sitting closer to the top of the chart and even beating the RX 6600 by a small margin. The trouble is its 1% lows aren't quite so impressive here, something which also affects the A750. It's not awful, but the RX 6600 is much more consistent overall.

Things do tighten up at 1440p, but again the overall frame rate is now much lower – with 1% lows hitting just 32FPS, even using the Medium preset.

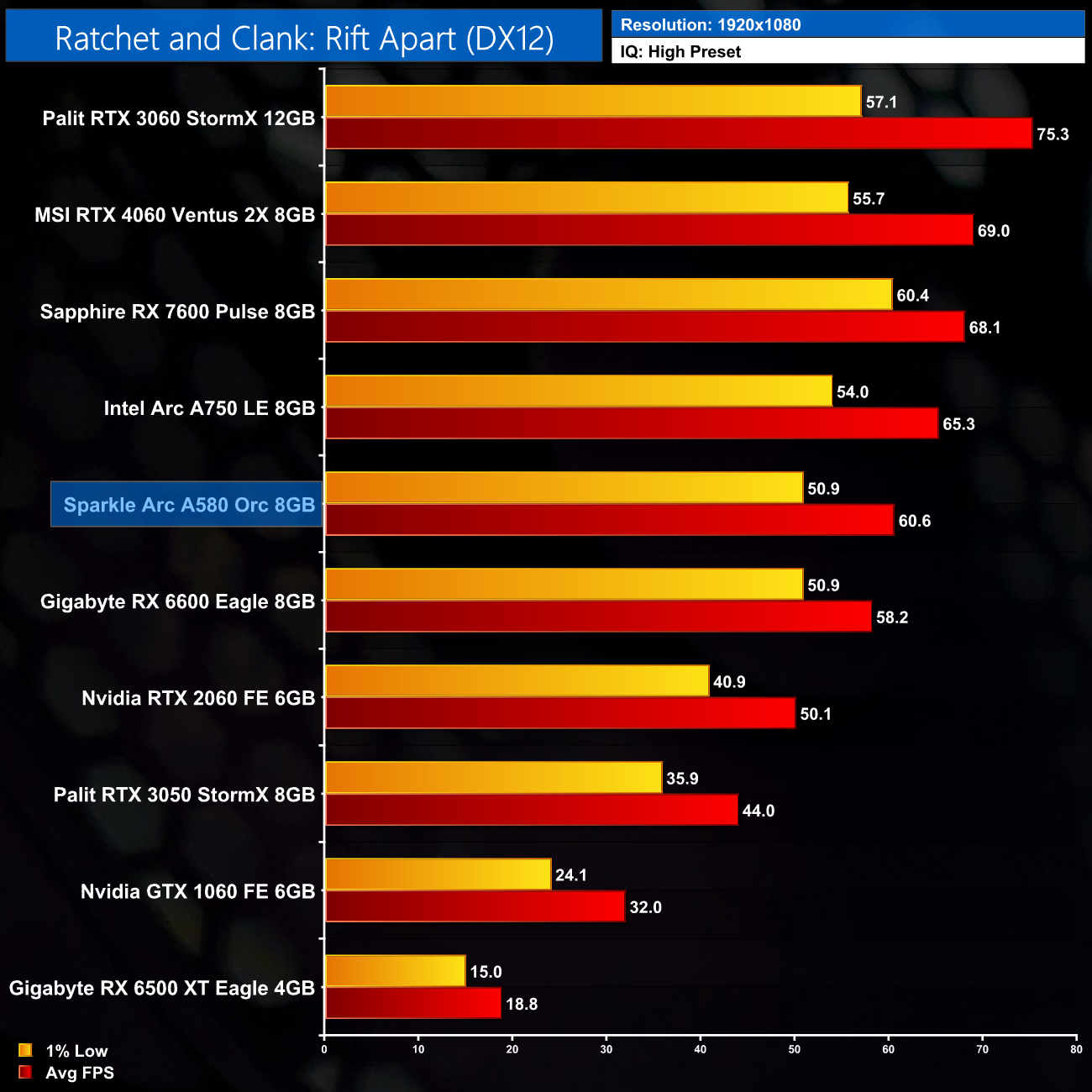

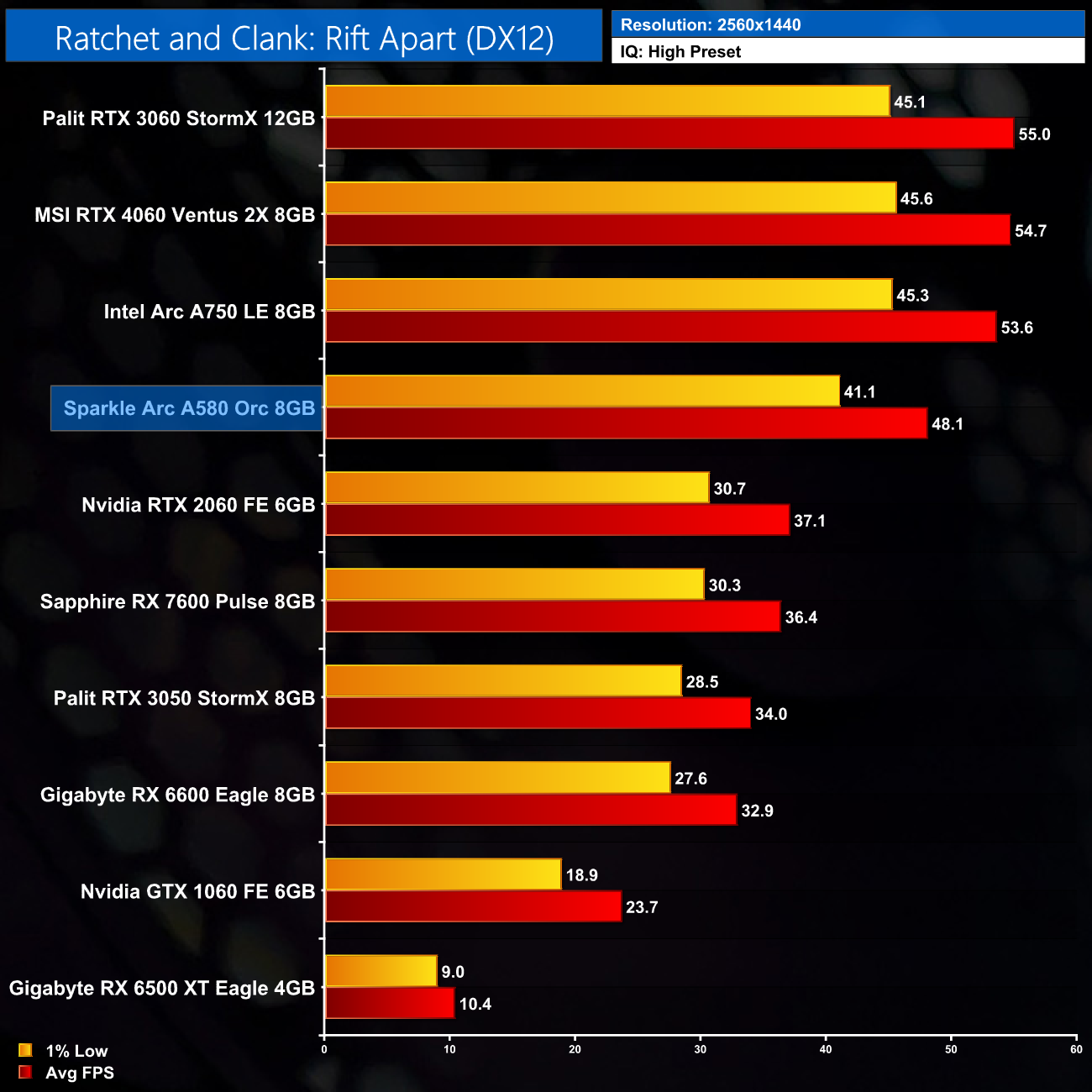

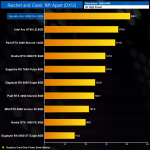

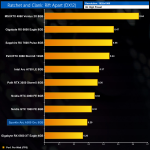

Ratchet & Clank: Rift Apart is a 2021 third-person shooter platform game developed by Insomniac Games and published by Sony Interactive Entertainment for the PlayStation 5. It is the ninth main installment in the Ratchet & Clank series and a sequel to Ratchet & Clank: Into the Nexus. Rift Apart was announced in June 2020 and was released on June 11, 2021. A Windows port by Nixxes Software was released on July 26, 2023. (Wikipedia).

Engine: Insomniac Games in-house engine. We test using the High preset, DX12 API.

We do see better performance in Ratchet and Clank, too, where at 1080p the A580 nudges ahead of the RX 6600 and is in a different league compared to the RTX 3050. The gap here versus the A750 is still only 7%, however.

1440p is manageable too, provided you are happy with 48FPS on average, and that puts the A580 well clear of the RTX 2060 and even the RX 7600, which really falls off a cliff at 1440p.

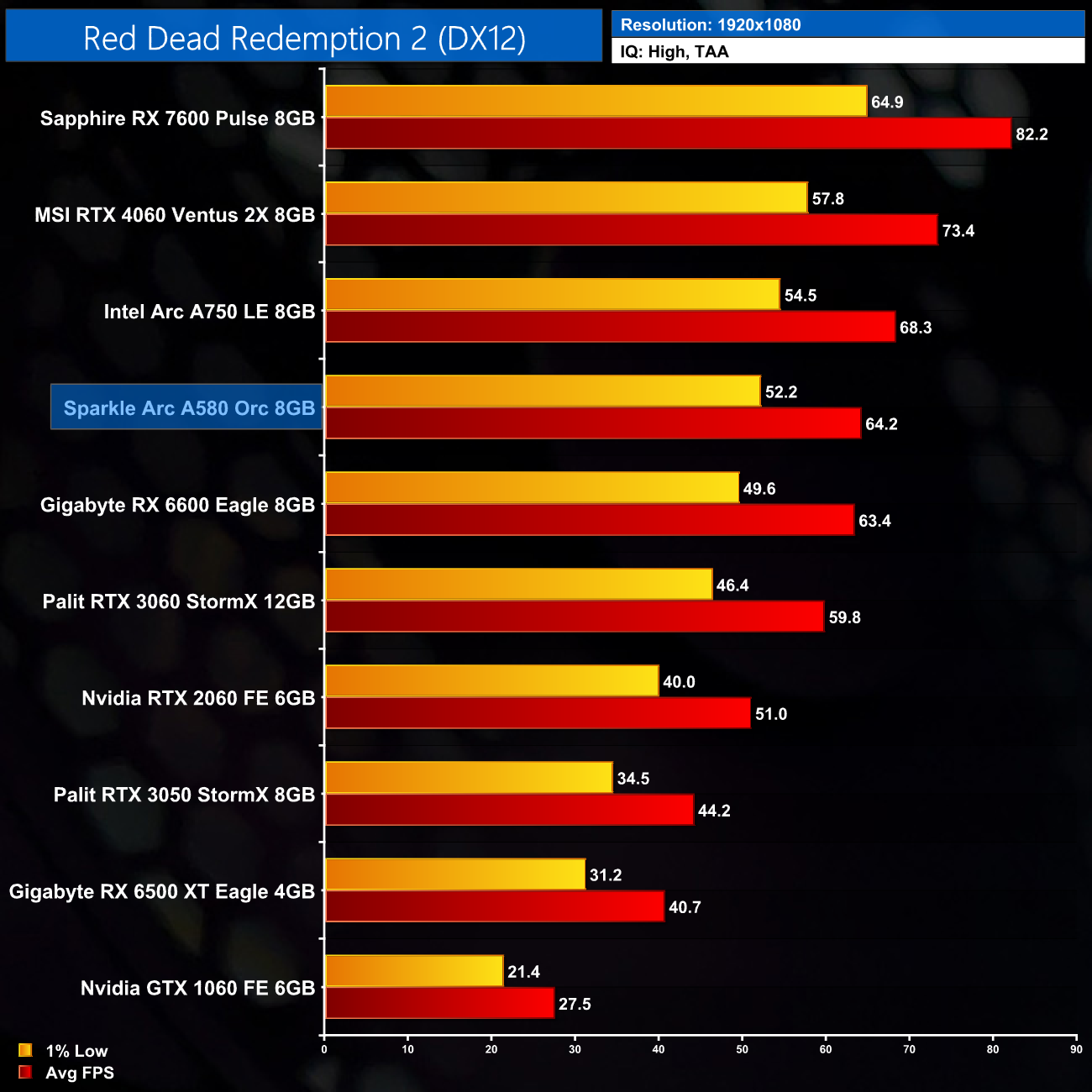

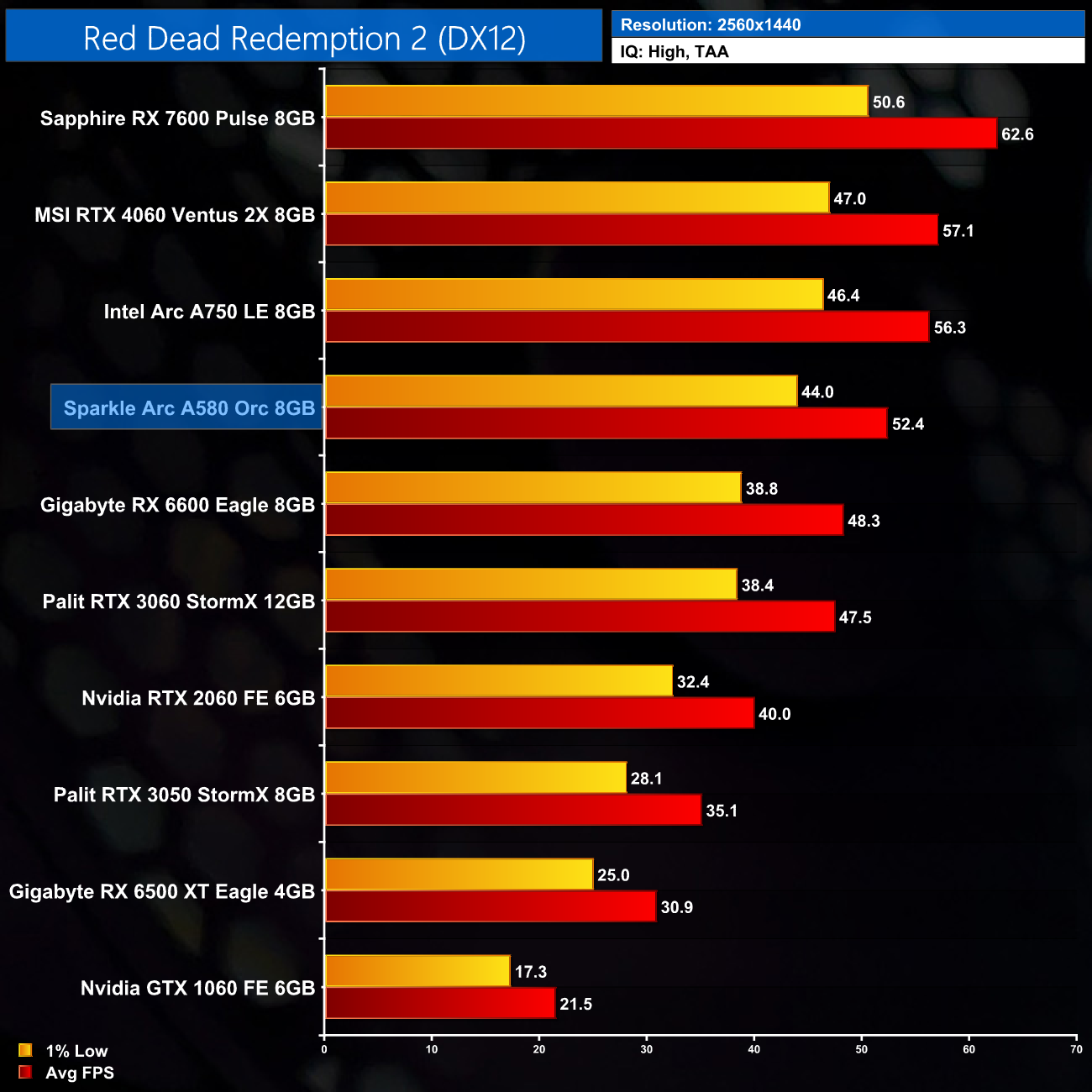

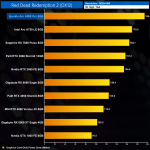

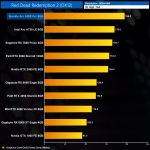

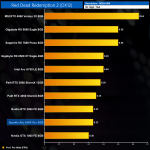

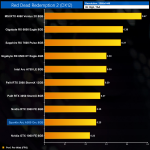

Red Dead Redemption 2 is a 2018 action-adventure game developed and published by Rockstar Games. The game is the third entry in the Red Dead series and is a prequel to the 2010 game Red Dead Redemption. Red Dead Redemption 2 was released for the PlayStation 4 and Xbox One in October 2018, and for Microsoft Windows and Stadia in November 2019. (Wikipedia).

Engine: Rockstar Advance Game Engine (RAGE). We test by manually selecting High settings, TAA, DX12 API.

We see similar scaling in Red Dead Redemption 2 as well, with the A580 coming in level with the RX 6600 but faster than even the RTX 3060 at 1080p, where it delivers 64FPS on average.

At 1440p it's still decently playable, hitting 52FPS, and this time it comes in 9% faster than the RX 6600, while it's 7% slower than the A750.

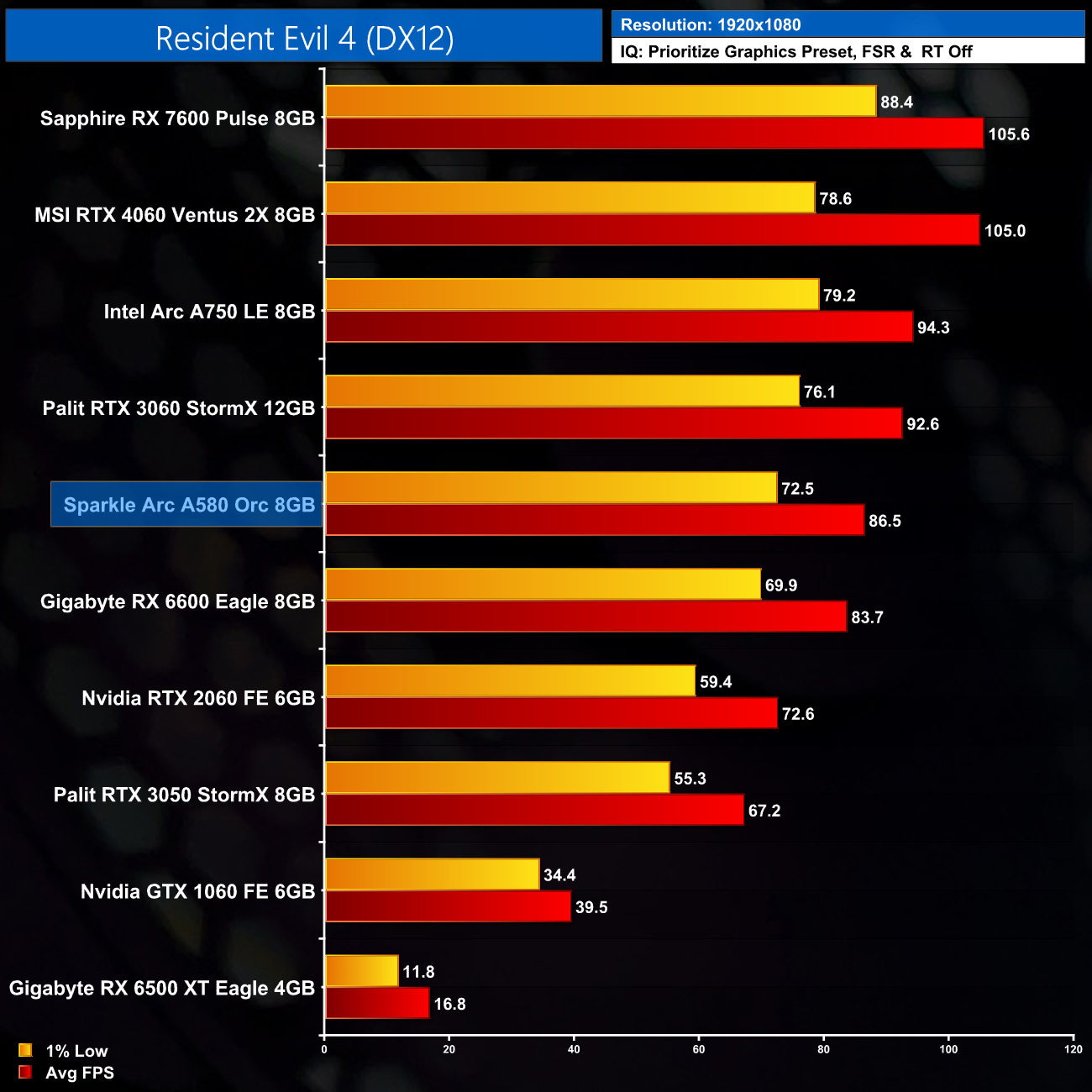

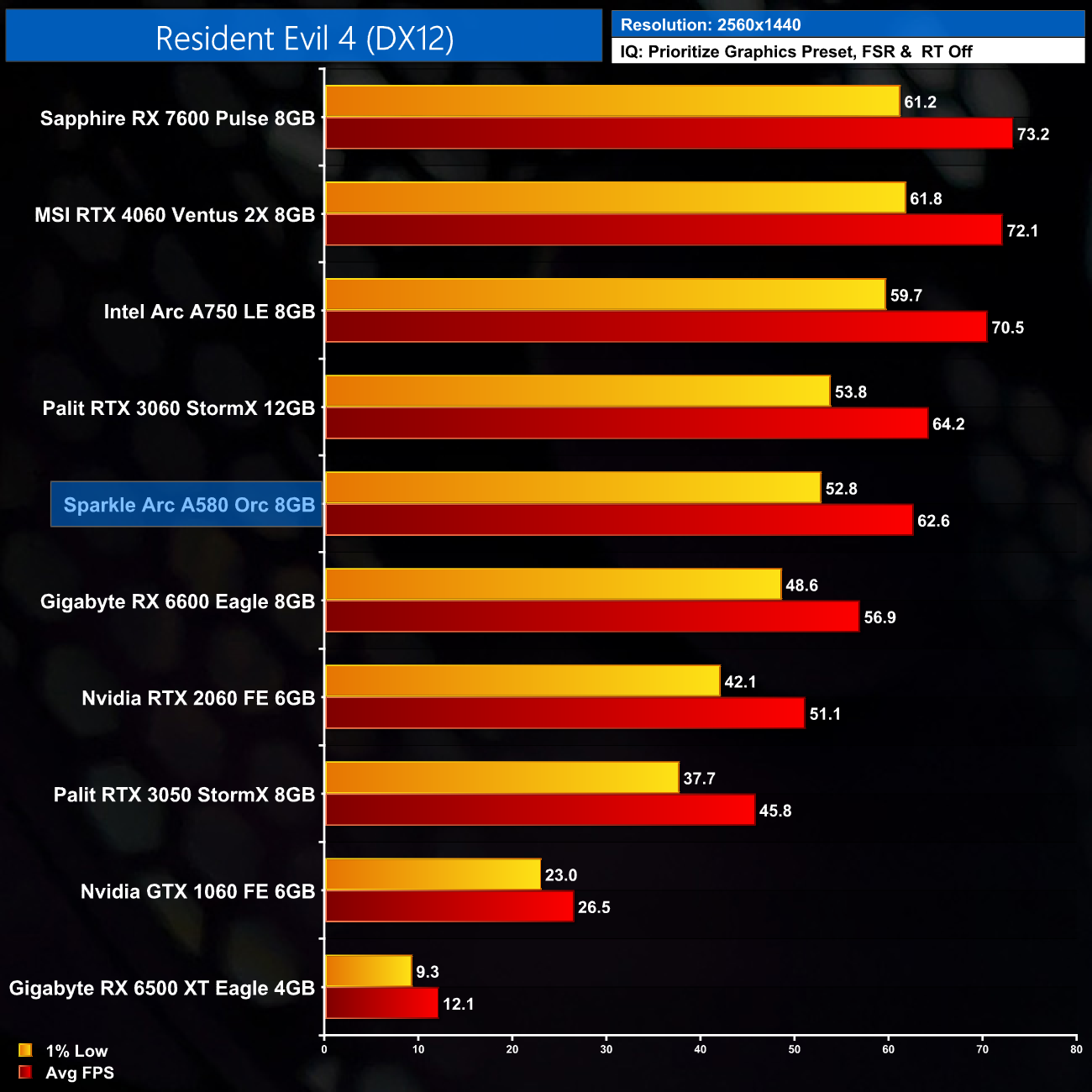

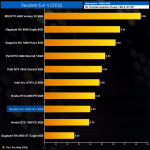

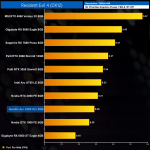

Resident Evil 4 is a 2023 survival horror game developed and published by Capcom. It is a remake of the 2005 game Resident Evil 4. Players control the US agent Leon S. Kennedy, who must save Ashley Graham, the daughter of the United States president, from the mysterious Los Illuminados cult. Resident Evil 4 was announced in June 2022 and released on PlayStation 4, PlayStation 5, Windows, and Xbox Series X/S on March 24, 2023.

Engine: RE Engine. We test using the Prioritise Graphics preset with ray tracing disabled, DX12 API.

As for Resident Evil 4, at 1080p the A580 delivers 87FPS on average, which places it neatly between the RX 6600 and RTX 3060. It also comes in 8% slower than the A750.

Up at 1440p it's still playable, hitting over 60FPS, and this time it comes in 10% faster than the RX 6600, so that's one of the better results for the A580.

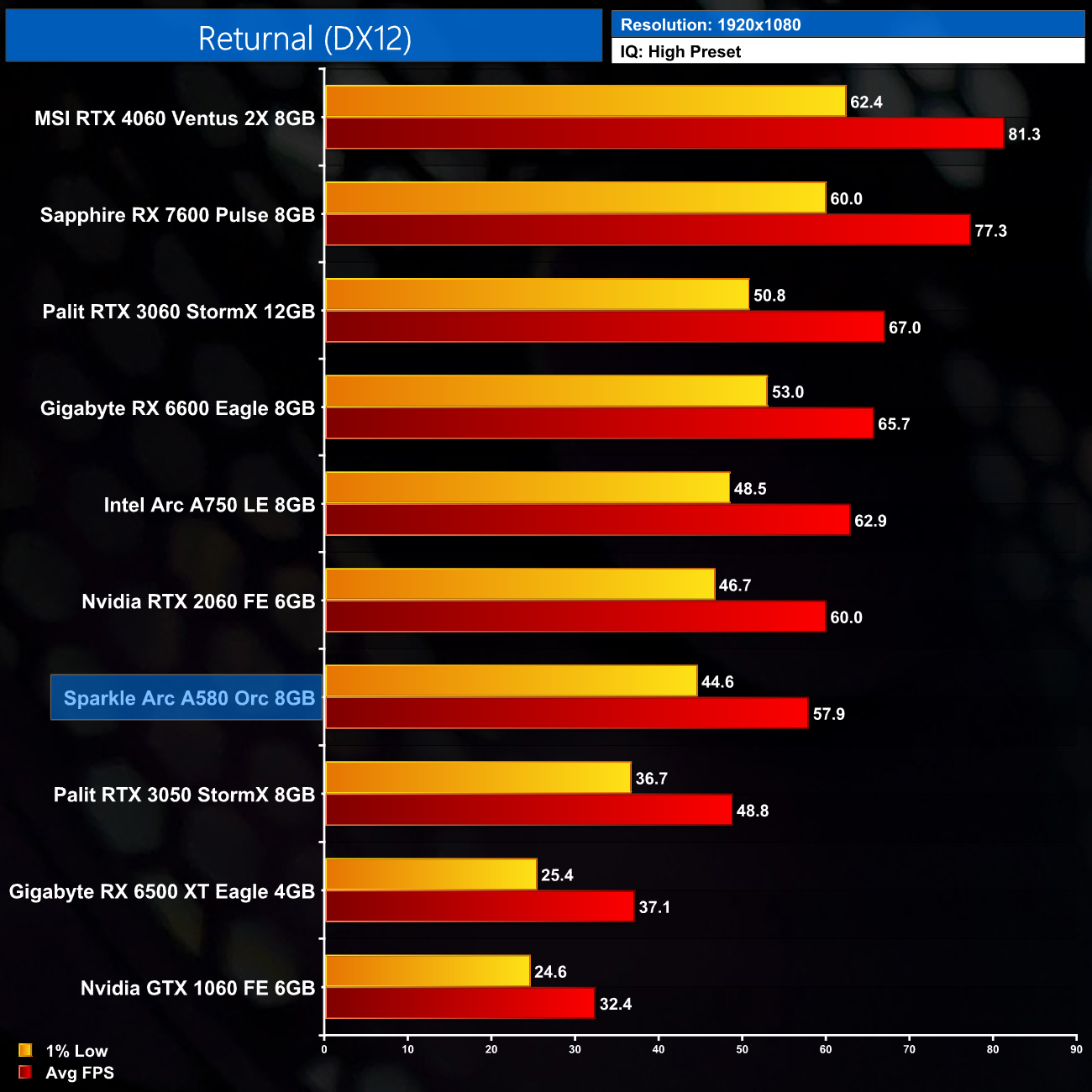

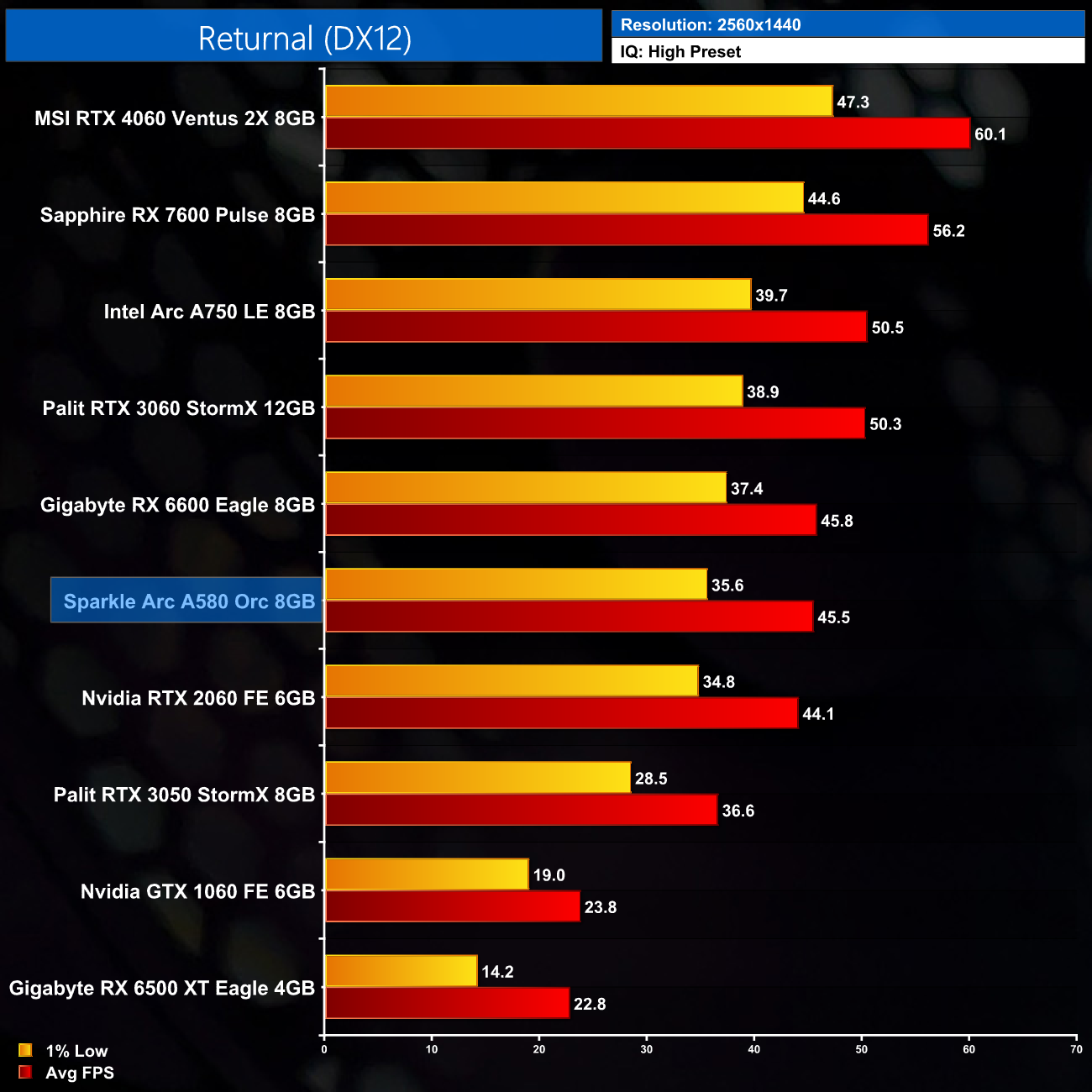

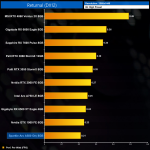

Returnal is a 2021 roguelike video game developed by Housemarque and published by Sony Interactive Entertainment. It was released for the PlayStation 5 on April 30, 2021 and Windows on February 15, 2023. The game follows Selene Vassos, an astronaut who lands on the planet Atropos in search of the mysterious “White Shadow” signal and finds herself trapped in a time loop. (Wikipedia).

Engine: Unreal Engine 4. We test using the High preset with ray tracing disabled, DX12 API.

Returnal is slightly less impressive but still OK for the A580. It hits 58FPS on average at 1080p, this time coming in 12% slower than the RX 6600 but still 19% ahead of the RTX 3050.

Up at 1440p it improves however, drawing level with the RX 6600 and increasing its lead to 24% over the RTX 3050.

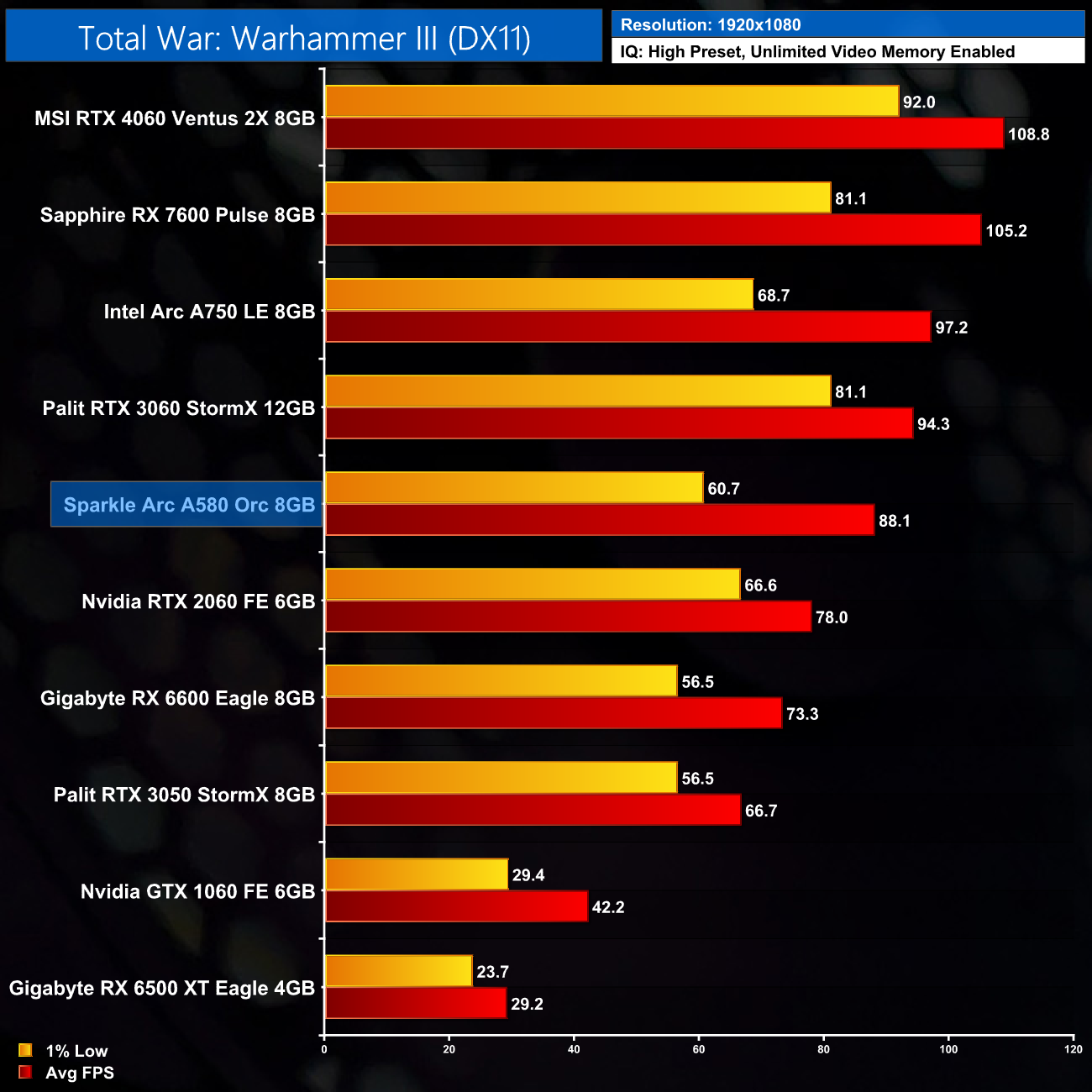

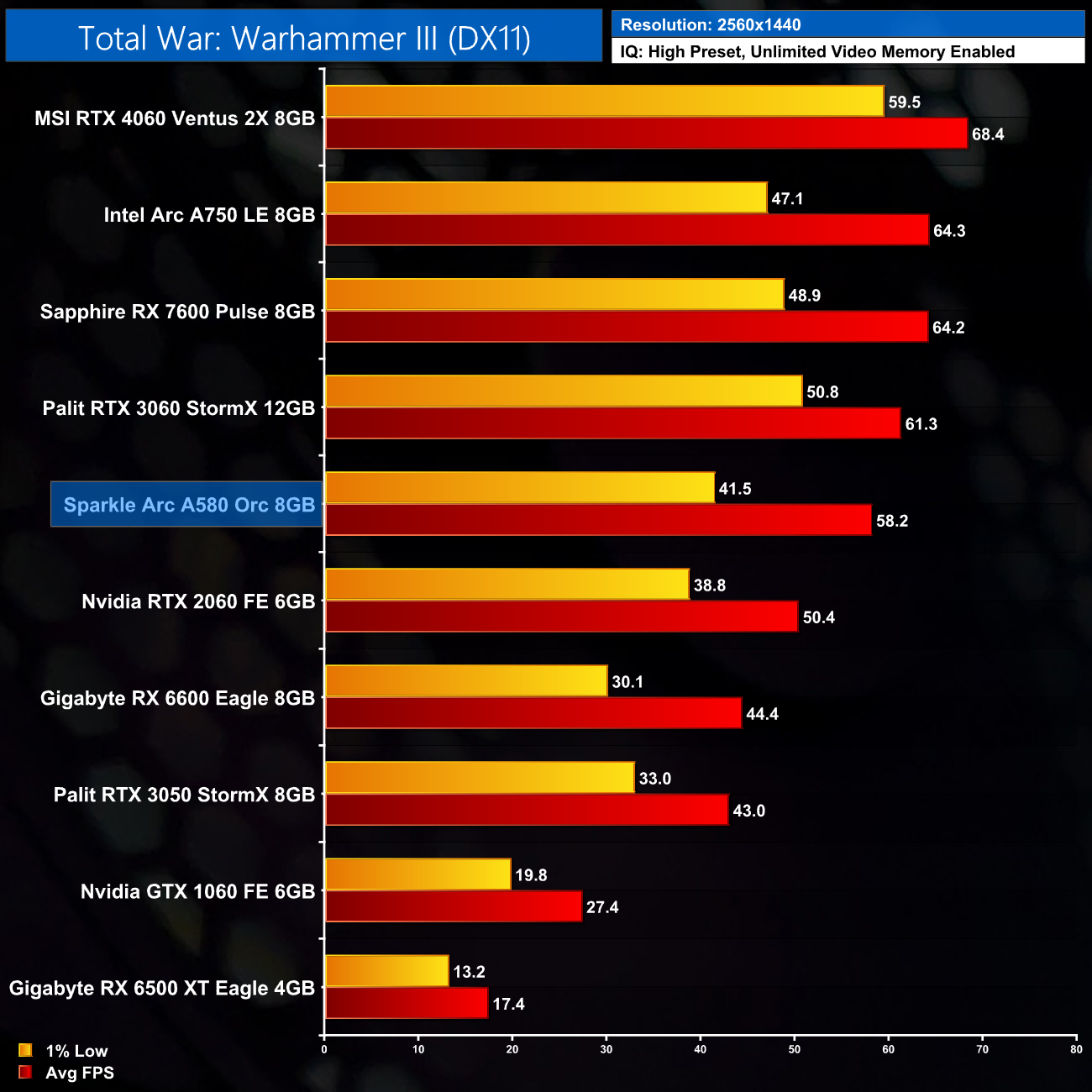

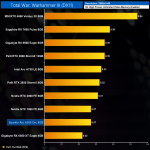

Total War: Warhammer III is a turn-based strategy and real-time tactics video game developed by Creative Assembly and published by Sega. It is part of the Total War series, and the third to be set in Games Workshop's Warhammer Fantasy fictional universe (following 2016's Total War: Warhammer and 2017's Total War: Warhammer II). The game was announced on February 3, 2021 and was released on February 17, 2022. (Wikipedia).

Engine: TW Engine 3 (Warscape). We test using the High preset, with unlimited video memory enabled, DX11 API.

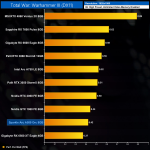

Lastly, we finish up with Total War: Warhammer III. At first glance the A580 looks good enough, coming in 20% faster than the RX 6600 in terms of the average frame rate – but the 1% lows are a let down here. They're only 7% better than the RX 6600, and worse than the RTX 2060, despite the average frame rate of that Turing GPU being lower overall.

Likewise, at 1440p the A580 hits 58FPS average but with the 1% lows lagging behind – it's only a small improvement over the RTX 2060 at this resolution.

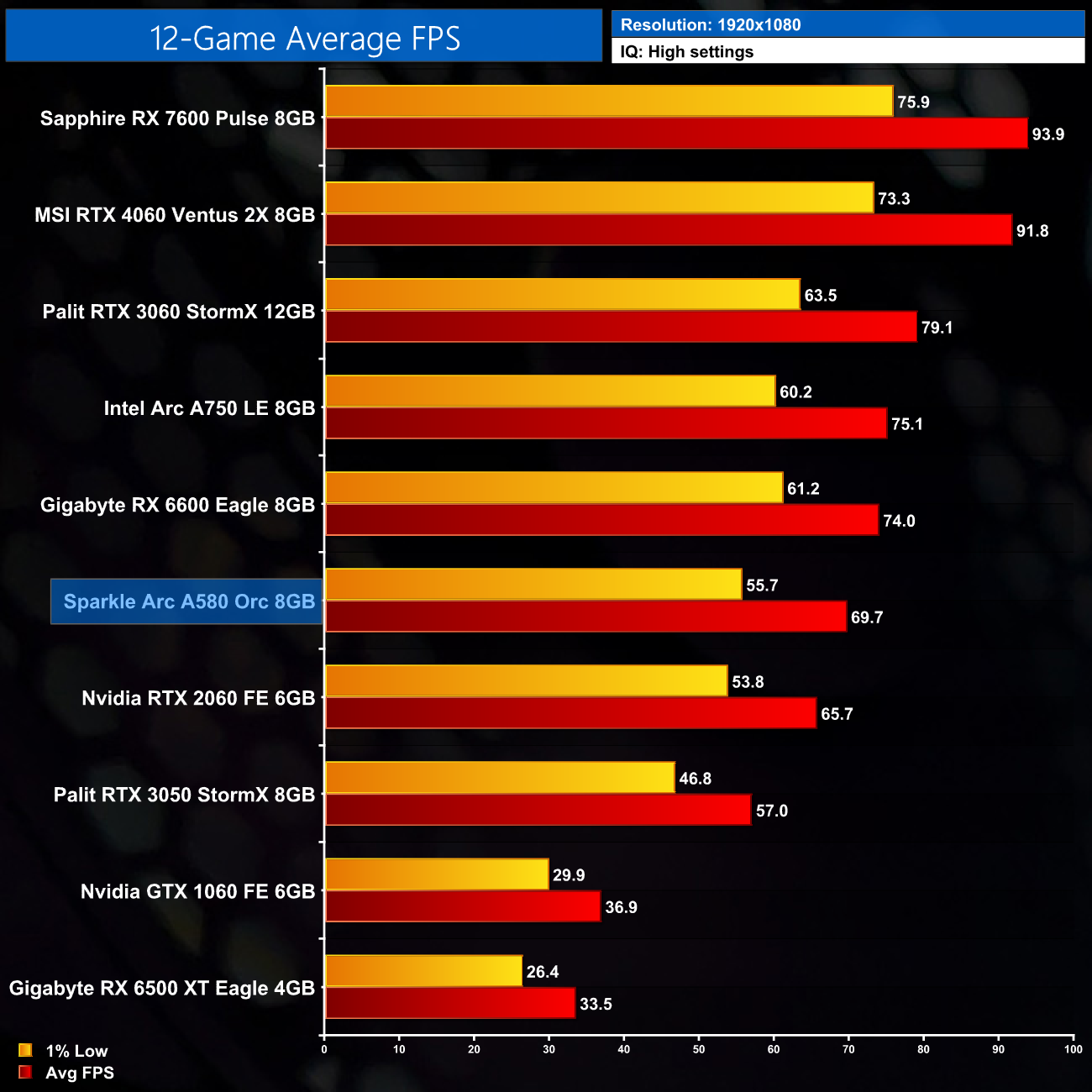

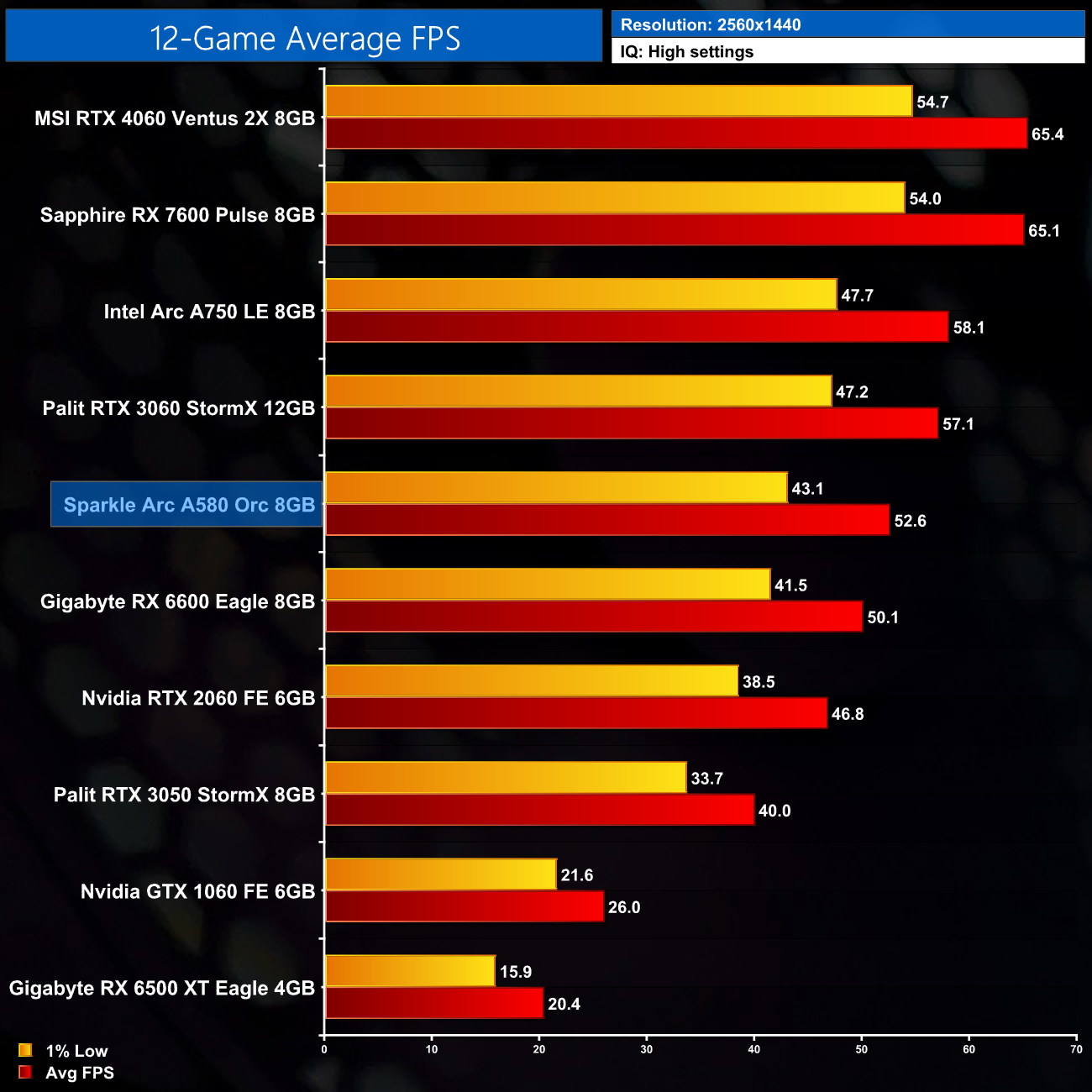

Here we present frame rate figures for each graphics card, averaged across all 12 games on test today. These figures can disguise significant variations in performance from game to game, but provide a useful overview of the sort of performance you can expect at each resolution tested.

Overall then, across the 12 games tested, the A580 delivered an average of 70FPS at 1080p. That makes it 6% slower than the RX 6600 looking at the average frame rate, but it's actually 9% slower in terms of the 1% lows. It's also 6% ahead of the RTX 2060, but just 7% behind the Arc A750.

1440p is slightly more impressive for the A580, but not every game can run at a decent frame rate as we saw. Still, here it is now 5% faster than the RX 6600, though it comes in 8% slower than the RTX 3060 and 10% behind the A750.
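As a quick aid to reading these percentage gaps, the Python sketch below shows one way of calculating "X% slower" and of working backwards from a quoted gap. The helper names are hypothetical, and the RX 6600 figure it produces is implied from the quoted 6% deficit at 1080p rather than a measured result. It also assumes the gap is expressed relative to the faster card, which is an assumption on my part.

```python
def percent_slower(card_fps, reference_fps):
    """How much slower `card_fps` is than `reference_fps`, as a percentage."""
    return (reference_fps - card_fps) / reference_fps * 100.0

def implied_reference_fps(card_fps, slower_by_percent):
    """Invert percent_slower: the reference FPS implied by a quoted gap."""
    return card_fps / (1.0 - slower_by_percent / 100.0)

A580_AVG_1080P = 70.0  # 12-game 1080p average quoted in this review

# Implied (not measured) RX 6600 average from the quoted 6% deficit.
rx6600_implied = implied_reference_fps(A580_AVG_1080P, 6.0)
print(f"Implied RX 6600 average: {rx6600_implied:.1f} FPS")
```

Note that "6% slower" and "6% faster" are not symmetrical: a card that is 6% slower than another leaves the other card roughly 6.4% faster in return.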

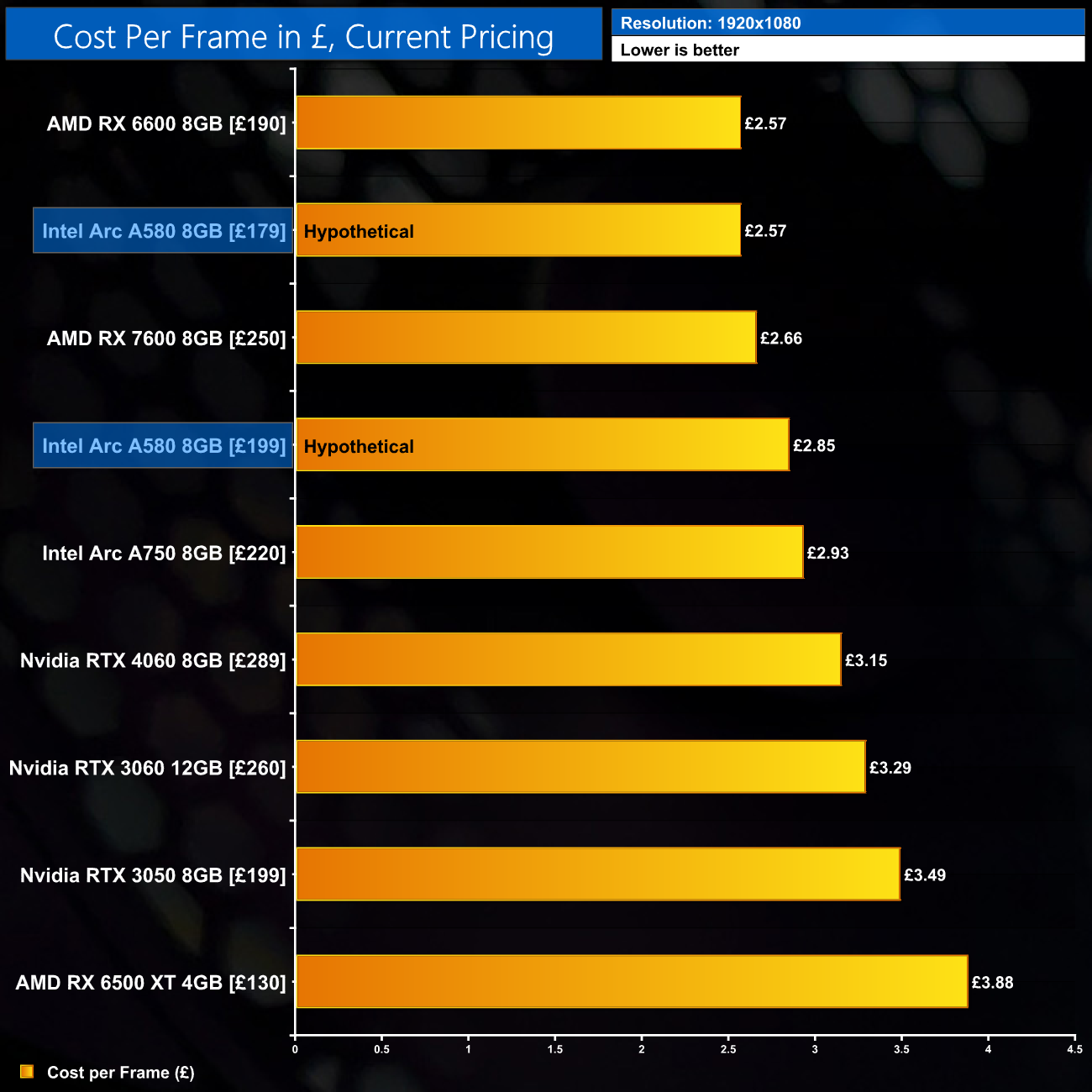

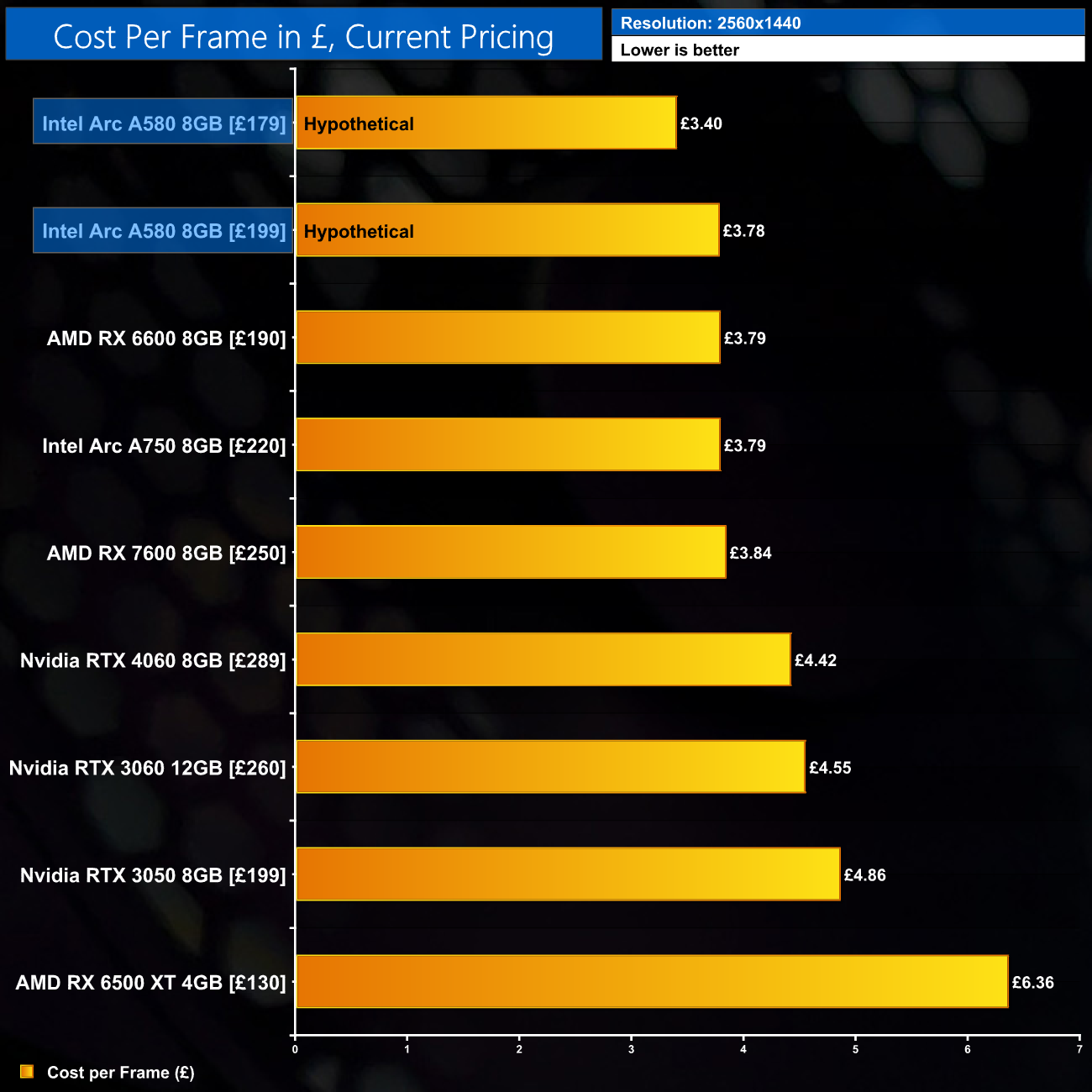

Using the average frame rate data presented earlier in the review, here we look at the cost per frame using the current UK retail prices for each GPU. Please note, UK pricing for the A580 is unconfirmed at the time of publication, so to give you an idea of value we have included data for two hypothetical price points for this GPU (£179 and £199). Of course, we will have to wait and see for the actual figure!

Still, at 1080p, even if the A580 hit the UK market at £179, it would only be on par with the RX 6600, which has fallen as low as £190 in recent months. If the A580 comes in at £199, even the RX 7600 would be better value.

That does change at 1440p, where the A580 scales better, but not every game is playable at this resolution without dropping image quality settings by a fair degree.
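Cost per frame is simply the price divided by the average frame rate. The short Python sketch below applies that to the A580's 70FPS 12-game average at 1080p and the two hypothetical UK price points; the function name is my own, and prices for the other cards are not included as they are not quoted in the text.

```python
def cost_per_frame(price_gbp, average_fps):
    """Pounds paid per frame per second of average performance."""
    return price_gbp / average_fps

A580_AVG_1080P = 70.0  # 12-game 1080p average quoted in this review

for price in (179.0, 199.0):  # the two hypothetical UK price points
    value = cost_per_frame(price, A580_AVG_1080P)
    print(f"GBP {price:.0f}: GBP {value:.2f} per frame")
```

That works out at roughly £2.56 per frame at £179 and £2.84 per frame at £199, so a £20 difference at the till moves the value proposition by about 11%.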

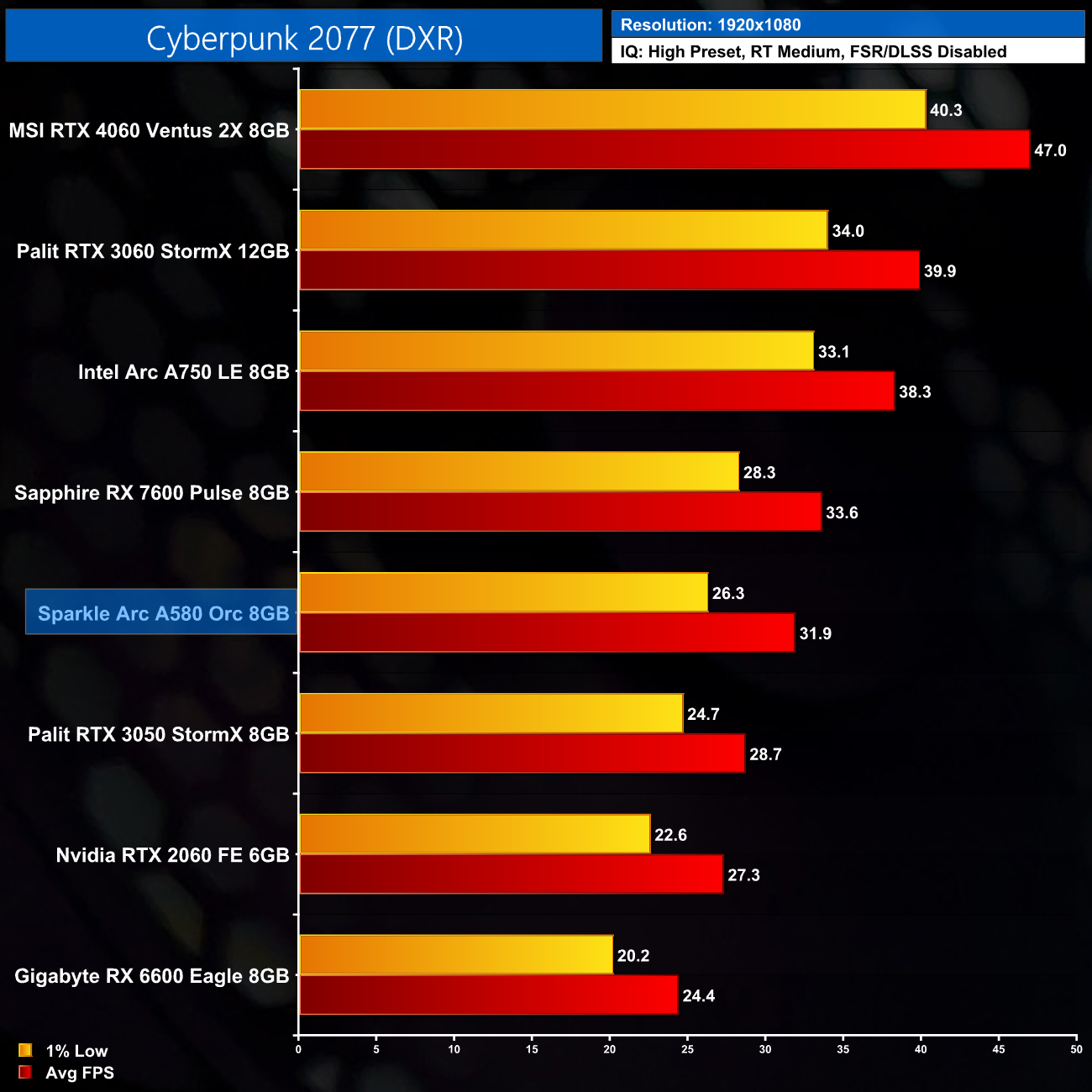

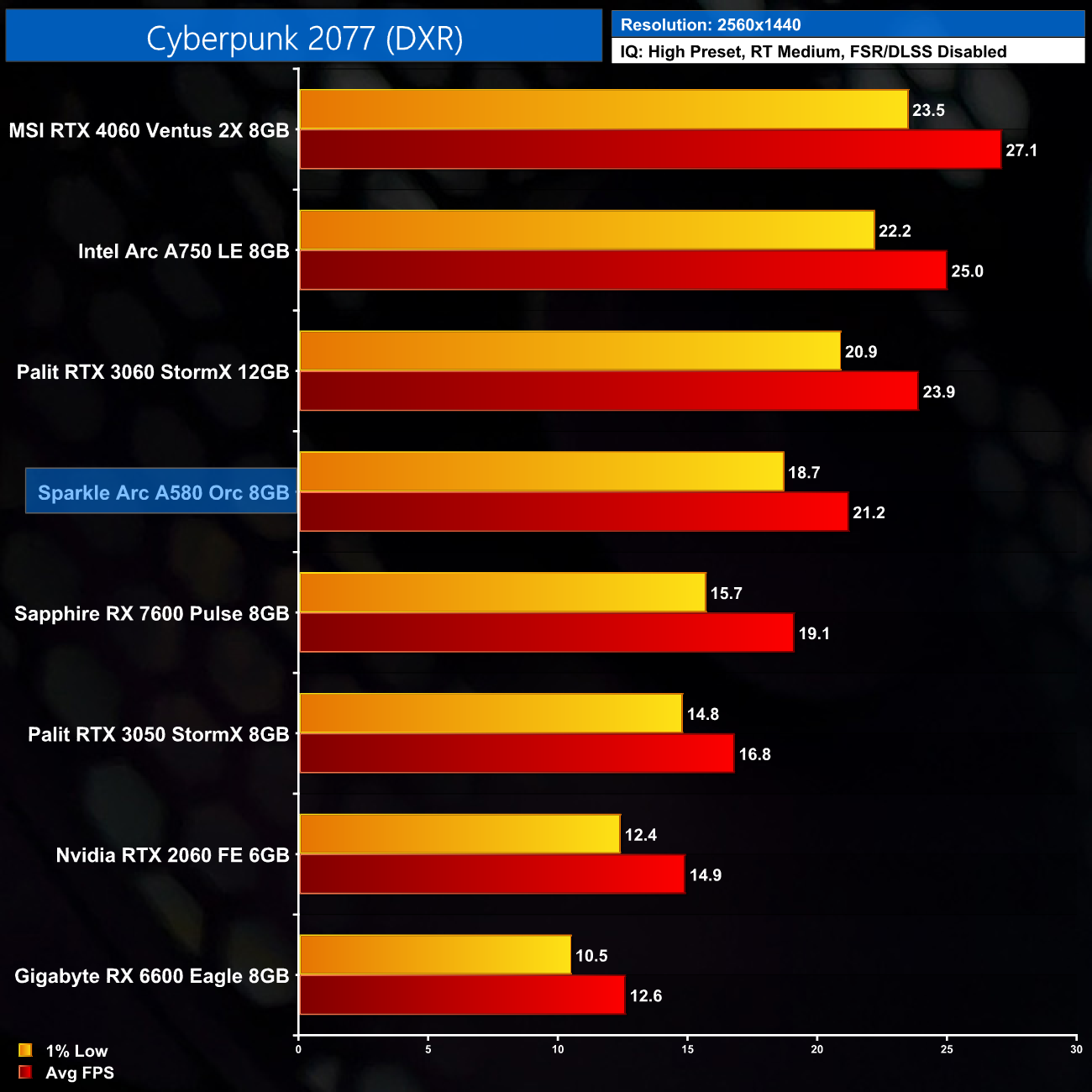


Engine: REDengine 4. We test using the High preset, with RT Shadows enabled and RT Lighting set to Medium. DLSS/FSR are disabled. DXR API.

In terms of ray tracing, if we start with Cyberpunk 2077, even using the RT Medium preset at 1080p – which only engages RT Shadows and RT Lighting – frame rates fall considerably and the 1% lows drop below 30FPS with the A580. It is massively faster than the RX 6600, but that's purely academic at this point as you simply wouldn't play the game like this.

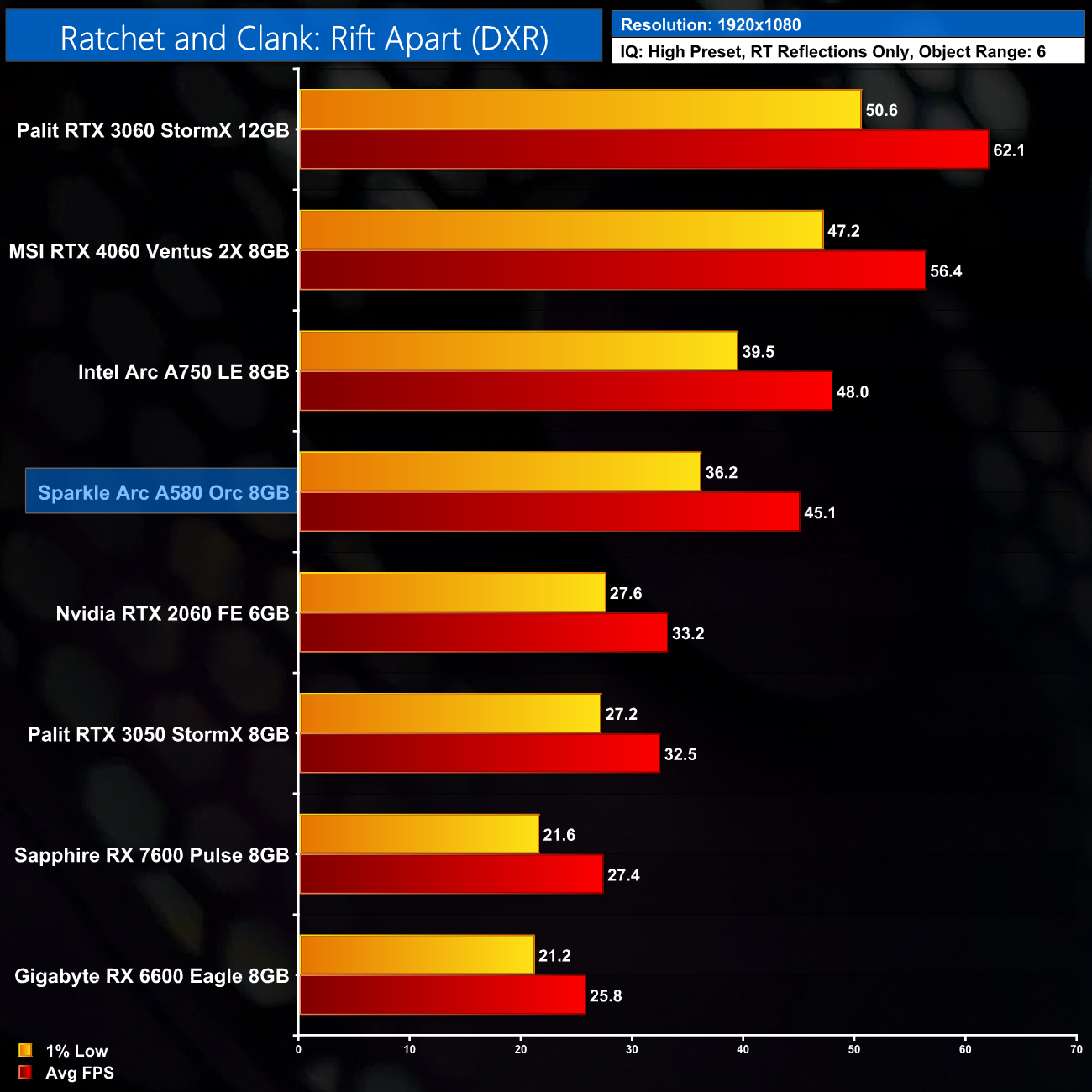

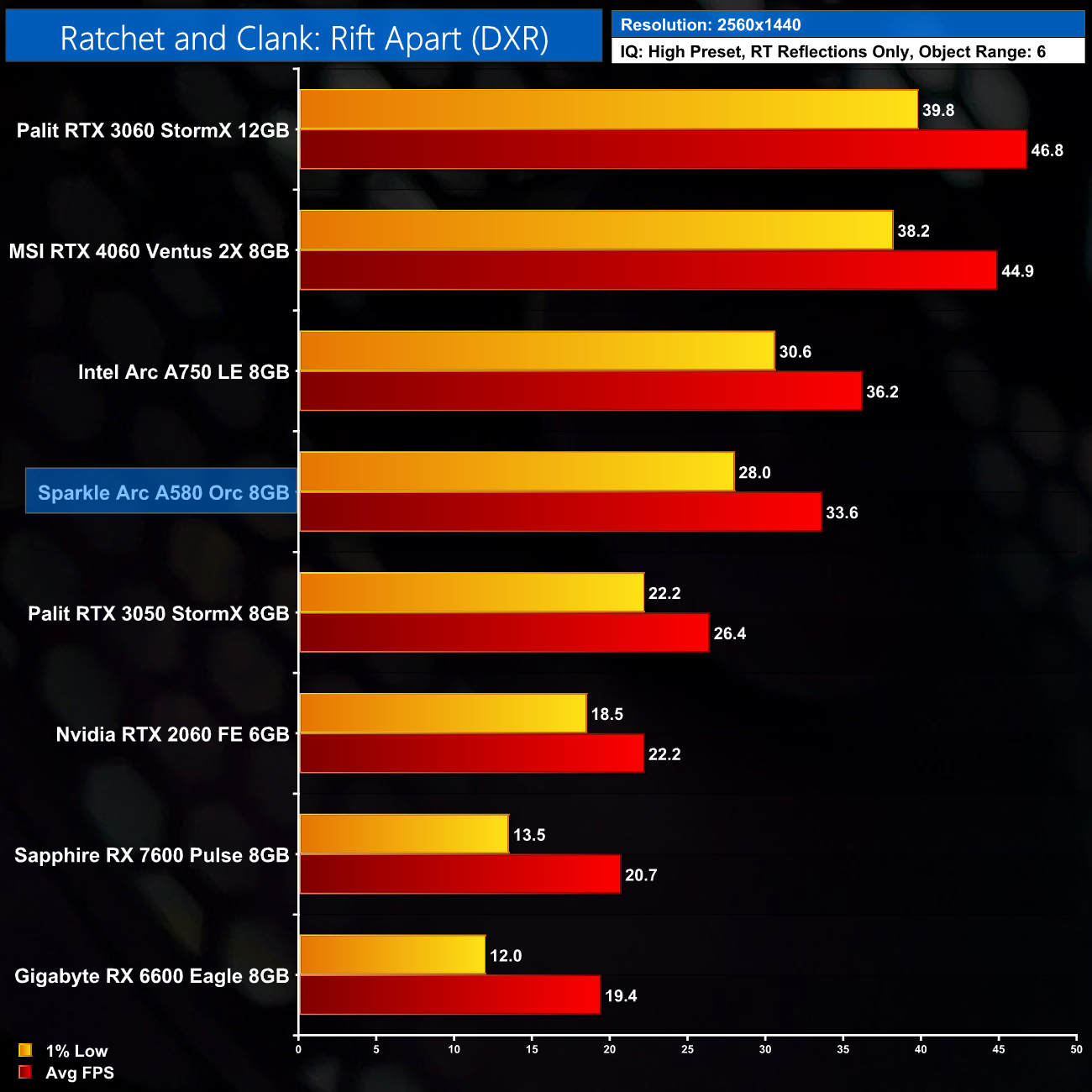


Engine: Insomniac Games in-house engine. We test using the High preset, RT High Reflections only. DX12 API.

Ratchet and Clank is next and even with just RT Reflections, we're down to just 45FPS for the A580, and 36FPS for the 1% lows. It's considerably faster than the RTX 2060 and RTX 3050, but the fact the RTX 3060 12GB is actually faster than the RTX 4060 shows us that VRAM is the main limiting factor here, even at these settings.

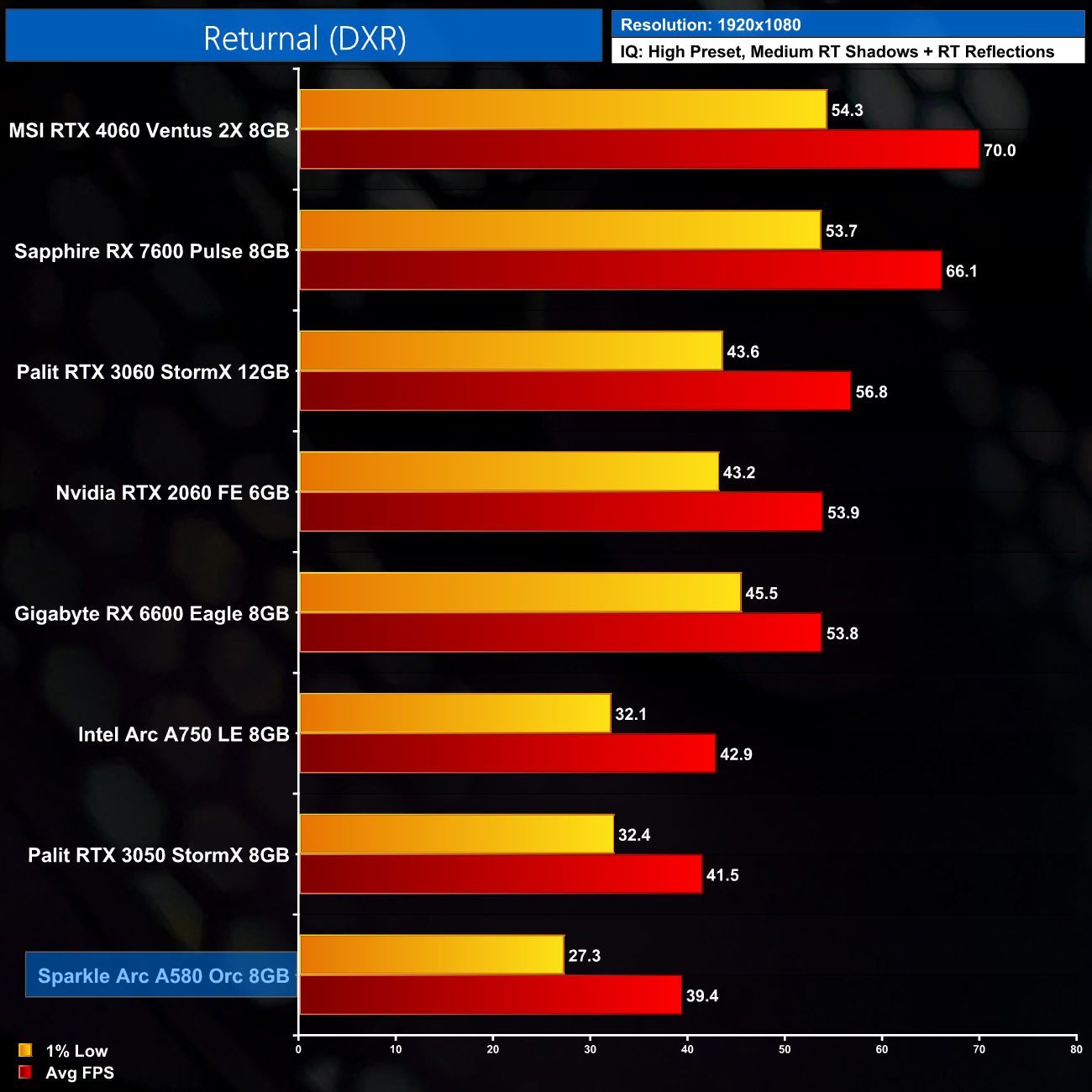

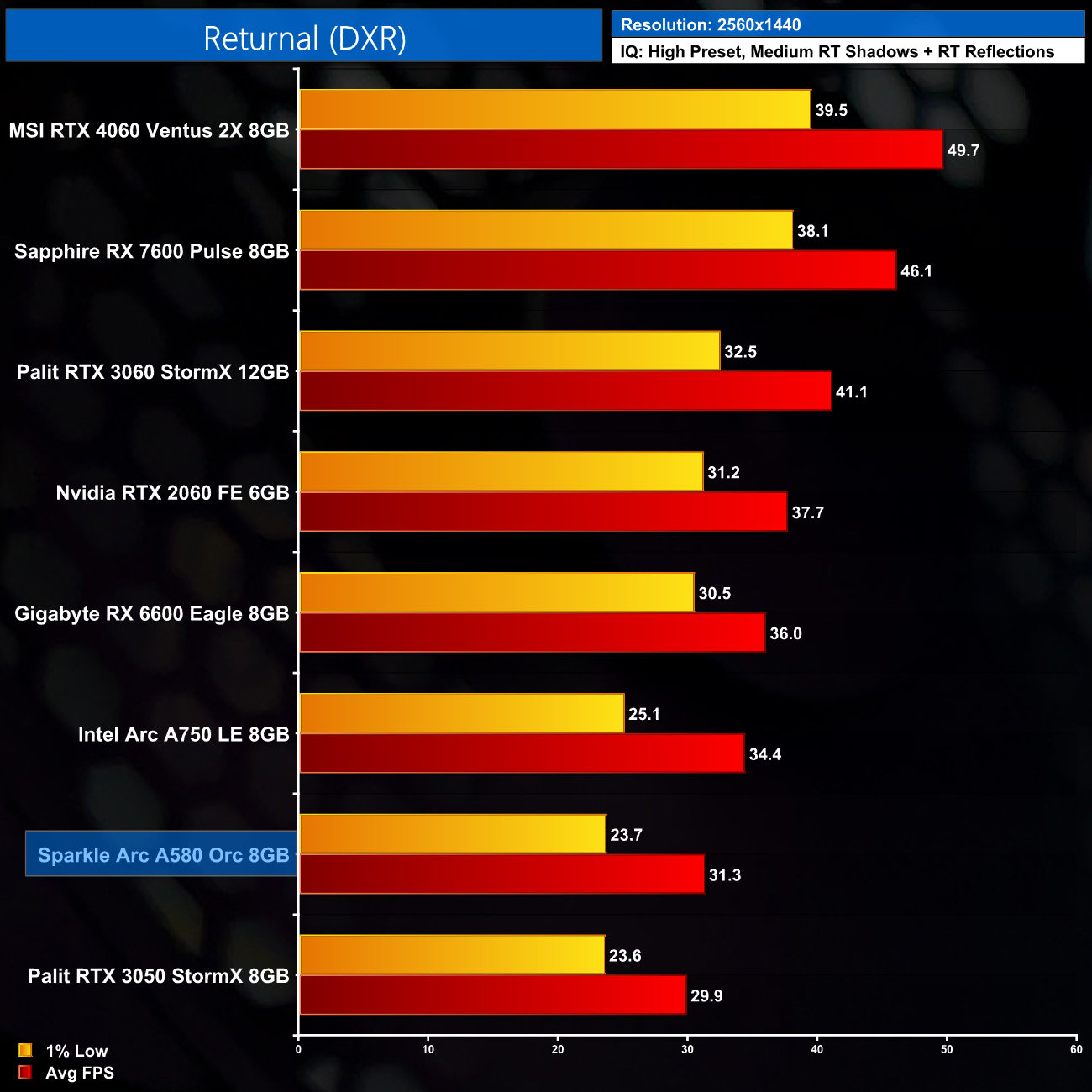


Engine: Unreal Engine 4. We test using the High preset with ray traced Shadows and Reflections set to Medium, DX12 API.

Next we come to Returnal, and there's clearly some sort of driver issue with RT enabled in this one. The A580 drops like a stone to the bottom of the chart, and even the A750 does very poorly, which goes against everything else we've seen today.

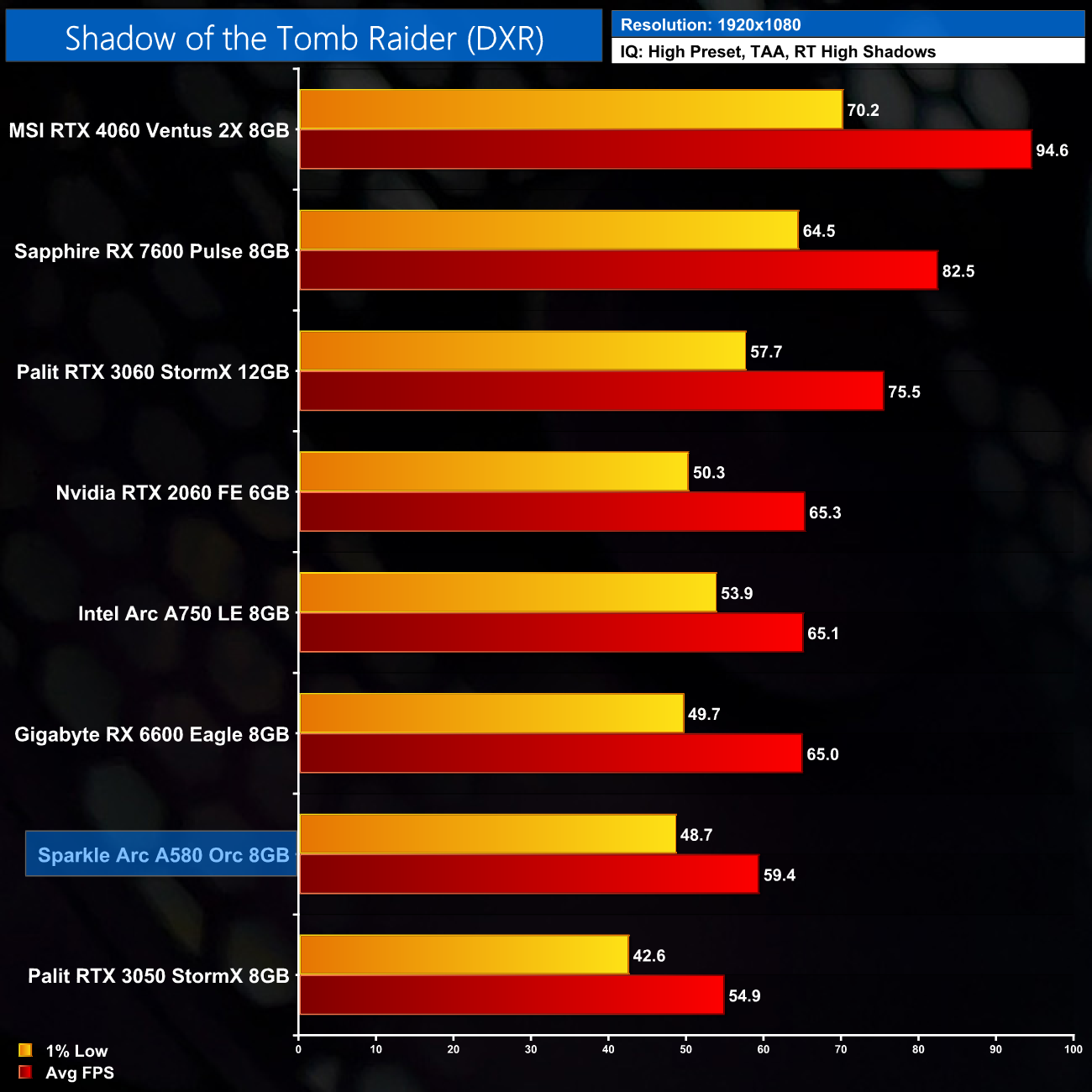

Shadow of the Tomb Raider is a 2018 action-adventure video game developed by Eidos-Montréal and published by Square Enix's European subsidiary. It continues the narrative from the 2015 game Rise of the Tomb Raider and is the twelfth mainline entry in the Tomb Raider series, as well as the third and final entry of the Survivor trilogy. The game was originally released worldwide for PlayStation 4, Windows, and Xbox One. (Wikipedia).

Engine: Foundation Engine. We test using the High Preset, RT High Shadows. DLSS/FSR are disabled. DXR API.

Lastly, we have Shadow of the Tomb Raider, which is limited to just RT Shadows. Here the A580 is just shy of 60FPS at 1080p, but it is slower than the RX 6600 by a 9% margin, and only 8% faster than the RTX 3050.

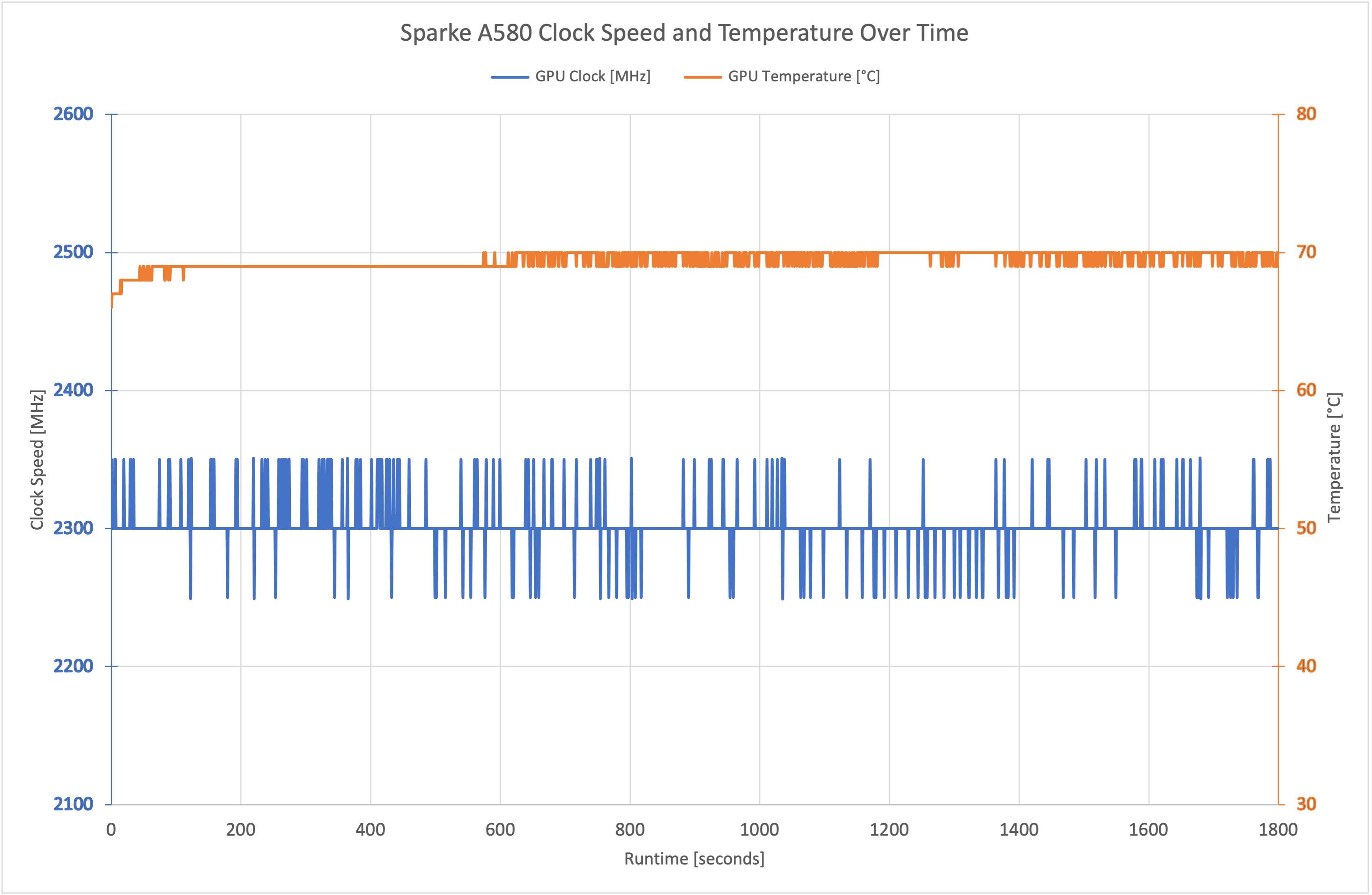

Here we present the average clock speed for each graphics card while running Cyberpunk 2077 for 30 minutes. We use GPU-Z to record the GPU core frequency during gameplay. We calculate the average core frequency during the 30 minute run to present here.

Clock speed for the A580 Orc fluctuates in 50MHz steps. By and large it held stable at 2300MHz, but with occasional drops to 2250MHz, or spikes up to 2350MHz.

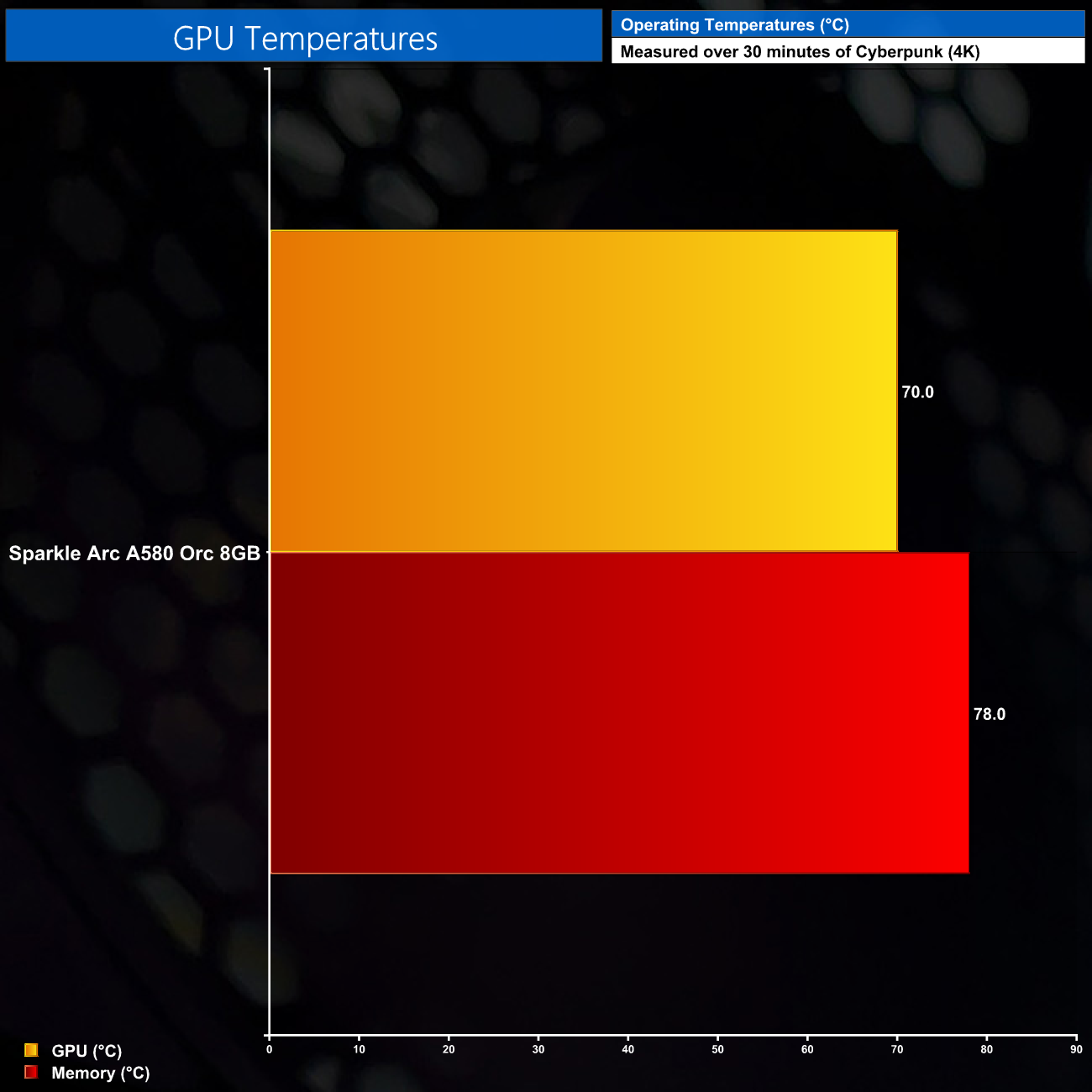

For our temperature testing, we measure the peak GPU core and memory temperature under load. A reading under load comes from running Cyberpunk 2077 for 30 minutes.

The good news is that thermals are not a problem for the A580 Orc. Over 30 minutes of Cyberpunk 2077, the GPU temperature peaked at 70C, while the memory hit 78C. We obviously can't say how this will compare to other A580 cards, but those results are well within safe limits.

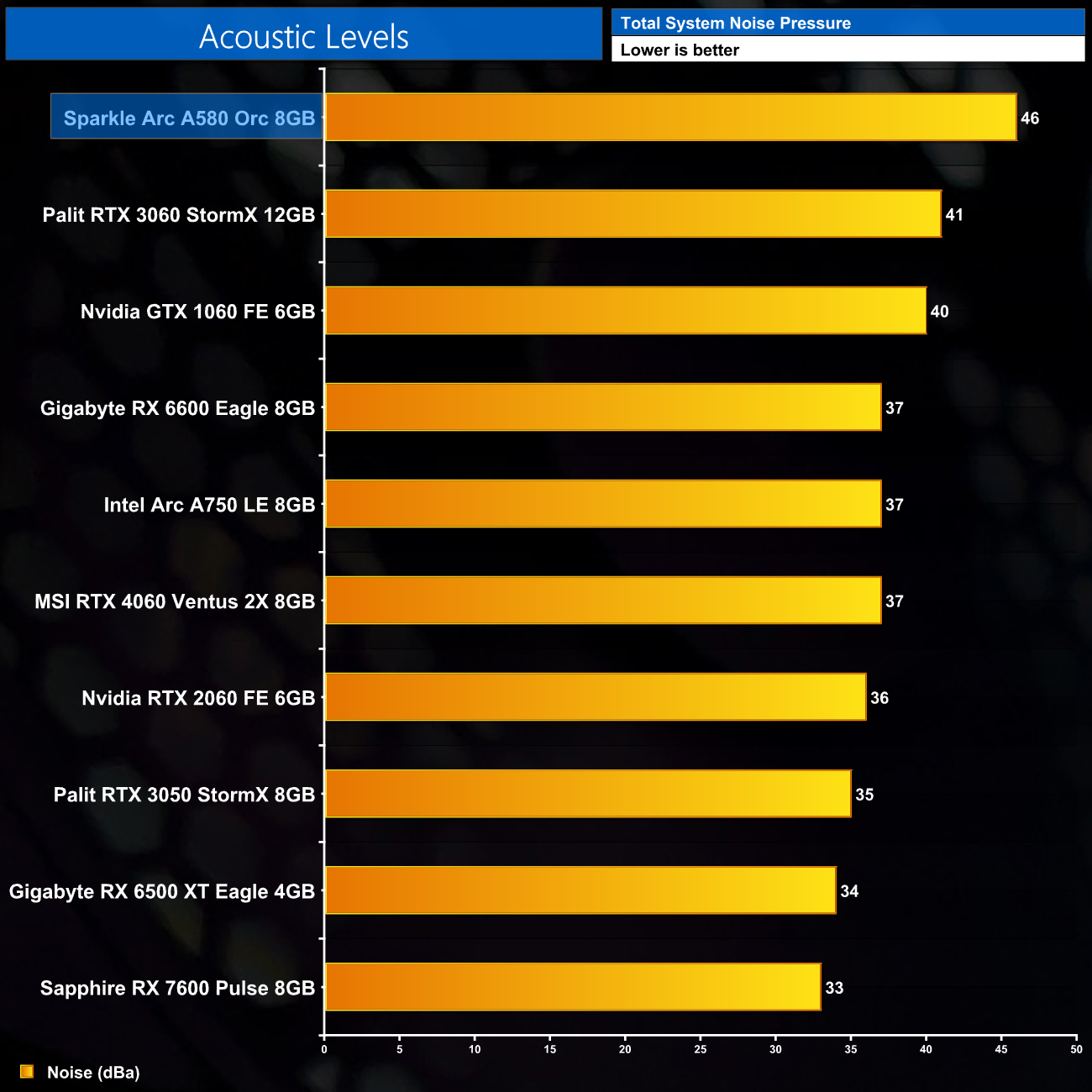

We take our noise measurements with the sound meter positioned 1 foot from the graphics card. I measured the noise floor to be 32 dBA, thus anything above this level can be attributed to the graphics cards. The power supply is passive for the entire power output range we tested all graphics cards in, while all CPU and system fans were disabled. A reading under load comes from running Cyberpunk 2077 for 30 minutes.

Unfortunately, the Orc is a loud graphics card. I saw the fans hit upwards of 2300RPM during a 30-minute stress test, and that resulted in a noise reading of 46 dBA – it is noticeably louder than the A750 Limited Edition and will be clearly audible over your case fans. I didn't notice any coil whine, but the fan noise alone is fairly unpleasant.

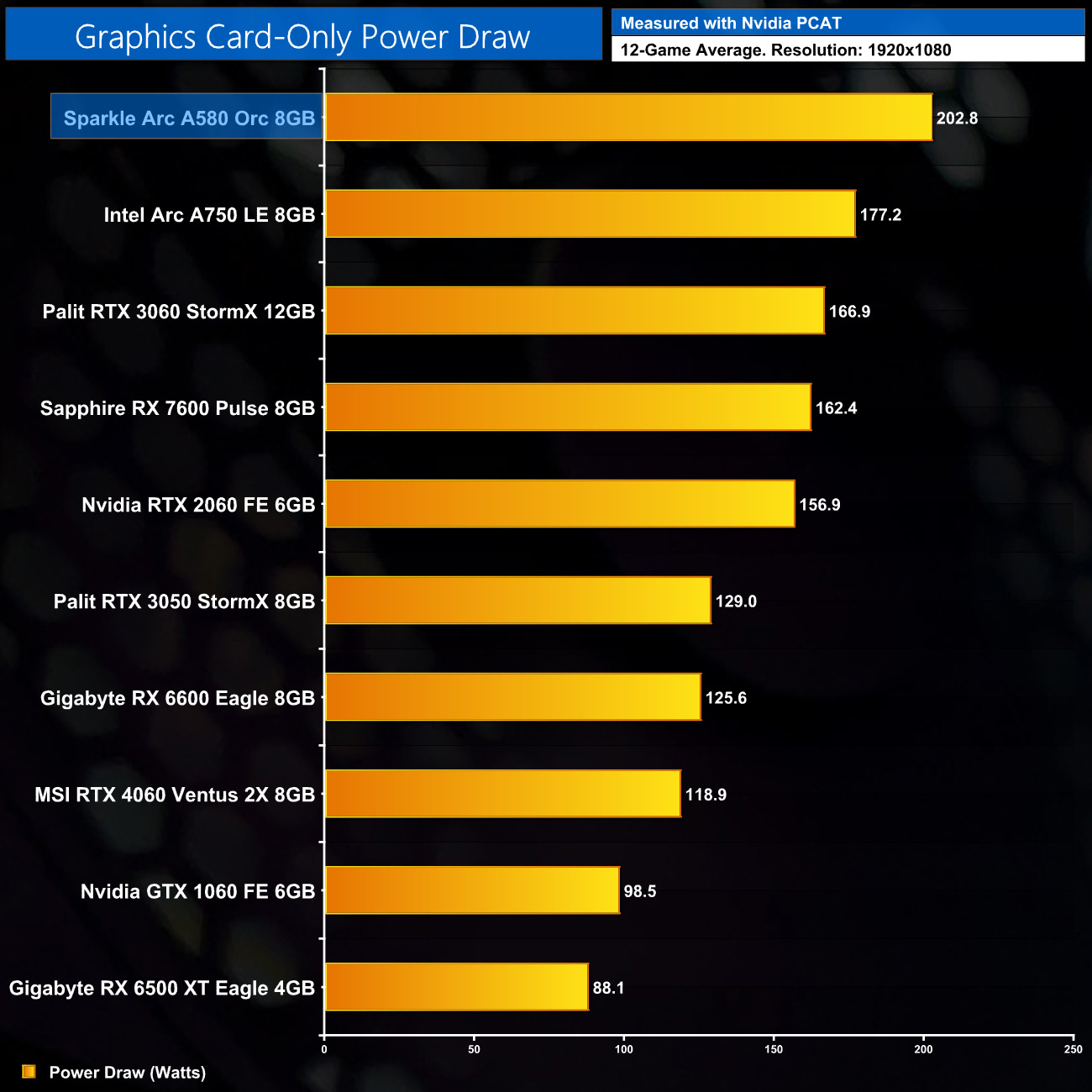

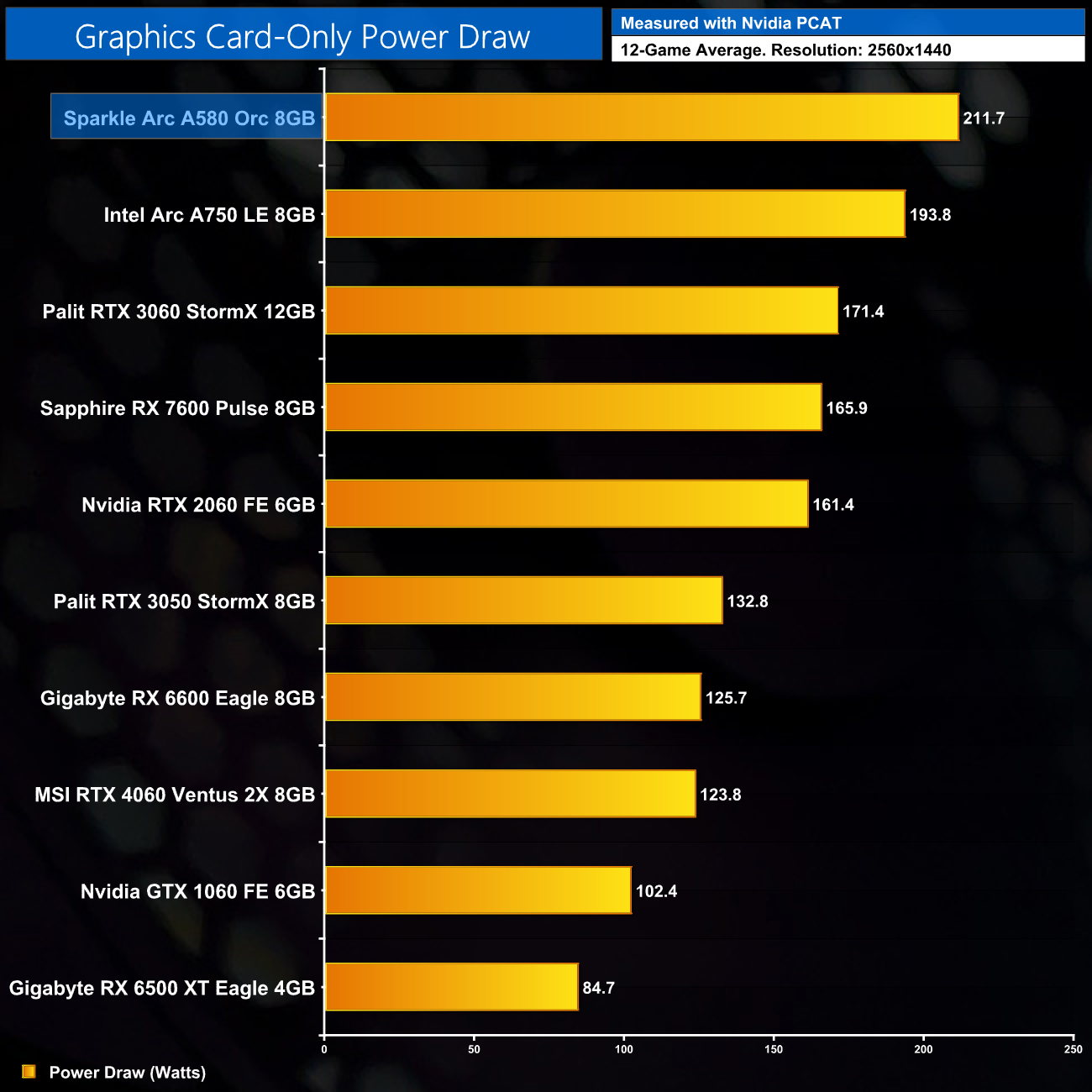

Here we present power draw figures for the graphics card-only, on a per-game basis for all twelve games we tested at 1080p. This is measured using Nvidia's Power Capture Analysis Tool, also known as PCAT.

Per-Game Results at 1080p:


12-Game Average at 1080p:

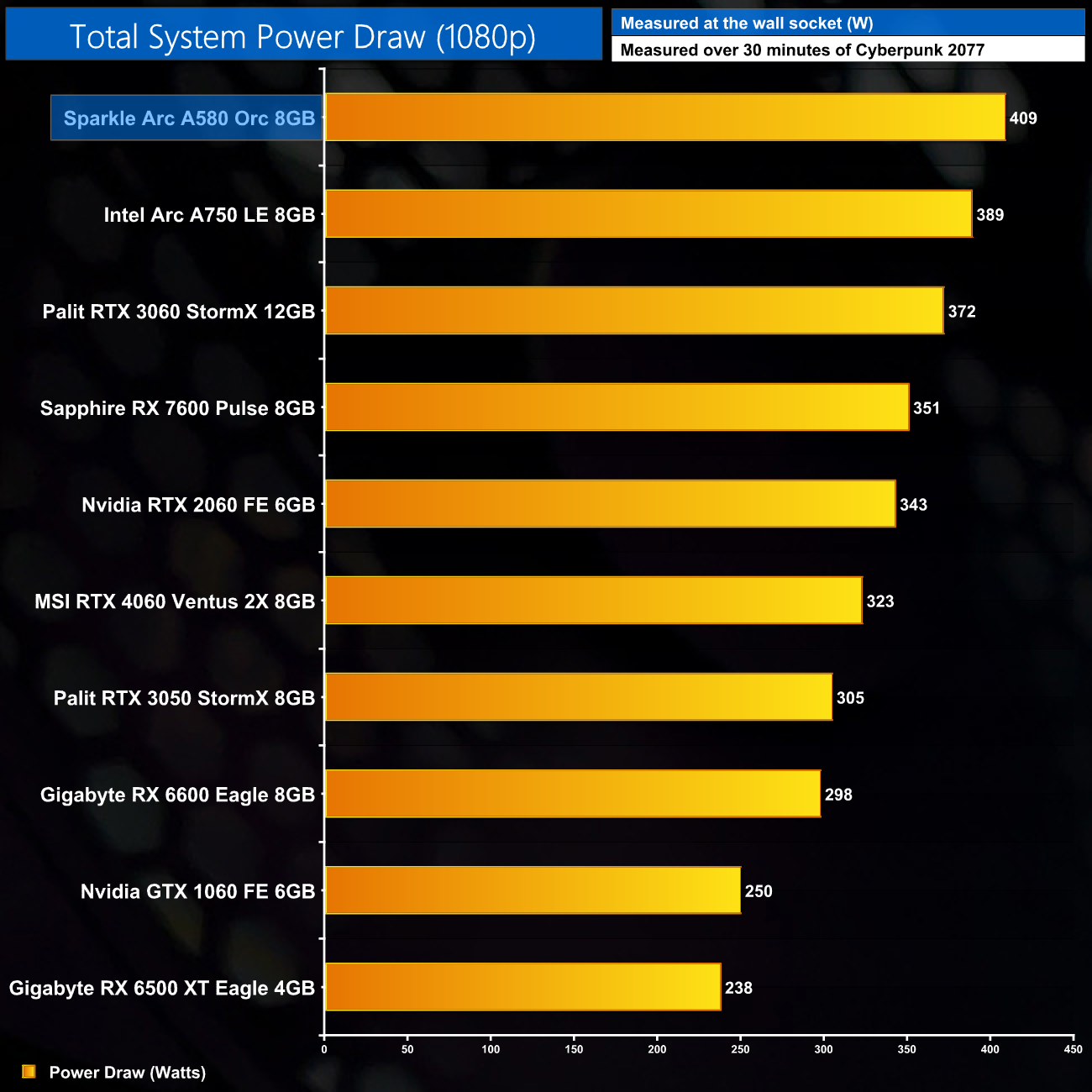

In terms of power draw, as discussed earlier in this review, Sparkle has increased the power limit significantly. Intel's reference spec suggests a 185W baseline, but the Orc appears to target 215W. It doesn't draw this much in every game we tested, but it always drew more power than the A750 Limited Edition, often by 30 or 40W.

Averaged over all 12 games tested, it drew 202.8W, which is a 14% increase over the A750, or a massive 61% increase over the RX 6600. This does not bode well for efficiency…

Here we present power draw figures for the graphics card-only, on a per-game basis for all twelve games we tested at 1440p. This is measured using Nvidia's Power Capture Analysis Tool, also known as PCAT.

Per-Game Results at 1440p:


12-Game Average at 1440p:

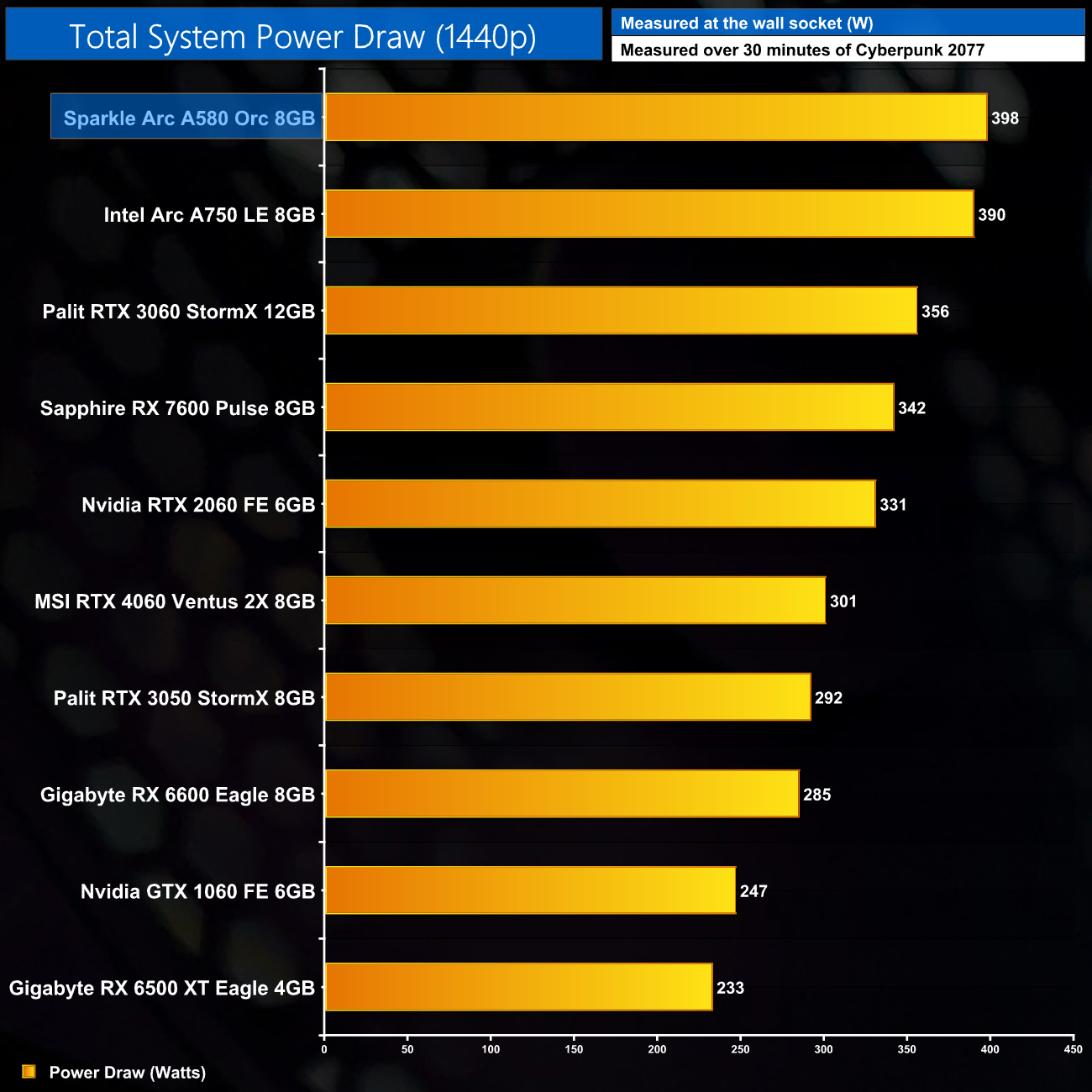

At 1440p, power draw is increased for the A580, this time averaging 211.7W. That's still a sizeable increase over the A750 Limited Edition and an eye-watering 68% increase versus the RX 6600.

Using the graphics card-only power draw figures presented earlier in the review, here we present performance per Watt on a per-game basis for all twelve games we tested at 1080p.

Per-Game Results at 1080p:


12-Game Average at 1080p:

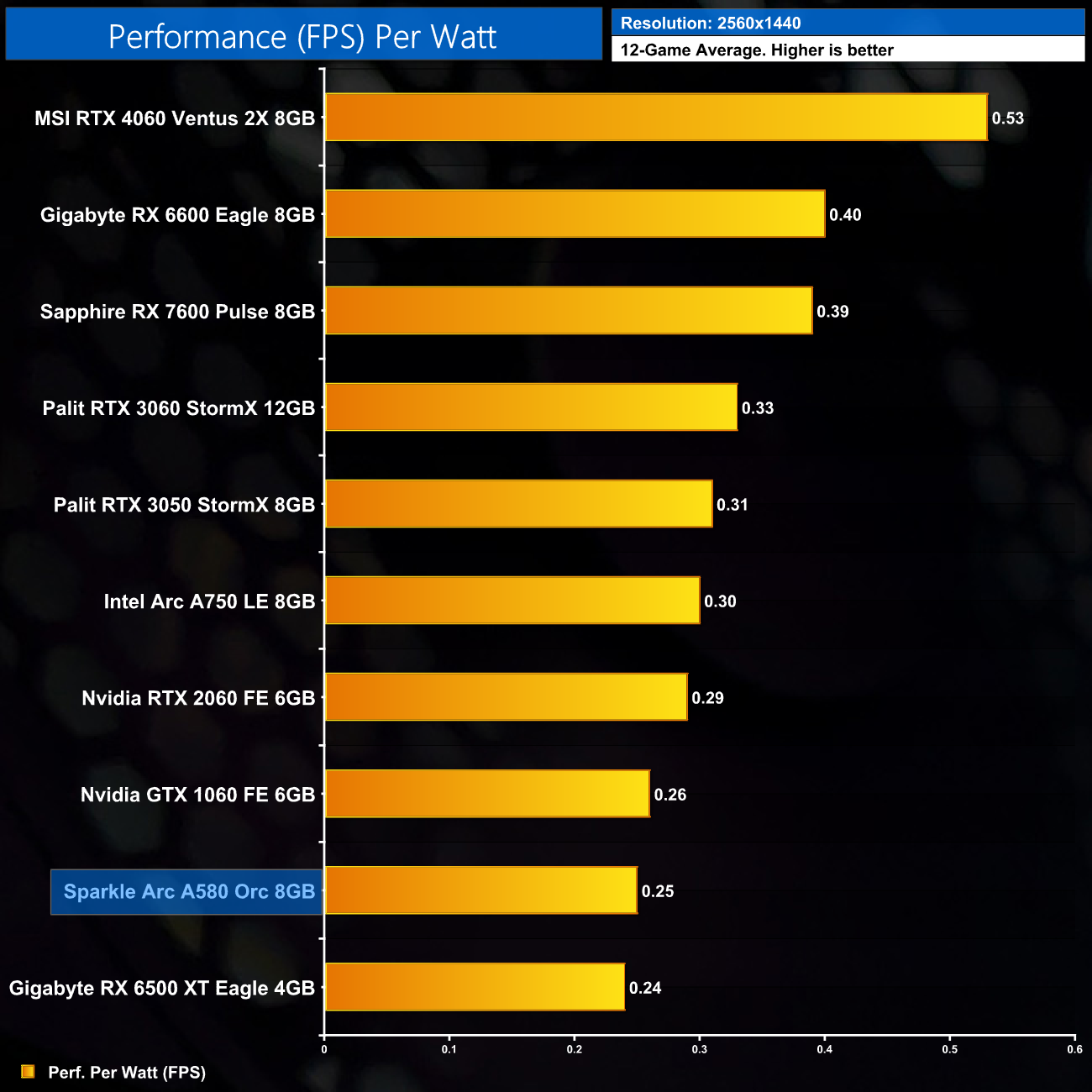

As it turns out, performance per Watt is simply awful for the Sparkle A580 Orc – there's no other way to put it. The power draw is just far too high for this level of performance, and it delivers lower efficiency than even the GTX 1060 6GB from 2016. I have to say I really don't know what Sparkle was thinking by increasing the power limit by this much – our testing shows it barely performs 2-4% better than when we tuned the GPU to 185W, resulting in pretty horrible efficiency levels.
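For a rough sense of scale, performance per watt can be approximated by dividing the average frame rate by the average board power. The Python sketch below does this with the A580's 12-game 1080p averages quoted in this review (70FPS and 202.8W). Bear in mind it is an approximation: our charts may instead average the per-game ratios, which would not give exactly the same figure, and the function name is my own.

```python
def perf_per_watt(average_fps, average_power_w):
    """Frames per second delivered for each watt of board power."""
    return average_fps / average_power_w

A580_FPS_1080P = 70.0     # 12-game average frame rate at 1080p
A580_POWER_1080P = 202.8  # 12-game average board power at 1080p, in watts

a580_efficiency = perf_per_watt(A580_FPS_1080P, A580_POWER_1080P)
print(f"{a580_efficiency:.3f} FPS per watt")
```

That is roughly a third of a frame per second for every watt drawn, which helps explain why a card that is slightly slower but far less power hungry comes out so far ahead on this metric.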

Using the graphics card-only power draw figures presented earlier in the review, here we present performance per Watt on a per-game basis for all twelve games we tested at 1440p.

Per-Game Results at 1440p:

12-Game Average at 1440p:

The same goes for 1440p, too. At this resolution it is now a touch more efficient than the RX 6500 XT, but it's still behind the 1060 6GB, and is miles off the pace when compared to the RX 6600.

We measure system-wide power draw from the wall while running Cyberpunk 2077 for 30 minutes. We do this at 1080p, 1440p and 2160p (4K) to give you a better idea of total system power draw across a range of resolutions, where CPU power is typically higher at the lower resolutions.

Total system power draw peaked at around 410W in my testing, and bear in mind this is with an i9-13900KS. I've not seen an official PSU recommendation from Intel, but I'd say 500W is probably the minimum you'd want to be looking at, depending on other factors such as your CPU and number of additional drives, fans, LED strips etc.

I have to say I was taken by surprise when Intel reached out and offered me a review sample of the new Arc A580. This GPU was announced alongside the A750 and A770, but it quickly vanished into the ether and was presumed by many to have been quietly cancelled. Not so, as today the A580 is hitting the market with a $179 MSRP, and we've been putting it through its paces over the last week. It's safe to say it is somewhat of a mixed bag.

Starting with the positives, as a slightly cut-down A750, using the same ACM-G10 silicon, the A580 is generally a capable gaming graphics card considering the price point. Across my testing it delivered near-60FPS experiences in pretty much every game at 1080p High settings, with the only real exception being The Last of Us Part 1. Its performance is generally comparable to the RX 6600, though it is 6% slower on average, while it is 6% ahead of the RTX 2060, and just 7% behind the Arc A750.

Due to the relatively wide 256-bit memory interface – at least in relation to other GPUs in this market segment – the A580 also scales well at 1440p, where it is on average 5% faster than the RX 6600. The only trouble is the silicon lacks the compute power to really be effective at this resolution, as many titles we tested dropped into the 30-40FPS region, even with slightly dialled down image quality settings.

Arguably more important, however, is just how far Arc has come since its rocky launch a year ago, and my testing over the last week is further evidence of this. I’d say that the A580 is much easier to recommend than either the A750 or A770 was at launch, thanks to the very clear improvements in driver stability. I didn’t experience any crashes or BSODs during my testing, fan control is a lot less buggy now, and the Arc Control panel is significantly more responsive than I remember.

Minor issues do still persist, such as a couple of visual glitches in Ratchet and Clank and Red Dead Redemption 2 (as shown in the video review), while idle power draw is still too high, with the A580 drawing 45W just sitting on the desktop. ReBar remains effectively required for smooth gaming performance, too.

Those things may be annoying, but easily the most prominent quirk you’ll notice is just how variable performance can be from game to game. We saw titles including Ratchet and Clank and RDR2 where the A580 is an absolute world beater and looks incredibly strong for the price. But for every one of those, there’s the likes of Total War: Warhammer III which delivers poor 1% lows, or The Last of Us Part 1 where the A580 is over 20% slower than the RX 6600.

Indeed, the RX 6600 is clearly the main competitor to the A580, and I'd have to say it is generally the smarter choice. It's currently retailing well below its launch MSRP in both the UK and US, while it is generally a touch faster than the A580. It's also much more of a known quantity – you know what sort of gaming performance you're getting from the RX 6600 across the board, while the same can't always be said of the A580.

It doesn't help that the A580 is woefully inefficient – at least, the factory overclocked Sparkle Orc model is. For reasons I can't quite fathom, Sparkle increased the power target from the 185W reference figure up to 215W, and the result is the A580 draws 61% more power (!) than the RX 6600 on average. In other words, the RX 6600 offers performance per Watt that is 74% better than the A580. The Orc is also pretty loud, with the fans ramping up to 2300RPM in my testing, so there is definitely some work to be done there.
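For anyone wanting to cross-check the efficiency gap, a back-of-the-envelope version can be built from the two 1080p averages quoted in this review: the A580 being 6% slower than the RX 6600 while drawing 61% more power. This is a sketch only. It works from rounded averages rather than the per-game data behind the 74% figure, so it lands close to that number rather than exactly on it.

```python
# Rounded 1080p averages quoted in this review, relative to the RX 6600.
a580_relative_perf = 0.94   # A580 is 6% slower on average
a580_relative_power = 1.61  # A580 draws 61% more power on average

# Performance per Watt is performance divided by power, so the A580's
# efficiency relative to the RX 6600 is the ratio of those two ratios.
a580_relative_efficiency = a580_relative_perf / a580_relative_power

# Flip it around to express the RX 6600's advantage as a percentage.
rx6600_advantage_pct = (1 / a580_relative_efficiency - 1) * 100

print(f"A580 efficiency relative to RX 6600: {a580_relative_efficiency:.2f}x")
print(f"RX 6600 performance-per-Watt advantage: {rx6600_advantage_pct:.0f}%")
```

The estimate comes out a few points under the measured figure, which is to be expected when dividing two rounded averages instead of averaging each game's own performance-per-Watt result.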

Taken as a whole though, the Intel Arc A580 is – as I said – much easier to recommend at launch than its Alchemist forebears, and if you are a general PC enthusiast who likes to have a play with new tech, the idea of owning an Intel GPU is still pretty neat. I still feel that the majority of PC gamers would be better served by the RX 6600 for the reasons outlined above, but the A580 shows Intel is moving in the right direction.

We don't have confirmed UK pricing yet, but the A580 is landing today for $179.

Discuss on our Facebook page HERE.

Pros

- Decent 1080p gaming performance at High settings.

- Scales better than the competition at 1440p, though not all games are viable at this resolution.

- Driver stability is vastly improved compared to the A750/A770 launch.

- 8GB VRAM makes sense for the price point.

- Ray tracing and XeSS are supported.

Cons

- Performance can vary significantly from game to game.

- RX 6600 is slightly faster for a similar price and is more consistent across the board.

- Power draw exceeds 200W in most games.

- Efficiency is terrible, worse than even the GTX 1060 6GB from 2016.

- Sparkle Orc card is too loud.

KitGuru says: Intel's Arc A580 may not be a clear winner over the RX 6600, but it gives us real hope for Battlemage.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards
