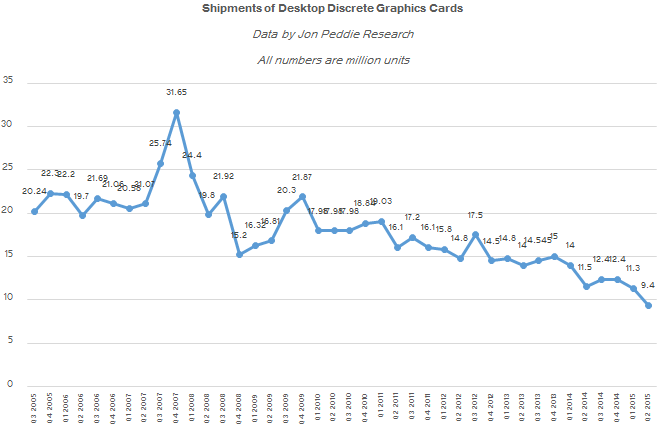

Sales of graphics cards for desktop PCs decreased once again in the second quarter of 2015, according to data from Jon Peddie Research. Market share of Advanced Micro Devices also hit a new low during the quarter.

Shipments of discrete graphics adapters for desktops dropped to 9.4 million units in Q2 2015, the lowest level in more than ten years. According to JPR, sales of graphics cards dropped 16.81 per cent compared to the previous quarter, whereas sales of desktop PCs decreased 14.77 per cent. The attach rate of add-in graphics boards (AIBs) to desktop PCs has declined from a high of 63 per cent in Q1 2008 to 37 per cent this quarter. Average sales of graphics cards have been around 15 million units per quarter in recent years, but declined sharply in 2014.
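As a rough cross-check of the figures above, the previous quarter's shipments can be backed out from the Q2 total and the reported percentage drop. This is only a sketch: the function name `implied_previous` is hypothetical, and the result is an estimate derived from the two rounded numbers quoted in the article, not a figure published by JPR.

```python
def implied_previous(current_units: float, pct_drop: float) -> float:
    """Return the prior-period value implied by a current value
    and a quarter-to-quarter percentage decline."""
    return current_units / (1.0 - pct_drop / 100.0)


# Figures quoted in the article: 9.4 million units, down 16.81 per cent.
q2_2015_units = 9.4          # millions of AIBs shipped in Q2 2015
drop_vs_q1 = 16.81           # per cent decline versus Q1 2015

q1_2015_units = implied_previous(q2_2015_units, drop_vs_q1)
lost_units = q1_2015_units - q2_2015_units

print(f"Implied Q1 2015 shipments: about {q1_2015_units:.1f} million")
print(f"Implied quarter-to-quarter loss: about {lost_units:.1f} million")
```

Under these assumptions the implied Q1 2015 figure comes out at roughly 11.3 million units, which is a loss of about 1.9 million cards in a single quarter.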

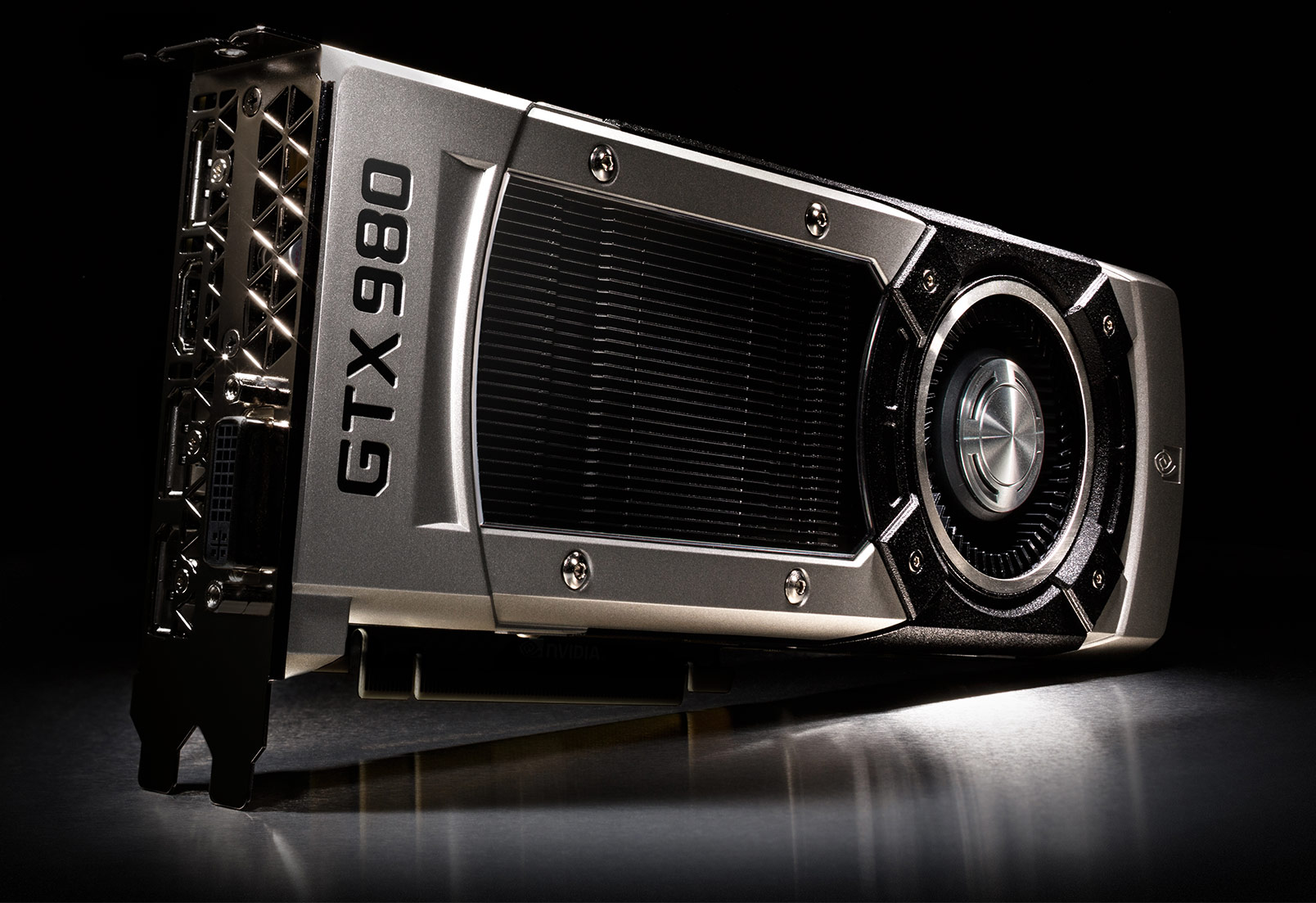

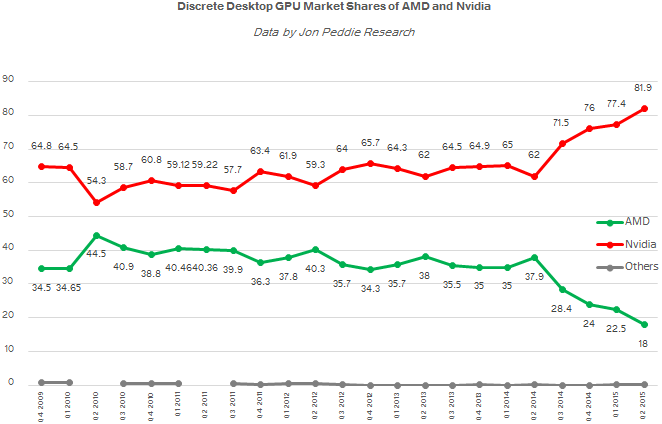

Nvidia continued to dominate the market for desktop discrete graphics cards. The company’s market share increased to 81.9 per cent, an all-time high. By contrast, sales of AMD Radeon graphics adapters fell to their lowest level in more than 10 years. AMD’s share dropped to 18 per cent in Q2 2015, the lowest in the company’s history. The highest share of the desktop discrete market that AMD ever had was 55.5 per cent back in Q3 2004, according to Jon Peddie Research. AMD shipped around 35 per cent of desktop discrete graphics processing units over the last four years. AMD’s share began to contract sharply after Nvidia introduced its GeForce GTX 970 and 980 graphics cards in Q3 2014. In about a year, AMD lost 20 per cent of market share.

Note: Some numbers are estimates.

JPR claims that while the market of desktop AIBs is shrinking, sales of expensive graphics cards used by gamers are increasing.

“However, in spite of the overall decline, somewhat due to tablets and embedded graphics, the PC gaming momentum continues to build and is the bright spot in the AIB market,” said Jon Peddie, the head of JPR.

AMD’s total desktop graphics card unit shipments decreased 33.3 per cent quarter-to-quarter. Nvidia’s unit shipments decreased 12 per cent quarter-to-quarter.


KitGuru Says: It is noteworthy that AMD is losing market share despite the fact that it has rather competitive graphics processing units. Further improvements of integrated graphics processors will decrease sales of add-in graphics boards.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


First off, it doesn’t help that AMD doesn’t support game development the way Nvidia does. Second, because Nvidia helps with game development through GameWorks, they will continue to intentionally code to cripple AMD cards so they can optimize their own and sell more cards. Examples: Watch Dogs, Project Cars, The Witcher 3, Metro 2033, Metro Last Light. All of these games were optimized by Nvidia, and all of them have Advanced PhysX or HairWorks enabled by default. If AMD had enforced TressFX and made the software closed source, they wouldn’t be in the boat they are in. AMD has to learn how to play dirty in order to get ahead; it’s worked for Intel and it’s worked for Nvidia.

Doesn’t help development of games, true. Helps development of hardware itself.

nVidia would never do such a thing like trying to use new stuff. AMD 1st used GDDR5, now HBM.

They’re sacrificing themselves so we could get better stuff later on.

Or it is just the fact that AMD released the “1st GDDR5” video card, but it was horribly built and just utterly sucked. So Nvidia took the idea and made it what it should have been. AMD keeps putting themselves in these “oh F*** me, we released too soon… the product was only 30% complete” situations. AMD dug their own grave. @timothyisenhart:disqus is absolutely right, AMD does not do one thing. Nvidia doesn’t just do GTX video cards; their GPUs are in everything, like the Tesla and Audi cars. The US government asked Nvidia to use their Titan supercomputer number cruncher. And there is the fact that Nvidia has made and is still making things to help cure cancer and to help with difficult surgeries, like heart and brain surgery. Nvidia is proactive with everything. AMD just has their thumb up the bum and makes an “APU” that burns out laptops within 8-12 months, and their video cards and processors overall are just garbage. AMD can’t do anything right.

Please go lower so we get lower prices. I hope 5 – 6 years from now these prices will go down further without having to trade your kidneys for it


Everyone is waiting for 16nm GPUs, that’s why sales decline.

Well, it is not like we need more power. I can play most of my games from high to ultra high with an old 7850 (the 1GB version) at 1080p, and I don’t mind the frames as long as they are playable in my eyes. That is, everything above 20fps is OK for me. Not that I don’t love the feeling of 60fps, but I can play at lower.

And if I have to I don’t mind to drop at medium details or fine tune settings (like cutting shadows).

I made it all the way to 2012 with an old 4850, I guess I can last another 2 years with 7850 and then consider what mid-range card I can afford after that.

IIRC it was HD4770, and it kicked ass.

That is completely not true. If AMD didn’t go ahead and use GDDR5, nVidia wouldn’t have ever used it either. They make something, which isn’t perfect, and nVidia takes it and makes it better. Then AMD makes it better, and so on.

So please, proceed with using DDR3 GPUs. Go on. Let’s see how nVidia would be so “good” without AMD actually progressing. GDDR is reaching its limits. That’s why AMD moved to HBM.

They’re the one truly pushing the limits.

Also, if you didn’t know, AMD’s sheer computational power is much higher than nVidia’s. That is exactly why everyone who needs that class of GPU uses FirePros.

I made it from 2009-2015 with my 5850. I decided to buy a used GTX 660 Ti for $75 in Feb because some of the new games I wanted to play started lagging on medium settings and because I didn’t want any of this year’s graphics cards. I think 2016 will have big leaps in graphics, so I will build a completely new comp then.

“Nvidia would never do such a thing like trying to use new stuff.” So you are saying G-Sync was not trying new stuff? And with HBM, AMD got an agreement with the makers that they would be the only one to get the first batch. So it’s not like Nvidia doesn’t want it, they just cannot get their hands on it. Luckily for Nvidia, Samsung is starting to make HBM as well, so Nvidia can get their hands on it to use on Pascal. Now they don’t have to wait so long, and maybe we will get Pascal sooner 😀

And GDDR5 is still based on DDR3 tech, so not much of a difference 🙂

I meant hardware-wise. When was the last time they pulled ahead of AMD in terms of new technology?

Also, if DDR3 and GDDR5 are so similar, feel free to game on DDR3.

GDDR5 is based on DDR3 SDRAM, not VRAM. GDDR3 VRAM is faster than DDR3 SDRAM. So yeah, go ahead.

You do know that G-Sync is a piece of hardware that Nvidia made to put in monitors? I would say that’s new hardware that they made before AMD.

Well, I do game on DDR3 😉 I have DDR3 in my PC, where my SSD loads all the game files, and I run from there when I’m gaming, so yes, I’m gaming on DDR3. But for my GPU I don’t care right now. HBM and GDDR5 do not make a big difference in gaming right now. When 4K is standard and we are on HBM2-3, then it will be great to have 🙂

I hope someday AMD will make something awesome and get some market share back. They need it and all gamers need it.

No shock there, and it explains the push towards VR. No one needs high-end GPUs for games anymore. Heck, a £130 or a 2-3 year old GPU will do pretty damn well nowadays, so why upgrade? As an example, I would like to have a dual GPU setup, but as it stands there is no point.

nVidia’s proprietary software technologies such as PhysX are just bad for the industry. They limit the competition in unfair ways, and the intent there is clearly for games to not work as well on the competition.

After all the PS4 and XBO are both PhysX certified.

AMD IMO is the company that leads in terms of actual electronics and computing architecture. The most game changing architecture changes were made by AMD/ATI (first to support Unified Shader Architecture/ First to go Terascale/ First to introduce GDDR/ First to introduce HBM/ First APU with full HSA etc).

That point about the Unified Shader Architecture is why the PS3 GPU ended up weaker than the Xenos GPU in the Xbox 360, despite being released a whole year later and having cost more. (nVidia’s electronics were a year behind and outdated; SONY had to use the CELL as a USA GPU.)

Clearly SONY learnt the lesson this time around when they chose AMD. On console, what matters is great hardware; middleware (PhysX etc.) has little meaning.

You are comparing G-Sync to new memory architectures like HSA, HBM, GDDR? and things like Unified Shader Architecture, Terascale processing, which today is the standard?

Please, nVidia is really good at putting the icing on top. They will also deliver performance for a hefty price. But their strength lies in gullible development teams and the showmanship of their CEO.

When you change architecture, you need time for the API and engines to adapt to the new architecture. GDDR spec didn’t change from AMD to nVidia, that’s just nonsense.

nVidia just waited it out. They are currently doing the same thing while also developing more GameWorks proprietary tech to work with their legacy architecture.

I made it through the same period with HD5870. Absolutely great card. Changed to GTX 680, and the performance was OK. But it seriously limited all my other component choices.

Monitor and TV had to be 3D Vision certified, and those choices were expensive crap compared to the rest of the 3D monitors and TVs.

AMD pricing is the issue. They are not competitively priced around the globe. In my country the R9 380 is costlier than the GTX 960, and the GTX 970 seems a much better buy. Plus, they used to have great low-priced cards which Nvidia had nothing to compete with.

I think they were doing great a year back, and hence they made the confident move of bumping the prices. The 750 Ti and GTX 970 literally killed AMD in the lower end and in the market just a notch below the top end, where they did well.

LMAO, You are clueless

Nvidia is the only one that does decent 3D gaming. AMD sucked so bad at it. It’s just a shame it didn’t catch on though. Witcher 2 in 3D is awe inspiring. You also did not have to buy those pricey 3D monitors or TVs if you didn’t want to play in 3D.

LMAO you can’t post any valid arguments, due to lack of brain cells.

I have more than you trust me on that.

Show ’em then. Otherwise, you’re just a poor liar 😉

I know someone who knows someone who doesn’t have to deal with the Nvidia/AMD war jazz, because he uses an open source operating system like Ubuntu and doesn’t give a $#@% about benchmarks. If he just cared about numbers, he would have to play dirty, like in Nascar races.