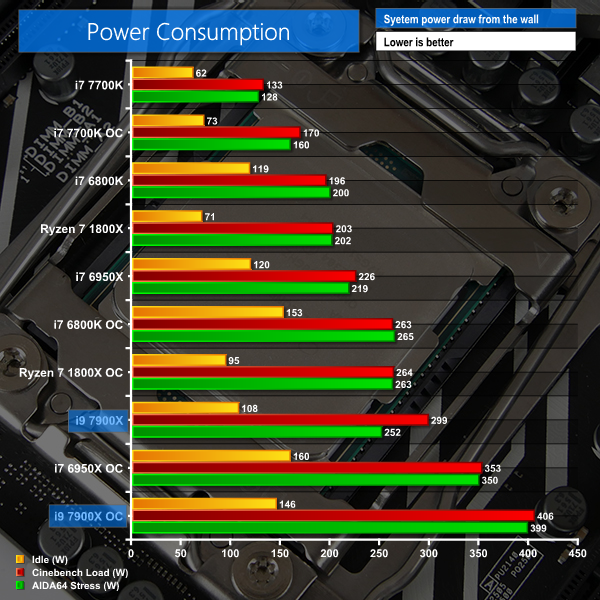

We leave the system to idle on the Windows 10 desktop for 5 minutes before taking a power draw reading. For CPU load results, we read the power draw while running five loops of the Cinebench multi-threaded test, as we have found it to push power draw and temperature levels beyond those of AIDA64 and close to Prime95 (non-AVX) levels. Even five continuous loops of Cinebench result in a short run time on high-performance CPUs, which limits the validity of the temperature reading, so we also run 5 minutes of the AIDA64 stress test to validate the data.

The power consumption of our entire test system (at the wall) is shown in the chart. The same test parameters were used for temperature readings.

Power Consumption

Power draw readings are accurate to around +/-5-10W under heavy load due to instantaneous fluctuations in the value. We use a Platinum-rated Seasonic 760W PSU (with 8-pin plus 4-pin power connectors where possible) and install a GTX 1070 video card that uses very little power.

The Core i9-7900X is a power guzzler, which partially explains its superb performance metrics. The 10-core Skylake-X system draws 73W (32%) more power than the next most power-hungry stock system, the Core i7-6950X. Add almost 100W (almost 50%) to the power draw of a Ryzen 7 1800X system and you reach the Cinebench load draw of the Core i9-7900X. Under AIDA64 load, the i9-7900X throttles its clock speed back to around 3.6GHz, down from 4GHz all-core, to reduce power consumption in the synthetic stress test.
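The wattage and percentage deltas quoted above are enough to back out the approximate wall draw of each system. The sketch below is a simple illustration of that arithmetic, not our measurement tooling: the helper name `implied_baseline` is hypothetical, and the "almost 100W (almost 50%)" Ryzen figure is treated as exactly 100W and 50%.

```python
def implied_baseline(delta_w: float, delta_pct: float) -> float:
    """Return the baseline draw B for which delta_w is delta_pct percent of B."""
    return delta_w / (delta_pct / 100.0)


# Stock Cinebench deltas for the Core i9-7900X, as quoted in the text.
# The Ryzen entry rounds "almost 100W (almost 50%)" to 100W and 50% (an assumption).
comparisons = {
    "Core i7-6950X": (73.0, 32.0),
    "Ryzen 7 1800X": (100.0, 50.0),
}

for rival, (delta_w, delta_pct) in comparisons.items():
    base = implied_baseline(delta_w, delta_pct)
    # Rival system draw, then the i9-7900X draw implied by adding the delta back on.
    print(f"{rival}: ~{base:.0f}W -> i9-7900X ~{base + delta_w:.0f}W")
```

Both comparisons point to roughly 300W at the wall for the stock 7900X under Cinebench, which suggests the quoted deltas are internally consistent. The derived figures are approximations and do not replace the charted readings.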

Switching focus to overclocked results, nothing can match the 4.6GHz, 1.20V Core i9-7900X when it comes to load power draw. With the system pulling more than 400W from the wall, those of us who said >1kW PSUs are unnecessary these days may be about to eat our words. Make sure that beefy PSU has 8-pin plus 4-pin CPU power connectors, as this level of power draw points to significant stress on a single 8-pin connector, not to mention the motherboard VRM. Despite its lower VCore (1.20V versus 1.275V), the 7900X draws 53W (15%) more power than the 4.2GHz overclocked 6950X in Cinebench. Against the 4.05GHz Ryzen 7 1800X, the increase in power draw for our overclocked 7900X is a sizeable 142W (54%). A 4.8GHz 4C8T Kaby Lake 7700K uses less than half the power of the 7900X.
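To show why the extra 4-pin connector matters, here is a rough I = P / V estimate of current per +12V pin. It is a sketch under loudly stated assumptions. The 300W CPU-side figure is hypothetical, picked only to sit below the >400W wall reading once PSU losses and the rest of the system are subtracted. The model also assumes the load is shared evenly across pins. An 8-pin EPS12V connector carries four +12V pins, and a 4-pin ATX12V connector adds two more.

```python
def amps_per_pin(dc_watts: float, twelve_volt_pins: int, volts: float = 12.0) -> float:
    """Current per +12V pin, assuming the load is shared evenly (I = P / V)."""
    return dc_watts / volts / twelve_volt_pins


# HYPOTHETICAL: ~300W of DC power delivered to the CPU VRM over the 12V connectors.
cpu_dc_watts = 300.0

eight_pin_only = amps_per_pin(cpu_dc_watts, twelve_volt_pins=4)   # 8-pin EPS: four +12V pins
eight_plus_four = amps_per_pin(cpu_dc_watts, twelve_volt_pins=6)  # plus 4-pin: two more +12V pins

print(f"8-pin only:    {eight_pin_only:.2f}A per +12V pin")
print(f"8-pin + 4-pin: {eight_plus_four:.2f}A per +12V pin")
```

Under these assumptions, adding the 4-pin connector cuts the per-pin current by a third. Real current sharing between pins is rarely perfectly even, so treat the numbers as indicative only.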

I guess that spells a convincing end to those jokes about AMD being power-hungry. Judging by the power consumption numbers, Intel has taken a brute-force approach to achieving ground-breaking performance with the Core i9-7900X, with as much finesse as a bulldozer. Personally, I am all for higher power draw if the performance warrants it. However, it is also clear that many consumers value efficiency, not to mention a reduction in the thermal energy pumped into their chassis, cooling loop or workspace.

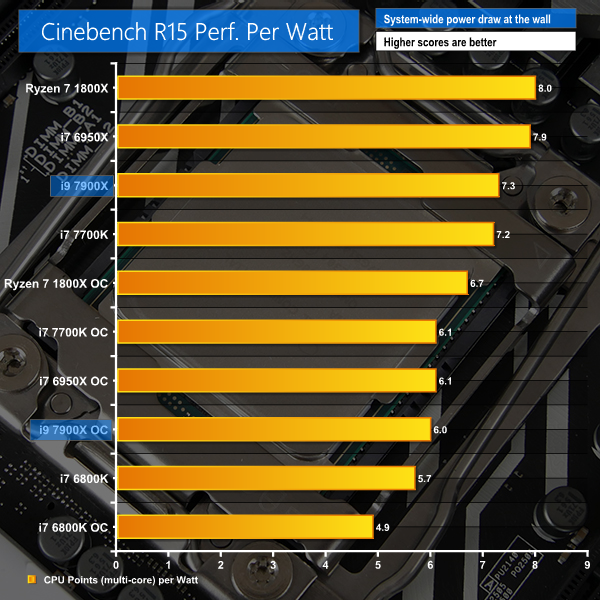

Performance per Watt in Cinebench, which we will loosely regard as rendering power efficiency, is better on the i7-6950X and Ryzen 7 1800X than on the i9-7900X. Overclocking reduces performance efficiency for all CPUs, and the 7900X is hit hard in this metric. The Ryzen 7 1800X continues to look like a solid performance-per-Watt option even when overclocked, and AMD's chip backs that up with strong all-out performance in Cinebench, though nowhere near the level of Intel's 10-core CPU.
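Performance per Watt here is simply the Cinebench score divided by system power at the wall. As a rough illustration, the sketch below takes the overclocked multi-threaded scores quoted by a reader in the comments (1776 for the 4.05GHz Ryzen 7 1800X, 2449 for the 4.6GHz Core i9-7900X). It pairs them with wall power derived from the "142W (54%)" delta above. The derived wattages are approximations, not direct readings, and the helper name `points_per_watt` is hypothetical.

```python
def points_per_watt(score: float, wall_watts: float) -> float:
    """Cinebench points per Watt of total system (wall) power."""
    return score / wall_watts


# Overclocked wall power implied by the 7900X drawing 142W (54%) more than the 1800X.
ryzen_oc_w = 142.0 / 0.54        # ~263W, derived rather than measured
i9_oc_w = ryzen_oc_w + 142.0     # ~405W, derived rather than measured

# Overclocked Cinebench multi-threaded scores as quoted in the reader comments.
results = {
    "Ryzen 7 1800X @ 4.05GHz": points_per_watt(1776, ryzen_oc_w),
    "Core i9-7900X @ 4.6GHz": points_per_watt(2449, i9_oc_w),
}

for cpu, ppw in results.items():
    print(f"{cpu}: {ppw:.2f} points/W")
```

Even with these approximate inputs, the 1800X comes out ahead on points per Watt while the 7900X wins on absolute score, which matches the pattern in the chart.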

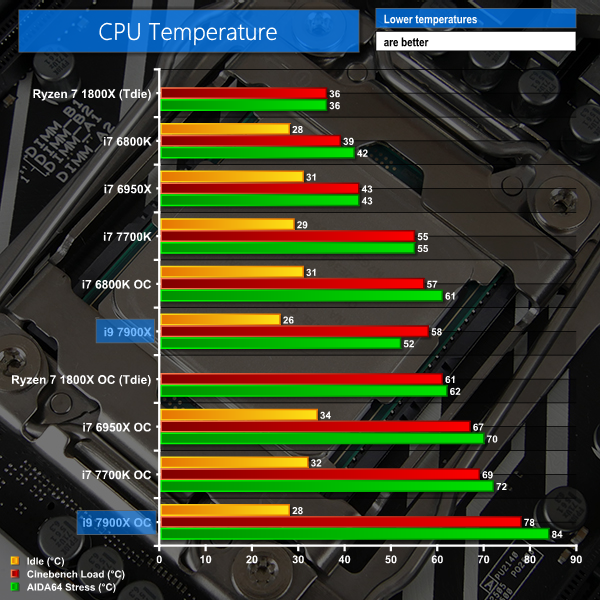

Temperatures

Temperature recordings were taken using a 280mm Corsair H110i GT all-in-one liquid cooler with its two fans running at full speed (around 2300 RPM). Ambient temperatures were held around 23°C (and normalised to 23°C where there were slight fluctuations).

We read the Tdie temperature for Ryzen, which accounts for the 20°C temperature offset, so the charted Ryzen temperatures do not include that offset. Ryzen idle temperatures are omitted due to inaccurate readings caused by sensor drift.
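The two corrections described in this section can be sketched in a few lines. Removing the fixed 20°C reporting offset converts a Tctl reading to Tdie. Ambient normalisation is shown here as a simple delta-over-ambient shift to 23°C, which is an assumption: the exact normalisation method is not spelled out above. The function names and example readings are hypothetical.

```python
# 20°C offset as stated for the Ryzen 7 1800X; treat it as an assumption for other SKUs.
TCTL_OFFSET_C = 20.0
TARGET_AMBIENT_C = 23.0


def tdie_from_tctl(tctl_c: float, offset_c: float = TCTL_OFFSET_C) -> float:
    """Remove the fixed reporting offset from a Tctl reading to get Tdie."""
    return tctl_c - offset_c


def normalise_to_ambient(temp_c: float, ambient_c: float,
                         target_c: float = TARGET_AMBIENT_C) -> float:
    """Shift a reading as if it were taken at the target ambient (delta-over-ambient)."""
    return temp_c - ambient_c + target_c


# Hypothetical example: Tctl of 80°C logged while the room sat at 24°C.
reading = normalise_to_ambient(tdie_from_tctl(80.0), ambient_c=24.0)
print(f"Charted value: {reading:.1f}°C")
```

A delta-over-ambient shift assumes the cooler's temperature rise above ambient stays constant across small ambient changes. That is a reasonable approximation for a 1°C or 2°C drift, but not for large swings.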

There is not much more to say about the thermal performance of the Core i9-7900X that has not already been said: it's far from a cool-running chip. That's fine in theory (if you want higher performance, you'll have to accept higher operating temperatures), but the reason for the poor thermal performance is what will leave a sour taste in enthusiasts' mouths. This is not simply a case of ground-breaking performance resulting in high load temperatures; it's largely driven by Intel's decision to use cheaper thermal interface material (TIM) beneath the heatspreader rather than the superior solder approach.

Reasons for Intel's decision to use TIM rather than solder are unclear; the per-unit cost differential between TIM and solder is likely to be insignificant on a $999 processor, even when factoring in economies of scale. The large Skylake-X die is unlikely to present concerning failure mechanisms for solder either, so perhaps the health and safety procedures and certification processes at Intel's factories are where TIM really shows its cost benefit over solder. Or perhaps Intel simply does not care, since TIM only starts to show its limitations when the vendor's processors are run outside of specification (overclocked).

Whatever the reason, it is not a move that has gone down well with enthusiasts. Consumers were disappointed by the poor thermal performance of the Kaby Lake 7700K, to the point where DIY de-lidding kits became popular. Trying to force the heatspreader off a $999 processor takes that to a whole new level of seriousness. I do hope that Intel realises the issues presented by using a lower-quality thermal design for HEDT parts and addresses the situation in future product iterations.

It's not just overclockers feeling the pain here, as prosumers and workstation buyers who value low-noise operation at stock operating parameters are also realising the caveats of a limited thermal design. The sub-60°C stock-clocked load results shown in our chart may seem good for the Core i9-7900X. However, when factoring in the 2300RPM fans used on a high-end 280mm AIO cooler to achieve those results, thermals look far less impressive.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


Good review. Honestly, even if Intel gets all the goodness of being a fast processor, it's highly priced. Also, poor thermal performance is a big deal because the processor eventually runs hot and performance will take a toll.

I am personally waiting for AMD's Threadripper line-up to compete with Intel's half-cooked CPU. If AMD's TR has a good price and good thermal efficiency, then even if it is a bit slower than Intel's offering, people will go for it. Ryzen 7, and especially the Ryzen 5 1600, proved this already.

What about VRM temperatures? 🙂

This is a review of the CPU. The VRM temps are going to vary depending on the board and its overly extravagant but largely useless cooling shroud.

Brilliant and thorough review, thank you very much.

I’d be happy with a quad-channel-memory i7-7740X with 44 PCIe lanes. It's fast enough for _everything_ I need, and it would have enough memory and PCI Express bandwidth for the workloads I run (I don’t need 10C/20T).

Unfortunately, it doesn’t exist. Either I have to buy the crippled i7-7740X with 28 PCIe lanes and dual-channel memory, OR I have to buy the over-priced space heater that is the 7900X, with a motherboard that fries eggs with its VRMs and sucks so much electricity out of the socket that I can hear the Kill A Watt whirring like crazy in the background while notes keep flying out of my wallet.

No thanks Intel. You really outdid yourself this time around.

I will wait for Threadripper from AMD.

I like your test, but let me make a small correction: the 7900X is faster than Ryzen only because of more cores and higher clocks, not due to a “modern architecture” (which, while newer than Ryzen's, is by no means better). But to count my chickens 🙂 Ryzen_OC is 4.05GHz, 7900X_OC is 4.6GHz.

1776 × 1.25 (because Intel has 25% more cores than AMD) = 2220

Now to equalize core speeds: 2220 × 4.6 / 4.05 = 2521 (the Ryzen score as it would be at 4.6GHz). IPC-wise, Intel (which scored 2449) is still a bit worse than AMD in Cinebench.

I’m not saying that AMD is better, since it can’t reach 4.6GHz at the moment, nor does it have a 10-core CPU to fight with.

A similar thing happens with the x265 encoding benchmark: 30.6 × 1.25 × 4.6 / 4.05 = 43.44, a bit faster than Intel. And that is all in tasks that make use of AVX2, with Intel still holding the advantage of quad-channel memory.
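The reader's back-of-envelope normalisation can be reproduced as a short sketch. It follows the commenter's own assumption that throughput scales linearly with both core count (10 versus 8 cores) and clock speed (4.6GHz versus 4.05GHz). The function name `scale_to_intel` is hypothetical.

```python
def scale_to_intel(score: float, core_ratio: float = 10 / 8,
                   clock_ratio: float = 4.6 / 4.05) -> float:
    """Naively scale an overclocked Ryzen 7 1800X result to 10 cores at 4.6GHz."""
    # Linear scaling in both cores and clocks is the commenter's assumption.
    return score * core_ratio * clock_ratio


cinebench = scale_to_intel(1776)  # compare with 2449 for the 4.6GHz i9-7900X
x265 = scale_to_intel(30.6)       # compare with the i9-7900X x265 result
print(f"Cinebench: {cinebench:.0f}, x265: {x265:.2f}")
```

Real workloads rarely scale perfectly with extra cores or higher clocks, since memory bandwidth, cache and power limits intervene. Treat these projections as optimistic estimates rather than a true IPC comparison.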

Well, at least you can wait a bit and see if Threadripper is actually better than Intel; there is no guarantee it is, especially for the lightly threaded tasks you speak of (which may well require high core clocks that will most likely not be reachable on TR).

AMD's single-core IPC is slightly worse than Intel's, but AMD's implementation of SMT versus Intel's HT puts it on top by a slight margin at the same cores/clocks in multi-threaded benchmarks, while it loses in the single-core benches. And then AMD doesn’t really OC well, nor does its memory clock up well; it's improving, of course, but not at any great pace.

Bottom line w/ Ryzen: memory clocks of 2933 are a no-brainer, and 3200 components known to work are common.

That's the consensus on the street.

A pact with the devil will always bite u in the ass.

How can u trust intel?

Buy a $999 CPU w/ scads of lanes, & discover there is a charge to use NVMe drives as they should be used. Slimy.

The new keying system shows they use their research dollars to effect better ways of screwing customers. A good look guys.

I would buy AMD gear at the same money even if it were 10% worse. My time, upgradeability, conscience and dignity are worth something too, thanks Intel.

AMD probably is 10% worse on the criteria Intel likes to use, but AMD's modular/fabric architecture is miles better than any architecture Intel has or will have for future cost-effective, roughly equivalent outcomes.

BTW, the prices u list for AMD don't reflect reality. Both are much cheaper on Amazon etc. Further, the widely regarded sweet spot is the 12-thread R5 1600, at ~$220.

Lanes are just sufficient on Ryzen for a 16-lane GPU, a 4-lane NVMe SSD onboard and a 4-lane PCIe 2.0 NVMe SSD (~1.6GBps, or triple a SATA SSD). Beyond that, u have stacks of SATA and 0.5GBps PCIe 2.0 expandability, but no 1GBps PCIe 3.0 lanes.

Note also, AMD's PCIe 3.0 lanes are direct to the CPU, not via switches and bridges like most Intels.
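The per-lane figures in the comment above follow from each PCIe generation's transfer rate and line encoding. The sketch below computes theoretical one-direction throughput. PCIe 2.0 runs at 5 GT/s with 8b/10b encoding, and PCIe 3.0 runs at 8 GT/s with 128b/130b encoding. Real drives land lower because of protocol overhead, which is why an x4 PCIe 2.0 SSD delivers around 1.6GB/s rather than the 2GB/s ceiling. The function name `lane_mb_per_s` is hypothetical.

```python
def lane_mb_per_s(gt_per_s: float, payload_bits: int, total_bits: int) -> float:
    """Theoretical one-direction bandwidth per lane in MB/s after line encoding."""
    bits_per_s = gt_per_s * 1e9 * payload_bits / total_bits
    return bits_per_s / 8 / 1e6


PCIE2 = lane_mb_per_s(5.0, 8, 10)     # PCIe 2.0: 5 GT/s, 8b/10b encoding
PCIE3 = lane_mb_per_s(8.0, 128, 130)  # PCIe 3.0: 8 GT/s, 128b/130b encoding

for name, per_lane in (("PCIe 2.0", PCIE2), ("PCIe 3.0", PCIE3)):
    print(f"{name}: {per_lane:.0f} MB/s per lane, {4 * per_lane:.0f} MB/s at x4")
```

These are link-level ceilings only. They ignore packet headers and flow control, so use them to compare generations rather than to predict drive benchmarks.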

Newsflash: the i7-7740X only has 16 PCIe lanes, not 28!

Test w/ AGESA 1006, please.

Not sure why you would complain about a crippled quad-core and then decide to wait for a 10-core-minimum Threadripper, lol. AMD has some amazing stuff coming out, but it will not have the IPC of Intel.

The 8-core Intel is actually a pretty sweet chip. It can overclock 2 of its best cores to 4.5GHz for single- or two-thread tasks, with a 4.3GHz turbo on all cores. The thermals are only an issue when overclocking, and with this many cores that will always be a problem when overclocking. AMD hasn't been able to go over 4.1GHz yet.

Intel PCIe lanes are direct to the CPU as well. And if Intel says a CPU has 16 lanes (like the 7700K), then those are dedicated to the GPU. The rest, in that case, come from the chipset via DMI 3.0. This is unlike AMD, who is currently *overstating* the PCIe lanes available for video. Sorry to burst your fanboy delusion bubble.

I strongly suspect nothing you do requires 44 PCIe lanes or quad-channel RAM. People complaining about the 7900X, and somehow not satisfied with *either* Kaby Lake *or* Ryzen 5/7, and obsessing instead over “Thread Ripper” sound generally clueless.

AMD's marketing is really working for TR. Somehow every hump on comment threads is now convinced they’re doing all sorts of things that justify *32 threads*, even at lower IPC, and *dozens* of PCIe lanes and *mountains* of memory bandwidth. Enormously unlikely.

You can’t run a 32GB kit @ 3200MHz on a Threadripper CPU; it just fails to boot. Worst memory controller ever on a CPU, I guess. It maxes out @ around 2800MHz if you install 32GB of RAM. If you go higher, like 64GB, then you end up with 2133MHz DDR4 speeds, or 2400 if you are lucky.

Whereas I am running a 64GB 3800MHz CL15 kit with my 4.8GHz 7900X. It unleashes my 2x overclocked Titan Xps in SLI. AMD doesn’t clock well, has the worst memory support and has lower IPC, and thus lower gaming performance. It’s just good for rendering & encoding farms.