3DMark

3DMark is a multi-platform hardware benchmark designed to test 3D gaming performance at varying resolutions and detail levels. We run the Windows version of the test, in particular the Fire Strike benchmark, which is indicative of high-end 1080p PC gaming. We also run the Time Spy benchmark, which gives an indication of DirectX 12 performance.

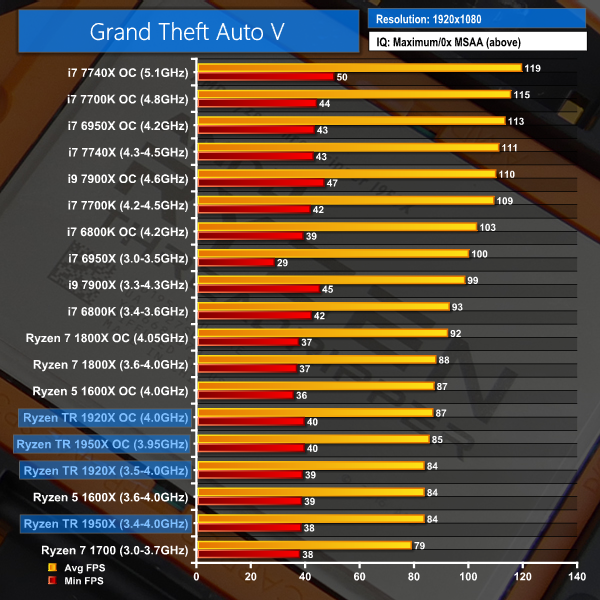

Grand Theft Auto V

Grand Theft Auto V remains an immensely popular game for PC gamers and as such retains its place in our test suite. The well-designed game engine is capable of placing heavy stress on a number of system components, including the GPU, CPU, and memory, and can highlight performance differences between motherboards.

We run the built-in benchmark using a 1080p resolution and generally Maximum quality settings (including Advanced Graphics).

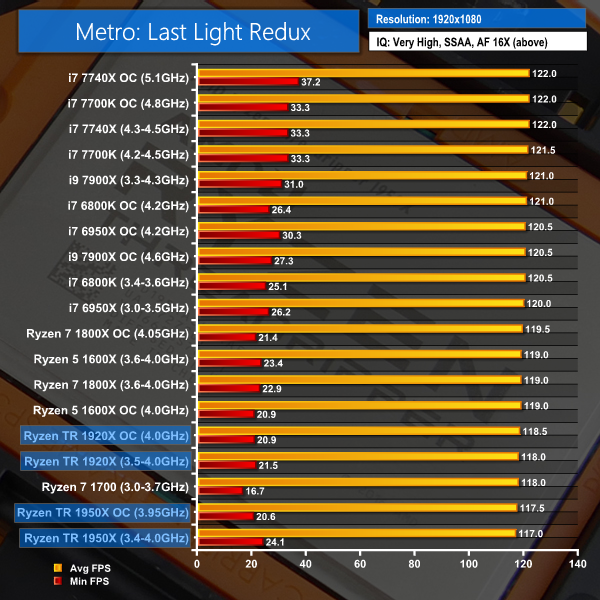

Metro: Last Light Redux

Despite its age, Metro: Last Light Redux remains a punishing title for modern computer hardware. We use the game's built-in benchmark with quality set to Very High, SSAA enabled, AF 16X, and High tessellation.

Gaming Performance Overview:

It is unsurprising by now that AMD loses to Intel when it comes to all-out gaming performance. The clock speed advantages on Intel's chips, as well as years of game development tuned for Intel hardware, generally put AMD processors at a performance deficit when looking at the high-end options.

3DMark Time Spy is well multi-threaded and therefore shows positive results for Threadripper. However, Intel's higher-clocked 10-core chips take the top spot from the AMD offerings. Time Spy gives an indication that, even with the DX12 API, core count is less important than clock speed past a certain point.

Grand Theft Auto V is known to show a preference for Intel hardware, but the game's immense popularity and ability to stress CPUs justify its continued inclusion in our test suite. The Ryzen chips, Threadripper included, generally hit a wall at around 90 FPS average using the maximum quality settings at 1080p. By comparison, Intel's chips, including the i9-7900X, can break through the 100 FPS average barrier.

If you are interested in high refresh rate gaming on your HEDT CPU, Intel's i9-7900X will be the better option for GTA V. With that said, minimum FPS numbers from Ryzen Threadripper are positive (despite the variance posed by the GTA V benchmark).

Metro: Last Light Redux is proof that any of these CPUs is perfectly capable of running certain GPU-limited games at high refresh rates. Threadripper chips still gravitate towards the lowest performance points on the chart and Skylake-X is marginally faster. However, the differences in this title are minimal between all CPUs and are limited by GPU performance (which is a more likely scenario for modern games and higher resolutions).

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

So it’s a beast for productivity [if it’s capable of delivering reliability in the long run], and it’s just fine for gaming, but not as cool as Intel. Not bad, not that great either. No reason to go away from X299 for now. I wonder how the 12- to 18-core Intel chips will fare, both on performance and price. Great review, proving my point in many discussions, thank you 🙂

So the single-core comparison is done with Intel set at 4.5-5GHz and AMD at 4.0GHz, and you’re surprised that Intel is better 😐

So to test the maximum overclocked speeds of each, you want Luke to achieve a 4.5GHz overclock on the Ryzen even though it’s just not possible? Perhaps Gandalf can help him with some magical powers.

Yes. All testing is done at stock and the realistic achievable overclocks on each CPU. Throttling Intel back to X GHz would be unfair if the Intel chips can OC further. Just as it would be unfair to throttle back Ryzen CPUs if they have faster clock speeds than their competitors.

Stock and realistic OC frequency is what we always test on all CPUs. Intel’s Skylake-X and Kaby Lake CPUs tend to OC higher than AMD’s Ryzen chips so that’s what people run their frequencies at and that’s what we test with.

Luke

Ok, i get that but …

1. Same cooling was used for both?

2. Is TR restricted, or why can’t it go past the boosted clocks?

So, considering that AMD again gives 50 and 60 percent more cores for the same or a similar price, those CPUs are beasts for people who need a productivity and content creation CPU.

Who games at 1080p with a £1000 CPU and a GTX1080 ti? Wasn’t Ryzen equal to its intel equivalent at higher resolutions, Is this the same with ThreadRipper?

“Cons” in the review are so depraved you can not even imagine, and lacking 1 star out of 10…. For what? For not being available for free?

Oxymorons

I have to slightly disagree with the gaming analysis. Many times the reason RYZEN doesn’t perform well in gaming is that game developers still haven’t had enough time to optimize the RYZEN platform. Game developers haven’t had enough time with the AMD RYZEN hardware development kits as of yet.

The same cooling was used for all CPUs (280mm AIO) except Threadripper which used a 360mm Asetek AIO due to mounting compatibility.

The frequency limits for Ryzen look to be related to the manufacturing process technology used by AMD. At its own fabs, Intel looks to have the ability to manufacture dies that can operate at a higher frequency in general.

1080p and a fast GPU help isolate CPU performance by ensuring that no resolution- or GPU-induced bottlenecks are introduced. Our 4K testing shows Ryzen to be far more competitive against Intel when the performance onus is placed more firmly on the GPU.

Ok, thank you. Maybe Threadripper will be able to get higher OCs with some BIOS updates, as happened with Ryzen 🙂

Are you confident it’s not a fabric/Zeppelin die limitation rather than the Zen core or the CCX?

If that hasn’t been eliminated, perhaps Raven Ridge can shed some light eventually? As I understand it, and given it must be low power for mobile, it will be a single 4-core Zen CCX and a single Vega GPU on a die, like Ryzen’s Zeppelin die. Point being, maybe it can clock better in that die form.

Agreed. Irrespective of the amount of cooling available, no multi-core CPU would survive a Prime95 stress test on all cores for 30 minutes, let alone 1 hour or more. Prime95 should be taken off the web.

Current rumors predict $1,700 for the 16-core, and $2,000 for the 18-core.

Hey Luke. May I ask how you obtained the all-core turbo frequencies of the 1920X and 1950X? And how confident are you that they are correct? I ask, only because other sources have consistently stated 3.60 GHz for the 1950X. Thank you very much for this in-depth review.

Those are not rumors, they are official Intel pricing, confirmed by multiple outlets and reviewers.

18 core Intel i9 will be $1999.99 (USD)

16 Core Intel i9 will be $1799.99 (USD)

Price v Performance is going AMD’s route, they will end up taking a good portion of the HEDT market with their aggressive pricing and their performance.

“But muh i9 is faster”..

It also has fewer PCI-E lanes and uses more energy; when overclocked, Intel’s 10-core gets hotter than the 16-core AMD, and dollar for dollar it is less of a value. This again has been confirmed in testing by many respected hardware outlets/reviewers.

I only have 12 years as an IT professional in hardware management systems, what would I know.

They got the 16 core 1950X to 5.2Ghz on LN2 (Liquid Nitrogen), while that was obviously not representative of real world operations, it did show what can be done.

The issue Intel faces is the technology change that is currently happening where we are switching software from single core/thread ops to multicore and having code recognize the maximum amount of cores possible.

How is this a problem for Intel?

Despite having an immense amount of capital to work with and state-of-the-art R&D facilities, Intel’s latest chips have issues with overclocking all cores and remaining efficient. In fact, if you look at Intel’s turbo boosts, they downclock heavily after 4 cores to keep TDP and energy consumption manageable and competitive. Intel’s biggest weakness is their ability to maximize silicon yields; this is one of the reasons they charge so much for their CPUs, while AMD’s current approach allows them to scale as they need to with fewer transistors required on a single die, maximizing yield.

At this current rate I fully expect AMD to release their Zen 2 7nm CPU before Intel gets Cannon Lake (10nm) CPU’s out as AMD is already reporting over 80% yields with 14nm silicon, and anything above 60% yield allows for very competitive prices.

Okay, thanks John. And for the record, yes, I am fully supportive of Threadripper. Intel has been screwing people over for too long.

I’m not sure why you decided to say this, however.

Intel chips are NOT cool. Not even the 91W i5s. Unless you think 80C on watercooling for a STOCK i5 Skylake is cool.

Threadripper is better at $1k for 16 cores and 32 threads when Intel is like $2k for that. Had enough of the limitations and greed.

Why was enermax aio not used?

I agree. The 16-core 1950X was available on Amazon for $800 not too long ago.

Not as “cool”?, in what sense exactly? as in an over priced haircut kinda cool?

ur obviously a fanboy dick swinger, the only diff. this time u aint playing with the biggest dick.

All reviews out there on the net show Threadripper is faster in basically all productivity apps other than some outdated software that runs better on quads, TR uses less power, has better SMT, has more IO, costs less and has excellent motherboard support.

Wake up from ur delusional dream Bub, just ’cause ur sore that u overpaid for an overvolted stove top cpu that don’t do shit, don’t mean u have to spread ur bull shit propaganda.

Love the 1950x. Runs well at 4.1GHz, and running Prime95 simultaneously w/CPU-Z stress (all cores at 100%), T 105 fps. Other benchmarks show very good performance and durability.