Sandra Processor Arithmetic

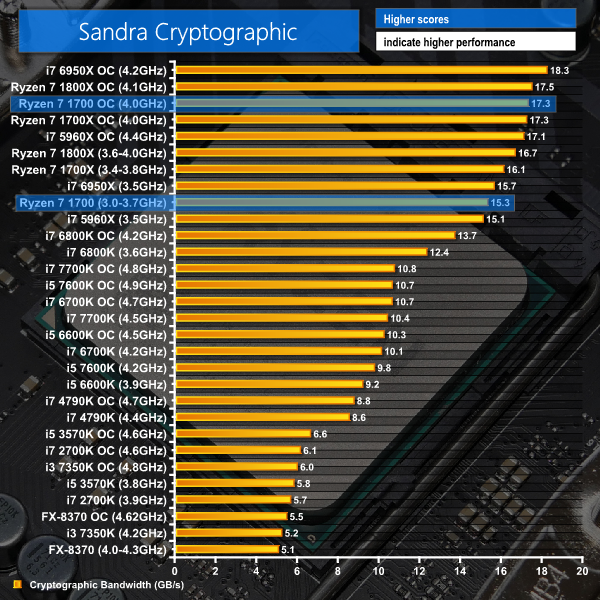

Sandra Cryptographic

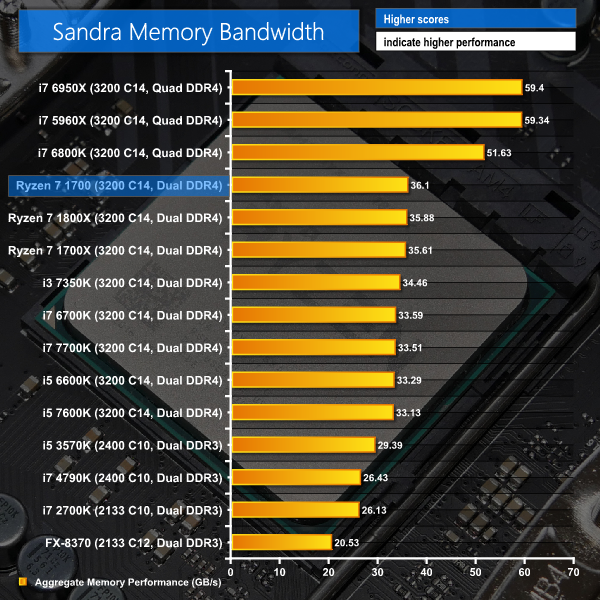

Sandra Memory Bandwidth

Synthetic performance also shows the 1700 in a positive light. You can extract most of the performance offered by Intel's significantly more expensive multi-core parts. That's particularly true when the 1700 is overclocked to 4GHz, at which point there is no distinguishable difference between it and the 1700X, which costs £70 more. Intel's quad-core 7700K is comfortably beaten in Sandra's Arithmetic and Cryptographic tests.

Memory bandwidth is an advantage for LGA 2011-3 CPUs thanks to their quad-channel memory support. The same advantage shows up in tests such as 7-Zip and Handbrake, which benefit from high memory bandwidth.
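As a rough illustration of why quad-channel helps, theoretical peak DRAM bandwidth scales linearly with channel count: channels × transfer rate (MT/s) × 8 bytes per 64-bit DDR4 channel. The sketch below assumes DDR4-2400 on both platforms purely for illustration; that is not necessarily the memory configuration used in this review, and measured bandwidth in Sandra will always sit below these theoretical peaks.

```python
def peak_bandwidth_gbs(channels: int, transfer_rate_mts: int, bus_width_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal units).

    Each DDR4 channel is 64 bits (8 bytes) wide, so MT/s x bytes per transfer
    gives MB/s; dividing by 1000 converts to GB/s.
    """
    return channels * transfer_rate_mts * bus_width_bytes / 1000


# Illustrative assumption: DDR4-2400 on both a dual-channel (AM4 / LGA 1151)
# and a quad-channel (LGA 2011-3) platform.
dual = peak_bandwidth_gbs(2, 2400)
quad = peak_bandwidth_gbs(4, 2400)
print(f"dual-channel: {dual:.1f} GB/s, quad-channel: {quad:.1f} GB/s")
```

At the same memory speed, doubling the channel count doubles the theoretical ceiling, which is why bandwidth-hungry workloads can favour the LGA 2011-3 parts.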

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

I was under the impression that XFR was only included on the X chips, yet you wrote that it is available on the 1700. Was this a mistake, or is XFR actually enabled on the 1700, contrary to what I’ve read pretty much everywhere else?

XFR is enabled, but with only half the headroom. The current X chips get 100MHz of XFR headroom, whereas this non-X chip gets 50MHz. We validated this with multiple single-threaded tests and watched CPUID HWMonitor report 3750MHz on the loaded cores.
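The 3750MHz reading is simply the rated boost clock plus the XFR headroom. A minimal sketch of that arithmetic, assuming AMD's published boost clocks (3.7GHz for the 1700, 3.8GHz for the 1700X) and the XFR headroom figures given in the reply above:

```python
# Assumed figures: AMD's published boost clocks, plus the XFR headroom
# described in the reply (50MHz for the non-X 1700, 100MHz for X chips).
CHIPS = {
    "Ryzen 7 1700": {"boost_mhz": 3700, "xfr_mhz": 50},
    "Ryzen 7 1700X": {"boost_mhz": 3800, "xfr_mhz": 100},
}


def max_xfr_clock_mhz(chip: str) -> int:
    """Highest clock XFR can reach: rated boost plus XFR headroom."""
    spec = CHIPS[chip]
    return spec["boost_mhz"] + spec["xfr_mhz"]


for name in CHIPS:
    print(name, max_xfr_clock_mhz(name), "MHz")
```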

Interesting. I didn’t know that was a thing. Thanks!

Next gripe. Page 3 has the Ryzens listed as Summit Ridge, which isn’t their code name. The code name was Zen. Summit Ridge refers to the Zen-based APUs that AMD has yet to release.

Thanks for the info. I’ve been following closely but I was completely unaware that the 1700 had XFR.

Good review. So, in summary: if you want to play your games at very low resolutions, with refresh rates that your monitor does not support, get the i7-7700K. But if you want a real PC that can handle all other workstation tasks and game at high resolution, get the Ryzen 1700. Anyhow, I don’t think anyone will buy a high-end GPU and play at low resolution. What we need is a real-world comparison between the AMD and Intel chips. Can you do one for us, please?

The 1700 is an impressive CPU. I can’t deny that it will do better in games once developers begin optimizing for it. I have mine in an MSI Carbon Pro, where it is happily running at 4.0GHz 24/7 with 1.3875V Vcore. However, try as I might, I am not able to get my 3200 RAM above 3000 at the moment. MSI has not been quick to produce new BIOS revisions and to date has only one BIOS listed on its website!!!

There are also 4K gaming tests in there, which show very little difference between the Intel and AMD chips because of the heavy demand placed on the GPU. As for high refresh rate gaming, almost half of our readers like to game at 100 FPS or above: http://www.kitguru.net/tech-news/featured-announcement/matthew-wilson/kitguru-poll-shows-49-3-percent-of-readers-prefer-gaming-at-100hz-and-above/

This review is very well done, except that you don’t even have a 6900K!

Wow, I hate the smooth scrolling on your website; it’s the worst thing ever invented.

What your readers say and what they do are very different. Not that many people actually have >60Hz monitors.

So my 1700 hits 3.75GHz on all cores at 1.225V. Is that a good result? I’ve only ever overclocked on Intel’s X79 and X99 platforms, so I’m a bit clueless when it comes to AMD. It has been rock-solid stable for 5 hours, and even on the stock cooler I didn’t see temps over 70°C.

Any help haha?

I agree that monitors with refresh rates higher than 60Hz are less common. But we cannot ignore the fact that almost half of our readers are also interested in performance relating to higher refresh-rate gaming.

Either way, 1080P and 4K data are both in the review. If a reader is not interested in high refresh rate gaming, then checking that the CPU of interest can handle 60 FPS in the relevant game should be a sufficient interpretation of the results.

I would say that is a pretty good overclock. You could probably push the frequency around 150-250MHz higher, but that will require a voltage increase and a better cooler. The power draw and temperatures at 1.225V should be fine for long-term use. If that’s stable enough for your needs, I’d personally be inclined to stick with that balance of frequency and voltage. But if you need more performance, you may be able to push the frequency a little higher with increased voltage.
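Why a higher clock needs a better cooler can be estimated with a common first-order rule of thumb: CMOS dynamic power scales roughly with frequency × voltage². The sketch below compares the 3.75GHz at 1.225V result above with the 4.0GHz at 1.3875V figure another reader reported; it is an approximation that ignores leakage and temperature effects (both of which also rise with voltage), so treat the result as a rough ratio, not a measurement.

```python
def relative_dynamic_power(f1_ghz: float, v1: float, f2_ghz: float, v2: float) -> float:
    """Ratio of dynamic power at (f2, v2) versus (f1, v1), using P ~ f * V^2.

    First-order approximation only: leakage power, which grows with voltage
    and temperature, is not modelled.
    """
    return (f2_ghz / f1_ghz) * (v2 / v1) ** 2


# 3.75GHz @ 1.225V (this reader) versus 4.0GHz @ 1.3875V (another reader's chip)
ratio = relative_dynamic_power(3.75, 1.225, 4.0, 1.3875)
print(f"~{(ratio - 1) * 100:.0f}% more dynamic power for ~{(4.0 / 3.75 - 1) * 100:.0f}% more clock")
```

Because voltage enters squared, the last few hundred MHz cost disproportionately more power and heat than the frequency gain alone suggests.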

I honestly can’t believe anyone would like to game at 100 FPS or above at ultra-low quality. It’s like saying that your readers prefer to watch a blockbuster movie on their smartphones instead of going to the cinema. Anyhow, since, as you mentioned, there is no difference in real-world gaming, Ryzen wins easily when it comes to all other workstation use. The choice here is a no-brainer.

That’s subjective. Each to their own preference. High refresh rate gaming doesn’t have to be low quality (resolution) either – there are 2560×1440 and 3440×1440 100Hz+ monitors on the market and 100Hz+ 4K monitors coming out this year. There are people using high refresh rate monitors so we can’t just ignore that fact. If it isn’t of importance to your usage scenarios, the performance on offer may be ideal for you.

I’m very curious: what motherboard are you using to achieve that OC?

From what you are saying, I honestly can’t fathom why anyone would buy a 4K monitor with a high refresh rate, then downscale to 1080p, and buy high-end gaming PC hardware just to play at high FPS at 1080p. Like I said, we need to focus on real-world tests here, not some unrealistic scenario. In the real world, people do more with their CPU than just gaming; many people multitask while gaming. It would be very beneficial to run a multitasking gaming benchmark to see whether Ryzen's extra cores are really useful in the real world. A typical example would be streaming while gaming: do the extra cores make Ryzen a better real-world gaming PC?

But aren’t high refresh rates the domain of the GPU? How does a CPU affect refresh rates?

Check this out: there’s a YouTube channel called “Game Testing” that pits different CPUs against each other, and also different GPUs against each other, and shows them in split-screen mode in real time. On the left side of the screen you have one contender and on the right side you have the other, both running at the same time.

I think these are really creatively done (and I didn’t make them), and they really do show the difference (or, especially, the lack thereof) in gaming between the Summit Ridge (R7-1700) and Kaby Lake (i5-7600K) architectures. Both CPUs are at stock clocks, the resolution is shown to be 1080p, and an Nvidia GeForce GTX 1070 is used to ensure that there are no GPU bottlenecks. Enjoy!

We’ll start with a video that pits the R7-1700 against the i5-7600K in seven modern titles. Those titles are Project Cars, Arma III Apex, Fallout 4, Rise of the Tomb Raider, Hitman 2016, Just Cause 3 and Far Cry Primal.

https://www.youtube.com/watch?v=RBbJtOPUVcU

Now to add more, like Grand Theft Auto V:

https://www.youtube.com/watch?v=UFffMS6m2vg

The Witcher 3:

https://www.youtube.com/watch?v=TXOHmZEQmAc

Crysis 3:

https://www.youtube.com/watch?v=piNUGg3pejA

Need For Speed 2015:

https://www.youtube.com/watch?v=y57Beci-vf0

Far Cry 4:

https://www.youtube.com/watch?v=jJ4Mi62bvkA

Watch Dogs 2:

https://www.youtube.com/watch?v=rNq2hPMrmTw

And Battlefield 4:

https://www.youtube.com/watch?v=vgLNFpgFOl4&t=48s

So there are fourteen games, all played at 1080p with a GTX 1070, with one side of the screen using an R7-1700 and the other using an i5-7600K. I hope this gives a real and meaningful demonstration, far better than any benchmark bars ever could. I didn’t make these videos, but they are the best thing I’ve ever seen for demonstrating the real gameplay experience of Summit Ridge versus Kaby Lake. Their channel has a whole bunch of other videos, including ones showing that I don’t even need to upgrade my old FX-8350 for gaming just yet!

How do you like those benchmarks now? LOL

The CPU needs to prepare the data for the GPU. The faster the GPU requests that data, the faster the CPU needs to prepare it. That’s why high refresh rate gaming puts additional onus on CPU performance whereas 60 FPS gaming is generally comfortable for modern CPUs of this calibre (if the GPU is sufficiently fast).
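The per-frame time budget makes this concrete. Under a simplified model (an assumption for illustration) in which the CPU must finish its work for every frame the GPU renders, that work has to fit within 1000 / FPS milliseconds. The sketch below shows how quickly the budget shrinks as the target frame rate rises:

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# Typical targets: a 60Hz monitor versus high refresh rate monitors.
for fps in (60, 100, 144):
    print(f"{fps:>3} FPS -> {frame_budget_ms(fps):.2f} ms per frame")
```

Going from 60 FPS to 144 FPS cuts the time available per frame by more than half, which is why high refresh rate gaming exposes CPU differences that stay hidden at 60 FPS.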

That’s great, thanks for the benchmarks. As you said, they give a true picture of what is going on, much better than any graphs. So there is no real-world difference in gameplay; I can’t tell the difference between 200 and 210 FPS lol.

When I removed my CPU, some thermal paste accidentally got between the pins. Is it okay?

It’s not conductive, but it could cause a problem later on down the road: as it dries somewhat, it can make the joint between the pins capacitive in nature. That’s a problem, so don’t risk it.

When you do the steps below, make sure the alcohol level IS NOT higher than needed to just soak the pins, barely contacting the base. You don’t throw it in and submerge it. Use some common sense here.

And it will be fine.

So:

• Take a disposable bowl.

• Take 90% or higher isopropyl alcohol.

• Pour about one cup of alcohol into the bowl.

• Place chip pin side down into bowl.

• Let soak for about 5 – 10 minutes.

• Wait.

• Order a Pizza.

• Take a toothbrush, preferably a soft-bristled one, and dip that into the alcohol too.

• Softly, and I mean softly, brush between the pins until all the thermal grease is removed.

• Let the CPU dry on either a lint-free towel or a paper towel until the alcohol has evaporated.

• Drink the remaining alcohol. Both in the bowl and in the bottle. It’ll put hair on your chest.

• Throw the bowl away. In a recycling bin. Good for the planet.

• Eat Pizza, remembering to wash your hands before returning to work.

• Replace the CPU in the socket, then reapply AS5.

ty

You can really see how the Ryzen 7 lineup is binned: the 1800X idles better than the 1700X, which in turn idles better than the 1700.

Intel: Anti-Competitive, Anti-Consumer, Anti-Technology.

https://www.youtube.com/watch?v=osSMJRyxG0k