Intel Corp.’s forthcoming central processing units code-named “Skylake” for personal computers will not support any AVX-512 instructions, according to a media report. Only Xeon processors for servers and, possibly, workstations will support 512-bit instructions.

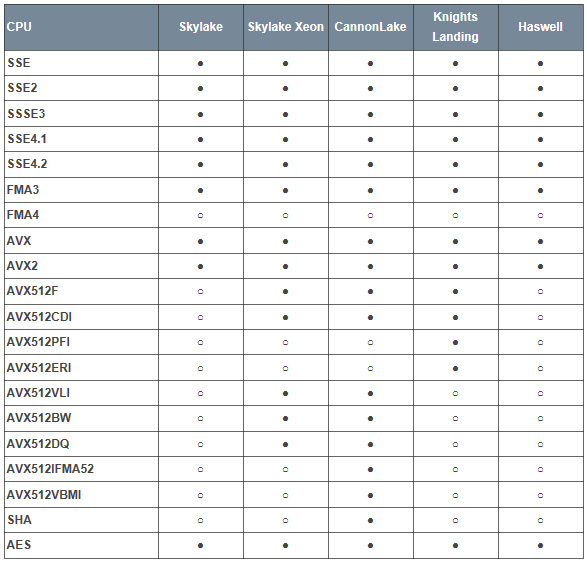

Support of 512-bit SIMD instructions – known as AVX3 – was expected to be a key feature of Intel “Skylake” processors, which would help the chips to demonstrate extremely high performance in applications that take advantage of the innovation. However, Intel decided not to enable any AVX-512 instructions in consumer versions of the code-named “Skylake” processors, reports the Bits & Chips website. Future Xeon chips that belong to the “Skylake” generation will support select AVX-512 instructions, but apparently even Xeon processors featuring the new cores will not support certain 512-bit instructions supported by Xeon Phi “Knights Landing” co-processors.

As it turns out, only “Cannonlake” processors due in late 2016 or early 2017 will support most AVX-512 instructions, but not all of them. It is also unclear whether consumer versions of “Cannonlake” CPUs will have comprehensive support of 512-bit instructions.

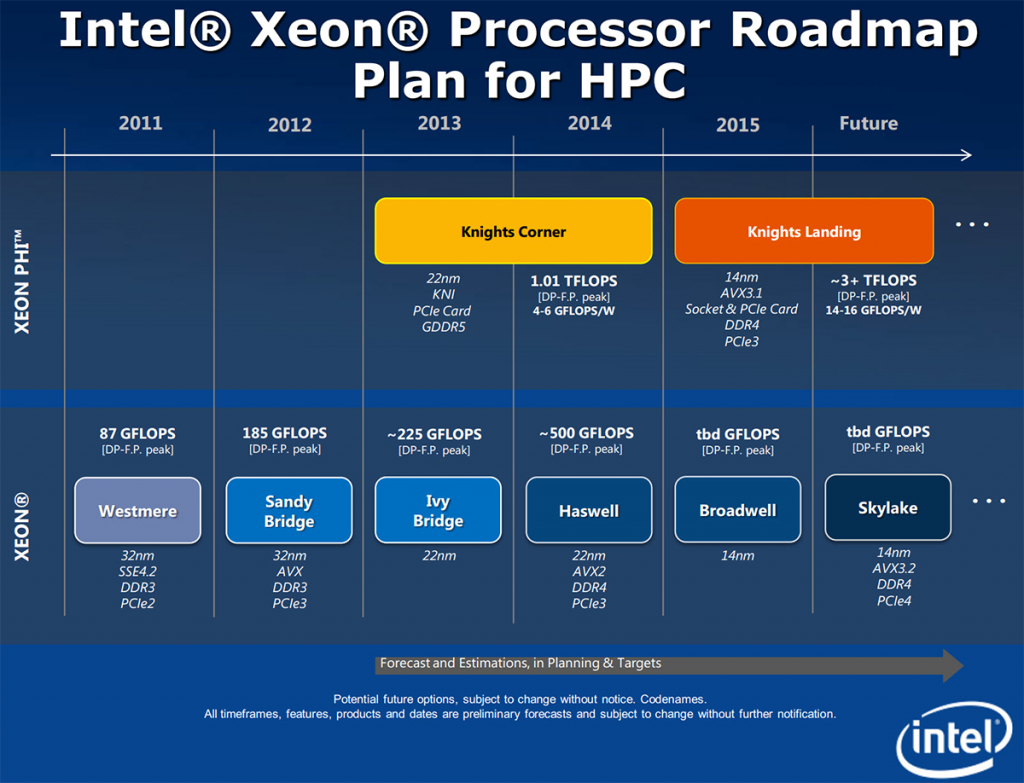

Several years ago it was reported that Intel Xeon processors with “Skylake” micro-architecture would support AVX 3.2 technology with 512-bit instructions. Intel Xeon Phi “Knights Landing” is expected to support AVX 3.1 instructions.

While 512-bit instructions will be useful for high-performance computing applications, in client PCs they could improve performance of demanding multimedia applications. Exclusion of AVX-512 support from consumer processors will slow down adoption of the new instructions by software developers. In fact, without AVX 3.2 the new “Skylake” processors will bring almost no innovations compared to “Haswell” and “Broadwell” chips from an instruction-set point of view.

Intel did not comment on the news story.

KitGuru Says: Intel’s decision not to enable 512-bit instructions on consumer “Skylake” processors is clearly a strange one. The hardware to support AVX-512 is in the processors and it is unlikely that it uses so many transistors that disabling this technology dramatically improves yields of Intel’s central processing units.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Source might be right, but I don’t trust it until Intel announces this themselves.

Is this a good or bad thing?

yeah this is a pretty big deal and one of the biggest reasons for waiting for skylake. I hope Intel comments on this fairly soon. I’ve been holding out for years waiting for something significant that may make a big difference to upgrade and this seems to give the most potential, otherwise looks like we may still see another 5% bump.

Even bigger story for gamers is if DX12 makes upgrading cpus often even more irrelevant for gamers.

This is a stupid move.

You would still only see 5-15%. Intel and AMD have pushed hardware as far as it is going to go for old computing models and old software which doesn’t use SIMD instructions in any significant way. Software has to evolve. Hardware is way too far ahead for it to be worth Intel passing on AVX 512 to general consumers. No software currently made will use it.

Intel already has. They released the QEmu specs for all the Skylake chips a long, long time ago. AVX 512 only exists on their Xeons.

I can’t find the official announcement, but I’ll take your word for it. And here I thought Skylake was gonna be a gamechanger regarding instruction sets… oh well, I just hope the Union Point chipset is gonna support Cannonlake as well, so I can upgrade when they come out.

Hope it’s not a rebrand of Broadwell

Why bother when software hasn’t even caught up to Nehalem and Sandy Bridge in instruction set usage? The problem right now is not hardware. There’s a 60+% gap between Sandy and Haswell with properly tuned software. It’s only old stuff running on SISD programming models that can’t be pushed much farther without jumps in clock rates. No matter what you do no instruction can execute in less than a cycle. Most of the single data instructions have been 1 cycle for a very long time. There’s not much room to gain there if there’s any left. This is partly why Excavator also didn’t gain much. Software must catch up. No amount of hardware can help if software doesn’t evolve to better utilize it.

You must be a blast at parties.

Hey, some of us use Gentoo and compile software ourselves. But even if you don’t – why would Intel want to disable something that does no harm otherwise? Well, the answer is obviously – money, but then, they should be fucking straightforward with us about that.

I am. When I’m at work I’m a cold, calculating machine. That’s how life works and how I roll.

Just get an E3 Xeon. They cost no more than I7s and, even though the clock rate is slightly lower, they still work with the same memory and Z-series boards (unless you choose to get ECC RAM).

Why bother to put instructions out there for the mainstream if they won’t be used? Intel has more important things to worry about, like maintaining the compute density advantage against AMD and IBM.

Not on laptops.

Nothing a laptop does needs AVX 512. Case closed. When software catches up to AVX 1.0 then we can talk.

Have you ever heard of auto-vectorization?

Guess what? If the software isn’t even compiled for SSE2, then there’s no point in asking for AVX 512. We haven’t even started using the 128 and 256-bit extensions beyond professional software, and in that case an E3 Xeon is no more expensive than an I7. When consumer software catches up, it’ll become obvious just how wrong the claim of “5%” performance gain the last 3 generations is. SISD software has been pushed up against the ceiling of performance. SIMD and MIMD must take over or it won’t matter what AMD and Intel do. Performance will rely on clock rates and being spread over multiple cores, and that’s only slowly changing as well.

http://llvm.org/docs/Vectorizers.html
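For readers unfamiliar with the term, auto-vectorization means the compiler turns an ordinary loop into SIMD instructions without the programmer writing intrinsics. A minimal sketch of a loop that GCC and Clang can typically vectorize is shown here. The function name `saxpy` is just an illustrative choice, and `__restrict` is a compiler extension (accepted by GCC, Clang and MSVC) used on the assumption that the two arrays never overlap.

```cpp
#include <cstddef>

// Computes y[i] = a * x[i] + y[i] for i in [0, n).
// Each iteration is independent of the others, and __restrict promises
// the compiler that x and y do not alias, so the loop is a straightforward
// candidate for the loop vectorizer.
void saxpy(float a, const float* __restrict x, float* __restrict y,
           std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}
```

The width of the generated SIMD code depends on the target flags: building with `-O3 -mavx2` allows 256-bit instructions, while `-O3 -mavx512f` allows 512-bit ones, and the latter binary will only run on a CPU that actually has AVX-512. Clang's `-Rpass=loop-vectorize` and GCC's `-fopt-info-vec` report whether a given loop was vectorized.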

The question still stands: It might be useless for 99% people, but why disable it when it’s already on the die?

Market segmentation. If you truly need it you’ll buy into the Xeons because you have no alternative. Or you’ll wait until Cannonlake which brings AVX 512 to all chip lines.

I’ll be staying on my Ivy Bridge for the time being then.

You just don’t get it. Right now, most people program the GPU on the laptop to do things. With SkyLake, I was prepared to port things to SIMD instead. But if all I’ve got is AVX 2.0, it’s just not worth it – there’s just not enough flops compared to the GPU to make it worth the programming effort. That’s the chicken and egg problem. Skylake makes the CPU a worthy target for data level parallelism – AVX 2.0 can’t compete. And BTW – the laptop needs it MORE than a Xeon because it has such limited horsepower in the first place.

I’m betting by Cannonlake it’ll be game over and we’ll be switching to ARM CPUs. I really *wish* we could use Xeons, but in our development environment, fixed computers (non-laptops) are almost completely impractical.

I doubt it. ARM still doesn’t do virtualization, and it still doesn’t have nearly the performance needed for even a mobile workstation.

What programming effort?! If you use a decent compiler and the right optimization flags, getting SIMD is not difficult. You’d have to be royally incompetent (or trying too hard for legacy support) to not get SIMD just automatically, assuming you’re using C++ which, given its performance, is about the only language worth programming anything mission-critical in.

Also, BS. What the heck are you doing on a laptop that really needs that much power? Furthermore, why aren’t you using

#pragma omp offload target (gpu)

{//same C++ code as before here}

for your highly parallelizable code? Or OpenACC? Seriously, are you an actual industry professional, because what you’ve let through so far leads me to believe many of my fellows could program circles around you and/or you chose the wrong tool for the job.
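The directive quoted in this comment is not spelled the way the OpenMP standard writes it. In OpenMP 4.0 and later, offloading a loop to an accelerator uses `#pragma omp target`. A minimal sketch of that standard form follows, with the caveat that it assumes a compiler built with offload support for the device in question; the function name `scale_add` is hypothetical. Without such support, or without `-fopenmp`, the same loop simply runs on the host CPU.

```cpp
// Computes y[i] = a * x[i] + y[i] for i in [0, n), asking OpenMP to run
// the loop on a target device when one is available.
// map(to: ...)     copies x to the device before the loop.
// map(tofrom: ...) copies y to the device and back to the host afterwards.
void scale_add(float a, const float* x, float* y, int n) {
    #pragma omp target teams distribute parallel for \
        map(to: x[0:n]) map(tofrom: y[0:n])
    for (int i = 0; i < n; ++i) {
        y[i] = a * x[i] + y[i];
    }
}
```

The loop body is the same C++ as the host version, which is the point the commenter is making; whether the offloaded version is actually faster depends on the data transfer cost and the device.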

You’re one stupid fuck. Why disable it... Why did Nvidia disable cores in the GTX 980? All the Nvidia Ti cards are just unlocked cores on the regular chips. Same with GTX Titan: same GPU used on the 780 Ti but with processing cores unlocked. Same with all AMD and Intel processors. All the K versions are unlocked when typically the regular chips can overclock just as well. AMD has their Black Editions etc... It’s all about money and what consumers are willing to pay.