When we last ran a poll on KitGuru, less than 5% of readers who responded were running an SLI configuration. There is no doubt that only a tiny percentage of enthusiast gamers have a system with more than one graphics card, and even fewer with 3 or 4.

With the release of Pascal, Nvidia are making some big changes to SLI, so we felt it was important to highlight them in our analysis today.

While AMD have adopted a ‘bridgeless Crossfire’ system in recent years, Nvidia SLI gamers have always had to rely on a physical SLI bridge, and this won't change. What does change, however, is the implementation of the bridging system.

Two of the SLI interfaces have historically been used to enable communications between three or more GPUs in a system. The second SLI interface is required for 3 and 4 way SLI as all the GPUs in a system need to transfer their rendered frames to the display connected to the master GPU. Up to this point each interface has been independent.

With Pascal, the two interfaces are now linked together to improve bandwidth between the graphics cards. The new dual link SLI mode allows both SLI interfaces to be used together to feed one high resolution panel or multiple displays for Nvidia surround.

Dual Link SLI mode is supported by a new SLI bridge which Nvidia are calling SLI HB. This new bridging system connects both interfaces, allowing higher speed data transfer between the two GPUs.

So, do the old SLI connectors work with the new Pascal architecture? Nvidia say the GTX 1080 cards are compatible with ‘legacy SLI bridges, however the GPU will be limited to the maximum speed of the bridge being used'.

Sadly Nvidia didn't send us two cards so we have been unable to test any of this but they do say ‘Connecting both SLI interfaces is the best way to achieve full SLI clock speeds with GTX 1080's in SLI'.

The new SLI HB Bridge with a pair of GTX 1080s runs at 650MHz, compared to 400MHz in previous GeForce GPUs using a ‘legacy’ SLI bridge. To complicate matters even further, Nvidia claim that older SLI bridges will get a ‘speed boost’ when paired with Pascal hardware. Custom bridges that include LED lighting will now operate at up to 650MHz when used with the GTX 1080, utilising the faster IO capabilities of Pascal. Nvidia recommend the use of the new HB Bridge for 4K, 5K and surround panel resolutions.
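To put the quoted clocks in perspective, the ratio can be worked out directly. The snippet below is only a rough sketch: it assumes transfer rate scales linearly with the interface clock and with the number of linked interfaces, which is our simplification and not something Nvidia has stated. The function name `relative_bandwidth` is hypothetical.

```python
# Clocks quoted in the article
LEGACY_MHZ = 400  # 'legacy' SLI bridge on previous GeForce GPUs
HB_MHZ = 650      # SLI HB bridge with a pair of GTX 1080s


def relative_bandwidth(clock_mhz, links, base_clock_mhz=LEGACY_MHZ, base_links=1):
    """Bandwidth relative to a single legacy link.

    ASSUMPTION: bandwidth scales linearly with clock and link count.
    """
    return (clock_mhz * links) / (base_clock_mhz * base_links)


clock_gain = relative_bandwidth(HB_MHZ, links=1)      # clock increase alone
dual_link_gain = relative_bandwidth(HB_MHZ, links=2)  # both interfaces linked

print(f"Clock alone: {clock_gain:.3f}x, dual link: {dual_link_gain:.2f}x")
```

Under those assumptions the clock bump alone is worth about 1.6x, and linking both interfaces would take that to a little over 3x. Real-world gains will depend on factors this sketch ignores.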

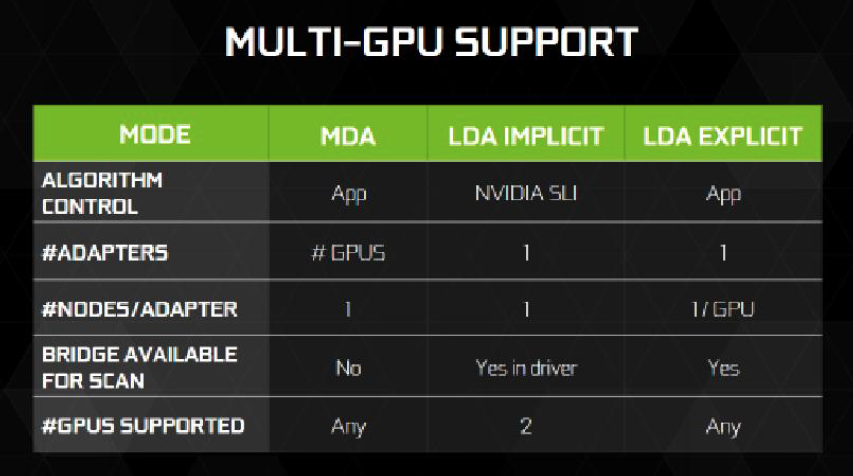

Microsoft have changed multi GPU functionality in the DirectX 12 API. There are two basic options for developers when using Nvidia hardware in multi GPU mode on DirectX 12. These are known as Linked Display Adapter (LDA) mode and Multi Display Adapter (MDA) mode.

LDA Mode has two forms: Implicit LDA Mode, which Nvidia uses for SLI, and Explicit LDA Mode, which allows game developers to handle much of the responsibility for multi GPU operations. MDA and LDA Explicit Mode were created to give game developers more control over the game engine as well as multi GPU performance.

In LDA mode each GPU's memory can be combined to appear as one large pool of memory for the developer, although there are some exceptions regarding peer-to-peer memory. There is a performance penalty to be paid if the data needed resides in the other GPU's memory, since the memory is accessed through inter GPU peer-to-peer communication, much like PCIe. MDA Mode is different in that each GPU's memory is accessed independently of the other GPU, so neither graphics card can access the other's memory.

LDA is a mode for multi GPU systems that have GPUs that are similar, while MDA has fewer limitations – discrete GPUs can be paired with integrated GPUs for instance, or even discrete GPUs from another manufacturer.
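The difference between the two memory models can be illustrated with a toy model. This is only a sketch of the concept as described above, not the DirectX 12 API: the `Gpu` class, the function names and the `peer_penalty` value are all hypothetical, and the penalty figure is made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    name: str
    memory_gb: int


def lda_visible_memory(gpus):
    """LDA: the memory of the linked GPUs appears as one large pool."""
    return sum(g.memory_gb for g in gpus)


def mda_visible_memory(gpus):
    """MDA: each GPU's memory is addressed independently of the others."""
    return {g.name: g.memory_gb for g in gpus}


def lda_access_cost(requesting, owner, local_cost=1, peer_penalty=4):
    """Relative cost of a read in LDA mode.

    ASSUMPTION: peer_penalty is an invented number; it only expresses that
    data in the other GPU's memory costs more to reach than local data.
    """
    return local_cost if requesting == owner else local_cost * peer_penalty


pair = [Gpu("gpu0", 8), Gpu("gpu1", 8)]  # two GTX 1080s with 8GB each
print(lda_visible_memory(pair))          # one combined pool
print(mda_visible_memory(pair))          # two separate pools
print(lda_access_cost("gpu0", "gpu1"))   # remote read costs more than local
```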

The Geforce GTX 1080 supports up to two GPUs. 3 Way and 4 Way SLI modes are no longer recommended by Nvidia. Nvidia claim that as games have evolved it is becoming more difficult for 3 and 4 way SLI modes to provide beneficial performance scaling. They add that games are becoming more bottlenecked by the CPU when running 3 and 4 way SLI and that games are using techniques that make it difficult to extract frame to frame parallelism.

Nvidia recommend that systems are built to target MDA or LDA Explicit, or 2 Way SLI with a dedicated PhysX GPU.

Enthusiast Key

Nvidia are clearly moving away from 3 and 4 way SLI systems, but they are still offering some support for hardcore gamers who demand 3 or 4 graphics cards. Nvidia do admit that some games will still benefit from more than 2 graphics cards.

To accommodate those gamers with 3 or 4 Nvidia graphics cards the company are incorporating a new system based around the concept of an ‘Enthusiast Key'. The process requires:

1: Run an app locally to generate a signature for the GPU you own.

2: Request an Enthusiast Key from an upcoming Enthusiast Key website.

3: Download the key.

4: Install the key to unlock the 3 and 4 way function.

Nvidia have yet to release details of the Enthusiast Key website, but they claim this will become public knowledge after the GTX 1080 cards are officially launched.

This seems rather convoluted to me but perhaps it will work better than I fear it will. I have also found SLI to be rather problematic in the last year.

Rise Of The Tomb Raider required a hack to get SLI working properly at all, which I found quite shocking considering Nvidia were involved in the development of the game. At Ultra HD 4K resolutions the extra video card makes a huge difference to the overall gaming experience. More information on this over HERE.

Far Cry Primal ran very badly at Ultra HD 4K with our reference overclocked 5960X system featuring 3x Titan X cards in SLI. The best way around this was to manually force the Tri-SLI configuration into 2 way SLI by adjusting the Nvidia panel to use the third Titan X as a dedicated PhysX card.

With two cards in SLI the game was perfectly smooth at Ultra HD 4K, although a Titan X was complete overkill for simple PhysX duties. Clearly there was no effort invested in getting 3 way SLI working at all, which is disappointing to see, especially with a high profile AAA title.

Perhaps this rather public move to focus on simple 2 way SLI might help developers and Nvidia produce more working SLI profiles. Time will tell, although I have my doubts.

Fast Sync

Nvidia have implemented a new ‘latency conscious alternative' to traditional Vertical Sync (V-SYNC). It eliminates tearing while allowing the GPU to render unrestrained by the refresh rate to reduce input latency.

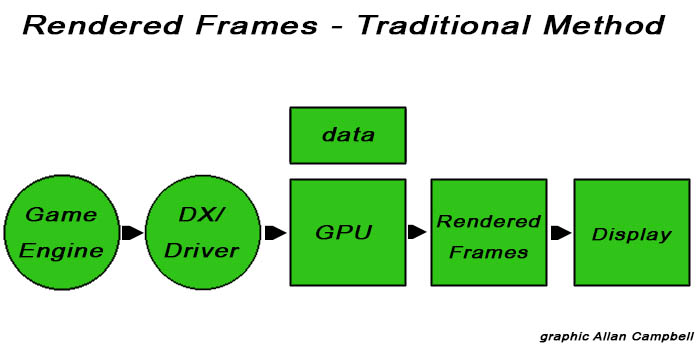

Above, a diagram showing how traditional frame rendering works through the Nvidia graphics pipeline. The game engine also has to calculate animation time and the encoding inside the frame which eventually gets rendered. The draw calls and information are communicated forward, and the Nvidia driver and GPU convert them into the actual rendering and then write a rendered frame to the GPU frame buffer. The last step is to scan the frame to the display.

Nvidia have adapted their thinking with Pascal.

They mentioned Counter Strike: Global Offensive as one such test subject. Nvidia say the Pascal hardware is able to power that game at hundreds of frames per second and currently there are two choices for most people – VSYNC on, or OFF.

A lot of people use VSYNC to eliminate frame tearing. With V-SYNC on however the pipeline gets back-pressured all the way to the game engine and the pipeline slows down to the refresh rate of the display. When enabled the display is basically telling the game engine to slow down because only one frame can be generated for each display refresh interval.

With VSYNC off the pipeline is told to ignore the display refresh rate and to produce game frames as fast as the hardware will allow. With VSYNC off the latency is reduced as there is no backpressure but frame tearing can rear its ugly head. Many eSports gamers are playing with it disabled as they don't want to deal with increased input latencies. Frame tearing at high frame rates can lead to jittering which can cause issues for gameplay.

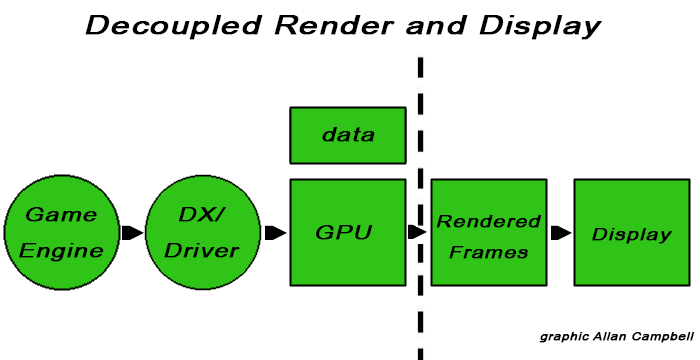

Nvidia have decided to look at the traditional process and are decoupling the rendering and display from the pipeline. This allows the rendering stage to continually generate new frames from data sent by the game engine and driver at full speed – meaning those frames can be temporarily stored in the GPU frame buffer.

Fast Sync removes flow control, meaning that the game engine works as if VSYNC is off, and with the removal of back pressure input latency is almost as low as with VSYNC off. Frame tearing is removed because Fast Sync selects which of the rendered frames to scan to the display.

| | V-SYNC ON | V-SYNC OFF | FAST SYNC |
| --- | --- | --- | --- |
| Flow Control | Backpressure | None | None |
| Input Latency | High | Low | Low |
| Frame Tearing | None | Tearing | None |

Fast Sync allows the front of the pipeline to run as fast as it can scanning out selected frames to the display and preserving entire frames so they can be displayed without tearing. Nvidia claim that turning Fast Sync on delivers only 8ms more latency than V-SYNC off, while producing entire frames without tearing.

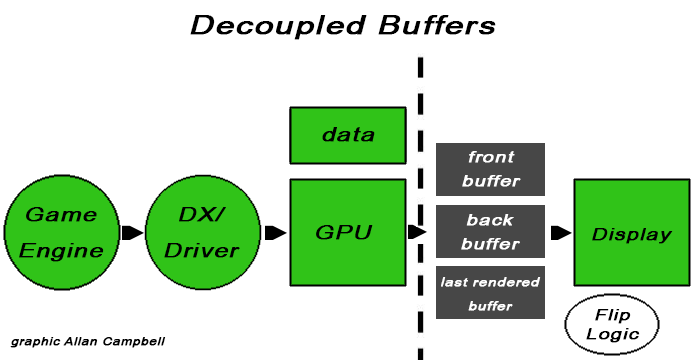

Imagine three areas in the frame buffer have been assigned in different ways. The first two buffers are very similar to double buffered VSYNC in classic GPU pipelines. The Front Buffer (FB) is the buffer scanned out to the display. The Back Buffer (BB) is the buffer that is currently being rendered and it can't be scanned out until it is completed. Standard VSYNC in high render rate games has a negative impact on latency, as the game has to wait for the display refresh interval to flip the back buffer to become the front buffer before another frame can be rendered into the back buffer. The process is slowed down and latency is increased due to the addition of back buffers.

Fast Sync adds a third buffer called the Last Rendered Buffer (LRB), which holds a copy of the most recently completed back buffer until the front buffer has finished scanning. At this point the Last Rendered Buffer is copied to the Front Buffer and the process continues. Direct buffer copies would be inefficient, so in practice the buffers are simply renamed. The buffer being scanned to the display is the FB, the buffer being actively rendered is the BB and the buffer holding the most recently rendered frame is the LRB.

Flip Logic in the Pascal architecture controls the entire process.
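The buffer renaming described above can be sketched as a small simulation. This is a toy model of the idea only, not Nvidia's Flip Logic: time is counted in abstract ticks, the function name `simulate_fast_sync` and its parameters are hypothetical, and a frame is assumed to finish rendering exactly every `render_ticks`.

```python
def simulate_fast_sync(render_ticks, refresh_ticks, total_ticks):
    """Return the frame id scanned out at each display refresh.

    Three physical buffers exist; FB/BB/LRB are just roles (indices)
    that get swapped, so no pixel data is ever copied.
    """
    buffers = [None, None, None]  # frame id stored in each physical buffer
    fb, bb, lrb = 0, 1, 2         # role -> physical buffer index
    next_frame = 0
    lrb_is_fresh = False          # LRB holds a frame newer than the FB?
    displayed = []

    for tick in range(1, total_ticks + 1):
        if tick % render_ticks == 0:
            # A frame just completed in the back buffer. Rename BB <-> LRB
            # so rendering continues immediately with no back pressure.
            buffers[bb] = next_frame
            next_frame += 1
            bb, lrb = lrb, bb
            lrb_is_fresh = True

        if tick % refresh_ticks == 0:
            # Display refresh: promote the newest complete frame to FB.
            if lrb_is_fresh:
                fb, lrb = lrb, fb
                lrb_is_fresh = False
            displayed.append(buffers[fb])

    return displayed


# GPU finishes a frame every 4 ticks, display refreshes every 10 ticks
shown = simulate_fast_sync(render_ticks=4, refresh_ticks=10, total_ticks=30)
print(shown)
```

In this run the GPU completes seven frames while the display shows only three of them, always the newest whole frame available at each refresh. The skipped frames are simply discarded, which is why the engine never has to wait and no partial frame is ever scanned out.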

High Dynamic Range

The new GTX 1080 supports all of the HDR display capabilities of the Maxwell range of hardware with the display controller capable of 12 bit colour, BT.2020 wide colour gamut, SMPTE 2084 (Perceptual Quantization) and HDMI 2.0b 10/12 bit for 4K HDR.

Pascal adds the following features:

- 4k@60hz 10/12b HEVC Decode (for HDR Video)

- 4k@60hz 10b HEVC Encode (for HDR recording or streaming).

- DP 1.4 ready HDR Metadata Transport (to connect to HDR displays using DisplayPort).

Nvidia are working on bringing HDR to games, including Rise Of the Tomb Raider, Paragon, The Talos Principle, Shadow Warrior 2, Obduction, The Witness and Lawbreakers.

Pascal introduces full PlayReady 3.0 (SL3000) support and HEVC decode in hardware, bringing the capability to watch 4K Premium video on the PC for the first time. Soon, enthusiast users will be able to stream 4K Netflix content and 4k content from other content providers on Pascal GPUs.

| | Geforce GTX 980 (Maxwell) | Geforce GTX 1080 (Pascal) |
| --- | --- | --- |
| Number of Active Heads | 4 | 4 |
| Number of Connectors | 6 | 6 |
| Max Resolution | 5120 x 3200 @ 60hz (requires 2 DP 1.2 connectors) | 7680 x 4320 @ 60hz (requires 2 DP 1.3 connectors) |
| Digital Protocols | LVDS, TMDS/HDMI 2.0, DP 1.2 | HDMI 2.0b with HDCP 2.2, DP (DP 1.2 certified, DP 1.3 ready, DP 1.4 ready) |

The GTX 1080 is DisplayPort 1.2 certified, DisplayPort 1.3 and 1.4 ready – enabling support for 4k displays at 120hz, 5k displays at 60hz and 8k displays at 60hz (using two cables).
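A quick back-of-the-envelope calculation shows why 8K at 60Hz needs two cables. The sketch below makes several simplifying assumptions: 24 bits per pixel, no blanking overhead, no compression, and 5K taken to mean 5120 x 2880. The link figures are the effective four-lane data rates for HBR2 (DisplayPort 1.2) and HBR3 (DisplayPort 1.3) after 8b/10b encoding. The function names are hypothetical.

```python
import math

# Effective four-lane data rates in Gbit/s after 8b/10b encoding
DP12_HBR2_GBPS = 17.28
DP13_HBR3_GBPS = 25.92


def pixel_rate_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Uncompressed active-pixel data rate in Gbit/s.

    ASSUMPTION: blanking intervals are ignored, so real requirements
    are somewhat higher than the figure returned here.
    """
    return width * height * refresh_hz * bits_per_pixel / 1e9


def cables_needed(rate_gbps, link_gbps=DP13_HBR3_GBPS):
    """Minimum number of links needed to carry the given data rate."""
    return math.ceil(rate_gbps / link_gbps)


modes = {
    "4K @ 120Hz": (3840, 2160, 120),
    "5K @ 60Hz": (5120, 2880, 60),   # assumed 5K resolution
    "8K @ 60Hz": (7680, 4320, 60),
}

results = {}
for label, (w, h, hz) in modes.items():
    rate = pixel_rate_gbps(w, h, hz)
    results[label] = cables_needed(rate)
    print(f"{label}: {rate:.2f} Gbit/s, DP 1.3 links needed: {results[label]}")
```

Even with blanking ignored, all three modes exceed what a single DisplayPort 1.2 link can carry, while only the 8K mode overflows a single DisplayPort 1.3 link.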

| | Geforce GTX 980 (Maxwell) | Geforce GTX 1080 (Pascal) |
| --- | --- | --- |
| H.264 Encode | Yes | Yes (2x 4k@60hz) |
| HEVC Encode | Yes | Yes (2x 4k@60hz) |
| 10-bit HEVC Encode | No | Yes |
| H.264 Decode | Yes | Yes (4k@120hz up to 240 Mbps) |
| HEVC Decode | No | Yes (4k@120hz / 8k@30hz up to 320 Mbps) |
| VP9 Decode | No | Yes (4k@120hz up to 320 Mbps) |
| MPEG2 Decode | Yes | Yes |
| 10-bit HEVC Decode | No | Yes |
| 12-bit HEVC Decode | No | Yes |

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards


Fine work on the review, we are probably looking at £620 in the UK which is pretty steep

Too expensive

Can’t wait for the 1080 Ti to be released so I can upgrade my 980 Ti. Would love to have this 1080, but I think the price will be a bit too steep to validate a purchase at the moment. It does look like it will be a great upgrade for anyone else though, even with the 980 Ti it has quite a few games where it gets more than 10 fps extra on average.

It costs a small fortune, but holy crap that thing performs amazingly well. And with such little power consumption. Now the waiting begins, because it’ll take a few years before this kind of power becomes available to the less affluent consumers like me.

is that £619 for the founders edition? Because like many others I’m just going to go straight out for an aftermarket anyway so that gives a rough estimate on how they are going to be priced too (in the case that aftermarkets are based on the “normal” edition). Though it does offer more temptation to just wait for the Ti but I’ve done enough waiting by now xD

15% better than factory oced 980Ti for 700 euros isn’t a great jump in FPS/$ me thinks. 1080Ti or Vega are the ones for enthusiasts.

With these prices the to is looking £800+ probally more

Isn’t what usually happens is that by the time the Ti is released the 1080 will go down in price then Ti will cost the same amount as 1080’s release price?

What is the 980TI boosting too in this review? The G1 edition?

GTX980 to GTX1080Ti/Vega 11…come on, who will bring this HBM2 so I will play Star Citizen at 4K Ultra 60+ FPS?

Awesome card. Wish I could afford it. =x

Wait for custom cooled factory overclocked partner cards. They will be cheaper, faster and cooler.

Just a little off topic but will Fast sync have to be specifically supported by developer studios or can it just be enabled via the control panel (allowing for all games to make immediate use of it)?

AMD Radeon Pro Duo vs GTX 1080 vs GTX 1070 – Ultra performance test https://youtu.be/urYLez2aBew

So in overall, it is just a more efficient Maxwell with better granularity and less IPC per cluster, offset by higher clocks and a better software stack to make it up with the missing hardware scheduler, not impressed.

Does anyone know when EVGA will release their hybrid cooled GTX 1080s?

Think I’m going to wait to see what the Asus Strix 1080 OC (or whatever they’ll call it) can do. Happy with my 980 Strix until then 🙂

“This is the first time that Nvidia have introduced a vapour chamber cooling system on a reference card”

Uhm, the original NVTTM cooler used by the Titan, 780, and 780Ti used a vapor chamber. NVidia switched to the far worse heatpipe cooler for the Maxwell cards, which was the cause of their overheating problems.

I find the 1080 pretty underwhelming. It’s loud, it’s hot, it’s slower than a nearly two generation old 295X2 and barely faster than a 980Ti, it’s overpriced even compared to the faster AIB versions of itself, and since the entire NA market got a total of 36 cards for the launch, you can’t buy one anyways.

I am Brazilian , I need a gtx 970, but do not want to sell my motorcycle to buy , accept donation [email protected] my email

@4K+ the GTX 1080 is incredibly underwhelming, often only 8-9 FPS faster than a stock 980Ti.