Nvidia, ATI. Nvidia, AMD. The battle for top graphics card billing seems to have been around since time immemorial. We announce a new performance king, then another card is released and the position switches. While it may sound purely diplomatic, we genuinely feel that the last year has been very strong for both Nvidia and AMD.

Nvidia currently have the GTX780 Ti and Titan Black – two very expensive, killer boards which have appealed to the ultra high end audience. AMD have their premium R9 290 and R9 290X targeted at more aggressive price points – opening up the potential for Ultra HD 4K gaming to a wider audience.

With the recent Nvidia announcement of the $3,000 Titan Z, AMD have taken the battle into the ‘dual GPU arena’ by releasing their R9 295 X2 solution, comprising a mind-blowing 12.4 billion transistors.

AMD have worked behind the scenes with Asetek in the development and creation of the proprietary liquid cooling system for the R9 295 X2. We will look at this in more detail on the next page of this review, however you can see from the picture above that it is based around a 120mm radiator – similar to a Corsair Hydro H60 V2, for instance.

Regular KitGuru readers will remember my appraisal of the reference AMD R9 290/X cooler. I can condense it down into a single word: atrocious. The move by AMD to watercooling is not only welcome but, without question, unavoidable. We already know just how hot an R9 290 core gets under load; with two R9 290X cores on a single PCB, toasting marshmallows is likely possible.

We have never received a graphics card with a power supply in the box. AMD are not taking any chances and include a BeQuiet! 1000W Power Zone to ensure review publications didn't decide to use an underspecified, unbranded Chinese power supply. We put our Fung Yung Wung 350W supply back in the cupboard.

Without delving too deeply into power demands right at the start of a review: AMD state that the power supply must have two 8 Pin PCIe power connectors that can each supply 28A of dedicated current. Combined current must be 50A or greater over the two 8 Pin power connectors. Realistically, if you want to power one of these cards properly then a high grade 850W+ Gold or Platinum rated power supply should be in the system.
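To put those current figures into more familiar terms, here is a minimal sketch that converts them to watts. It assumes a nominal 12V rail on the PCIe connectors (AMD's figures above are quoted in amps only), and the function names and the example power supply values are hypothetical, chosen purely for illustration.

```python
# Assumption: the PCIe auxiliary connectors are fed from a nominal 12 V rail.
RAIL_VOLTS = 12.0


def amps_to_watts(amps, volts=RAIL_VOLTS):
    """Convert a DC current requirement into watts (P = I * V)."""
    return amps * volts


def psu_meets_requirement(per_connector_amps, combined_amps,
                          need_per_connector=28.0, need_combined=50.0):
    """Check a hypothetical PSU spec against the two figures AMD quote."""
    return (per_connector_amps >= need_per_connector
            and combined_amps >= need_combined)


if __name__ == "__main__":
    print("Per connector:", amps_to_watts(28.0), "W")
    print("Combined:", amps_to_watts(50.0), "W")
    # Hypothetical supply: 30 A per connector, 55 A combined.
    print("Example PSU OK:", psu_meets_requirement(30.0, 55.0))
```

Under that 12V assumption the combined 50A requirement works out to 600W for the graphics card connectors alone, which goes some way to explaining the 850W+ recommendation.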

| | AMD Radeon R9 295 X2 | AMD Radeon R9 290X | AMD Radeon R9 290 |
|---|---|---|---|
| Process | 28nm | 28nm | 28nm |
| Transistors | 12.4 Billion | 6.2 Billion | 6.2 Billion |
| Engine Clock | Up to 1.02 GHz | Up to 1 GHz | Up to 947 MHz |
| Primitive Rate | 8 prim / clk | 4 prim / clk | 4 prim / clk |
| Stream Processors | 5,632 | 2,816 | 2,560 |
| Compute Performance | Up to 11.5 TFLOPS | 5.6 TFLOPS | 4.9 TFLOPS |
| Texture Units | 352 | 176 | 160 |
| Texture Fillrate | Up to 358.3 GT/s | Up to 176.00 GT/s | 152.00 GT/s |
| ROPs | 128 | 64 | 64 |
| Pixel Fillrate | Up to 130.3 GP/s | Up to 64.0 GP/s | Up to 64.0 GP/s |
| Z/Stencil | 512 | 256 | 256 |
| Memory Bit-Interface | 2x 512 Bit | 512 Bit | 512 Bit |
| Memory Type | 8GB GDDR5 | 4GB GDDR5 | 4GB GDDR5 |
| Data Rate | Up to 5.0Gbps | Up to 5.0Gbps | Up to 5.0 Gbps |
| Memory Bandwidth | Up to 640.0 GB/s | Up to 320.0 GB/s | Up to 320.0 GB/s |

The chart above highlights that the R9 295 X2 is basically a single PCB comprising two R9 290X cores, with a combined 8GB of GDDR5 memory. It has a combined count of 5,632 stream processors, 352 texture units and 128 ROPs.
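Several of the headline figures in the chart can be reproduced from the unit counts and clock speeds. The sketch below is a rough illustration rather than AMD's own method: it assumes each stream processor performs one fused multiply-add (two floating point operations) per clock, that each texture unit and ROP handles one texel or pixel per clock, and the function names are our own.

```python
def compute_tflops(stream_processors, clock_ghz):
    """Peak single-precision TFLOPS, assuming 2 FLOPs per stream processor per clock."""
    return stream_processors * 2 * clock_ghz / 1000.0


def texture_fillrate_gts(texture_units, clock_ghz):
    """Peak texture fill rate in GT/s, assuming one texel per unit per clock."""
    return texture_units * clock_ghz


def pixel_fillrate_gps(rops, clock_ghz):
    """Peak pixel fill rate in GP/s, assuming one pixel per ROP per clock."""
    return rops * clock_ghz


def memory_bandwidth_gbs(bus_width_bits, data_rate_gbps, gpus=1):
    """Peak memory bandwidth in GB/s: bus width * per-pin data rate / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8.0 * gpus


if __name__ == "__main__":
    # R9 290X figures from the chart: 2,816 SPs, 176 TMUs, 64 ROPs, 1 GHz, 512 bit at 5.0 Gbps.
    print(round(compute_tflops(2816, 1.0), 1))       # compute performance
    print(texture_fillrate_gts(176, 1.0))            # texture fill rate
    print(pixel_fillrate_gps(64, 1.0))               # pixel fill rate
    print(memory_bandwidth_gbs(512, 5.0))            # memory bandwidth
    # R9 295 X2: two GPUs, each with its own 512 bit interface.
    print(memory_bandwidth_gbs(512, 5.0, gpus=2))
    print(round(compute_tflops(5632, 1.02), 1))
```

One observation from running the numbers: the R9 295 X2 fill rates in the chart (358.3 GT/s and 130.3 GP/s) appear to correspond to a clock of roughly 1.018 GHz, which the chart rounds to 1.02 GHz.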

‘Underpowered' isn't a word we would associate with the R9 295 X2.

We have already mentioned the Nvidia GTX Titan Z graphics card. This $3,000 monster is powered by two Nvidia GK110 graphics processors in their maximum configuration with 2,880 CUDA cores each, giving the solution 5,760 cores in total and a whopping 8 TFLOPS of single-precision compute performance. The board is equipped with 12GB of GDDR5 memory (6GB per GPU).

We don't have an Nvidia Titan Z yet but we wanted to replicate the solution as best as possible. Today we are therefore using two Nvidia GTX Titan Black cards in an SLi configuration, and will be comparing them to the R9 295 X2.

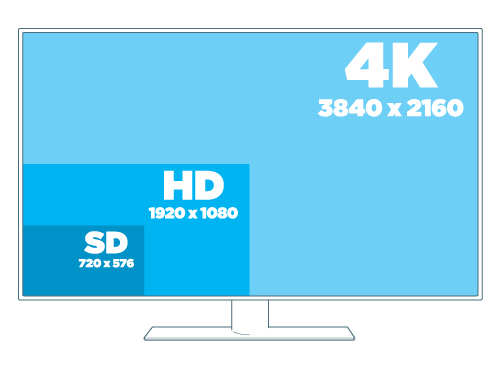

KitGuru was one of the first technology publications to start featuring an Ultra HD 4K screen in high end video card tests last year. We have been using the wallet busting Asus PQ321QE. As the price of other 4K monitors is now finally dropping to around £700, Ultra HD 4K is going to be the future of enthusiast gaming.

We also supplement our review today with 1080p and 1600p results, because every time we omit these we end up with hundreds of complaints in our inbox. It is worth pointing out that at 1080p there is likely to be some CPU limiting today, but we try and maximise the image quality settings as much as they will allow us.

The AMD R9 295 X2 arrives in a sexy silver flight case with AMD branded stickers and side panels.

Inside, the graphics card, radiator and hoses are all protected within hand cut, deep foam on all sides. You may think that this flight case is just a presentation idea for launch review websites such as KitGuru, but AMD told us that the end user should be getting the same thing when they buy from their local etailer.

The AMD R9 295 X2 measures 307mm in length, so you will need plenty of space to install it. If you are using a small chassis with limited space, it is time to upgrade.

The AMD R9 295 X2 doesn't look quite like any graphics card the company has released in the past. The silver and black cooler actually reminded us a little of the XFX coolers on their previous generation cards. There is a light behind the ‘RADEON’ logo. When the card is powered on this creates a red glow through the clear red fan and lettering. There is a picture of this later in the review.

The two hoses emerge from the top of the cooler, shown above. The hoses measure 380mm from exit point on the R9 295 X2 to the radiator.

The card is a ‘true' 2 slot design and it has a dual link DVI connector, along with four mini DisplayPort connectors. We had to use a mini DisplayPort converter cable for compatibility with our Asus PQ321QE monitor. We do hope AMD partners bundle at least one of these mini DisplayPort converters.

There are two eight pin PCIe connectors on the card. As mentioned on the last page, the power supply must have two 8 Pin PCIe power connectors that can each supply 28A of dedicated current.

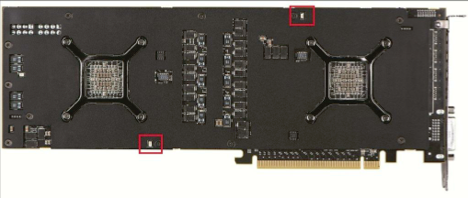

There is a dual BIOS switch on the other side of the PCB; the second BIOS contains a backup of the default configuration.

The jointly designed Asetek AMD R9 295 X2 liquid cooler features a 120mm radiator, measuring 38mm deep without the fan (64mm with). I have to admit I was slightly surprised to see AMD hadn't installed two fans to help drive more cool air through the radiator in a push-pull configuration. That said, it is quite straightforward to add another fan to the radiator; just check the two fans are working together, not against each other.

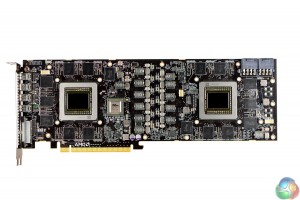

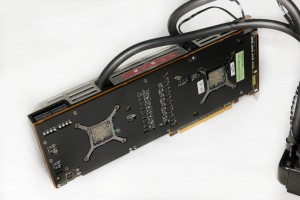

The rear of the card houses a metal backplate to protect the PCB, and to enhance cooling performance. Both GPU cooling mounts are visible.

The two images above show the R9 295 X2 ‘layers’, with the cooler removed in various sections. The memory and VRMs are cooled by a copper heatsink with the fan pushing air directly overhead, and the dual Asetek blocks are installed over the GPU cores on either side of the PCB. The pumps are integrated into the cooling heads.

AMD are using 8GB of SK Hynix memory on the card, split into two 4GB GDDR5 sections on either side of the card. AMD have adopted a PLX bridge chip for the operation of the R9 295 X2.

AMD supplied a new driver specifically for use with the AMD R9 295 X2 graphics card. Catalyst 14.4 Beta dated March 28th was used in this review.

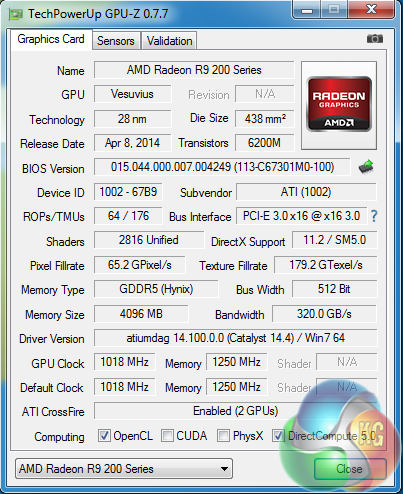

Special thanks go out to Wizzard (Michael) over at TechPowerUp for his help in supplying us directly with an early version of his excellent GPU-Z tool last week with full support for the R9 295 X2.

We discussed the specifications of the ‘Vesuvius' design in detail already – there are basically two R9 290X cores linked together by the PLX bridge chip to create the R9 295 X2.

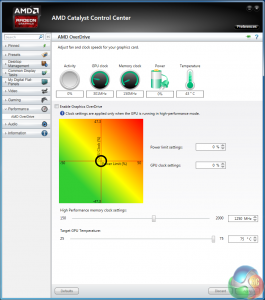

As we mentioned, the VBIOS switch on the card doesn't adjust any core clock or memory settings; the dual BIOS is a mirror. Catalyst Control Center indicates that the R9 295 X2 PowerTune settings are configured to maintain temperatures to a maximum of 75c. This is a 20c reduction over the air cooled reference R9 290X.

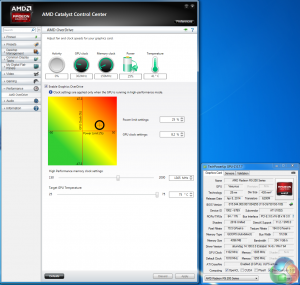

As we previously explained on R9 290/X launch day, the Catalyst Control Center has been redesigned to accommodate the new PowerTune technology. Overclocking and Power are now linked into a ‘2 dimensional heatmap'. AMD have said that this design makes it easier for the end user to adjust product performance. The R9 290/X and R9 295 X2 use a dynamic engine clock and overdriving the core speed works on a percentage.

The fan speed slider has also been reworked. Previous versions of Overdrive would set the fan to a specific RPM. This new system sets an upper limit on the fan RPM but otherwise allows the fan to be managed based on demand and graphics load. At default, the fan maxes out based on the current settings of the video BIOS that was booted. Adjusting the maximum fan slider will allow the user to select a different limit.
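The two Overdrive behaviours described above, a percentage based core overdrive and a fan slider that acts as a ceiling rather than a fixed speed, can be sketched in a few lines. This is only an illustration of the idea, not AMD's PowerTune logic; the function names and the example numbers are hypothetical.

```python
def overdriven_clock_mhz(base_clock_mhz, overdrive_percent):
    """Target engine clock when the core is overdriven by a percentage."""
    return base_clock_mhz * (100.0 + overdrive_percent) / 100.0


def fan_rpm(demand_rpm, max_rpm_limit):
    """Fan speed follows demand, but never exceeds the user-set upper limit."""
    return min(demand_rpm, max_rpm_limit)


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(overdriven_clock_mhz(1000.0, 5.0))   # a 5% overdrive on a 1000 MHz clock
    print(fan_rpm(1500, 2200))                 # light load: demand is below the limit
    print(fan_rpm(3000, 2200))                 # heavy load: capped at the limit
```

The key difference from the older Overdrive behaviour is in `fan_rpm`: the slider value is only ever returned when demand exceeds it, rather than being applied as a constant speed.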

We discussed the R9 290/X reference cooler in previous reviews and how it struggled to maintain a 95c threshold without downclocking the core clock speed. AMD partners went to work on releasing a series of higher grade coolers after AMD's launch, and for the most part have succeeded. The Tri-X cooler from Sapphire comes to mind as being one of the best air coolers we have ever tested in our labs.

That said, there was no doubt in my mind that the R9 290/X would work best if it was watercooled. Many early R9 290/X adopters did just that – removed the reference cooler and watercooled it.

When AMD told us that they were creating a R9 295 X2 with two of these cores on a single PCB we knew it would have to be watercooled.

Watercooling and a partnership with Asetek is certainly a good move, but when I saw the small 120mm radiator and single fan I did have a moment of doubt. Was this really enough for two of these hot running cores? Surely a 240mm radiator would be a more logical choice for such a hot running piece of hardware?

Furmark always causes controversy. Many people say it pushes graphics cards to a level that is unrealistic when gaming. We have always used it, but only to supplement our game load testing. If you don't value it, then ignore the results and focus on the game testing in our reviews. Simple as that.

The matter is more complicated now because a lot of people have been using AMD graphics cards to mine for coins. This works in a similar way to Furmark – loading the cards to an extreme level. People who mine 24/7 have had graphics card fans fail, and power supply cables have even melted on rare occasions.

When we tested the AMD R9 295 X2 with Furmark, the core clock would drop and fluctuate between 800MHz and 900MHz. The temperature would rise to a steady 75c and stay there. It didn't take long for the watercooling hoses to get very warm.

Fortunately, gaming is much less intensive and we didn't notice the same level of clock fluctuation. After an hour of playing Tomb Raider we did notice the core clocks would drop a little – the watercooling hoses again got very warm to the touch.

So my initial judgement was right, the 120mm radiator does struggle to cope. We really wouldn't advise overclocking this card either as the water temperature inside the cooler would rise more, especially under extended gaming sessions. We do overclock later in the review, because we know a lot of people will want to know the results.

There are some caveats when installing the AMD R9 295 X2. Ensure you mount it either at the top or rear of the case with the fan blowing air through the radiator and out. If you have the option of adding another fan and have the additional space for mounting, do it. We didn't get time to extensively test this, but another fan in a ‘push-pull' configuration certainly wouldn't hurt.

Ideally, AMD and Asetek could have developed a larger 240mm radiator, complete with 2 x 120mm fans. It would have undoubtedly performed better. I am sure AMD will argue that if the end user was already using a 240mm radiator to cool his or her CPU, then install space inside the case could certainly be a problem.

On this page we present some super high resolution images of the product taken with the 24.5MP Nikon D3X camera and 24-70mm ED lens. These will take much longer to open due to the dimensions, especially on slower connections. If you use these pictures on another site or publication, please credit Kitguru.net as the owner/source. You can right click and ‘save as’ to your computer to view later.

Today we test with the latest AMD Catalyst 14.4 Beta driver and the Nvidia Forceware 335.23 driver.

We are using one of our brand new test rigs supplied by PCSPECIALIST and built to our specifications. If you want to read more about this, or are interested in buying the same Kitguru Test Rig, check out our article with links on this page.

We are featuring results today with an Apple 30 inch Cinema HD Display at 2560×1600 resolution and an Asus PQ321QE Ultra HD 4K screen running at 4K 3840 x 2160 resolution.

Room ambient was held at 23c throughout testing.

Comparison Graphics cards:

- Nvidia GTX Titan Black x2 (890 MHz core / 1,750 MHz memory)
- Gigabyte GTX780 Ti Windforce OC (1,020 MHz core / 1,750 MHz memory)
- Palit GTX 780 Ti Jetstream OC (980 MHz core / 1,750 MHz memory)
- Sapphire R9 290X Tri-X (1,010 MHz core / 1,250 MHz memory)

Software:

- Windows 7 Enterprise 64 bit
- Unigine Heaven Benchmark
- Unigine Valley Benchmark
- 3DMark Vantage
- 3DMark 11
- 3DMark
- Fraps Professional
- Steam Client
- FurMark

Games:

- Thief 2014
- Total War: Rome 2
- Tomb Raider
- Metro: Last Light
- GRID 2
- Battlefield 4

All the latest BIOS updates and drivers are used during testing. We generally test under real world conditions: KitGuru tests games across five closely matched runs and then averages the results to get an accurate figure. If we use scripted benchmarks, they are mentioned on the relevant page.
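As a rough illustration of how five runs can be condensed into a single figure, the sketch below reports both the mean and the median along with the spread between the fastest and slowest run. The frame rates are made-up example values, not results from this review, and the function name is our own.

```python
from statistics import mean, median


def summarise_runs(fps_runs):
    """Condense repeated benchmark runs into mean, median and spread."""
    return {
        "mean": mean(fps_runs),
        "median": median(fps_runs),
        "spread": max(fps_runs) - min(fps_runs),
    }


if __name__ == "__main__":
    # Hypothetical average frame rates from five closely matched runs.
    runs = [98.0, 99.5, 100.0, 99.0, 98.5]
    print(summarise_runs(runs))
```

When the runs really are closely matched, the mean and the median land on practically the same number; a large spread is a hint that one run was disturbed and should be repeated.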

Some game descriptions edited with courtesy from Wikipedia.

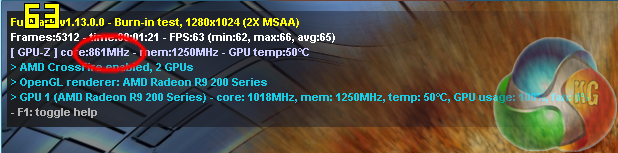

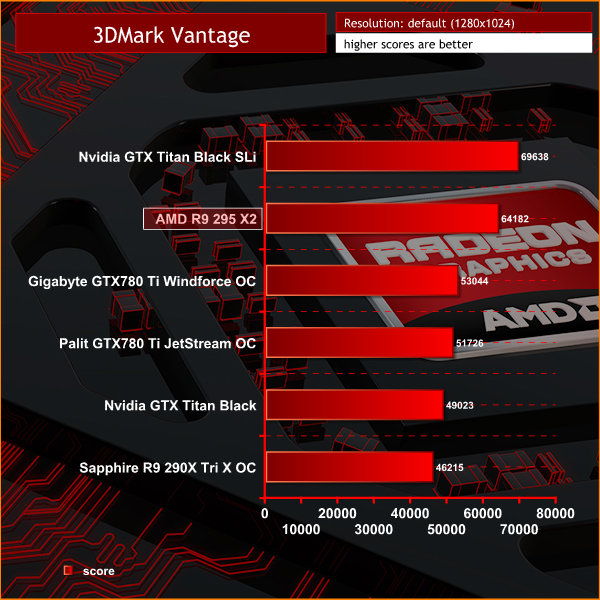

Futuremark released 3DMark Vantage on April 28, 2008. It is a benchmark based upon DirectX 10, and therefore will only run under Windows Vista (Service Pack 1 is stated as a requirement) and Windows 7. This is the first edition where the feature-restricted, free of charge version could not be used any number of times. 1280×1024 resolution was used with performance settings.

The Nvidia GTX Titan Black cards in SLi win this older Direct X 10 benchmark, scoring 69,638 points, around 5,000 more than the AMD R9 295 X2.

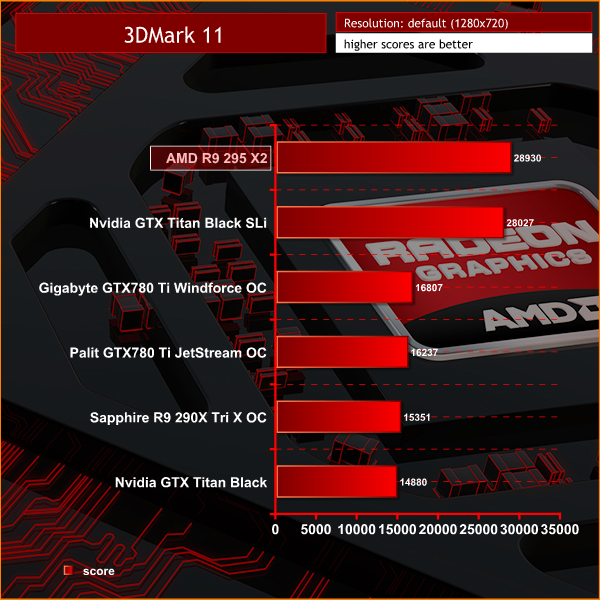

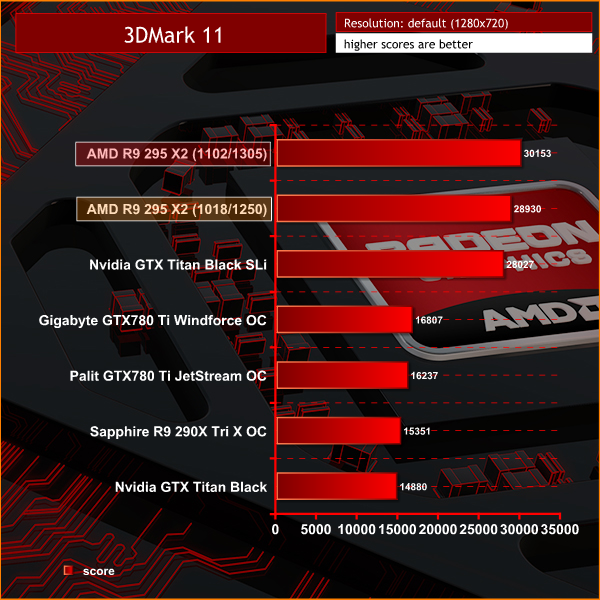

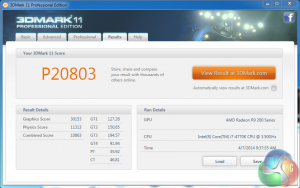

3DMark 11 is designed for testing DirectX 11 hardware running on Windows 7 and Windows Vista. The benchmark includes six all new benchmark tests that make extensive use of all the new features in DirectX 11 including tessellation, compute shaders and multi-threading. After running the tests 3DMark gives your system a score with larger numbers indicating better performance. Trusted by gamers worldwide to give accurate and unbiased results, 3DMark 11 is the best way to test DirectX 11 under game-like loads.

While the GTX Titan Black cards in SLi held the top spot in 3DMark Vantage, the position shifts in favour of the AMD R9 295 X2 when we benchmark the Direct X 11 3DMark 11.

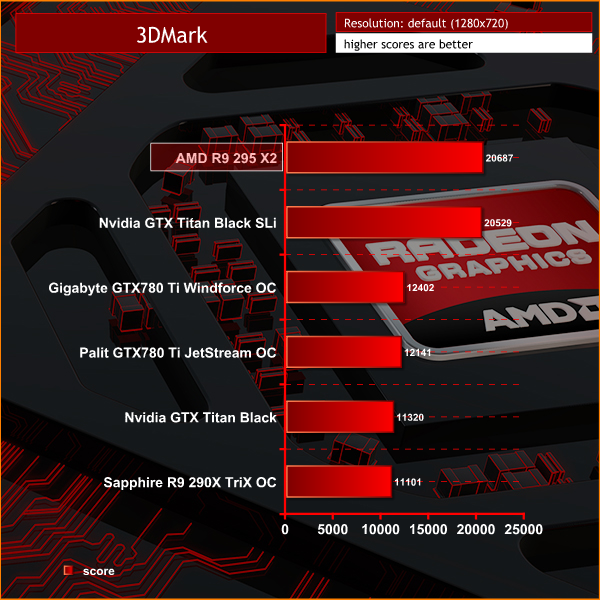

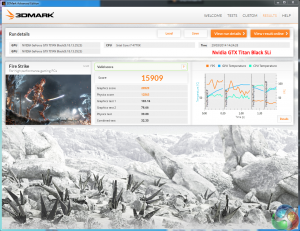

3DMark is an essential tool used by millions of gamers, hundreds of hardware review sites and many of the world’s leading manufacturers to measure PC gaming performance.

Futuremark say “Use it to test your PC’s limits and measure the impact of overclocking and tweaking your system. Search our massive results database and see how your PC compares or just admire the graphics and wonder why all PC games don’t look this good.

To get more out of your PC, put 3DMark in your PC.”

The latest Direct X 11 Futuremark benchmark shows how close it is between the AMD R9 295 X2 and the GTX Titan Black cards in SLi. The AMD card tops the chart however.

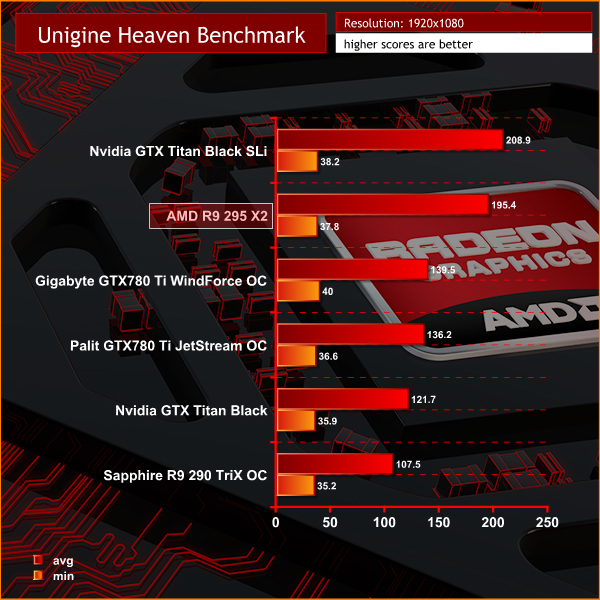

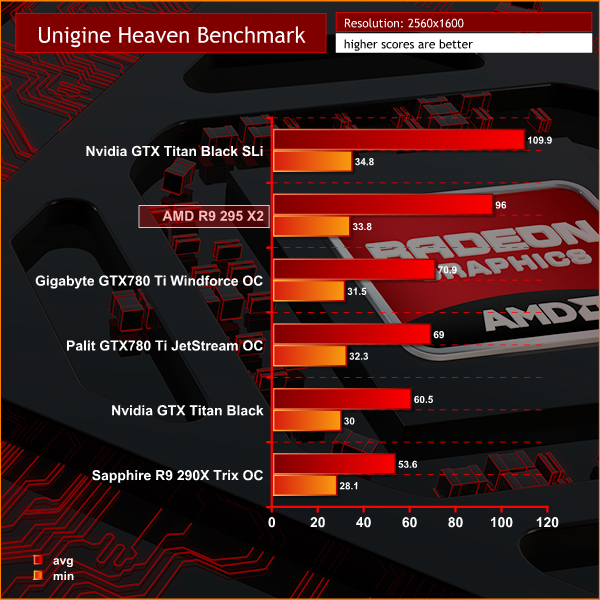

Unigine provides an interesting way to test hardware. It can be easily adapted to various projects due to its elaborated software design and flexible toolset.

A lot of their customers claim that they have never seen such extremely-effective code, which is so easy to understand.

Heaven Benchmark is a DirectX 11 GPU benchmark based on advanced Unigine engine from Unigine Corp. It reveals the enchanting magic of floating islands with a tiny village hidden in the cloudy skies. Interactive mode provides emerging experience of exploring the intricate world of steampunk.

Efficient and well-architected framework makes Unigine highly scalable:

- Multiple API (DirectX 9 / DirectX 10 / DirectX 11 / OpenGL) render

- Cross-platform: MS Windows (XP, Vista, Windows 7) / Linux

- Full support of 32bit and 64bit systems

- Multicore CPU support

- Little / big endian support (ready for game consoles)

- Powerful C++ API

- Comprehensive performance profiling system

- Flexible XML-based data structures

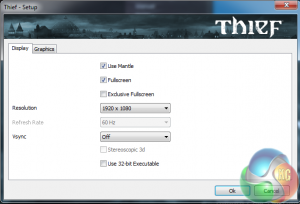

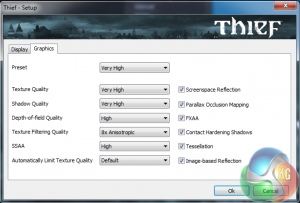

We use the settings shown above at 1920×1080 and 2560×1600.

The Nvidia GTX Titan Black cards in SLi perform better in this benchmark, both at 1080p and 1600p.

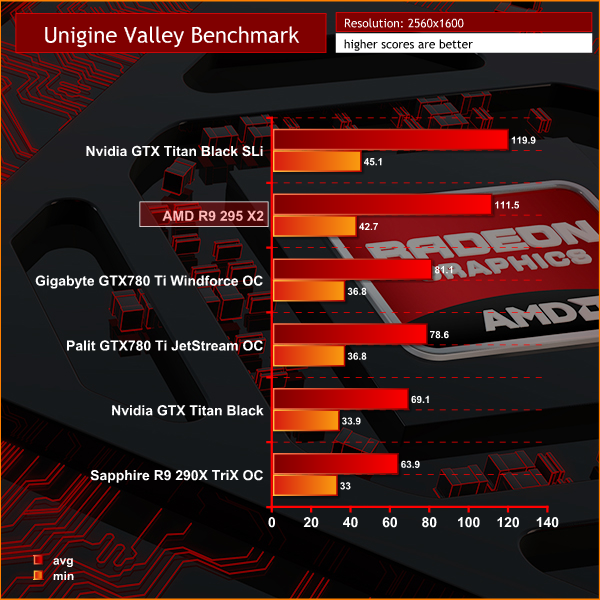

Valley Benchmark is a new GPU stress-testing tool from the developers of the very popular and highly acclaimed Heaven Benchmark. The forest-covered valley surrounded by vast mountains amazes with its scale from a bird’s-eye view and is extremely detailed down to every leaf and flower petal. This non-synthetic benchmark powered by the state-of-the art UNIGINE Engine showcases a comprehensive set of cutting-edge graphics technologies with a dynamic environment and fully interactive modes available to the end user.

We test with the settings above both at 1920×1080 and 2560×1600.

The AMD R9 295 X2 and the Titan Black cards in SLi are very closely matched, although the Nvidia cards have a slight performance edge in this benchmark at both resolutions.

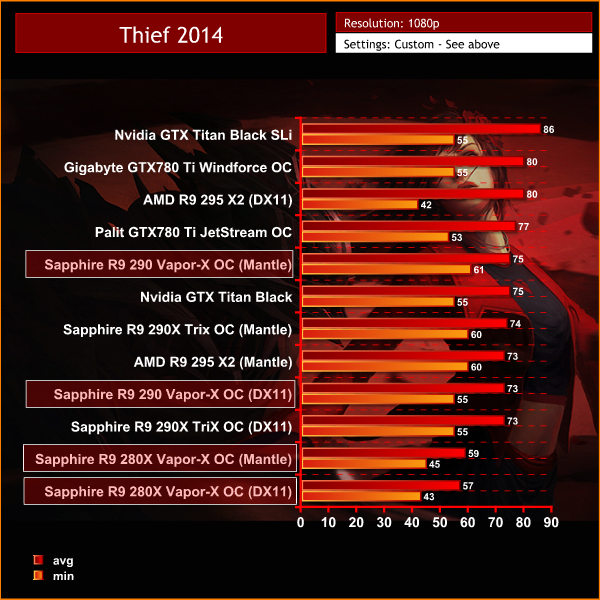

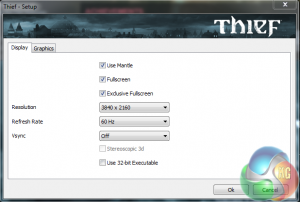

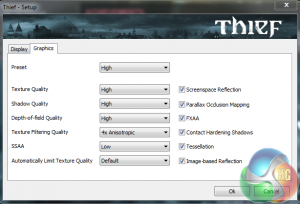

In Thief 2014 players control Garrett, a master thief, as he intends to steal from the rich. Similar to previous games in the series, players must use stealth in order to overcome challenges, while violence is left as a minimally effective last resort.

We test at 1080p, both with Direct X rendering (Nvidia and AMD) and Mantle rendering (AMD only).

Mantle rendering is not yet Crossfire supported as we can see from the results. To get the most from the AMD R9 295 X2 we need to use Direct X rendering. We can see we are CPU limited at this resolution as the results are so closely matched.

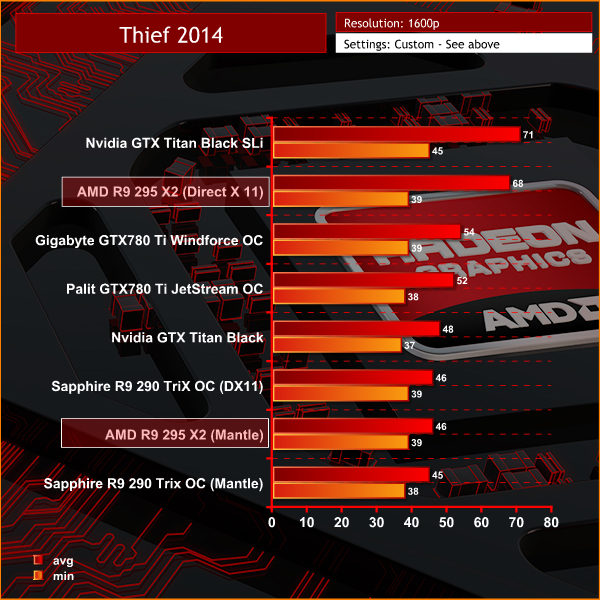

We test at 1600p, both with Direct X rendering (Nvidia and AMD) and Mantle rendering (AMD only).

CPU limiting is less prevalent at 1600p and we can see the R9 295 X2 and Titan Black cards in SLi have a clear performance edge. As we mentioned on the last page, Mantle rendering is not yet optimised for Crossfire.

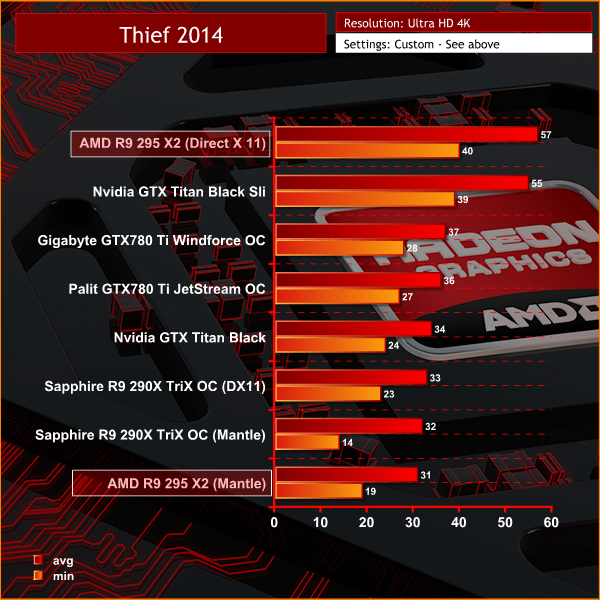

We test at Ultra HD 4k with the HIGH preset, both with Direct X rendering (Nvidia and AMD) and Mantle rendering (AMD only).

If you want to play Thief 2014 at Ultra HD 4K then the clear choices are the AMD R9 295 X2 and the Nvidia Titan Black cards in SLi. The Titan Black cards in SLi led the way at 1600p, but the AMD R9 295 X2 takes top position at Ultra HD 4K.

Mantle rendering is not yet optimised for Crossfire. Interestingly we noticed some significant frame rate drops from the Sapphire R9 290X TriX OC solution when using AMD's Mantle rendering.

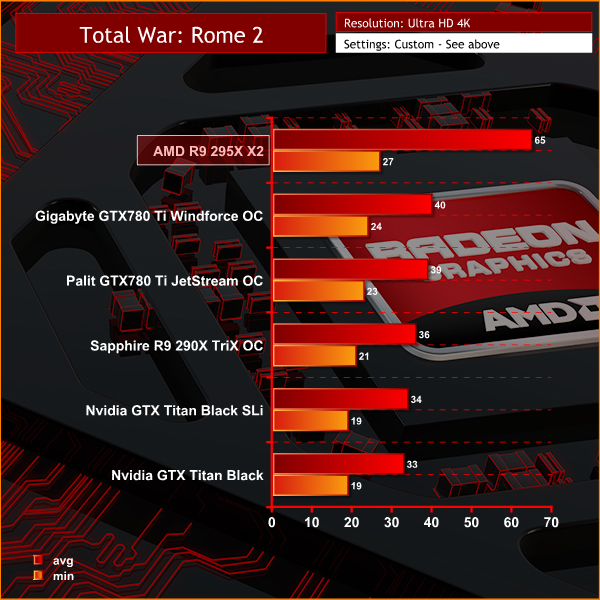

Total War ROME 2 is the eighth stand alone game in the Total War series and the successor to the successful Rome: Total War title. The Warscape Engine powers the visuals of the game and the new unit cameras will allow players to focus on individual soldiers on the battlefield, which in itself may contain thousands of combatants at a time.

Creative Assembly has stated that they wish to bring out the more human side of war this way, with soldiers reacting with horror as their comrades get killed around them and officers inspiring their men with heroic speeches before siege towers hit the walls of the enemy city. This will be realised using facial animations for individual units, adding a feel of horror and realism to the battles.

We test at 1080p with the image quality settings maxed out – at the ‘Extreme' preset.

We couldn't get SLi to work properly with ROME 2, and the SLi Titan Black cards are no faster than the single card. Even with some minor CPU limiting we can see just how far ahead the AMD R9 295 X2 card is in this particular game at 1080p.

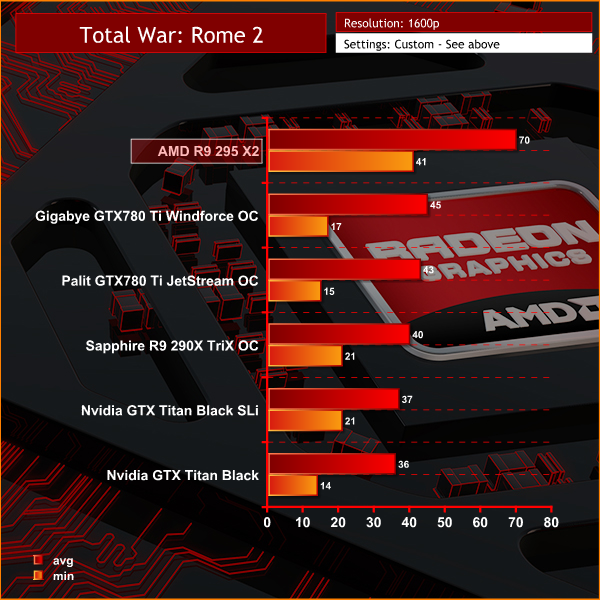

We test at 1600p with the image quality settings maxed out – at the ‘Extreme' preset.

Without a functioning SLi profile, the AMD R9 295 X2 takes top position by a clear margin and is the only solution perfectly playable at the maxed out settings.

We test at Ultra HD 4K resolutions with the ULTRA preset.

The AMD R9 295 X2 wins the Ultra HD 4K test by a clear margin, averaging 65 frames per second. The minimum frame rate was generally quite smooth, although we noticed a few drops just below 30 frames per second.

The AMD R9 295 X2 was the only solution we tested with Rome 2 that was perfectly playable at Ultra HD 4K with these very high image quality settings.

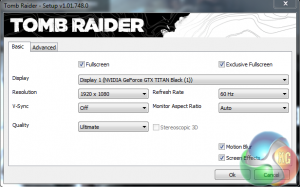

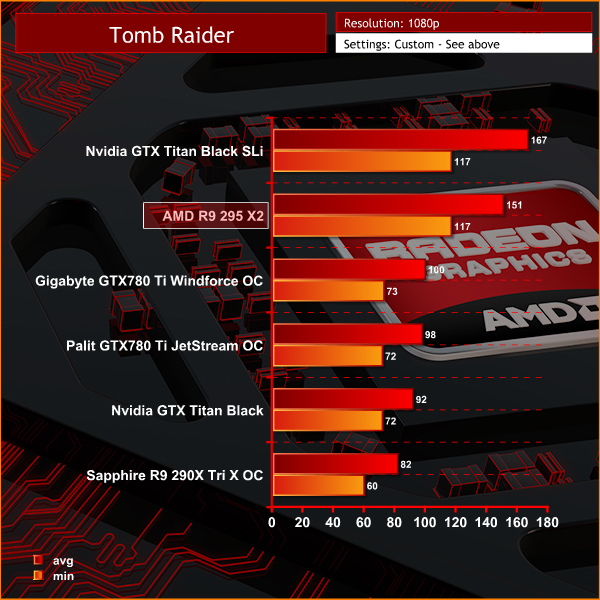

Tomb Raider received much acclaim from critics, who praised the graphics, the gameplay and Camilla Luddington’s performance as Lara with many critics agreeing that the game is a solid and much needed reboot of the franchise. Much criticism went to the addition of the multiplayer which many felt was unnecessary. Tomb Raider went on to sell one million copies in forty-eight hours of its release, and has sold 3.4 million copies worldwide so far.

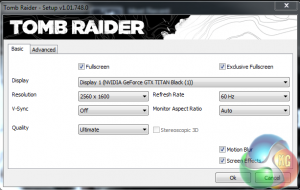

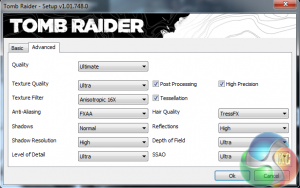

We use the ULTIMATE profile, as shown above. We want the best image quality possible.

The Titan Black cards in SLi claim the top performance position at 1080p, although the AMD R9 295 X2 matches the minimum frame rate figure of 117 frames per second.

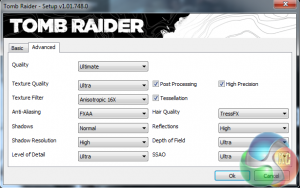

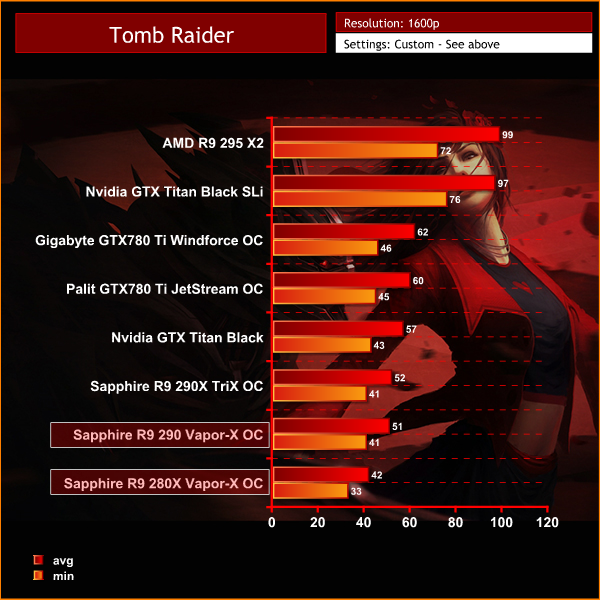

We use the ULTIMATE profile at 2560×1600, as shown above.

While the GTX Titan Black in SLi held the top position at 1080p, the position is reversed at 1600p. The AMD R9 295 X2 takes top position, averaging 99 frames per second. The GTX Titan Black in SLi do maintain a slightly better minimum frame rate however.

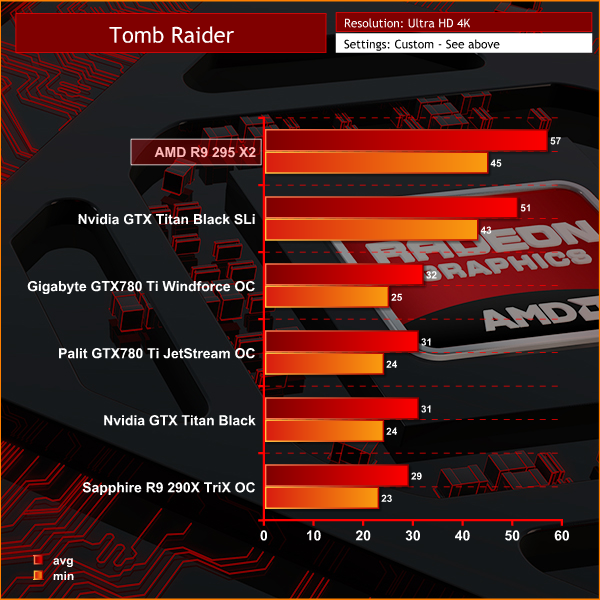

We switched over to the ASUS Ultra HD 4K monitor and set to the ULTIMATE profile for maximum image quality.

As we increase the resolution to Ultra HD 4K, we can see the AMD R9 295 X2 pulls away a little from the GTX Titan Black cards in SLi. There is around a 6 frame difference in favour of the AMD solution.

Metro: Last Light takes place one year after the events of Metro 2033, proceeding from the ending where Artyom chose to call down the missile strike on the Dark Ones. The Rangers have since occupied the D6 military facility, with Artyom having become an official member of the group. Khan, the nomad mystic, arrives at D6 to inform Artyom and the Rangers that a single Dark One survived the missile strike.

4A Games’ proprietary 4A Engine is capable of rendering breathtaking vistas, such as those showing the ruined remnants of Moscow, as well as immersive indoor areas that play with light and shadow, creating hauntingly beautiful scenes akin to those from modern-day photos of Pripyat’s abandoned factories and schools.

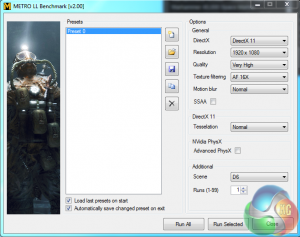

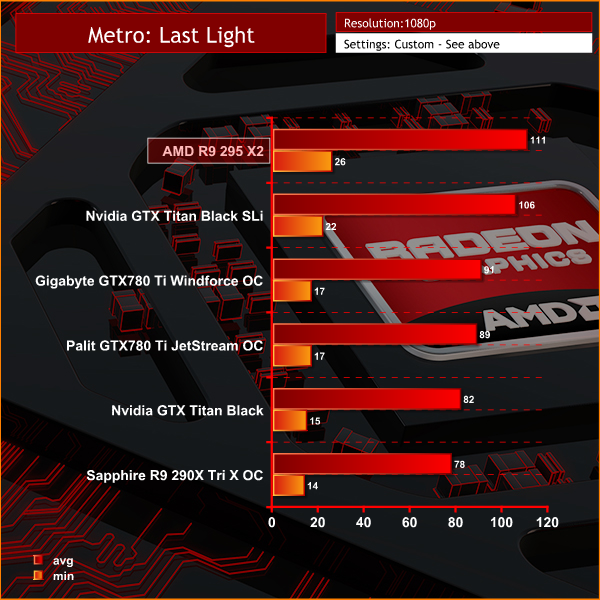

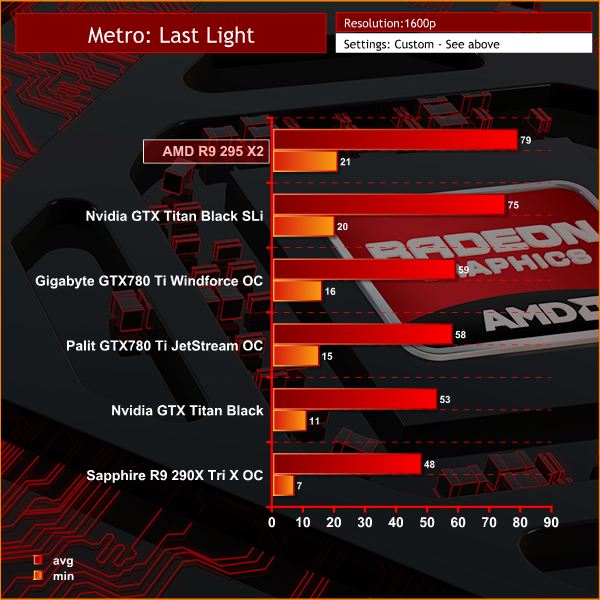

We tested this particular game with the extremely demanding built in benchmark. Settings detailed above. Direct X 11 mode, Quality is set at Very High, 16 AF, normal Motion blur, Tessellation Normal, Advanced PhysX disabled and SSAA disabled.

This is a very demanding engine at these settings, and the Nvidia GTX Titan Black cards in SLi show the other cards a clean pair of heels, even at 1080p. The AMD R9 295 X2 however claims top position, averaging 111 frames per second.

We tested this particular game with the extremely demanding built in benchmark. Settings detailed above. Direct X 11 mode, Quality is set at Very High, 16 AF, normal Motion blur, Tessellation Normal, Advanced PhysX disabled and SSAA disabled.

Again, the AMD R9 295 X2 claims top position, averaging 79 frames per second.

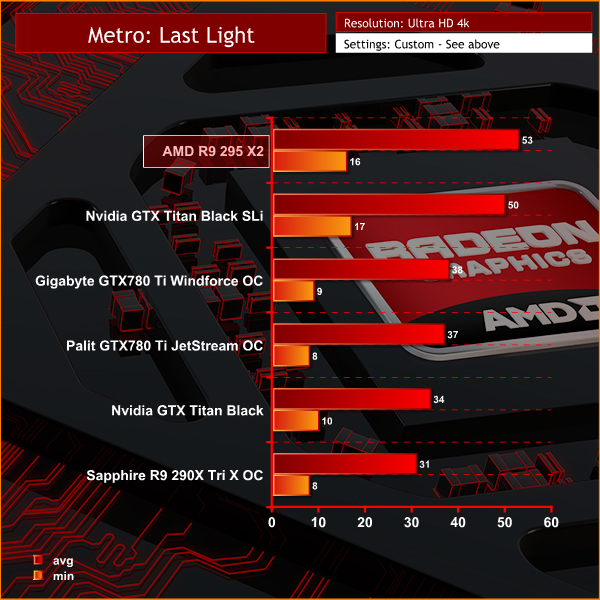

We tested this particular game with the extremely demanding built in benchmark. Settings detailed above. Direct X 11 mode, Quality is set at High, 16 AF, normal Motion blur, Tessellation Normal, Advanced PhysX disabled and SSAA disabled.

A close run battle for the top position, with the AMD R9 295 X2 managing a minor performance edge.

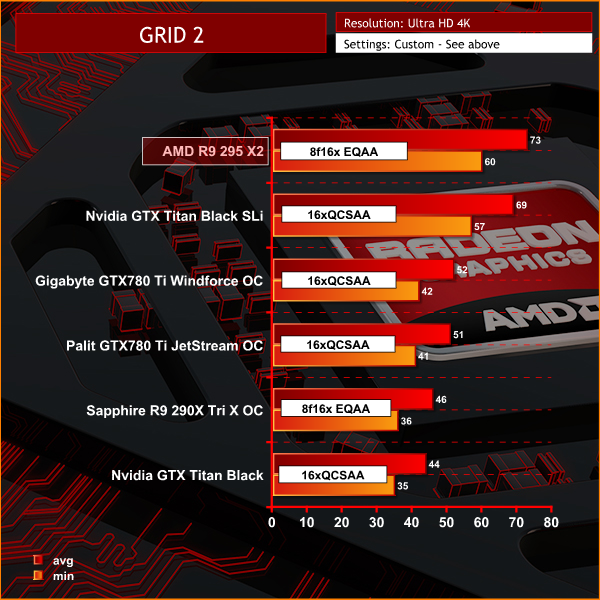

Grid 2 is the sequel to the racing video game Race Driver: Grid. It was developed and published by Codemasters. The game includes numerous real world locations such as Paris, numerous United States locations, and many more, and also includes motor vehicles spanning four decades. In addition, it includes a new handling system that developer Codemasters has dubbed ‘TrueFeel’, which aims to hit a sweet spot between realism and accessibility.

We test at the maximum image quality settings, which differ between AMD and Nvidia cards. The Nvidia hardware is tested at 16xQCSAA and the AMD hardware is tested at 8f16x EQAA.

The results below therefore are NOT directly comparable. We wanted to show performance from both AMD and NVIDIA solutions in GRID 2 when maximising the image quality settings.

There is no doubt that the AMD R9 295 X2 gives the best experience in GRID 2 at Ultra HD 4K. Even though the IQ settings are inherently different due to Nvidia and AMD driver configurations, you would be hard pressed to notice any ‘real world' image quality differences between the AMD R9 295 X2 and the Nvidia GTX Titan Black cards running in SLi.

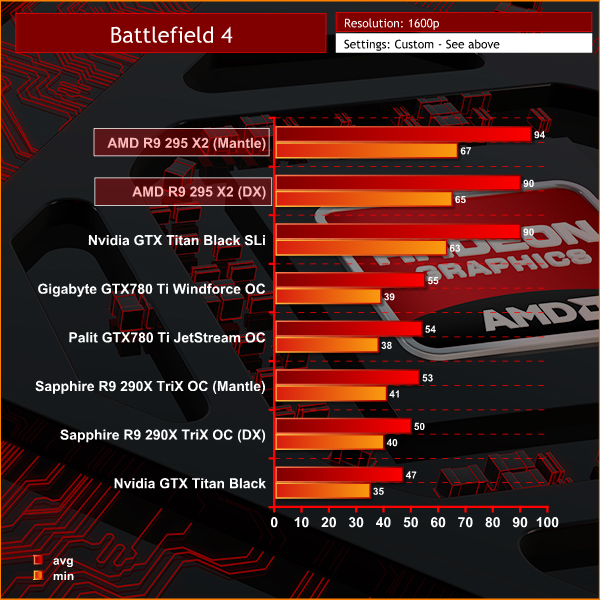

The AMD R9 295 X2 is the only solution on test today that can maintain a constant 60 frames per second or greater at Ultra HD 4K with the highest image quality settings possible.

Battlefield 4 (also known as BF4) is a first-person shooter video game developed by EA Digital Illusions CE (DICE) and published by Electronic Arts. The game is a sequel to 2011's Battlefield 3. Battlefield 4 is built on the new Frostbite 3 engine. The new Frostbite engine enables more realistic environments with higher resolution textures and particle effects. A new “networked water” system is also being introduced, allowing all players in the game to see the same wave at the same time. Tessellation has also been overhauled.

We test at 2560×1600 with the image quality settings on the ‘ULTRA' preset. We test the AMD hardware with both Direct X and AMD's Mantle rendering. Nvidia hardware is tested with Direct X rendering.

The AMD R9 295 X2 takes the top position at 1600p, just ousting the SLI'd GTX Titan Black cards. MANTLE rendering scored a little higher than the Direct X rendering mode.

We test at Ultra HD 4K with the HIGH Image preset.

The AMD R9 295 X2 scores at the top of the chart, averaging between 80 and 82 frames per second. Mantle rendering scored a little higher than the DirectX rendering mode.

We have built a system inside a Lian Li chassis with no case fans and have used a fanless cooler on our CPU. The motherboard is also passively cooled. This gives us a build with almost completely passive cooling and it means we can measure noise of just the graphics card inside the system when we run looped 3DMark tests.

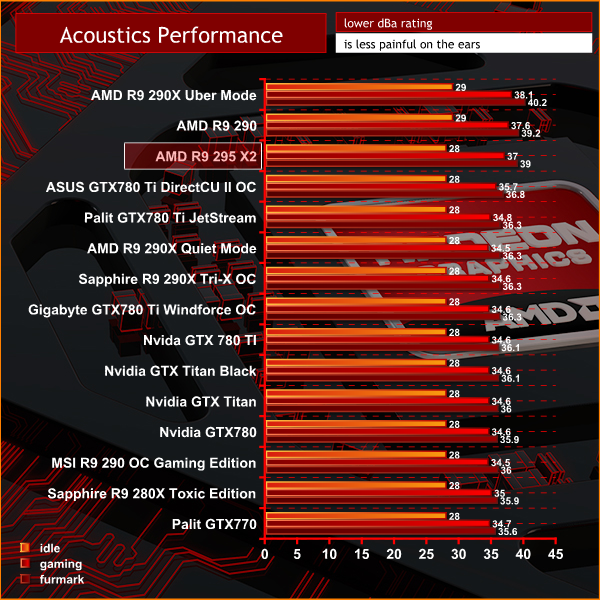

We measure from a distance of around 1 meter from the closed chassis and 4 feet from the ground to mirror a real world situation. Ambient noise in the room measures 28dBA, close to the lower limit of our sound meter. Measuring with a case panel off, only a few centimeters from a video card, does not reflect real world use. Our noise figures may therefore be lower than those from other publications that record at closer distances, or without a fully closed case muting the noise.

Why do this? Well this means we can eliminate secondary noise pollution in the test room and concentrate on only the video card. It also brings us slightly closer to industry standards, such as DIN 45635.

KitGuru noise guide

10dBA – Normal Breathing/Rustling Leaves

20-25dBA – Whisper

30dBA – High Quality Computer fan

40dBA – A Bubbling Brook, or a Refrigerator

50dBA – Normal Conversation

60dBA – Laughter

70dBA – Vacuum Cleaner or Hairdryer

80dBA – City Traffic or a Garbage Disposal

90dBA – Motorcycle or Lawnmower

100dBA – MP3 player at maximum output

110dBA – Orchestra

120dBA – Front row rock concert/Jet Engine

130dBA – Threshold of Pain

140dBA – Military Jet takeoff/Gunshot (close range)

160dBA – Instant Perforation of eardrum

As we mentioned earlier in the review, AMD didn't opt to use a 240mm radiator with dual fans, instead using a single fan on a 120mm radiator. Under extended load the water heats up and the fan has to work harder. After 1 hour of gaming, noise levels measured 37dBA. This is clearly noticeable and far from quiet.

We do think AMD should have opted for a larger radiator to cool the dual cores. The single 120mm radiator does seem to struggle under extended load situations.
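Because decibels are logarithmic, the 9dBA gap between the 28dBA room and the 37dBA load reading is larger than it may look. As a rough illustration only (this is not part of our test procedure), the sketch below converts that gap into sound power and sound pressure ratios, and applies the standard energy-subtraction formula to estimate the card's own contribution. The function names are hypothetical, and since 28dBA sits close to the floor of the meter, the corrected figure should be treated as approximate.

```python
import math

AMBIENT_DBA = 28.0  # room noise floor quoted in this review
LOADED_DBA = 37.0   # reading after 1 hour of gaming, quoted in this review


def power_ratio(delta_db: float) -> float:
    """Sound power ratio that corresponds to a level difference in dB."""
    return 10 ** (delta_db / 10)


def pressure_ratio(delta_db: float) -> float:
    """Sound pressure ratio that corresponds to a level difference in dB."""
    return 10 ** (delta_db / 20)


def subtract_background(total_db: float, background_db: float) -> float:
    """Estimate the level of a source alone by removing background energy."""
    if total_db <= background_db:
        raise ValueError("total level must exceed the background level")
    source_energy = 10 ** (total_db / 10) - 10 ** (background_db / 10)
    return 10 * math.log10(source_energy)


delta = LOADED_DBA - AMBIENT_DBA
card_only = subtract_background(LOADED_DBA, AMBIENT_DBA)

print(f"Level difference:      {delta:.1f} dB")
print(f"Sound power ratio:     {power_ratio(delta):.2f}x")
print(f"Sound pressure ratio:  {pressure_ratio(delta):.2f}x")
print(f"Card alone (estimate): {card_only:.1f} dBA")
```

With these inputs the card alone works out at roughly 36.4dBA, so the room itself contributes very little to the 37dBA reading.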

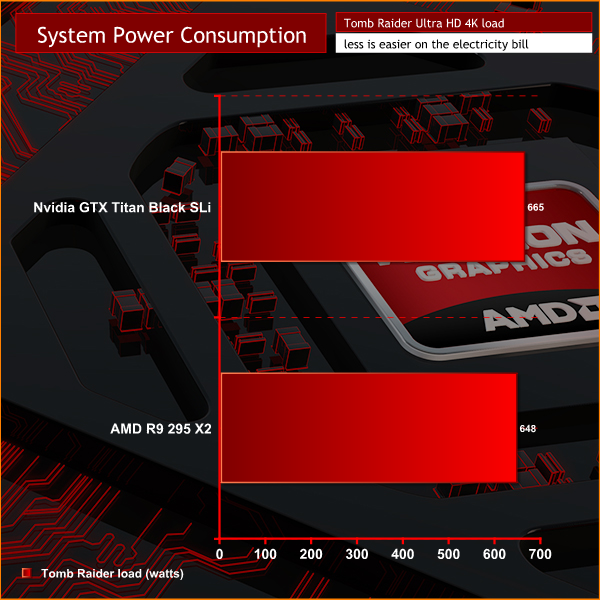

To test power consumption today we are measuring power demand system wide. We measure results while gaming in Tomb Raider at Ultra HD 4K.

The R9 295 X2 adopts ZeroCore Power technology. This can completely power down the GPU core while the rest of the system is active.

When both GPUs on the R9 295 X2 are in ZeroCore Power mode the component fan in the center of the graphics card will stop spinning. The radiator fan however will continue to run.

AMD have two green LEDs on the back of the card so you can check the status of this feature. When either GPU is in ZeroCore Power mode the corresponding LED will light up.

Both systems place a huge load on a power supply. When loaded with Tomb Raider, the AMD R9 295 X2 system demands between 645 and 655 watts at the socket. The GTX Titan Black cards in SLI take slightly more combined, around 15 watts more on average.

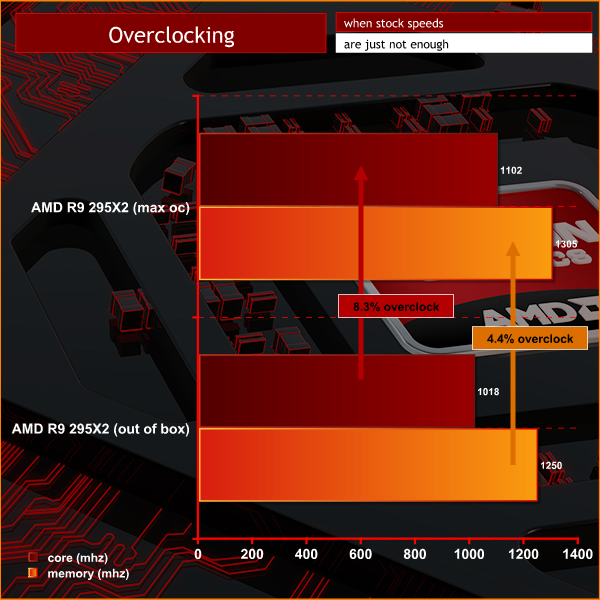

To overclock the R9 295X2 today, we use Catalyst Control Center with the latest beta drivers specifically designed for the hardware.

The 120mm radiator and fan have to work hard to keep temperatures in check. That said, there is some headroom available – we managed to overclock the cores to 1,102MHz before the system would freeze under load. This translates into a clock increase of around 8 percent.

The clock increase gave another 1,223 points in 3DMark 11, taking the final graphics score to 30,153 points.
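The overclocking figures can be sanity-checked with some simple arithmetic. The sketch below assumes AMD's stated 1,018MHz engine clock for the R9 295X2 as the starting point (that figure is an assumption here, as it is not quoted in this section) and derives the stock 3DMark 11 graphics score from the two numbers given above.

```python
STOCK_CLOCK_MHZ = 1018  # assumption: AMD's reference engine clock for the card
OC_CLOCK_MHZ = 1102     # overclock reached in this review
OC_SCORE = 30153        # 3DMark 11 graphics score after overclocking
SCORE_GAIN = 1223       # points gained from the overclock


def percent_gain(before: float, after: float) -> float:
    """Percentage increase from `before` to `after`."""
    return (after - before) / before * 100


# The stock score is implied by the final score and the quoted gain.
stock_score = OC_SCORE - SCORE_GAIN

clock_gain = percent_gain(STOCK_CLOCK_MHZ, OC_CLOCK_MHZ)
score_gain = percent_gain(stock_score, OC_SCORE)

print(f"Implied stock score: {stock_score}")
print(f"Clock increase:      {clock_gain:.1f}%")
print(f"Score increase:      {score_gain:.1f}%")
print(f"Scaling efficiency:  {score_gain / clock_gain:.2f}")
```

Under that assumption the clock rises by about 8.3 percent while the graphics score rises by about 4.2 percent, so the score scales at roughly half the rate of the clock increase.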

KitGuru was one of the first tech publications to regularly incorporate Ultra HD 4K testing into high end graphics card reviews last year. When you see titles such as Battlefield 4, Tomb Raider, Thief 2014 and Metro Last Light running at 3840×2160 there really is no going back.

Even though we have been using the (£2,300) flagship Asus PQ321QE monitor, we did say that we should see cost effective panels hit the market in 2014. It actually happened sooner than we predicted; if you check leading etailers such as Overclockers UK, there are pre orders in place for 60Hz 4K screens at only £500.

While Ultra HD 4K screens are quickly becoming affordable, it does take an extremely powerful graphics solution to maintain smooth frame rates at the native 3,840 x 2,160 resolution. A single GTX 780 Ti or R9 290X has proven powerful enough to drive many titles at 4K, however there are a handful of DirectX 11 games which can bring a single card to its knees.

Nvidia's recent announcement of the $3,000 Titan Z has caused much debate among our readership. This monster card is likely to cost around £2,200 in the UK and clearly targets the professional sector. The combination of two Titan Black solutions on a single PCB will surely appeal to a small audience of wealthy gamers who simply must own the most powerful, cutting edge technology. Nvidia may say that the Titan series of cards has always targeted the professional sector – but it hasn't stopped gamers buying them en masse.

We don't have Nvidia's Titan Z in hand yet, but we did the next best thing – we SLI'd two GTX Titan Black cards. In all of the games we tested at Ultra HD 4K, the AMD R9 295X2 was the performance leader.

AMD have struggled in recent years to develop a powerful, quiet reference cooling system. We have mentioned this in previous reviews, such as the launch article for the R9 290X back in October last year. This hot running card delivered plenty of performance, but it also emitted high noise under load and had a nasty tendency to downclock while trying to maintain the default 95°C temperature profile.

AMD may have scraped through with a frugal reference air cooler fitted to the R9 290X, but the architecture of the R9 295X2 would insist on a more substantial cooling system. AMD decided to work with Asetek on the ‘reference' watercooler for the R9 295X2 – and, for the most part, it should be considered a success.

The R9 295X2 ships with a small 120mm radiator and single fan mounted to drive cool air through the radiator and outside the case. I have to admit I was quite surprised AMD and Asetek didn't opt for a 240mm radiator with dual fans. After a long gaming session, the hoses get very warm to the touch and the single 120mm fan spins very fast to compensate for slowly increasing water temperatures.

While I am actually quite surprised the cooler works as well as it does, I feel a 240mm radiator with dual fans would have been a more sensible choice for AMD. The larger radiator would have been able to remove the heat more quickly, and with another fan in place, both could have been set to rotate more slowly. Less noise and better cooling performance: a win for everyone.

Playing devil's advocate, I do somewhat understand AMD's reasoning behind the adoption of a 120mm radiator.

An enthusiast user contemplating an R9 295X2 is likely to already own a high end, hot running Intel i7 processor. Corsair (among others) have a range of highly successful all in one liquid coolers available such as the H100i, H105 and H110 which adopt 240mm and 280mm radiators. The majority of chassis only have space for one of these radiators … so finding a free position to mount the R9 295X2 cooling system may have proven difficult, if not impossible. The very small audience considering two R9 295X2 cards for Quad Crossfire would have had to get very creative.

As already mentioned, Nvidia have said their upcoming Titan Z will cost $3,000. When we factor in 20% VAT, this takes the price to around £2,200 in the UK. AMD will be selling the R9 295X2 for €1,099. We would expect to see the R9 295X2 in the UK for between £900 and £1,000. At this extremely competitive price point, I can overlook my minor concerns with the adoption of a small 120mm radiator and slightly higher than desired noise emissions.
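The UK price estimate for the Titan Z can be reproduced with a quick calculation. The sketch below is illustrative only: the exchange rate of 1.67 US dollars to the pound is an assumption chosen as an approximate figure, it also assumes the $3,000 price excludes sales tax, and the helper name is hypothetical.

```python
USD_PER_GBP = 1.67  # assumption: approximate exchange rate, not from the review
UK_VAT = 0.20       # 20% VAT, as stated in the review


def usd_to_gbp_inc_vat(usd: float,
                       usd_per_gbp: float = USD_PER_GBP,
                       vat: float = UK_VAT) -> float:
    """Convert a pre-tax US dollar price to a UK price including VAT."""
    return usd * (1 + vat) / usd_per_gbp


titan_z_gbp = usd_to_gbp_inc_vat(3000)
print(f"Estimated Titan Z UK price: £{titan_z_gbp:,.0f}")

# Compare against the expected UK price range for the R9 295X2.
for r9_295x2_gbp in (900, 1000):
    share = r9_295x2_gbp / titan_z_gbp * 100
    print(f"R9 295X2 at £{r9_295x2_gbp}: {share:.0f}% of the Titan Z estimate")
```

With that assumed rate the Titan Z lands a little under £2,200, and the R9 295X2 at £900 to £1,000 comes in at less than half of that figure.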

AMD crafted the R9 295X2 specifically for Ultra HD 4K gaming, and based on our findings there is no doubt they have released the world's fastest graphics card.

Pros:

- Fastest graphics card that money can buy.

- Looks set to be at least half the price of the Titan Z from Nvidia.

- Ultra HD 4K leader.

- We love the flight case.

- No maintenance watercooling system.

Cons:

- Can get quite loud under load when the water heats up.

- Smallish cooling system has to work hard.

- 240mm radiator and dual fans would have performed better.

- Needs a beefy, high quality power supply.

KitGuru says: Look up the word ‘performance’ in the Oxford English Dictionary and you will see the description ‘AMD R9 295X2’. Testing at Ultra HD 4K resolutions, AMD's R9 295X2 beat a pair of Titan Black Edition cards in every test… It is the 4K champion.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

best review of the hardware online today ! what an awesome board, and great price from AMD too!

that is a beast – im impressed with that even though I do prefer Nvidias drivers. It actually shows how much overpriced the titan Z will be.

That said, nvidia wouldn’t have to watercool their card, and they aren’t. I just hope its not too loud.

I love the looks!, can you add your own fans to the card or is the AMD fan specifically designed for the right RPM profile?

Bleh, Nvidia Z Titan will be better than this – its too much heat for a GPU, the case will get warm inside.

Well colour me impressed with this – its a monster card, wouldn’t fit in my case! Its cool to see companies doing stuff like this, even if we can’t afford it. I know I can’t afford it

Its not that expensive. two 290xs are around £900, and this has a better cooler and its water.

AMD need a pat on the back for this one.

£1000 is a bargain for this. I want one.

Im ordering one too when its out, I have a new 1000W PSU and am selling my 780. Just got a new Dell 4k screen. I am not paying over £2,000 for a Titan Z.

thats a lot of testing zardon, have you slept much in the last week ? i guess not.

Its a good card and you show it in a good light. Its basically two overclocked R9 290Xs with a watercooler, for the same price as two crappy reference boards. Hard to fault it.

AMD are hard to beat on price, Nvidia must be kicking themselves.

wow what a lot of AMD fanboys here getting their panties in a twist.

You do know that Nvidia could easily sell the titan Z with the cores at 1.05ghz right? over the reference 890mhz of the standard titan black. that would beat this based on the results i see.

Its a good price, the power demands are high, not exactly a single GPU everyone should just rush out and buy.

Good price, ill agree with everyone, but I think it needs a 240mm radiator. my mate is buying one, but will strip it down and add his own watercooling. so he says.

how much in america? $1500 ?

I think most guys will remove the cooler and use their own watercooling. I think its a pretty stupid idea to use a single 120mm watercooler. a 4770k runs much cooler with a dual radiator and thats probably not putting out as much heat as one 290X core, nevermind 2.

120mm radiator? is this a joke? what a way to f*ck up a good card. might still buy one and cool it properly! surprised ASETEK gave that the OK.

Not available anywhere, another paper launch from AMD. way to go.

I had wondered why the nvidia side was so low compared to other review sites. Your OC settings are terribly low, and your drivers are not up to date for both cards. If you are going to use beta drivers for one side, you should probably stick to the most recent beta drivers for the other side as well (i realize you may have done much of your testing before they came out, as they only came out yesterday for nvidia).

I would like to see some benchmark videos on this card, as i really am having troubles imagining a dual card performing better than two single cards. I’ve noticed this kind of pattern in previous reviews on this site and on anandtech, and guess i will just have to wait until more reviewers have their numbers out. Gratz on being one of the first though. Im willing to bet that sli 780 properly overclocked will hold up to this, but obviously that is yet to be tested.

@ BRANTYN GERIK. Why would they overclock the GTX Titan cards as a ‘standardised’ SLI test? they were SLi’d as they are sold at 890mhz (889mhz). The R9 295X2 wasn’t tested overclocked either throughout the review. Both solutions tested as sold.

The latest Nvidia drivers were released yesterday afternoon. I would imagine this review was started a week ago. they only change results by 2-5% in most instances anyway. (I have a GTX780 Ti).

I have the new nvidia drivers, they help my Titan performance in some games by around 5%, so I would imagine the performance after using this driver would be very close. I wouldn’t expect this review to use them though, Nvidia only released them yesterday and I would guess this review took about a week.

I prefer Nvidia and wouldn’t own this R9 295X2, but I think its a good idea to point out that AMD are likely to improve performance soon too, as this is the first driver for the card.

I am no fan boy, best card for the price, gets my money.

Titan Z has a lot to do now to justify that $3,000.

It just goes to show how much heat those AMD cards are putting out.

Nvidia Z? single fan in the middle. AMD? 120mm radiator, water, two pumps, fan also in the middle, still gets too hot.

240mm radiator needed. they dropped the ball.

I think this is a brilliant card for the money, better than Nvidia’s, ASUS ARES, MARS etc and watercooled.

Great looking card too and well priced. I just wish they had gone for a larger radiator, my case can take two 240mms 🙁 Have you tried changing the fan and adding two quiet fans like the sharkoon dead silence zardon?

So many stupid people. Titan Z is NOT a gaming card! Its not meant to be for this audience. who the hell would spend $3,000 to play games?

@Slashwat. What are you talking about? of course people will buy the Titan Z for gaming. I own a Titan and I know the 780Ti is cheaper and just as good or even faster depending on the cooler you get.

TONS of people are buying Titans to game, probably more than those buying it to develop CUDA on. The Titan Z will be bought by very rich gamers and probably in pairs too. Nvidia know this, but get around the price with the whole double precision thing.

People who say Titan cards are not sold for gaming are so naive and reading the nvidia playbook. people want more than 3GB of memory and the status associated with using the best. Whether you like it, or even if it makes sense, doesnt matter – its true.

I think it’s time for AMD to evolve their GPU with customizable cooler. This will unlock better option in performance cooling & OC headroom