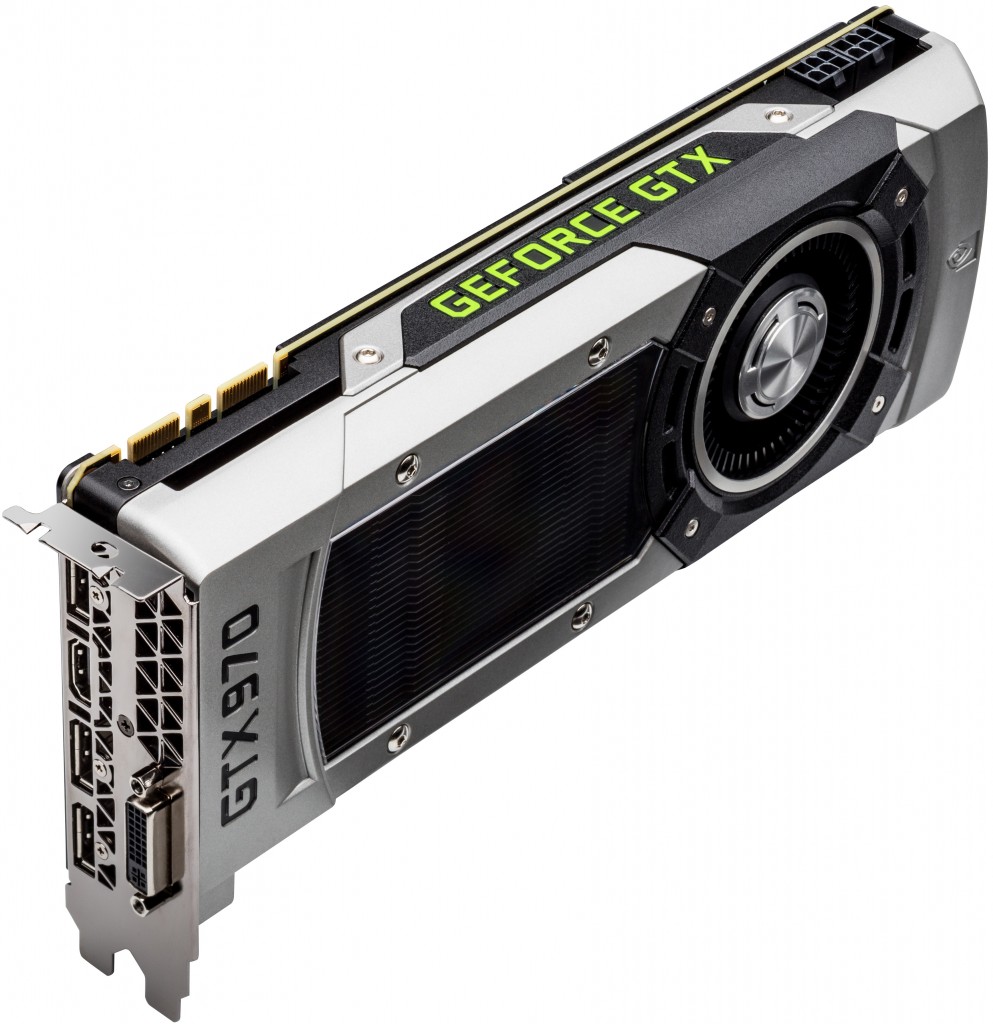

Although Nvidia Corp.’s partners will clearly suffer financial losses because of the scandal over incorrect specifications of the GeForce GTX 970, the designer of graphics processing units does not plan to admit its mistake at any cost, according to a media report.

Even though return rates of the GeForce GTX 970 are very low, Nvidia does not want to take responsibility for incorrect specifications and memory allocation issues. The company officially claims that the GeForce GTX 970 was designed this way and that its performance is rather high despite the fact that it has 56 raster operations pipelines, a 224-bit memory bus and 3.5GB of memory.

Nvidia has not apologized to its customers for incorrect specifications and does not want to admit any guilt because this would give its partners an opportunity to ask for material compensation, reports Heise.de. Profit margins of graphics cards makers are pretty low, which means that for them even a 1 – 5 per cent return rate could result in losses.

Since Nvidia does not want to admit its guilt, it is illogical to expect any kind of compensation for end-users.

Nvidia did not comment on the news story.

KitGuru Says: While performance of the GeForce GTX 970 is pretty high and is very good for the money, Nvidia’s behaviour and arrogance greatly damage the company’s reputation.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Way to court a class action suit.

I don’t understand what you mean in this case: “admitting guilt”? Nvidia has already admitted guilt. They admitted that they accidentally gave wrong specifications to review sites upon release of the card. If there is something more you are accusing them of being guilty of it’s not clear to me from reading the article what it is.

They already did? For god’s sake, none of this horseshit changes the 970’s benchmarks..

Benchmarks that often used only a single 970… A single 970 is not as likely to reach the 3.5GB limit due to computational limits, but when you SLI two 970s, you are very likely to be able to up the settings to the point that the slower 0.5GB segment will be used resulting in stuttering and thus a bad experience. This should not have happened if the memory configuration was as they said.

And again: they advertised a 4GB card at 224GB/s. The segmented memory should have been mentioned at the least. Assuming that all buyers only buy cards based on the average fps shown in benchmarks and not the actual specifications of the hardware is not very professional imho.

GTX 970 is a good card for the now but not a year on. Games progressively demand more and more vram and for those of us who look for the truly immersive titles on the horizon, we won’t bother with a 970.

It doesn’t really stutter that bad, do you own a 970? I do, and I’ve been pushing nearly every game to its limit and I know darn well I’ve gone past 3.5GB and I haven’t had any stuttering.. also, why would you SLI two 970s? If you have that much money you might as well spend more and SLI more powerful cards. You can easily push past the 3.5 limit, I know first hand.

I am one of the people who have SLIed 970s (and going on what I’ve read, there are plenty). I bought them a couple of months apart (one at launch and one in January) since I couldn’t spend that much at once. As you said, I was indeed thinking about getting a 980, but since I bought the GPU during a complete system build, the total cost would have been higher than I liked at the time. Besides, the difference between the 970 and 980 did not seem that large at the time: same memory config, only slightly lower average fps, …

As for the SLI: 2 SLIed 970s are more powerful than 1 980 and cost only a bit more. This is the main reason to get SLIed 970s.

You’re not the first one to say you notice no difference past 3.5GB of VRAM usage. But many others have reported problems, and I also get a performance drop when going over 3.5GB. It is not really an average fps drop, but a short freeze which seems to occur at regular intervals (about every 2-3 seconds or so), which obviously is very annoying.

Interesting issue there. I have an MSI 4G 970 SLI setup and OCed them even more. AC Unity, everything maxed except shadows, FXAA, DSR 3K. Avg fps of 53 with 3930MB to 4060MB usage and no stutter whatsoever. I also have one Samsung RAM model and one Hynix. Wondering what exactly causes the issues for the people who do get stutter. I ran up and down buildings etc. constantly in AC looking for stutter or freezes; none was to be found.

It’s about state of mind, not card performance. I have an MSI 4G 970 too and it works fine for me past 3.5GB.

who cares, the gtx 970 is an awesome card

Nvidia never apologized; instead they just passed the buck by saying marketing accidentally distributed the wrong specs to reviewers. That is probably one of the worst corporate apologies I have ever read. Furthermore, they continue on their specification page to market the card as having 4GB with a memory bandwidth of 224GB/s. This is a blatant lie: it is 3.5GB at 196GB/s and 0.5GB at 28GB/s. KitGuru, I salute you for calling out Nvidia on its skulduggery; the only way Nvidia will stop these lies is if they get bad press. I urge you to continue to investigate this issue and continue to place pressure on Nvidia to come clean. I would also urge the reviewers to revoke all awards given to the GTX 970, as these were given out under false specifications. I would also urge them to make public the specifications that the marketing team gave them. This is the duty of a free press, and we should not be criticizing this article but applauding it for its honesty when other review sites are trying to sweep this under the rug.

And because you have no issues, nobody can? If nobody would have had issues, the segmentation would have never been noticed in the first place.

A lot depends on what data is loaded into the 0.5GB segment, explaining why some are experiencing more issues than others. And even then, the nature of the memory still should have been mentioned to begin with. Nvidia seemed to assume that nobody would have noticed, needed to know or would have cared, which is just dishonest marketing really.

The fact that you don’t have problems in AC Unity is remarkable by itself looking at how bad the game was optimised in the first place (although the patches vastly improved this).

I believe it has something to do with how the driver handles the data. If it recognises data as high priority it might shift some lower priority data over to the 0.5GB segment if using more than 3.5GB (I’m just guessing here). However, if it does not see the data as high priority, it might get stuck in the 0.5GB segment causing trouble. But this is just an assumption really…

“Profit margins of graphics cards makers are pretty low” I’d like to see someone back that up with facts. They are crying poor just like the mobile phone makers do. No way the $390 GTX 970 is a “low” markup item in a manufacturers product line…

Err, ATI never said sorry or refunded me for their 2900XT. But the difference now is my 970 is a performer.

Not defending them; I have called it appalling multiple times. Fact of the matter is it is more than likely, as you said, the driver. In which case the driver will load the normal Windows VRAM usage, which is 250MB+ for me, into the low-priority segment, leaving the rest for games. So as long as the driver assigns the low-priority VRAM correctly there really is not an issue. Yet again, what Nvidia did is appalling, yes. However, the 970 is still a great card and two 970s are serving me amazingly well. I downloaded a trial of BF4 recently and can run it at 4K at 70+ fps with no OC, easy. Smooth as butter. What Nvidia did is appalling and very bad business practice. Yet it does not take away from the fact the 970s are great cards. I would not have bought a second one a month later if this issue was as big as it is being made out to be.

Good for you but for Nvidia’s partner who will suffer financial loss, yes they care.

What? You were fully aware of all the performance, noise and temperature characteristics of the HD2900XT in reviews. Most of us chose 8800 series instead. The fact that you purchased HD2900XT is entirely your fault. Every once in a while AMD/ATI/NV make duds like GeForce 5 or 7 or HD2000/3000 series. The situation with the 970 is not the same as NV doesn’t want to own up to their mistake and even refuses to provide refunds as an option.

This 512MB of VRAM… am I right that it’s being used as a buffer of some sort? Kind of like when AMD were advertising APU systems as having 4GB of GPU RAM when in fact it was system memory. However, when you expect a 4GB card and get one that’s “chopped”, you wouldn’t be happy. Now, if the 512MB was FASTER RAM, then I could see why Nvidia partitioned it, but it’s way slower than the 3.5GB, 4 times slower in fact, so why do it and piss off your base? I realise they have to chop silicon a certain way to hit a price point, but taking a good chip and giving it a big toe just hampers what we all want. Nvidia had their eye on beating the 280X instead of trying to make the current Nvidia owners happy. Make sure you learn the lesson, Nvidia, or plenty of 970 owners will walk, with their wallets….

Nvidia has been playing this con game with its customers ever since the Kepler cores were introduced… absurd delays, forcing failed products and by-products of their server GPU R&D on customers at absurd prices… It is about time someone taught them humility. Stringing along customers with excuses and delays and fraud should earn Nvidia a public chastisement, or they will never understand how far they have strayed from the customers who made them famous.

They admitted nothing. It was a small glitch in the performance of the card that spurred a technical question, which one of their tech reps answered, probably without consulting the top brass because he thought it was an insignificant matter. The corporation itself does not admit wrongdoing, such as peddling the card on the world market under the false pretense of it having a single 4GB pool of VRAM, when in reality it uses two separate memory pools at different data rates, plus the GPU core component count itself is falsely stated.

While detrimental effects of the deliberately built in flaws won’t be visible until the next gen games are out, It makes a lot more sense as to why nvidia claimed they were going to remake the 970 and 980 in 16/20 nm flavor. It clearly indicates they knew about the flaw all along…and they were really hoping no one would figure it out until their 16nm process gpu’s were market ready. Too bad for them their lie has been exposed and they should learn the consequences of lying to the people to make profits.

The only game that shows the stuttering problem is Battlefield 4, which at 4K res requires about 3.7GB of VRAM. That means the card is fine for those who like to burn through their pockets buying new cards as they arrive on the market (of course the card has no resale value now that its flaw has been exposed). But for those who bought the card hoping to get 3-5 years out of it… BAD NEWS! The next-gen games due to start rolling out this year all use engines that far exceed the specs of Battlefield 4’s DICE engine. Hence, 4K aside, the 970 might start showing its Achilles heel at lower res… not to mention no one wants to buy a card with no future.

This is really bad from Nvidia, and for them; maybe it’s what huge amounts of money do to people/companies? Who knows. I have only ever owned AMD gfx cards, from back when they were ATI; the first card I bought from Nvidia was the 970, and now this! Let’s clarify: there IS 4GB on the card, it is just that the 3.5GB and the remaining 0.5GB are accessed differently. My card goes over the 3.5GB mark in Dying Light (all high settings/1080p) and shows no drop in frame rate whatsoever, no stuttering; I did the testing myself just to see for myself. Then the number of advertised ROPs was incorrect; the card still performs great despite this. Of course, I still reckon Nvidia should say something that shows that their customers are important to them. I had commented on this Nvidia stuff here before (another 970 topic), and I really did consider sending my card back, but after testing a day or two ago I changed my mind. In the end it is completely up to the individual how they think and react to this situation. I’m sure, if we really look at things, we will see many companies shafting their customers in one way or another.

AMD never settle, Nvidia never Apologize!!!!!!

for the last time you noob, low return rates are due to no one accepting returns since all of them are waiting for Nvidia, and clearly Nvidia is saying “this is a gr8 card and we are proud of being assholes” , so customer asking for refund, shop waiting for manufacturer response, manufacturers waiting for Nvidia response, Nvidia says “fuck you all”, so those who managed to return their cards are just few people, but if nvidia issue a recall, then returns will exceed 90%.

What a terrible article. You cannot even get the facts straight. The 970 is a 4GB card, not 3.5GB.

Also, the matter of compensation is far from decided. If all it took to avoid consequences was a denial of guilt, prisons would be empty.

It will be decided by the lawyers.

Low profit margins are BS. The 980 costs $550 and is the exact same chip as the 970, except that the 970 has some parts cut out that are still physically present. So they make over $250 per 980… otherwise the 970 (you can get a 970 for $300-$330) would be losing money, and somehow I doubt that. It would have cost them the same amount to give us full 980s in the first place…

It’s not a flaw any more than the disabled SMs are a flaw. The lack of ROPs and 4GB of 220GB/s RAM will never be exposed because the card is SM bound before those limits are reached in today’s games and will similarly be SM bound before those limits are reached in future games. Future games are not going to require LESS processing power.

NVIDIA designed the card that way because they knew what they were doing and knew that those extra ROPs and the extra RAM specs were close to useless with the number of SMs, and they could deliver a nearly identically performing card for less money by disabling them. NVIDIA released the incorrect specs to the press one time at launch. They did not actively advertise them. Any consumers who took the time to go to hardware review sites to research the card and then bought the card because it has 64 ROPs instead of 56 ROPs are fools. Obviously what those consumers should have been and mostly actually were looking at were the real-world performance numbers which are as accurate now as then, and which still, after all this time of people knowing the real ROP and RAM situation of the card, cannot be shown to be significantly negatively affected in real-world situations under playable framerates by the design decisions whose specs were originally falsely reported.

If NVIDIA designs future Maxwell cards, no matter what process technology they are to be produced on, they will consider their options for the design and optimize the number of enabled SMs and ROPs for those cards on an individual basis. Most importantly, as far as what you are trying to say, NVIDIA didn’t have to sweat away the days until the 16nm process was available because they were not forced to make the 970 with a 1/2 enabled ROP unit to begin with. No one puts “deliberately built in flaws” into their chip unless they are working for the CIA and want the chip to go kablooey if it gets into enemy hands. And in any case, if they did choose to put “deliberately built in flaws” in their chip why would they do that and then anxiously await for a future time when they can undo it, when they could just as easily have never done it to begin with?

NVIDIA’s share price is forecast to increase and outperform the market.

I don’t think you know how to calculate bandwidth. The capacity isn’t a factor. NVidia just need to change their page, because 7GHz * 224bit bus / 8 = 196GB/s
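
The peak-bandwidth arithmetic being argued over in this thread can be checked with a few lines of Python. This is only a sketch under stated assumptions: the helper name `peak_bandwidth_gb_s` is made up for illustration, the 7 Gbps per-pin data rate is the "7GHz" effective memory clock quoted by commenters, and the 32-bit width of the slow segment is inferred as 256 minus 224 bits rather than taken from any Nvidia document.

```python
def peak_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s.

    Per-pin data rate (Gbit/s) times the number of data pins (bus width),
    divided by 8 to convert bits to bytes. Capacity plays no part.
    """
    return data_rate_gbps * bus_width_bits / 8


# Assumed figures: 7 Gbps effective data rate, as quoted in the thread.
advertised = peak_bandwidth_gb_s(7.0, 256)    # full 256-bit bus
fast_segment = peak_bandwidth_gb_s(7.0, 224)  # 3.5GB segment on 224 bits
slow_segment = peak_bandwidth_gb_s(7.0, 32)   # 0.5GB segment, assumed 32 bits

print(advertised)    # 224.0
print(fast_segment)  # 196.0
print(slow_segment)  # 28.0
```

Under those assumptions the two segments work out to 196GB/s and 28GB/s, which only add up to the advertised 224GB/s if both could be driven at full rate at the same time.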

It’s not segmented, though.

Nvidia’s webpage says 256 bit memory interface with 4 gb vram at 224 gb/s bandwidth. Yes I agree with you they haven’t changed their webpage so they are continuing to falsely market the gtx 970.

From the official Nvidia statement:

“However the 970 has a different configuration of SMs than the 980, and fewer crossbar resources to the memory system. To optimally manage memory traffic in this configuration, we segment graphics memory into a 3.5GB section and a 0.5GB section.”

So you’re saying ignore it, it’s your fault for buying the cards anyway.

“but if nvidia issue a recall”

Will never happen.

They understand only money, and money is what I am gonna use.

It’s still a 4GB card. If it’s 224bit, rather than 256bit then they need to revise that.

It’s only a firmware partition though. There is physically 4GB on the card. Having seen the technical explanations the last thing that comes to mind is that it’s a 3.5GB + 512MB card.

Equally, the GTX 980 suffers a performance hit above 3.5GB VRAM. The GTX970 performs, in relation to the GTX980, consistently. I really, really don’t see the issue.

http://www.anandtech.com/show/8931/nvidia-publishes-statement-on-geforce-gtx-970-memory-allocation

I’m saying the card is still a really good deal when it comes down to money vs performance. Sure, you can make a big deal about this if you want but, I still got a great card that runs everything I wanted at 1080p or better at ultra.

They have admitted to getting the specs wrong, and they have promised to try to avoid the same situation in future, so saying they haven’t is lying. But yeah, they could easily have offered 970 owners an apology with, say, a free game or a significant amount off their next flagship, because let’s face it, 300 pounds is a lot of money to a lot of people. Either way I love this card and have no plans to return it, it’s just too damn good, but when I buy another card in 2 years’ time I’ll be thinking about this issue.

The thing is, those average fps hide the problem somewhat due to being, well, an average. You can get a fps drop from 80 to 20 for half a second, which would be visible by the user, but even when occurring multiple times during the measured time segment, the total average fps can be around 60.

Looking at the frame times, there are scenarios where the memory architecture causes a clear increase in frame times, which is then perceived as stuttering:

http://www.pcper.com/reviews/Graphics-Cards/Frame-Rating-GTX-970-Memory-Issued-Tested-SLI
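
To see how an average can hide this kind of stutter, here is a small Python sketch on a made-up frame-time trace. The numbers are hypothetical and not measured on any card: 2.5 seconds at 80 fps followed by a half-second stall at 20 fps. The function names and the 33.3 ms threshold (roughly 30 fps) are likewise assumptions chosen for illustration.

```python
def average_fps(frame_times_ms):
    """Average fps over a trace of per-frame render times in milliseconds."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def slow_frames(frame_times_ms, threshold_ms=33.3):
    """Count frames that took longer than threshold_ms to render."""
    return sum(1 for t in frame_times_ms if t > threshold_ms)


# Hypothetical trace: 200 frames at 12.5 ms (80 fps for 2.5 s),
# then 10 frames at 50 ms (20 fps for 0.5 s).
trace = [12.5] * 200 + [50.0] * 10

print(average_fps(trace))  # 70.0
print(max(trace))          # 50.0 (worst single frame, in ms)
print(slow_frames(trace))  # 10
```

The average still reads 70 fps even though ten frames in the window took four times as long as the rest, which is why frame-time plots show the problem more clearly than average fps charts.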

Yep, and their closing statement says

“For the others out there, the GeForce GTX 970 remains in the same performance window it was at prior to this memory issue and specification revelation. For $329 it offers a tremendous level of performance, some amazing capabilities courtesy of the Maxwell GPU and runs incredibly efficient at the same time.”

That the card is still good, but you should get a GTX980 if you’re going to push it hard, which was the case before this stuff came to light.

Data as of today (Sourced from Yahoo Finance who gathered the data from Capital IQ) shows the following:

Nvidia has a profit margin of 12.77%

AMD has a profit margin of -7.32%, most likely due to the overall poor sales in the CPU division.

Intel had a profit margin of 20.95%

ARM Holdings (they license their processor designs out, iPhone processors are from them) had a profit margin of 23.25%

Qualcomm (they make mobile processors usually found on Android/Windows phones) had a profit margin of 29.91%

Google had a profit margin of 21.89%

Seagate had a profit margin of 14.30%

Western Digital had a profit margin of 10.37%

As you can see, both of our GPU manufacturer options have among the lowest of profit margins of companies in a similar area of business. Most of the big names that aren’t listed (Corsair etc.) are not listed because there is no data for them, and most are privately held or traded on only the Taiwan stock exchange so any data that is available is not reliable.

You’re absolutely right. But they’ve also found that “users with or looking at an SLI setup of GeForce GTX 970 cards to be more likely to run into cases where the memory pools of 3.5GB and 0.5GB will matter”.

Look, I’ve never said that the GTX 970 is a bad card by itself. However, the different memory layout should have been explained from the beginning within the framework of the difference between a 970 and 980. I actually have been doubting between a 970 and a 980 at the beginning, but finally decided on 2 970s seeing that the difference between the 970 and 980 was only the core speed/CUDA count and the price difference was rather small. And SLIed 970s blow a single 980 out of the water in terms of computational power. Now it turns out the difference was a bit bigger than Nvidia announced, and this difference in memory architecture has an impact on the users who actually spend more on 2 or even 3 of these cards.

I’m not saying that everybody should turn in their 970. But users that want to, should be allowed to do so. As long as Nvidia keeps saying “we’ve done nothing wrong” the only way to do this however (for most stores in Europe at least) seems to be filing a complaint, which really is not something you should put your customers through after messing up (even if it actually was a miscommunication between departments, which I actually doubt by now).

Except it’s not 4GB of “GDDR5”, it’s 3.5GB at GDDR5 speed and 0.5GB at roughly GDDR2/3 speed at best. And it still only has 56 ROPs and 1.79MB of L2 cache, while the wrong figures are outright lies that are still being advertised for the card. They continue to sell the cards despite this, which is illegal. They can only continue to sell if they change the advertising to show the correct specs, so they’re basically just digging the hole they’re in deeper and deeper every day they continue selling with false advertising.

No, it’s 4GB of GDDR5, regardless of the speed. It’s still, physically, 4GB of GDDR5.

I’m not defending them, or whatever, but I don’t think it’s appropriate for everyone to suggest it’s not 4GB of GDDR5, when it most definitely is. You can count the modules on the PCB and look up their codes to verify.

You don’t understand, GDDR5 means double data rate x 5, this implies the RAM runs at 5 times the speed of DDR1 VRAM/RAM, therefore the fact that 500MB of it runs four times slower than GDDR5 speed means it is NOT 4GB of GDDR5, it’s 3.5GB of GDDR5 with 0.5GB of GDDR2 speed thrown in. The fact of the matter is that a 224-bit card cannot be labeled as GDDR5 because it doesn’t qualify at that bandwidth speed. And regardless of your opinion on the VRAM you CAN NOT say that there are 64 ROPs and 2MB of L2 cache because it is absolute FACT that there are only 56 ROPs and 1.79MB L2 cache.

Lol, that’s not what GDDR5 means. Your “opinion” is fucked from the get go. Go and educate yourself on what GDDR5 actually is (hint, it’s based on DDR3 SDRAM 😉 )

NVidia’s official marketing information doesn’t list ROPs or L2 cache, just the bandwidth and bus width: http://www.geforce.co.uk/hardware/desktop-gpus/geforce-gtx-970/specifications

Also, hint #2: The GPU has a memory clock of 7GHz (or 3.5GHz x 2… “Double Data Rate” ).

You’re an idiot, I give up. If you can’t understand the fact that the card is touted as being 256-bit 4GB GDDR5 at 223GB/s and is only ~190GB/s on the first 3.5GB and less than 50GB/s on the rest, then you are the one in need of education. If someone was selling DDR3 system RAM that only runs at 600MHz, it wouldn’t meet JEDEC minimum standards for DDR3, therefore it is “not” DDR3, although in this case it’s more like 3.5GB of the 4GB stick of RAM is DDR3 while the rest is DDR1. Second, Nvidia DID list ROP and L2 cache right on their official website, although they’ve removed it to cover their asses. And if you actually knew anything about this issue you’d realize that bandwidth IS part of the problem: they advertised it as 223GB/s, and it’s NOT; 3.5GB of it is 190GB/s while the rest is less than 50GB/s. That’s another lie on the list. So go “educate yourself” on how graphics cards actually work.

Oh and here’s a link to Nvidia’s OFFICIALLY APPROVED reviews of the 970 and they all list the incorrect ROP and L2 cache as well as the incorrect memory speed and bandwidth

http://www.geforce.com/hardware/desktop-gpus/geforce-gtx-970/reviews

You just lost any credibility for being an asshole 🙂 You’re wrong, and there’s no need to insult me because of it.

This is still GDDR5 and it meets the spec. Bandwidth speed isn’t a requirement, only memory clock. It’s a 7GHz card so it meets the requirements. They could run it on a 64bit bus and 7GHz if they wanted and it’s still GDDR5. The only way it’s not GDDR5 is if you remove the memory chips from the PCB.

All 4GB of the memory is GDDR5. This is a fact and no amount of trying to rearrange it or interpretation will change that.

I don’t disagree that the first 3.5GB is faster than the last 0.5GB. That’s also a fact.

Again, you should go and read up on these things, because you’re wrong and you’re trying to speak with authority. That’s setting yourself up to fall.

Also, those are reviews by third parties. If NVidia sent them a card to review, which they do for most reviews, then that could be considered endorsement.

The page I linked to is the official page and it never mentioned ROPs or L2 cache. NVidia don’t list that info on those pages, so you saying they removed it is a fabrication (you lied). Check the 980, 960, 780, 680, 670, 580. That’s never been included.

No insults going on here, I’m just telling the truth, idiocy is idiocy plain and simple. YOU are the wrong one, YOU are the one in need of “education”. I’ve been working in PC development for years and I know what the hell GDDR5 means; if anyone is insulting anyone it’s you insulting my intelligence with your false nonsense. It’s already been confirmed by judges and lawyers involved in this case that there are LEGAL standards on what can be called GDDR4/GDDR5 and so on. And SPEED AND BANDWIDTH are two of those requirements. JEDEC standards are legal industry requirements; it’s no different than Taco Bell being sued because its “all beef burger” only contained 20% beef. This “nvidia taco” only has 3.5GB of 80% “beef” mixture with the remaining 500MB being less than 20%, therefore it does not add up to the minimum JEDEC legal standards. And none of this matters anyway, they LIED to us, END…OF…STORY. False advertising is illegal.

No, I did not lie, now who’s the insulting asshole? I have screenshots of Nvidia with ROP and cache info on their site, but due to this they decided to cover their asses and remove all mention of detailed specs from their GPU pages on the site. You are beyond ignorant, it’s amazing, you’re so far up Nvidia’s rear that no words could ever reach you. It’s people like you that wouldn’t be missed if they walk down dark alleys a bit too much.

Post the screenshots.

Riiiight. “working in PC development”? You don’t sound like an electrical engineer to me. You don’t really know what you’re talking about. “legal” standards. Haha. Government regulated, are they?

Bandwidth isn’t a requirement for GDDR5, or any DDR standard. It’s the number of data lines on the memory chip, which has nothing to do with the bandwidth. The first GDDR5 chip ran at 512MB@20GB/s on a 32bit bus.

Please, stop 🙁 I’m embarrassed for you.

Oh, and I own an R280 😉 I just like facts to be presented properly.

xonstrowx is a retard.

I had an 8800GTX; I won the 2900XT at an ATI convention when I worked for EA Games. ATI still said that the 2900XT was a great card and it was not.

I still have that said 8800GTX…

“False advertising is illegal.”

If you are SO concerned about this, go out and actually DO something about it, rather than spout your legal BS on the internet.

An addendum – With the advent of HBM (Soon in AMD Fury and Pascal 2016 for nvidia) developers will have the opportunity to optimise vram usage more efficiently.

This efficiency should see the growth in VRAM requirements slow significantly.

Might save us all a dollar as well.