The wait is finally over for what seems to be one of the most eagerly anticipated pieces of computer hardware in recent history. Four years in the making, with over 2 million engineering hours put into bringing it to life, AMD's Zen is finally here. Leading the pack for AMD's new Zen-based processors are three eight-core, sixteen-thread Ryzen 7 SKUs. Fabbed on GlobalFoundries' 14nm FinFET process and sporting a transistor count of 4.8 billion, the new AM4-based chips are targeting prosumers who would have otherwise been tempted onto Intel's High-End Desktop (HEDT) platform.

With pricing starting at $329 (around £320), we can now finally say that CPUs with eight high-performance cores have hit a level that will be considered affordable to many, rather than a select few. First in line for review is the flagship Ryzen 7 1800X – an 8C16T, 95W TDP chip with a base clock of 3.6GHz, boost clock up to 4.0GHz, and a 4.1GHz Extended Frequency Range (XFR).

“I'm still happy with my *insert Sandy Bridge or Haswell-based CPU model name here* quad-core processor” is perhaps the most common quote that I read any and every time we publish a new CPU-related article. Sandy Bridge was legendary, AMD's Piledriver couldn't compete in many respects, and Haswell offered a well-timed upgrade path.

With Intel's mainstream processors, which sell by the truckload to enthusiast PC builders, still limited to four physical cores, many people simply do not see the need to upgrade their ageing piece of silicon. Add in the ~£400 buy price for Intel's cheapest more-than-four-core enthusiast CPU and it's easy to see why so many people are holding on so tightly to their ‘good enough' processor and investing that upgrade budget elsewhere.

Of course, that's not the entire picture, as many prosumers have already jumped to higher core count chips in order to improve productivity. It is, however, a fair, if somewhat crude, depiction of the current mainstream, affordable CPU market. You want more than four cores from Intel? Be prepared to pay up. Does an eight-core Broadwell-E chip tick the right boxes for you? Don't expect any change out of a four-figure payment.

AMD is aiming to change that with Ryzen 7. Eight cores, sixteen threads thanks to Simultaneous Multi-Threading (SMT), and retail pricing spanning $329-499 (around £320-490) are some of Ryzen 7's notable head-turning points. You could quite comfortably add a high-performance graphics card or a decent 4K monitor to your shopping list simply by re-allocating the Ryzen 7 cost differential against Intel's 8-core HEDT offering. That six-core 6800K you were planning on dropping £400+ on; it now goes up against an 8C16T Ryzen 7 chip that is punching close to a 4GHz clock speed.

On the face of it, AMD is set to give the consumer processor market a significant shake-up with the introduction of its Zen-based 8C16T Ryzen 7 offerings.

We got our first look at Ryzen in-the-silicon last week at AMD's Ryzen Tech Day in San Francisco. The headline features, such as a 52% IPC increase versus Excavator and an 8C16T chip with a 95W TDP, had a large proportion of the technical press surprised. When the slides showing computational performance against Intel's $1000 8C16T LGA 2011-3 Core i7 were shown, the reaction changed from surprised to one that was bordering on speechless.

In Ryzen 7, AMD is bringing more-than-four-core CPUs to a price point that is as low as a third of the purchase fee for Intel's comparable octa-core options. And the Austin-based chip vendor is doing so while reducing the TDP below triple figures to 95W – a 32% reduction compared to Intel's 6-, 8-, and 10-core consumer LGA 2011-3 offerings.

This is not the first time that we have seen affordable eight-core ‘enthusiast' CPUs – AMD released Bulldozer over 5 years ago, though we'll not get into the debate of exactly what determines a core. It is, however, an interesting change to the processor landscape to be faced with the prospect of a true 8-core CPU that doesn't have a four-figure dollar price and is being touted as offering performance that lives up to its core count.

| CPU | AMD Ryzen 7 1800X | AMD Ryzen 7 1700X | AMD Ryzen 7 1700 | Intel Core i7 6950X | Intel Core i7 6900K | Intel Core i7 6800K | Intel Core i7 7700K |
|---|---|---|---|---|---|---|---|
| CPU Codename | Zen | Zen | Zen | Broadwell-E | Broadwell-E | Broadwell-E | Kaby Lake |
| Core / Threads | 8 / 16 | 8 / 16 | 8 / 16 | 10 / 20 | 8 / 16 | 6 / 12 | 4 / 8 |
| Base Frequency | 3.6GHz | 3.4GHz | 3.0GHz | 3.0GHz | 3.2GHz | 3.4GHz | 4.2GHz |
| Boost Frequency | 4.0GHz | 3.8GHz | 3.7GHz | 3.5GHz | 3.7GHz | 3.6GHz | 4.5GHz |
| Maximum Frequency | 4.1GHz (XFR) | 3.9GHz (XFR) | 3.75GHz (XFR) | 4.0GHz (TBM 3.0) | 4.0GHz (TBM 3.0) | 3.8GHz (TBM 3.0) | n/a |
| Unlocked Core Multiplier | Yes (x0.25 granularity) | Yes (x0.25 granularity) | Yes (x0.25 granularity) | Yes (x1 granularity) | Yes (x1 granularity) | Yes (x1 granularity) | Yes (x1 granularity) |
| Total Cache | 16MB L3 + 4MB L2 | 16MB L3 + 4MB L2 | 16MB L3 + 4MB L2 | 25MB L3 + 2.5MB L2 | 20MB L3 + 2MB L2 | 15MB L3 + 1.5MB L2 | 8MB L3 + 1MB L2 |
| Max. Memory Channels | 2 (DDR4) | 2 (DDR4) | 2 (DDR4) | 4 (DDR4) | 4 (DDR4) | 4 (DDR4) | 2 (DDR4 & DDR3L) |
| Max. Memory Frequency | 1866 to 2667MHz | 1866 to 2667MHz | 1866 to 2667MHz | 2400MHz | 2400MHz | 2400MHz | 2400MHz / 1600MHz |
| PCIe Lanes | 16+4+4 | 16+4+4 | 16+4+4 | 40 | 40 | 28 | 16 |
| CPU Socket | AM4 | AM4 | AM4 | LGA 2011-3 | LGA 2011-3 | LGA 2011-3 | LGA 1151 |
| Manufacturing Process | 14nm | 14nm | 14nm | 14nm | 14nm | 14nm | 14nm |
| TDP | 95W | 95W | 65W | 140W | 140W | 140W | 91W |
| MSRP | $499 | $399 | $329 | $1723-1743 | $1089-1109 | $434-441 | $339-350 |
| UK Street Price | Approx. £500 | Approx. £400 | Approx. £330 | Approx. £1650 | Approx. £1000 | Approx. £400 | Approx. £330 |

All Ryzen CPUs feature an unlocked multiplier that allows them to be overclocked without adjusting the BCLK, using a compatible motherboard chipset.

You can read our Ryzen 7 1700X review HERE and our Ryzen 7 1700 review HERE.

Ryzen 7 1800X Specifications:

- 3.6GHz base frequency (up to 4.0GHz Precision Boost frequency).

- Up to 4.1GHz XFR frequency.

- Unlocked core ratio multiplier.

- BCLK overclocking capability.

- 8 cores, 16 threads.

- 512KB of dedicated L2 cache per core and 8MB of shared L3 cache per 4-core module.

- Dual-channel DDR4 1866-2667MHz native memory support (up to two DIMMs per channel).

- 16 PCIe 3.0 lanes for PCIe slots, 4 PCIe 3.0 lanes for high-speed storage, 4 PCIe 3.0 lanes for connection to the chipset.

- 95W TDP.

- AM4 socket.

- $499 pricing (~£490 UK e-tailer price).

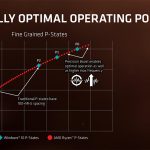

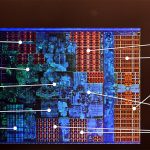

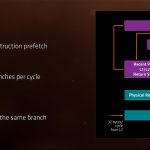

Presentation slides outlining key features for the Zen architecture

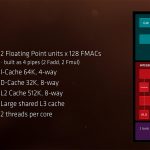

Ryzen 7 is an eight-core chip that combines two four-core CPU Complex (CCX) ‘modules'. Each CCX features 8MB of shared L3 cache for the four cores with 512KB of L2 cache dedicated to each individual core. Running two threads per core is what gives Ryzen its SMT functionality, which is similar in theory to Intel's proprietary SMT implementation known as Hyper-Threading.

Total area of a Zen 4C8T CCX is 10% lower than that of Intel's alternative, as many news articles highlighted recently. AVX2 instructions are supported and a pair of AES units are implemented for security purposes.

SenseMI is AMD's name for a group of sensing, adapting, and learning technologies relating to the processor's operation. These technologies are: Pure Power, Precision Boost, Extended Frequency Range (XFR), Neural Net Prediction, and Smart Prefetch. I would encourage you to visit AMD's webpage HERE if you are interested in more in-depth technical details of the Zen architecture.

I will try to summarise the SenseMI features concisely. Pure Power is essentially an energy saving tool that uses a large number of monitors to adapt system operation. Precision Boost adjusts processor clock speed in real time with 25MHz increments to hit an optimal point. XFR is similar to Precision Boost but it allows the CPU to push above its ‘maximum' boost clock if power and cooling budgets permit.
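To make the 25MHz step size concrete, here is a minimal sketch of how a requested clock speed maps onto the nearest achievable step. It assumes a 100MHz reference clock (BCLK) combined with the x0.25 multiplier granularity listed in the specification table; the function names are illustrative and are not part of any AMD tool.

```python
# Assumption: 100 MHz reference clock and x0.25 multiplier granularity,
# which together give the 25 MHz step size described in the text.
BCLK_MHZ = 100.0
MULTIPLIER_STEP = 0.25


def snap_to_step(target_mhz: float) -> float:
    """Round a requested frequency down to the nearest achievable step."""
    step_mhz = BCLK_MHZ * MULTIPLIER_STEP
    return (target_mhz // step_mhz) * step_mhz


def multiplier_for(freq_mhz: float) -> float:
    """Return the core multiplier that yields freq_mhz at the assumed BCLK."""
    return freq_mhz / BCLK_MHZ


if __name__ == "__main__":
    for requested in (3600, 3990, 4060, 4100):
        actual = snap_to_step(requested)
        print(f"{requested} MHz requested -> {actual:.0f} MHz "
              f"(x{multiplier_for(actual):.2f})")
```

Under these assumptions, a request that falls between steps simply lands on the next 25MHz boundary below it.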

One way of validating that XFR is operating is to load up a software utility that can display the real-time frequency of each CPU core. We re-checked this by setting a single-core loading scenario (using Prime 95, WPrime, or Cinebench, for example) and observed that one of the cores operated at 4100MHz while the others sat at their default 3700MHz clock speed.

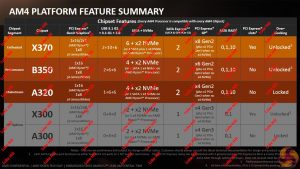

If Ryzen 7 is considered a brain, the AM4 platform can be considered the heart. AMD's new socket is designed specifically for new Zen-based processors and the company has committed to it for a number of years, meaning that compatibility with future Zen 2 (and so on) products is promised.

The AM4 socket itself shares similarities with the AM3 socket it replaces, though mounting holes are spaced differently so many CPU coolers will need new installation brackets. A Pin Grid Array (PGA) design is still used meaning that the 1331 insertion pins for AM4 are found on the processor.

New additions to the platform include support for DDR4 memory, native PCIe Gen 3.0, and native support for modern storage interfaces and protocols (USB 3.1, SATA-Express, NVMe). The three primary launch chipsets (X370, B350, and A320) differ primarily by their support for multiple GPUs and overclocking. X370 is the enthusiast chipset that most gamers and prosumers will lean towards, though B350 is an overclocking-capable mainstream option that limits multi-GPU compatibility and storage interfaces. The upcoming SFF-based X300 and A300 chipsets will be interesting.

X370 is the only high-bandwidth CrossFire- and SLI-capable chipset. USB 3.1 and M.2 PCIe NVMe-capable connections are available on all non-SFF chipsets, though the number of storage options decreases as you go down the list.

DDR4 support is new for AM4 and Ryzen. The platform supports dual-channel, two-DIMM-per-channel DDR4 memory and offers different frequency capability depending upon the module configuration used. Populating all four DIMM slots with dual-sided memory will give you official support for 1866MHz speed, while a pair of dual rank DIMMs will run at 2400MHz or higher. Using single-sided DIMMs gives you 2133MHz frequency support with 4 DIMMs installed or 2667MHz compatibility with 2 sticks. ECC memory is supported by the CPU, which may be music to some prosumers' ears.

These are simply the supported frequencies as promised by AMD, not what you can reasonably expect through overclocking (we used two single-sided DIMMs operating at 3200MHz in dual-channel mode). One thing worth outlining, however, is that AMD's platform does not seem to be as capable as Intel's Z170/Z270-based platform when it comes to high-speed memory support.
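The officially supported memory speeds described above can be captured as a small lookup table. This is only a sketch of the figures quoted in this review: it treats "single-sided" as single-rank and "dual-sided" as dual-rank, takes 2400MHz as the dual-rank two-DIMM figure, and the dictionary layout and function name are illustrative.

```python
# Officially supported DDR4 speeds (in MHz, as quoted in the review),
# keyed by (number of DIMMs installed, ranks per DIMM).
SUPPORTED_DDR4_SPEED = {
    (2, 1): 2667,  # two single-rank DIMMs
    (4, 1): 2133,  # four single-rank DIMMs
    (2, 2): 2400,  # two dual-rank DIMMs
    (4, 2): 1866,  # four dual-rank DIMMs
}


def official_speed(dimm_count: int, ranks_per_dimm: int) -> int:
    """Look up the officially supported speed for a DIMM configuration."""
    key = (dimm_count, ranks_per_dimm)
    if key not in SUPPORTED_DDR4_SPEED:
        raise ValueError(f"configuration not covered by the review: {key}")
    return SUPPORTED_DDR4_SPEED[key]


if __name__ == "__main__":
    for (dimms, ranks), speed in sorted(SUPPORTED_DDR4_SPEED.items()):
        print(f"{dimms} DIMMs, {ranks} rank(s) each: {speed}MHz")
```

The pattern is that more DIMMs and more ranks per DIMM both lower the guaranteed speed.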

Initial guesstimates relating to overclocking capability can be made by looking at AMD's SKUs. The Ryzen 7 1800X is a 3.6-4.0GHz (4.1GHz XFR) flagship that costs $100 (£90) more than the ~200MHz slower Ryzen 7 1700X. That gives the indication that the 1800X is cherry-picked silicon that sits more favourably on a voltage-frequency curve and therefore commands a 25% price increase (consumers may disagree with AMD's logic).

Having two similar SKUs operating at different clock speeds may imply that overclocking capability is going to be tight. If the CPUs generally overclock well, it would be unlikely that AMD would think a 25% price increase for a <10% higher frequency would be worthwhile as savvy consumers would simply buy the cheaper 1700X and push it to 1800X speeds (which may prove to be the case).

However, if strong overclocking performance is no guarantee, that 25% price increase for more golden silicon and slightly higher clock speeds seems like a more justifiable option.

That's enough of the guesstimates. Let's look at real overclocking capability.
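The price-versus-frequency argument above comes down to simple arithmetic. The sketch below uses the MSRP and clock figures from the specification table; the helper name is illustrative.

```python
def percent_increase(new: float, old: float) -> float:
    """Return how much larger `new` is than `old`, as a percentage."""
    return (new - old) / old * 100.0


# Figures taken from the specification table in this review.
PRICE_1800X_USD, PRICE_1700X_USD = 499, 399
BASE_1800X_GHZ, BASE_1700X_GHZ = 3.6, 3.4
BOOST_1800X_GHZ, BOOST_1700X_GHZ = 4.0, 3.8

price_premium = percent_increase(PRICE_1800X_USD, PRICE_1700X_USD)
base_clock_premium = percent_increase(BASE_1800X_GHZ, BASE_1700X_GHZ)
boost_clock_premium = percent_increase(BOOST_1800X_GHZ, BOOST_1700X_GHZ)

if __name__ == "__main__":
    print(f"Price premium:       {price_premium:.1f}%")
    print(f"Base clock premium:  {base_clock_premium:.1f}%")
    print(f"Boost clock premium: {boost_clock_premium:.1f}%")
```

The price gap works out at roughly 25%, while both clock gaps sit well under 10%.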

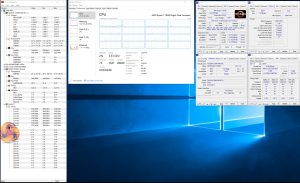

AMD has created a new piece of software – Ryzen Master – that allows the system to be overclocked from within an OS environment. I tested it out and it works well. Users can change CPU clock speeds in 25MHz increments, the memory data rate can be switched, cores can be disabled, and voltage adjustments can be made.

There's also a good amount of information pertaining to temperature and clock speeds available. The settings are applied following a system reboot and up to four profiles (currently) can be saved.

Despite working well for overclocking, I found that the Ryzen Master software utility was using around 10% of the CPU cycles on a Ryzen 7 1800X CPU. As such, I quickly switched to the old-school BIOS approach for system tuning.

After many hours spent tweaking VCore, SOC, and NB (if available on the motherboard) voltages, I finally reached settings that I was happy to use to push the CPU frequency. Default voltage for manual tuning should start at around 1.3625V, according to AMD. Users should be fine pushing to 1.40V with a decent CPU cooler and up to 1.45V with a high-end dual-tower heatsink or dual-fan AIO radiator.

At 1.45V, AMD suggests that processor longevity could be affected according to their models. Temperatures, however, are not a limiting factor at this voltage, provided you have a Noctua D15- or EKWB Predator 240-calibre CPU cooler; we saw temperatures stay below 80°C under short periods of heavy load at around 1.44V.

Another factor that can aid stability is SOC voltage. We pushed this to 1.25V in our testing as there are suggestions that it can aid stability with high-speed memory modules. Using the MSI X370 XPower Gaming Titanium motherboard, pushing NB voltage to around 1.10-1.15V seemed to aid stability. This option was not clearly labelled in the ASUS UEFI and therefore could not be adjusted.

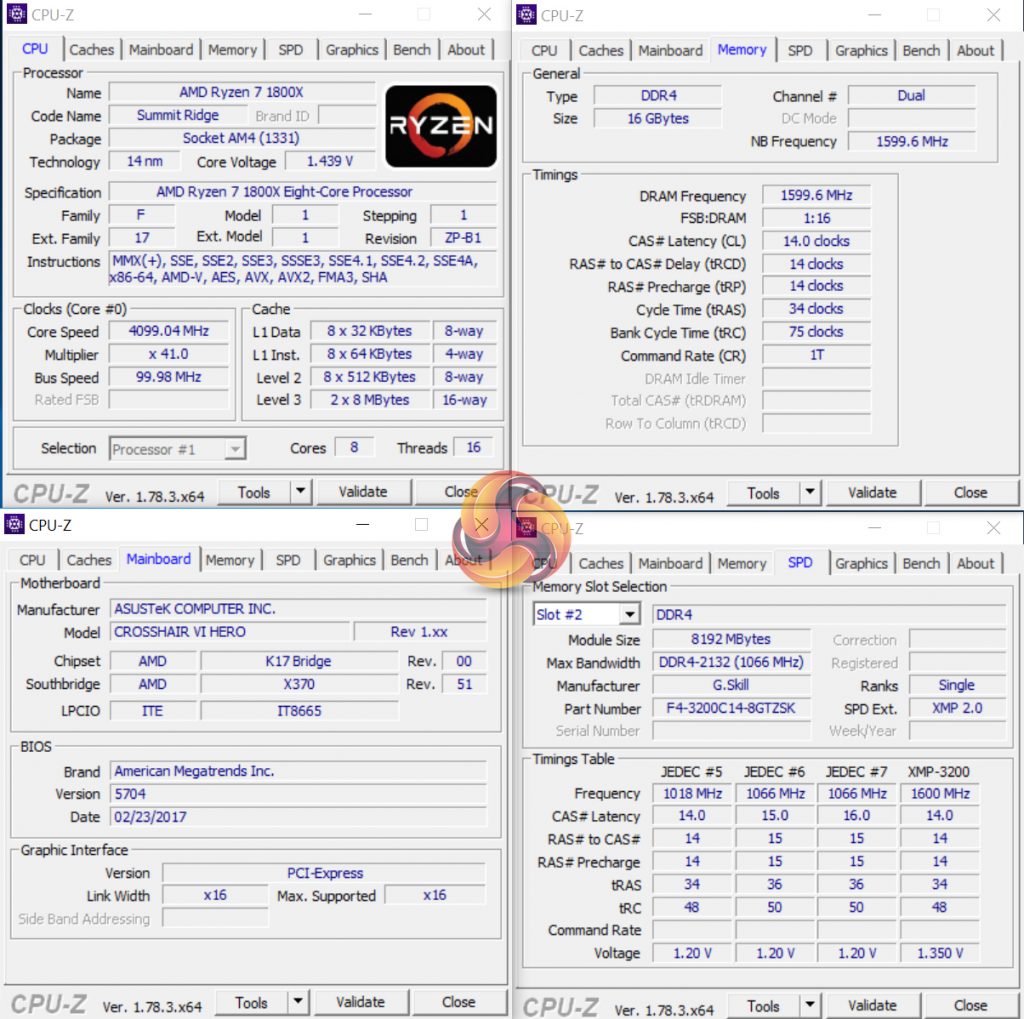

In short, our final Ryzen 7 1800X overclocking settings using an ASUS Crosshair VI Hero motherboard were:

- 1.43125V CPU VCore.

- 1.25V SOC voltage.

- Level 3 LLC (around 1.439V load VCore in OS).

- Multiple Cinebench R15 multi-core runs to validate stability, as well as AIDA64 CPU stress test.

- DDR4-3200MHz 14-14-14-34 @ 1.35V.

AMD Ryzen 7 1800X Overclocking

The highest Cinebench-stable processor frequency that we could achieve with all 8 cores and SMT enabled was 4100MHz. Frequencies such as 4150MHz and 4200MHz would boot into Windows 10 but could not retain stability under Cinebench loading.

4100MHz at ~1.44V load voltage was perfectly stable when using Cinebench R15 as the validity test. AIDA64 CPU stress test also validated stability. Prime 95 was a little flaky in some instances (it would sometimes drop a single-thread worker after a few minutes of sustained load) but the FFTs testing procedure is extremely strenuous on CPU stability.

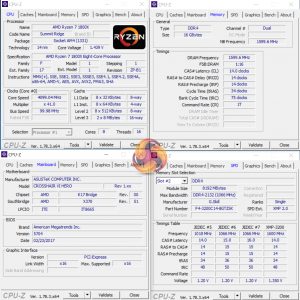

We were able to keep the memory running at 3200MHz C14 when the CPU cores were overclocked to 4.1GHz. This is good as it results in a direct 400MHz boost over the 3.7GHz all-core turbo frequency of a stock Ryzen 7 1800X.

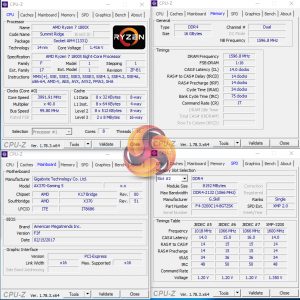

We tested a second, different Ryzen 7 1800X with the MSI X370 XPower Gaming Titanium and Gigabyte AX370-Gaming 5 motherboards. This chip was not as good an overclocker as our initial one. We were limited to 4050MHz Cinebench stable with this second Ryzen 7 1800X sample though this did allow us to drop the voltage down to 1.425V instead (we didn't attempt lower), which is more sensible for long-term usage.

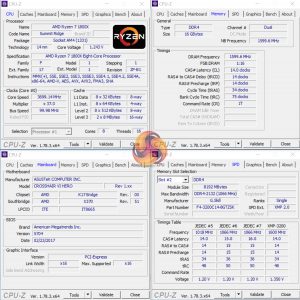

Rolling back the VCore even further to 1.40V netted us perfect stability (even with Prime95) at 4GHz on both the Gigabyte and MSI motherboards.

Overclocking comments:

Based on our testing, in addition to other reports that we are hearing, around 4.1GHz should be considered quite a good overclock for a Ryzen 7 1800X CPU while limiting the voltage to 1.45V. Dropping down to 1.40V may force you to back off by around 100MHz to 4.0-4.05GHz. 4GHz on all eight cores with SMT enabled should be comfortable for many Ryzen 7 1800X CPUs.

There are suggestions that 4.2GHz should be achievable using 1.45V, though neither of the chips that we received were able to hit such levels. AMD also suggests keeping voltage below 1.45V (more towards 1.40V for long-term, 24/7 usage) in order to enhance processor longevity.

Barely crawling past 4GHz when over-volted may be seen as weak overclocking performance by some. That's especially true when one considers that even Intel's 10-core 6950X can push past 4.1-4.2GHz comfortably, and you could almost guarantee 4.3GHz-4.4GHz on an 8-core i7-5960X. With that said, Intel also has significant experience with its FinFET process. Ryzen is AMD's first enthusiast, overclockable chip using 14nm FinFET process technology so earth-shattering (or Intel-matching) frequencies may have been a little optimistic.

Nevertheless, 4GHz on an 8C16T processor is nothing to be underwhelmed by, especially not for a brand new CPU on brand new (for AMD) process technology.

High-speed Memory Support

As already highlighted, we were able to use 3200MHz dual-channel DDR4 (2 single-sided DIMMs) with the Ryzen 7 1800X while at stock clocks and when overclocked to 4.05-4.1GHz. This was the case using the ASUS Crosshair VI Hero motherboard and when using XMP on the Gigabyte AX370-Gaming 5 motherboard.

We tried to push to 3600MHz using a built-in D.O.C.P setting for the memory frequency on the ASUS board. This ended very badly as the system tried to POST into a BIOS update mode (despite no update being initialised), at which point I had to kill the power which seemed to result in a bricked BIOS chip (not motherboard as the LEDs still worked).

As was the case when Intel launched Haswell-E/X99 and DDR4 was fresh out of the fabs, early compatibility issues with high-speed memory are commonplace. BIOS updates and better tuning from memory vendors are likely to improve the picture over time, though we certainly wouldn't anticipate easily-achievable 4GHz+ Kaby Lake-esque memory capability from Ryzen in its current state.

We will be outlining the Ryzen 7 1800X CPU's performance while using an ASUS Crosshair VI Hero AM4 motherboard.

A 16GB (2x8GB) kit of G.Skill's Trident Z DDR4 memory serves our test system. The kit's rated frequency of 3200MHz with CL14 timings should ensure that memory-induced bottlenecks are removed. A strength for the ASUS board is its ability to run this memory at 3200MHz CL14, which is pushing on the limit of memory speed capability for the AM4 platform.

Today's comparison processors come in the form of:

- Piledriver FX-8370.

- Sandy Bridge i7-2700K.

- Ivy Bridge i5-3570K.

- Devil's Canyon i7-4790K (Haswell-based).

- Haswell-E i7-5960X.

- Broadwell-E i7-6800K and i7-6950X.

- Skylake's i5-6600K and i7-6700K.

- Kaby Lake's i3-7350K, i5-7600K, and i7-7700K.

These form some of the best and most popular CPUs in their respective pricing ranges and product hierarchies for this generation and past ones. They also give a solid overview of where the Ryzen 7 1800X slots into the current market in terms of performance.

We went out and paid over £1,500 (!) to buy a Core i7-6950X 10C20T CPU in order to compare Ryzen 7 1800X to the current fastest consumer processor on the market. Our trusty old Haswell-E Core i7-5960X serves as the 8C16T competitor for Intel's HEDT platform as we do not have access to the Broadwell-E i7-6900K (which is very similar in performance).

The Ryzen 7 1800X sat comfortably at its 3.7GHz all-core boost frequency throughout testing thanks to our strong Noctua D15 CPU cooler and solid power delivery from the ASUS motherboard. XFR was confirmed as operating at 4.1GHz by running multiple different single-threaded workloads and checking the real-time clock speed.

We test Intel CPUs using the forced turbo (multi-core turbo – MCT) setting that most motherboard vendors now enable by default or when using XMP memory. This feature pins all of the CPU's cores at the maximum turbo boost frequency all of the time. The voltage is bumped up to enhance stability but this results in greater power consumption and higher temperature readings which are important to remember when testing those parameters.

We also tested all CPUs' achievable overclocked frequencies so that you can see how your overclocked chip compares to another stock or overclocked chip.

CPU Test System Common Components:

- Graphics Card: Nvidia GeForce GTX Titan X Pascal (custom fan curve to eliminate thermal throttling).

- CPU Cooler: Noctua NH-D14 / Noctua NH-D15 / Cryorig R1 Ultimate / Corsair H100i v2 / Corsair H110i GT / EKWB Predator 240.

- Games SSD: SK hynix SE3010 SATA 6Gbps 960GB.

- Power Supply: Seasonic Platinum 1000W / Seasonic Platinum 760W.

- Operating System: Windows 10 Pro 64-bit (Anniversary Update).

We use a mixture of high-end air and AIO coolers to gather performance measurements without thermal throttling playing a part. Seasonic's Platinum-rated PSUs provide ample power to really push the CPU overclocks. Nvidia's GTX Titan X Pascal is the fastest gaming GPU on the planet, making it ideal for alleviating GPU-induced bottlenecks and putting the onus on CPU performance.

While we use a mixture of cooling and PSU hardware for general testing, where it is important to keep those items identical (power draw and temperature readings) we ensure that the correct hardware is used to deliver accurate data.

Ryzen AM4 System (Ryzen 7 1800X):

- 1800X CPU: AMD Ryzen 7 1800X ‘Summit Ridge' 8 cores, 16 threads (3.6-4.0GHz stock w/ 4.1GHz XFR & 4.1GHz @ 1.43125V overclocked).

- Motherboard: ASUS Crosshair VI Hero (AM4, X370).

- Memory: 16GB (2x8GB) G.Skill Trident Z 3200MHz 14-14-14-34 DDR4 @ 1.35V.

- System Drive: Crucial MX300 525GB.

Kaby Lake & Skylake LGA 1151 System (7600K, 7700K, 6600K, 6700K):

- 7600K CPU: Intel Core i5-7600K ‘Kaby Lake' (Retail) 4 cores, 4 threads (4.2GHz stock MCT & 4.9GHz @ 1.35V overclocked).

- 7700K CPU: Intel Core i7-7700K ‘Kaby Lake' (Retail) 4 cores, 8 threads (4.5GHz stock MCT & 4.8GHz @ 1.35V overclocked).

- 6600K CPU: Intel Core i5-6600K ‘Skylake' (Retail) 4 cores, 4 threads (3.9GHz stock MCT & 4.5GHz @ 1.35V overclocked).

- 6700K CPU: Intel Core i7-6700K ‘Skylake' (Retail) 4 cores, 8 threads (4.2GHz stock MCT & 4.7GHz @ 1.375V overclocked).

- Motherboard: MSI Z270 Gaming Pro Carbon & Gigabyte Aorus Z270X-Gaming 7 (LGA 1151, Z270).

- Memory: 16GB (2x8GB) G.Skill Trident Z 3200MHz 14-14-14-34 DDR4 @ 1.35V.

- System Drive: Samsung 840 500GB.

Broadwell-E & Haswell-E LGA 2011-3 System (5960X, 6800K, 6950X):

- 5960X CPU: Intel Core i7 5960X ‘Haswell-E' (Engineering Sample) 8 cores, 16 threads (3.5GHz stock MCT & 4.4GHz @ 1.30V overclocked).

- 6800K CPU: Intel Core i7 6800K ‘Broadwell-E' (Retail) 6 cores, 12 threads (3.6GHz stock MCT & 4.2GHz @ 1.275V overclocked).

- 6950X CPU: Intel Core i7 6950X ‘Broadwell-E' (Retail) 10 cores, 20 threads (3.5GHz stock MCT & 4.2GHz @ 1.275V overclocked).

- Motherboard: ASUS X99-Deluxe (LGA 2011-3, X99).

- Memory: 32GB (4x8GB) G.Skill Trident Z 3200MHz 14-14-14-34 DDR4 @ 1.35V.

- System Drive: SanDisk Ultra Plus 256GB.

Devil's Canyon LGA 1150 System (4790K):

- 4790K CPU: Intel Core i7 4790K ‘Devil's Canyon' (Engineering Sample) 4 cores, 8 threads (4.4GHz stock MCT & 4.7GHz @ 1.30V overclocked).

- Motherboard: ASRock Z97 OC Formula (LGA 1150, Z97).

- Memory: 16GB (2x8GB) G.Skill Trident X 2400MHz 10-12-12-31 DDR3 @ 1.65V.

- System Drive: Kingston SM2280S3/120G 120GB.

Sandy Bridge & Ivy Bridge LGA 1155 System (2700K, 3570K):

- 2700K CPU: Intel Core i7 2700K ‘Sandy Bridge' (Retail) 4 cores, 8 threads (3.9GHz stock MCT & 4.6GHz @ 1.325V overclocked).

- 3570K CPU: Intel Core i5 3570K ‘Ivy Bridge' (Retail) 4 cores, 4 threads (3.8GHz stock MCT & 4.6GHz @ 1.30V overclocked).

- Motherboard: ASUS P8Z77-V (LGA 1155, Z77).

- Memory: 16GB (2x8GB) G.Skill Trident X 2400MHz 10-12-12-31 DDR3 @ 1.65V (@2133MHz for 2700K due to CPU IMC limitation).

- System Drive: Kingston HyperX 3K 120GB.

Vishera AM3+ System (FX-8370):

- FX-8370 CPU: AMD FX-8370 ‘Vishera' (Retail) 8 cores, 8 threads (4.0-4.3GHz stock & 4.62GHz @ 1.45V CPU, 2.6GHz @ 1.30V NB overclocked).

- Motherboard: Gigabyte 990FX-Gaming (AM3+, SB950).

- Memory: 16GB (2x8GB) G.Skill Trident X 2133MHz 12-12-12-31 DDR3 @ 1.65V.

- System Drive: Patriot Wildfire 240GB.

Software:

- ASUS Crosshair VI Hero BIOS v5704 (pre-release).

- GeForce 378.49 VGA drivers.

Tests:

Productivity-related:

- Cinebench R15 – All-core & single-core CPU benchmark (CPU)

- HandBrake 0.10.5 – Convert 6.27GB 4K video recording using the Normal Profile setting and MP4 container (CPU)

- Mozilla Kraken – Browser-based JavaScript benchmark (CPU)

- x265 Benchmark – 1080p H.265/HEVC encoding benchmark (CPU)

- WPrime – 1024M test, thread count set to the CPU's maximum number (CPU)

- SiSoft Sandra 2016 SP1 – Processor arithmetic, cryptography, and memory bandwidth (CPU & Memory)

- 7-Zip 16.04 – Built-in 7-Zip benchmark test (CPU & Memory)

Gaming-related:

- 3DMark Fire Strike v1.1 – Fire Strike (1080p) test (Gaming)

- 3DMark Time Spy – Time Spy (DX12) test (Gaming)

- VRMark – Orange room (2264×1348) test (Gaming)

- Ashes of the Singularity – Built-in benchmark tool CPU-Focused test, 1920 x 1080, Extreme quality preset, DX12 version (Gaming)

- Gears of War 4 – Built-in benchmark tool, 1920 x 1080, Ultra quality preset, Async Compute Enabled, DX12 (Gaming)

- Grand Theft Auto V – Built-in benchmark tool, 1920 x 1080, Maximum quality settings, Maximum Advanced Graphics, DX11 (Gaming)

- Metro: Last Light Redux – Built-in benchmark tool, 1920 x 1080, Very High quality settings, SSAA Enabled, AF 16X, High Tessellation, DX11 (Gaming)

- Rise of the Tomb Raider – Built-in benchmark tool, 1920 x 1080, Very High quality preset, SMAA enabled, DX12 version (Gaming)

- The Witcher 3: Wild Hunt – Custom benchmark run in a heavily populated town area, 1920 x 1080, Maximum quality settings, Nvidia features disabled, DX11 (Gaming)

- Total War Warhammer – Built-in benchmark tool, 1920 x 1080, Ultra quality preset, DX12 version (Gaming)

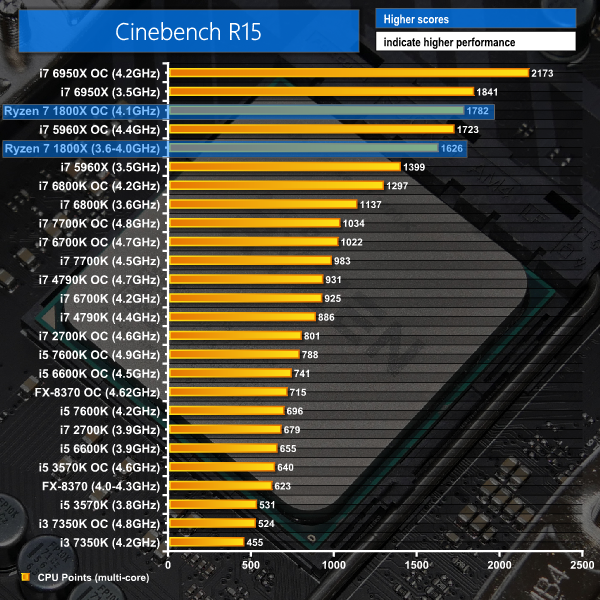

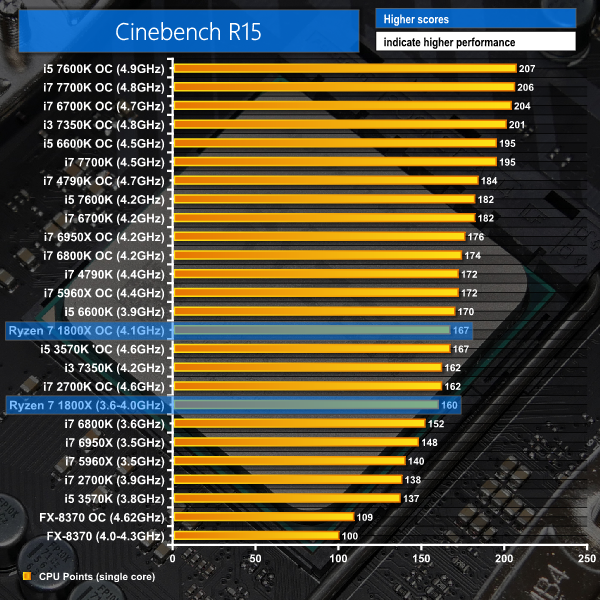

Cinebench

Cinebench is an application which renders a photorealistic 3D scene to benchmark a computer’s rendering performance, on one CPU core, all CPU cores or using the GPU. We run the test using the all-core CPU and single-thread CPU modes.

Talk about fast off the start line!

Cinebench is first up in our test suite and AMD's Ryzen 7 1800X doesn't simply enter itself onto the enthusiast consumer processor scene, it kicks the front door down. The 8C16T Ryzen 7 1800X is over 16% faster than Intel's 8C16T Haswell-E based flagship from not-so-long ago.

Keeping focus on stock frequencies, at which the Ryzen 7 1800X sees all cores pushing to 3.7GHz, the <£500 AMD chip is only around 12% slower than Intel's 10C20T Broadwell-E Core i7-6950X… which costs more than three times as much (~£1,650). A 12% performance loss for a 70% price reduction doesn't seem like too bad a deal.
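The value comparison above can be worked through explicitly. This is a rough sketch using the approximate figures quoted in this section (£500 as an upper bound for the 1800X, ~£1,650 for the 6950X, and a 12% Cinebench deficit); the function names are illustrative.

```python
# Approximate figures quoted in this section of the review.
PRICE_1800X_GBP = 500.0      # "<£500", used here as an upper bound
PRICE_6950X_GBP = 1650.0     # "~£1,650"
RELATIVE_PERF_1800X = 0.88   # "around 12% slower" than the 6950X


def price_reduction_percent(cheaper: float, dearer: float) -> float:
    """Percentage saved by buying the cheaper part."""
    return (1.0 - cheaper / dearer) * 100.0


def perf_per_pound_ratio(rel_perf: float, cheaper: float, dearer: float) -> float:
    """How many times more performance per pound the cheaper part delivers."""
    return rel_perf / (cheaper / dearer)


if __name__ == "__main__":
    saving = price_reduction_percent(PRICE_1800X_GBP, PRICE_6950X_GBP)
    ratio = perf_per_pound_ratio(RELATIVE_PERF_1800X,
                                 PRICE_1800X_GBP, PRICE_6950X_GBP)
    print(f"Price reduction: {saving:.0f}%")
    print(f"Performance per pound: {ratio:.1f}x that of the 6950X")
```

On these numbers, the Ryzen 7 1800X delivers a little under three times the Cinebench multi-core performance per pound of the 10-core Intel chip.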

Equally impressive is the Ryzen 7 1800X's ability to maintain its positive performance when overclocked. The gap between itself and the stock-clocked 10C20T 6950X is reduced to around 3%, while the 300MHz higher-clocked Haswell-E Core i7-5960X can't quite catch the 4.1GHz Ryzen 7 1800X.

Shifting focus to a price competitor, the ~£400 6C12T Broadwell-E i7-6800K is nearly 500 points (30%) slower in the Cinebench multi-core test. Only when overclocked to 4.8GHz can the 4C8T Kaby Lake Core i7-7700K manage to get within 600 points (~35%) of the performance of stock-clocked Ryzen 7 1800X.

Single-thread performance from Ryzen 7 1800X is better than anticipated. A 160-point Cinebench score puts it above the Haswell-E single-core performance of the 3.5GHz i7-5960X and marginally ahead of Broadwell-E in terms of single-core numbers. Ryzen 7 1800X is helped by its boost speed of up to 4.0GHz when a low core count is active, which increases to 4.1GHz XFR for the Cinebench single-thread test.

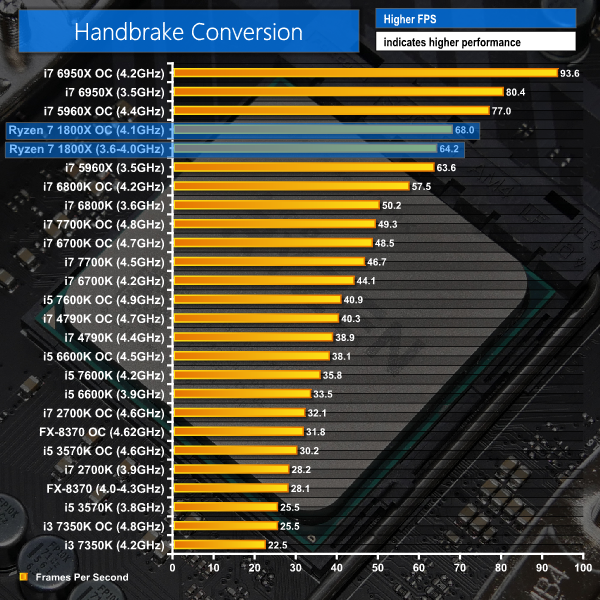

Handbrake Conversion

Handbrake is a free and open-source video transcoding tool that can be used to convert video files between different codecs, formats and resolutions. We measured the average frame rate achieved for a task of converting a 6.27GB 4K video using the Normal Profile setting and MP4 container. The test stresses all CPU cores to 100% and shows an affinity for memory bandwidth.

Handbrake conversion of an x264 video paints a similar picture to Cinebench rendering. This time, however, memory bandwidth has more influence on the result and the stock-clocked Core i7-5960X is able to more-or-less match the stock Ryzen 7 1800X performance. Handbrake gives an insight into the value of high bandwidth from quad-channel memory on Intel's HEDT platform, as opposed to Ryzen's dual-channel approach.

When both chips are overclocked, Ryzen 7 1800X cannot keep pace with the 300MHz faster 8C16T 5960X and its quad-channel 3200MHz C14 DDR4. The performance deficit for OC Ryzen 7 1800X vs OC Haswell-E i7-5960X is around 13% (around 7% of which could be put down to a 300MHz slower clock speed, if Handbrake scaled linearly with CPU frequency). Still, that's not a bad amount of performance for Ryzen 7 1800X to give up in return for a ~£500 saving versus the 8C16T Intel part.
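The clock-speed share of that deficit can be estimated with a short calculation. The sketch below assumes, as the paragraph above does, that Handbrake performance scales linearly with CPU frequency, which is an idealisation; the function names are illustrative.

```python
RYZEN_OC_GHZ = 4.1        # overclocked Ryzen 7 1800X
HASWELL_E_OC_GHZ = 4.4    # overclocked Core i7-5960X
OBSERVED_DEFICIT = 0.13   # "around 13%" slower in Handbrake


def clock_deficit(slower_ghz: float, faster_ghz: float) -> float:
    """Fraction of performance lost purely to the lower clock speed,
    assuming perfectly linear frequency scaling."""
    return 1.0 - slower_ghz / faster_ghz


def residual_deficit(observed: float, slower_ghz: float, faster_ghz: float) -> float:
    """Deficit that would remain if the slower chip ran at the faster
    chip's clock speed, under the same linear-scaling assumption."""
    relative_perf = 1.0 - observed
    scaled_perf = relative_perf * faster_ghz / slower_ghz
    return 1.0 - scaled_perf


if __name__ == "__main__":
    from_clock = clock_deficit(RYZEN_OC_GHZ, HASWELL_E_OC_GHZ)
    remaining = residual_deficit(OBSERVED_DEFICIT, RYZEN_OC_GHZ, HASWELL_E_OC_GHZ)
    print(f"Deficit explained by clock speed: {from_clock * 100:.1f}%")
    print(f"Deficit left at equal clocks:     {remaining * 100:.1f}%")
```

Under that assumption, roughly half of the gap comes from frequency and the remainder from other factors such as the quad-channel memory bandwidth discussed above.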

Comparing the £400 i7-6800K against the £490 Ryzen 7 1800X, the AMD chip is 28% faster at stock clocks than Intel's latest six-core part, despite the latter's quad-channel DDR4-3200 memory. With both CPUs overclocked, Ryzen 7 1800X is 18% faster, thanks in large part to its two extra cores (33% more). Compared to the popular 4C8T Skylake Core i7-6700K, Ryzen 7 1800X is over 20 FPS (46%) faster.

If you're a media aficionado with a large Blu-ray collection ready to rip and convert onto a home media server, the eight-core Ryzen 7 1800X looks like a superb bang-for-buck option.

x265 Encoding

x265 Encoding tests system performance by encoding a 1080p test file using the x265/HEVC format.

The x265 benchmark is slightly different to Cinebench and Handbrake as it does not saturate the full set of threads on eight- or ten-core chips unless an overclock is thrown into the equation. The data has to be sent fast enough for it to be split and divided up to keep all eight or ten cores fully active, and that's where clock speed has value.

This is exactly what the chart shows. Stock-clocked Ryzen 7 1800X is faster than the 5960X thanks to its 200MHz higher all-core boost frequency. Overclock Ryzen 7 1800X to 4.1GHz and you have 6950X-matching performance. The catch here is that Intel's chips will generally overclock to higher frequencies than Ryzen 7. As such, the 4.4GHz 5960X is able to take second place behind the overclocked 10-core 6950X.

Compared to the 6C12T 6800K, Ryzen 7 1800X is one third faster at stock clocks and 27% quicker when overclocked. It's also around 22% more expensive than the 6800K, though.

CPU-related testing overview:

The 8C16T Ryzen 7 1800X processor makes an extremely promising start in our CPU-heavy rendering and video conversion benchmarks. Sixteen threads pinned at 3.7GHz all-core boost frequency give AMD's latest-and-greatest CPU i7-5960X-beating performance. Even when the 8C16T 5960X is clocked 300MHz higher, the Ryzen 7 chip still puts up a solid fight.

Don't fancy overclocking a £1,650 Core i7-6950X due to its extreme cost? If that's the case, you'll get better performance from a 4.1GHz Ryzen 7 1800X chip for certain x265 encoding workloads and will be almost level with the 10-core part for rendering, as suggested by Cinebench.

Single-core performance is also strong, though it certainly isn't Skylake-level strong. Decent single-threaded performance is good to see as this has been AMD's Achilles heel for past high-core-count chips such as those based on the Bulldozer and Piledriver architecture.

7-Zip

7-Zip is an open source Windows utility for manipulating archives. We measure the Total Rating performance using the built-in benchmark tool. The test stresses all CPU cores to 100% and shows an affinity for memory bandwidth.

7-Zip likes memory bandwidth so it's no surprise to see the quad-channel-equipped 5960X outpacing Ryzen 7 1800X and its dual-channel DDR4. The overclocked 6C12T 6800K also gets close to stock-clocked Ryzen 7 1800X performance thanks to its greater memory bandwidth and higher clock speed.

When factoring in price, the 1800X is a compelling option if you have workloads that involve a lot of file compression and decompression.

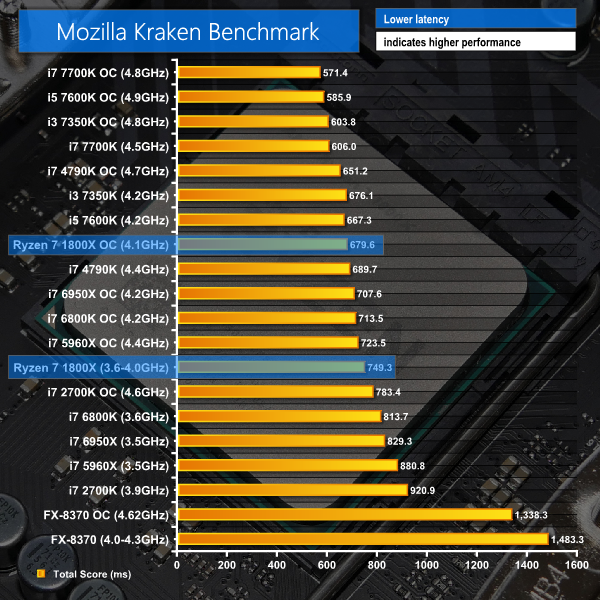

Mozilla Kraken

Mozilla Kraken is a browser-based JavaScript benchmark that tests a variety of real-world use cases. We use Chrome as the test browser. The test exhibits very little multi-threading and shows an affinity for CPU clock speed and IPC.

Kraken essentially analyses single-thread performance with a mixture of high clock speed and strong IPC being primary driving factors.

As such, it is absolutely no surprise to see highly-overclocked Intel chips topping the chart, irrespective of their core count. AMD's Ryzen 7 1800X puts in a really good show, though, managing to outpace the entire Broadwell-E and Haswell-E comparison stack at both stock and overclocked speeds.

WPrime

WPrime is a leading multithreaded benchmark for x86 processors that tests your processor performance by calculating square roots with a recursive call of Newton’s method for estimating functions. We use the 1024M test in WPrime to analyse processor calculation performance.
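
For readers unfamiliar with the technique, the following is a minimal sketch of Newton's method applied to square roots. It is not WPrime's actual code, which we have not seen. It is written iteratively rather than recursively, and the tolerance, iteration cap, and starting estimate are our own assumptions.

```python
def newton_sqrt(value: float, tolerance: float = 1e-12, max_iterations: int = 100) -> float:
    """Estimate the square root of a non-negative number with Newton's method."""
    if value < 0:
        raise ValueError("value must be non-negative")
    if value == 0:
        return 0.0

    # Start at or above the true root so each step moves steadily downwards.
    estimate = value if value >= 1.0 else 1.0

    for _ in range(max_iterations):
        # Newton update for f(x) = x^2 - value: average x and value / x.
        next_estimate = 0.5 * (estimate + value / estimate)
        if abs(next_estimate - estimate) <= tolerance * next_estimate:
            return next_estimate
        estimate = next_estimate

    return estimate


print(newton_sqrt(2.0))
```

Each root is independent of the others, which is why a workload of this kind spreads so readily across sixteen threads.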

Computationally-intensive calculations will work very well on the Ryzen 7 1800X processor if they can be parallelised across its sixteen threads. Only the 10-core 6950X and higher-clocked 8C16T 5960X manage to outpace the Ryzen 7 1800X configurations in WPrime's 1024M test.

CPU-related testing overview:

As was the case with video-centric computational tasks, AMD's Ryzen 7 1800X continues to display strong performance irrespective of whether the operation is multi-threaded or cares more for IPC and clock speed.

Mozilla Kraken speaks volumes for the single-threaded performance of Ryzen and WPrime continues to prove the worth of a high core count to software that can parallelise operations. Concern does, however, creep into the frame with the 7-Zip result where the eight-core Ryzen 7 chip starts to look limited on memory bandwidth compared to Intel's HEDT, quad-channel alternatives.

Reminding ourselves of the sub-£500 retail price helps to emphasise how impressive the computational performance has been thus far.

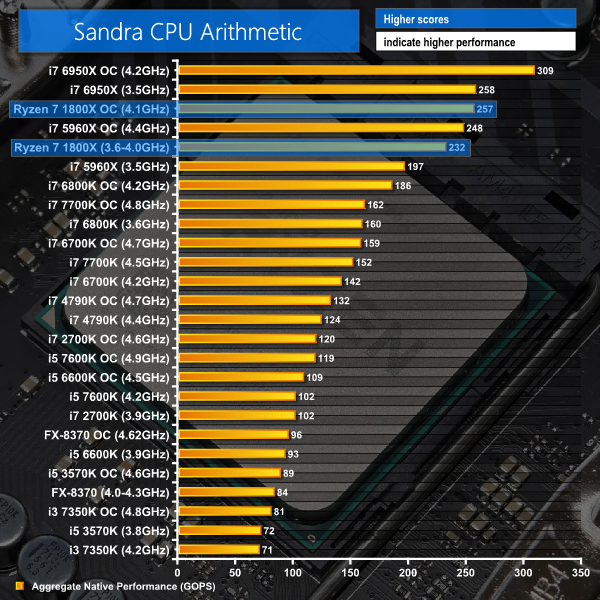

Sandra Processor Arithmetic

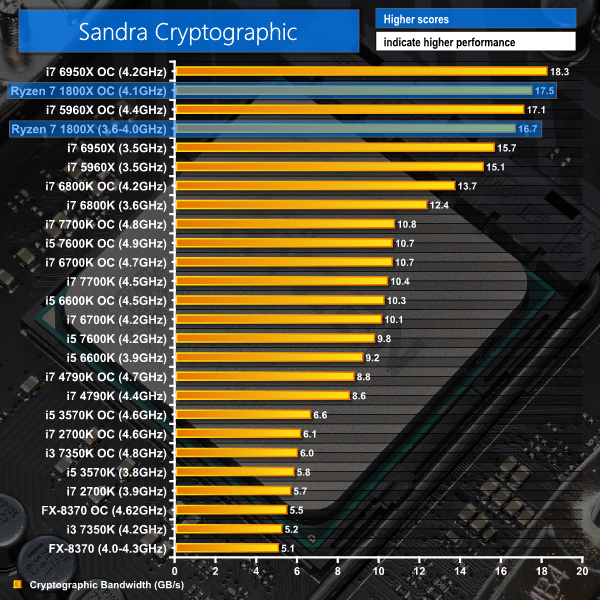

Sandra Cryptographic

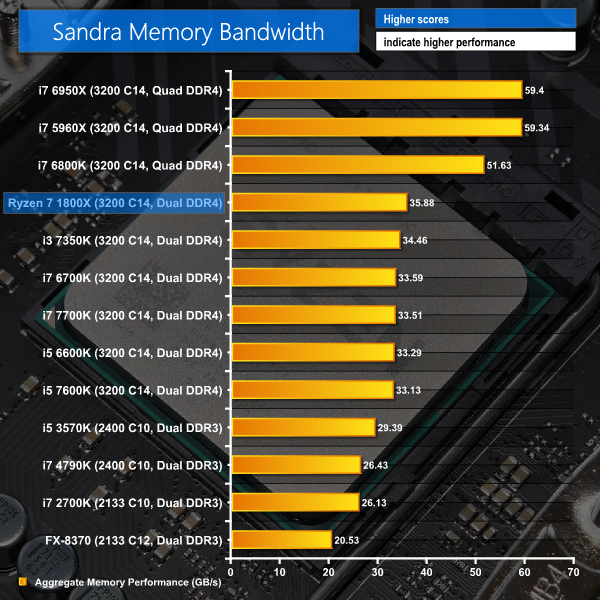

Sandra Memory Bandwidth

SiSoft Sandra places raw processing power of the 4.1GHz 1800X on par with a stock-clocked 6950X… at less than a third of the price. Cryptographic performance for Ryzen 7 is also strong thanks to the 1800X's ability to throw a large number of fast threads at the task. The 4.4GHz 5960X is actually beaten by overclocked Ryzen 7 1800X despite the AMD chip's 300MHz clock speed deficit.

Memory bandwidth is on par with Intel's latest mainstream, dual-channel platform when using similarly-fast 3200MHz C14 DDR4. However, the quad-channel Intel HEDT platforms have more than 60% greater memory bandwidth for an identical memory clock but four DIMMs versus two. This could be an influencing factor to prosumers who have workloads that scale well with increased memory bandwidth.
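
To put the dual-channel versus quad-channel gap into context, the theoretical peak figures can be worked out from the memory transfer rate and channel count. This is a sketch of the ceiling only, assuming the standard 64-bit DDR4 channel width. The "more than 60%" figure above is a measured Sandra result and sits well below the theoretical doubling. The function name is illustrative.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: int, channels: int, bus_width_bits: int = 64) -> float:
    """Return theoretical peak memory bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits // 8
    return transfer_rate_mt_s * 1_000_000 * bytes_per_transfer * channels / 1e9


dual_channel = peak_bandwidth_gb_s(3200, channels=2)  # AM4 with two DIMMs
quad_channel = peak_bandwidth_gb_s(3200, channels=4)  # LGA 2011-3 with four DIMMs

print(f"dual-channel: {dual_channel:.1f} GB/s, quad-channel: {quad_channel:.1f} GB/s")
```

Real-world results fall short of these ceilings on every platform, so the numbers are best treated as an upper bound rather than a prediction.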

A note on memory performance: we saw Cinebench scores jump from mid-1700s to high-1700s when switching from 2133MHz DDR4 to 3200MHz. We also noticed slightly higher frame rates in certain games (Rise of the Tomb Raider DX12, for example).

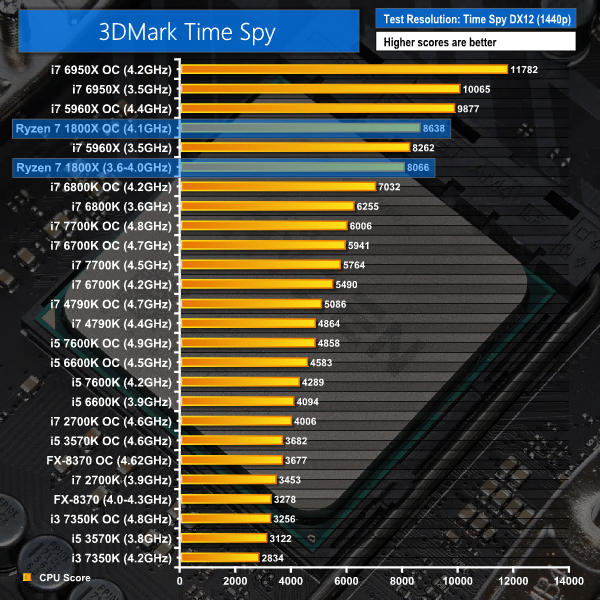

3DMark

3DMark is a multi-platform hardware benchmark designed to test varying resolutions and detail levels of 3D gaming performance. We run the Windows platform test and in particular the Fire Strike benchmark, which is indicative of high-end 1080p PC Gaming. We also test using the Time Spy benchmark which gives an indication of DirectX 12 performance.

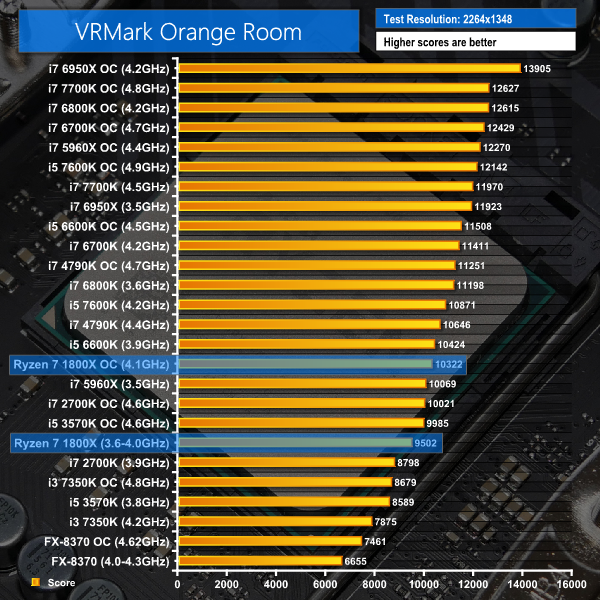

VRMark

The recently-released VRMark benchmark aims to score systems based on their VR performance potential by using rendering resolutions associated with VR devices of today and the future. We test using the Orange Room benchmark which uses a rendering resolution of 2264×1348 to analyse the capability of hardware with current devices such as the HTC Vive and Oculus Rift.

The CPU-related tests in 3DMark scale well across cores and therefore place the eight- and ten-core chips at the top of the chart. This is not, however, necessarily an ideal interpretation of gaming performance in the real world due to differing design strategies for game engines. Overclockers interested in ranking highly on the 3DMark leaderboards (without going crazy with LN2) may want to take note of Ryzen 7 as a value option.

VRMark has an affinity for CPU clock speed and core count, with IPC also thrown into the mix. Ryzen 7 1800X does not perform particularly well in VRMark, though its performance does improve by way of overclocking.

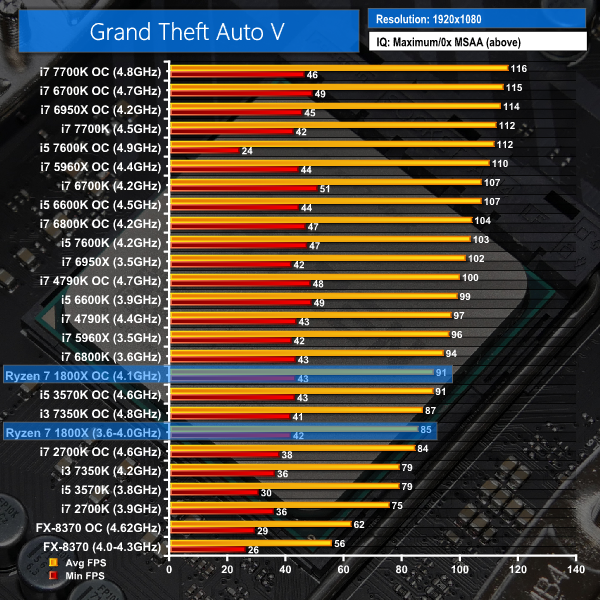

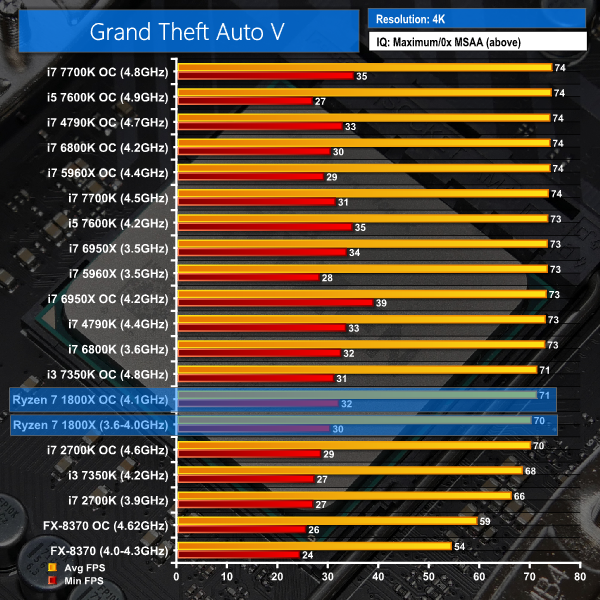

Grand Theft Auto V

Grand Theft Auto V remains an immensely popular game for PC gamers and as such retains its place in our test suite. The well-designed game engine is capable of providing heavy stress to a number of system components, including the GPU, CPU, and Memory, and can highlight performance differences between motherboards.

We run the built-in benchmark using a 1080p resolution and generally Maximum quality settings (including Advanced Graphics).

Clock speed and IPC, mixed with enough threads, are important metrics for strong GTA V performance. Ryzen 7 1800X cannot hit a 100 FPS average using the maximum image quality settings and therefore bottlenecks a GTX Titan X Pascal graphics card.

4.1GHz Ryzen 7 1800X performance is close to that of the stock-clocked 6800K and 5960X. Ryzen 7 is faster than the Sandy Bridge 2700K, even when overclocked to 4.6GHz, and it also gets close to Haswell-based 4790K performance despite the Devil's Canyon chip running 300MHz faster.

If you have just dropped £1k on a fancy new 3440×1440 100Hz ultrawide gaming monitor, you'd be better served by Intel's 8C16T Haswell-E 5960X (despite its 2x cost) or, more sensibly, a fast Skylake-based chip for GTA V gaming.

It is, however, worth pointing out that Ryzen 7 1800X had around 70% spare CPU cycles on its SMT threads and around 40-60% spare on its 8 cores (GTA prefers actual cores to threads). So, if you want to stream GTA V over Twitch, you have plenty of spare processing horsepower to do so whereas the 6C12T 6800K is slightly more heavily loaded and a Skylake i5 will be pushing well above 90% CPU load.

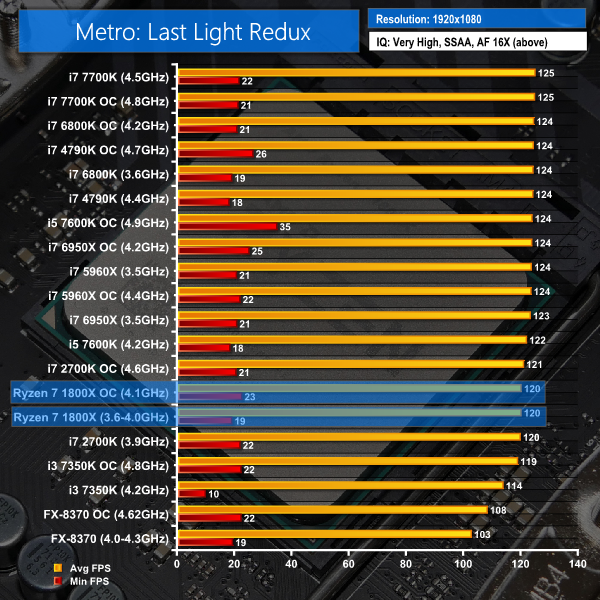

Metro: Last Light Redux

Despite its age, Metro: Last Light Redux remains a punishing title for modern computer hardware. We use the game's built-in benchmark with quality set to Very High, SSAA enabled, AF 16X, and High tessellation.

Metro: Last Light Redux is GPU-limited at around 120-or-so FPS. Ryzen 7 1800X is a little slower than the modern i5 and i7 chips, on average, though it is a significant improvement over AMD's Piledriver-based FX-8370 (not a significant achievement).

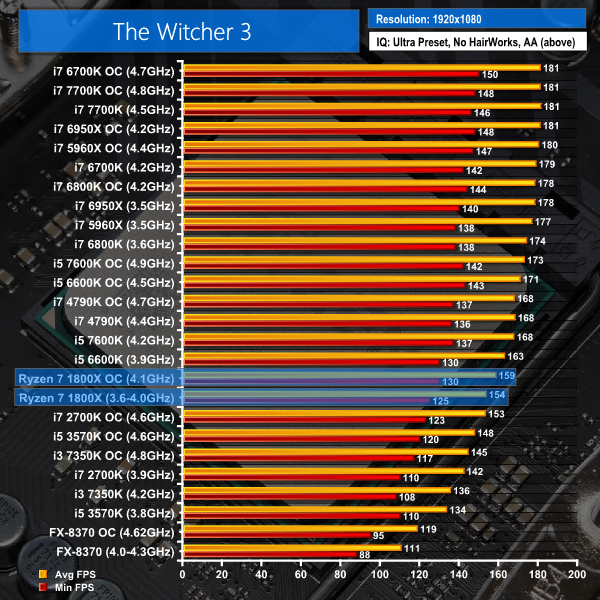

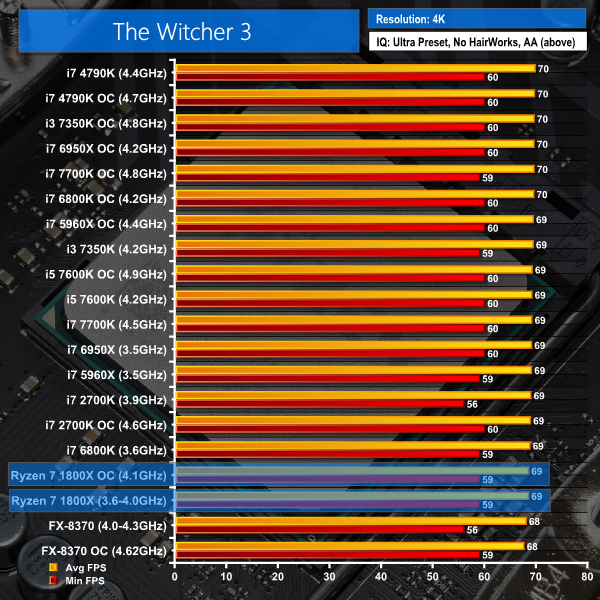

The Witcher 3: Wild Hunt

The Witcher 3 is a free-roaming game which can feature heavy interaction from NPCs in densely-populated urban areas, making it ideal for testing CPU performance. The well-designed game engine is capable of providing heavy stress to a number of system components and will happily use more than four CPU threads when such hardware is available.

We run a custom benchmark which is located in a heavily populated section of an urban town area. A 1080p resolution and Maximum quality settings are used (Nvidia Hairworks settings are disabled).

The Witcher 3 scales well with thread count and CPU frequency. Unsurprisingly, Skylake architecture is king and the extra threads of a Core i7 boost performance to a new level. Ryzen 7 1800X cannot keep pace with modern Core i7 CPUs and actually loses out to Skylake-based i5s (though those i5s are pinned to 95+% CPU load, as opposed to 30-70% on only 9 threads for the 1800X).

What Ryzen 7 1800X is able to do, though, is outperform an overclocked Sandy Bridge i7-2700K even at stock speeds. To put this into perspective, Ryzen 7 1800X beats the Sandy Bridge i7 that is commonly referred to as “fast enough for gaming”. It can't quite hang with more modern Intel architectures but we are talking ~160 FPS average versus ~170/180 FPS.

Pair Ryzen with a GPU not as fast as Titan X Pascal and limit it to a more reasonable 120Hz and you'll have a stellar gaming experience that's practically indistinguishable from modern Intel chips.

DX11 Gaming Performance Overview:

The Ryzen 7 1800X CPU's DX11 gaming performance in these three titles is fine, though it's far from remarkable at somewhere between Sandy Bridge Core i7 2700K and Devil's Canyon (Haswell) Core i7 4790K levels. You'll struggle to push past 100FPS in GTA V at maximum quality settings, though Metro: Last Light and The Witcher 3 should play nicely on your 120Hz monitor, even if the Intel Haswell, Broadwell, and Skylake chips are faster.

GTA V likes Intel hardware so if it is your most played game and you run at 1080p, there are better options than Ryzen 7. Metro: Last Light plays as well on Ryzen 7 1800X as it does on modern Intel i5s and i7s. The Witcher 3 is faster on modern Intel CPUs with 4+ cores, though at more than 150 FPS average (and 120+ FPS minimums) the additional performance is of questionable use.

Ryzen 7 1800X is a solid DX11 gaming chip but it isn't quite as fast as Haswell-E, Broadwell-E, or Skylake at the bleeding edge of performance and ultra high frame rates, based on our three tested titles. If, however, you want to do things in the background (such as stream your gameplay using OBS), Ryzen 7 operates at low overall load in DX11 games and can conduct useful work on many of its under-utilised sixteen threads.

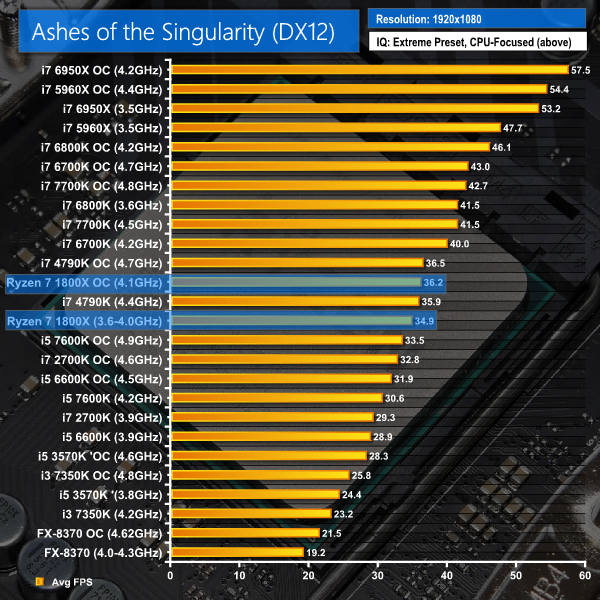

Ashes of the Singularity

Ashes of the Singularity is a Sci-Fi real-time strategy game built for the PC platform. The game includes a built-in benchmark tool and was one of the first available DirectX 12 benchmarks. We run the CPU-focused benchmark using DirectX 12, a 1080p resolution and the Extreme quality preset.

This result is surprising. Ashes of the Singularity is a very well multi-threaded benchmark, as indicated by the Haswell-E and Broadwell-E performance figures for Intel. All of Ryzen 7's threads were pinned to around 80% load when overclocked, which is a similar value to the 5960X at 4.4GHz.

Ashes is simply indicating that Ryzen 7 gaming performance, even when the engine is heavily multi-threaded, is not as strong as Intel on a core-for-core basis.

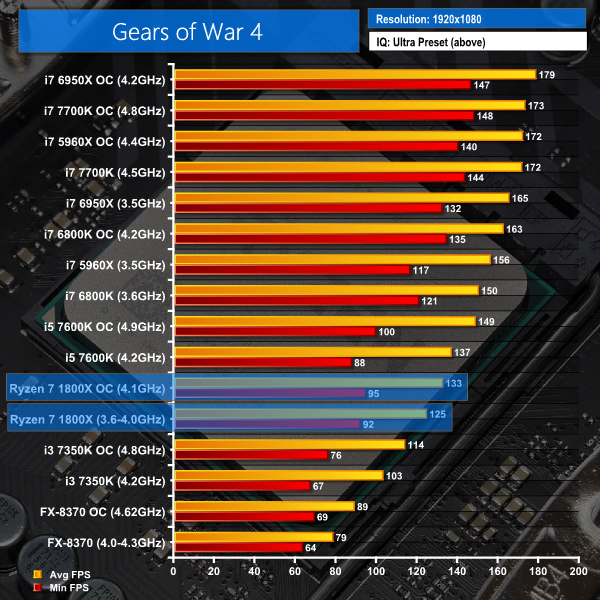

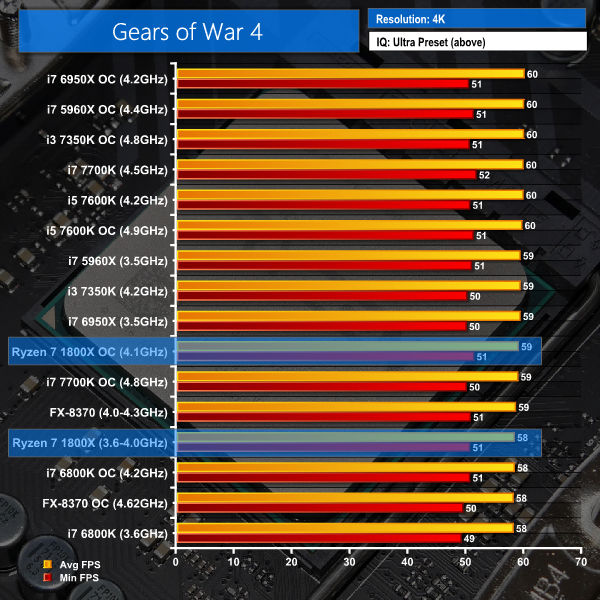

Gears of War 4

Gears of War 4 is a third-person shooter available on Xbox One and in the form of a well-optimised DX12-only PC port. We run the built-in benchmark using DirectX 12 (the only API supported), a 1080p resolution, the Ultra quality preset, and Async Compute enabled.

Note: The Core i7-2700K, i5-3570K, and i7-4790K are not shown in Gears of War 4 as the game download was too large to install on their system SSD and the clunky Windows Store platform gives errors when moving games installed on a secondary SSD between test systems.

Gears of War 4 and its DX12 operating mode place Ryzen 7 1800X performance within around 10-15% of Kaby Lake Core i5-7600K. The 1800X can push more than 120FPS average at both stock and overclocked speeds, while also keeping minimums above 90FPS.

In isolation, this is good gaming performance from the AMD chip but when compared to Intel's modern Core i7 processors, there is a noticeable performance gap. On average, the £400 i7-6800K is 20% faster at stock and 23% faster when overclocked. Move the sights to the 5960X and Intel's $1000 chip is 25% faster than Ryzen 7 1800X at stock and 29% quicker when both are overclocked.

If Gears of War 4 is the type of game that you want to run at a high refresh rate, the Ryzen 7 1800X will do just that. However, the Broadwell-E and Skylake-based chips will do it better, keeping minimums and averages well above 1800X levels.

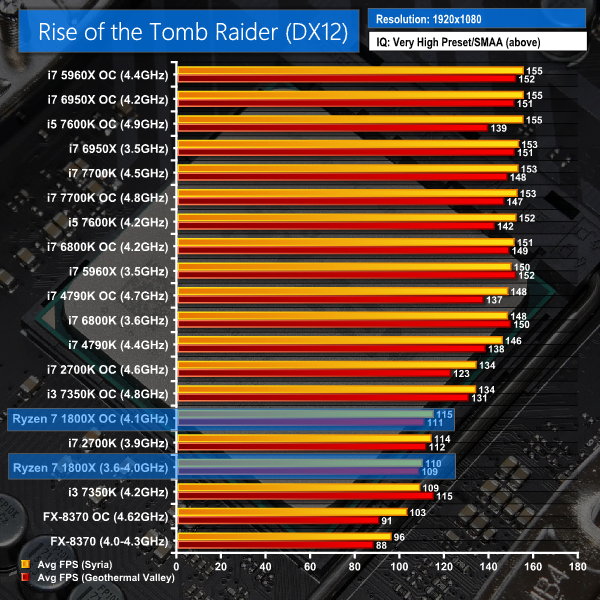

Rise of The Tomb Raider

Rise of The Tomb Raider is a popular title which features both DX11 and DX12 modes. Heavy loading can be placed on the CPU, especially in the Syria and Geothermal Valley sections of the built-in benchmark.

We run the built-in benchmark using the DirectX 12 mode, a 1080p resolution, the Very High quality preset, and SMAA enabled.

ROTTR in its DX12 mode is proficient at balancing load across Ryzen 7's 16 threads though it does like to pin a thread or two at very high loads (90%+). This loading was significantly higher than what was observed for the 8C16T 5960X and seemed to materialise as a limiting factor for game performance.

Ryzen 7 1800X is around Sandy Bridge i7 2700K performance in ROTTR DX12. The modern i5s and i7s based on Haswell architecture and newer are around 30% (or more) faster than Ryzen 7 on average. This is another disappointment, especially given that ROTTR is a DX12 title that should be able to leverage the high core count of Ryzen 7.

To put this in perspective, though, if you have a 60Hz or 75Hz monitor, Ryzen 7 will do the trick. If you game at 100Hz, Ryzen 7 1800X still looks to be a compelling option. But for 120Hz+ gamers, a modern i7 is a better choice for ROTTR.
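
The refresh rate argument is easier to follow in terms of frame time, which is the window the CPU and GPU have to deliver each frame. A quick sketch, using the refresh rates mentioned in this review (the function name is ours):

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Return the time available per frame, in milliseconds, at a given refresh rate."""
    return 1000.0 / refresh_hz


for hz in (60, 75, 100, 120, 144):
    print(f"{hz:>3} Hz -> {frame_budget_ms(hz):.2f} ms per frame")
```

Moving from 60Hz to 120Hz halves the time available for every frame, which is why CPU differences that are invisible on a 60Hz panel start to matter at high refresh rates.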

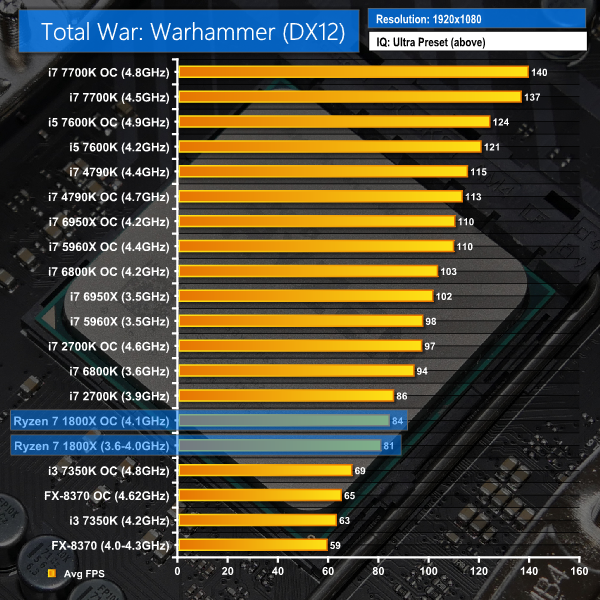

Total War: Warhammer

Total War: Warhammer is another title which features both DX11 and DX12 modes. Heavy loading can be placed on the CPU using the built-in benchmark. The DX12 mode is poorly optimised and tries to force data through a low number of CPU threads rather than balance operations across multiple cores. As such, this gives a good look at pure gaming performance of each CPU in titles that aren't well multi-threaded.

We run the built-in benchmark using the DirectX 12 mode, a 1080p resolution, and the Ultra quality preset.

Total War: Warhammer uses a poorly optimised DX12 mode that tries to force data through one or two threads, resulting in at least one thread being locked at 100% utilisation and dictating overall gaming performance. So, by being badly optimised, Total War proves its worth in our results by giving an indication of generalised gaming performance on games that do not effectively scale their load across multiple CPU threads.

Average performance for the Ryzen 7 1800X is slightly below that of a stock-clocked Sandy Bridge 2700K. Only the old FX-8370 performs worse in this game. If you have a desire to drive Total War: Warhammer at a high frame rate, you will be better served by a fast, Skylake-based chip.

DX12 Gaming Performance Overview:

Ryzen is a solid gaming CPU in our array of DX12 titles and it's certainly a vast upgrade over the FX-8370. However, Intel's modern i7 CPUs are faster than the 1800X and the high-frequency Kaby Lake i5 also beats the Ryzen 7 chip in 3 out of 4 tests.

You can play ROTTR and Gears of War 4 at or around 100 FPS with Ryzen 7 1800X and a Titan X Pascal at Very High or Ultra image quality settings. The Core i7 chips offer more performance headroom, though, so if you are a 120/144Hz+ gamer, they will be better choices than Ryzen 7. Provided you don't plan on conducting intensive background tasks, such as OBS streaming, that is.

The market for people buying an almost £500 CPU and using it for gaming at 1080p is likely to be very slim. What 1080p does is give a good indication of the CPU's raw gaming performance as GPU power is sufficient to push frame rates to a level where the CPU and memory limitations can be observed.

We supplement the 1080p gaming results with a trio of games tested at 4K. That $500 saved against Intel's competing 8C16T CPU could buy a nice 4K monitor, so we will show how Ryzen 7 performs at such a resolution.

Gears of War 4

We run the built-in benchmark using a 4K resolution and the same settings as the 1080p test (Ultra quality preset, Async Compute enabled).

Note: The Core i7-2700K and i7-4790K are not shown in Gears of War 4 as the game download was too large to install on their system SSD and the clunky Windows Store platform gives errors when moving games installed on a secondary SSD between test systems.

All of the CPUs will deliver a solid gameplay experience at 4K in Gears of War where GPU performance is the limiting factor.

Grand Theft Auto V

We run the built-in benchmark using a 4K resolution and the same settings as the 1080p test (generally Maximum quality settings including Advanced Graphics).

Ryzen 7 1800X performance in GTA V is very slightly behind the modern Intel pack. You can expect better frame rates with Ryzen 7 than on a Sandy Bridge i7 2700K system. There was no major bottlenecking of the Titan X Pascal with Ryzen 7; the GPU spent most of its time running above 90% load when tasked with a 4K resolution.

The Witcher 3 Wild Hunt

We run our custom 107-second benchmark in a densely-populated town area using a 4K resolution and the same settings as the 1080p test (Maximum quality, Nvidia settings disabled).

You are unlikely to notice any difference in performance between Ryzen 7 1800X and competing Intel processors when playing The Witcher 3 at 4K. The Titan X Pascal is hammering along at 99% load and Ryzen 7 1800X does not do anything to limit that.

There are also plenty of CPU cycles spare with the 1800X, allowing you to conduct other tasks in the background without significant slow down to the game.

4K Gaming Performance Overview:

Ryzen 7 1800X is a perfectly good processor for 4K gaming. So much onus is placed on GPU horsepower that even the mighty Titan X Pascal is pushed to its limits before all-out CPU performance hits the microscope. With the Ryzen 7 1800X (and competing solutions in our testing) being capable of around 60FPS+ at 4K, there's no need to wish for more when 60Hz is currently as good as it gets for 4K monitors.

That $500 you can save by opting for the Ryzen 7 1800X instead of an 8C16T HEDT Intel chip can be put towards a GTX 1080 Ti (or future AMD competitor) which will be a superb 4K gaming combination. Of course, that point can be taken further by saying that a sub-$300 Core i5 is equally sufficient but factoring in the computational performance of Ryzen 7, outside of gaming, puts the comparison into perspective.

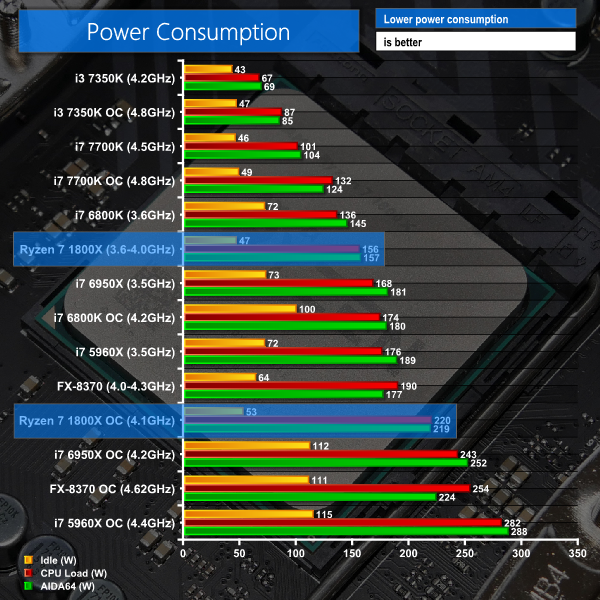

We leave the system to idle on the Windows 10 desktop for 5 minutes before taking a power draw reading. For CPU load results we read the power draw while running the Cinebench multi-threaded test as we have found it to push power draw and temperature levels beyond those of AIDA64 and close to Prime 95 levels. Cinebench has a short run time on high-performance CPUs, which affects the validity of the temperature reading, so we also run the AIDA64 stress test to validate the data.

The power consumption of our entire test system (at the wall) is shown in the chart.

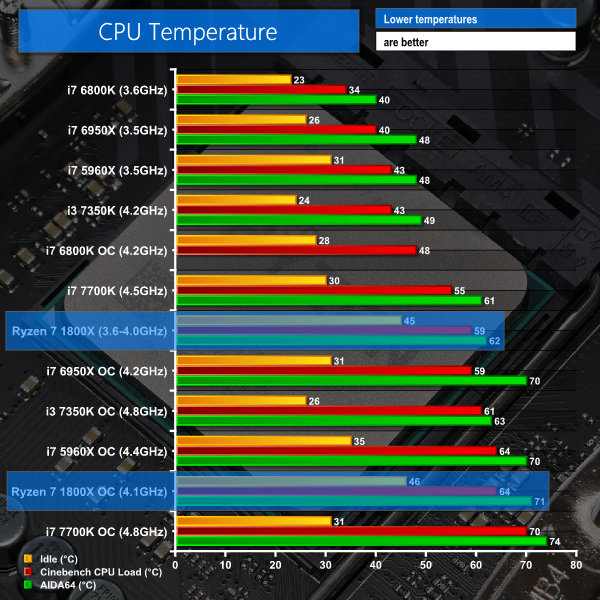

The same test parameters were used for temperature readings.

Power Consumption

Power draw readings are accurate to around +/- 5W due to fluctuations in the value even at sustained load. We use a Platinum-rated Seasonic 760W PSU and install a GTX 1070 video card that uses very little power.

The Ryzen 7 1800X CPU's power draw numbers are fine. It won't win any efficiency medals but it isn't going to ramp up your electricity bill or the thermal energy output into your room, either.

Overclocking the CPU forces a large jump in power drawn by the chip. This also stresses the VRM and can potentially make a more premium motherboard a worthwhile investment. Even when overclocked, the Ryzen 7 1800X draws less power than its performance competitor – Intel's Core i7-5960X, though this does depend heavily on the voltage applied for a given frequency.

Temperatures

Temperature recordings were taken using the Noctua NH-D14 for the LGA 1151 test system, Cryorig R1 Ultimate for the LGA 2011-3 system, and Noctua NH-D15 for the AM4 system. We use different coolers for speed purposes. Each CPU cooler's fans were running at full speed. Ambient temperature was maintained at 20°C.

Due to the use of slightly different CPU coolers, our temperature measurements should only be a guideline. Performance differences below around 3°C are small enough to say that cooling results were similar.

Update 17/03/2017: AMD has announced that Ryzen 7 1800X and 1700X have a +20°C temperature offset on the reading currently displayed by software, over the actual junction temperature. AMD is advising that 20°C can be subtracted from the reported Ryzen 7 1800X and 1700X temperature values to understand the actual junction temperature.
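
In practical terms, the correction is a simple subtraction. The sketch below applies the 20°C offset to the two models named in AMD's announcement. Treating every other model as having no offset is our assumption, and the names used are illustrative rather than taken from any monitoring tool.

```python
# Offset between the software-reported value and the actual junction temperature.
# Only the two models named in AMD's announcement are listed.
REPORTING_OFFSET_C = {
    "Ryzen 7 1800X": 20.0,
    "Ryzen 7 1700X": 20.0,
}


def junction_temperature_c(model: str, reported_c: float) -> float:
    """Return the estimated junction temperature for a software-reported reading."""
    # Assumption: models not listed above are reported without an offset.
    return reported_c - REPORTING_OFFSET_C.get(model, 0.0)


print(junction_temperature_c("Ryzen 7 1800X", 80.0))
```

A software reading of 80°C on the 1800X therefore corresponds to a junction temperature of around 60°C.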

Just like power draw, temperature readings are fine. You are unlikely to push close to the 95°C thermal throttling point when using a premium CPU cooler and sensible long-term voltage level. We could have perhaps pushed a little higher than 1.45V on the CPU overclock though this draws into question chip longevity.

Even when overclocked, the 1800X remained below 80°C throughout our testing when cooling it with the NH-D15.

Clearly the liquid metal thermal interface is functioning correctly in removing heat from the die.

AMD's Zen architecture is alive and it starts life in the form of Ryzen 7 by serving a heavyweight blow to Intel's prosumer HEDT platform. AMD's 8C16T Ryzen 7 1800X offers similar computational performance to Intel's 8C16T HEDT chip but at half the price. It destroys the 6C12T 6800K's computational performance for around 20% higher cost. Even after seeing the results, I am still trying to identify the catch with Ryzen 7 that indicates why it is priced so competitively. For a general prosumer who doesn't care for gaming, there simply do not seem to be any glaring weaknesses. Ryzen 7 redefines the term bang-for-buck in the high-end consumer processor market.

Computational performance of Ryzen 7 1800X is superb. Whether your workload is related to rendering, video encoding, media conversion, file archival, cryptographic operations, mathematical computation, or even simple old web browsing, Ryzen 7 has performance on tap. Rendering results in Cinebench put Ryzen 7 1800X not too far from i7 6950X performance but at less than a third of the price. The 8C16T 5960X, which retailed at $1000, needs an overclock to outperform AMD's new flagship, which itself can also be overclocked to jump back above the Haswell-E chip's performance.

Single core performance is also strong for Ryzen 7, though not to Skylake levels. In the single-thread Cinebench test, the 1800X offers comparable performance to a Broadwell-E processor when clock speed differences are accounted for. The improvement in single-core performance over Piledriver is more than 60% in Cinebench and roughly 50% based on Mozilla Kraken numbers.

There are, however, interesting discussion points relating to Ryzen 7 that could influence purchasing decisions. Intel's competing HEDT platform offers quad-channel memory and support for greater RAM capacity with currently available DIMMs. That could be an important factor for some prosumers, but for others it is simply the added expense of buying four memory modules rather than two.

Both platforms support ECC DDR4. PCIe connectivity is also more plentiful through LGA 2011-3 CPUs, which allows for more graphics cards or additional high-bandwidth storage interfaces. That said, if you are a video editor with one GPU and a single PCIe SSD, the AM4 platform can tick your boxes.

Then we get on to the topic of overclocking. With a 3.6GHz base clock, 3.7GHz all-core boost, 4.0GHz maximum boost, and 4.1GHz XFR frequency, out-of-the-box speeds for Ryzen 7 1800X are high, even compared to Intel competitors. However, whether due to binning strategy or the fact that a 14nm FinFET process is new to AMD's CPU team, overclocking performance is uninspiring.

You should be confident about hitting 4GHz on all eight cores and 4.1GHz is likely to be achievable if you are willing to push the voltage. It is not reasonable to expect clock speeds of 4.4GHz+ for 24/7 usage as we have seen on Intel's high core count Haswell-E CPUs in the past. With that said, Broadwell-E CPUs are far from good overclockers, so Ryzen 7 doesn't seem so red-faced with its likely 4.0-4.2-ish GHz capability.

Closely related to overclocking are temperatures. AMD uses a liquid metal thermal interface and the heatspreader is soldered to the die. Translating from engineering terminology, temperatures are good. At just below 1.45V with all cores enabled, we saw less than 80°C with a Noctua D15 air cooler running an extended bout of Prime 95 or Handbrake. Choose a slightly less demanding loading condition, such as AIDA 64 or Cinebench, and you can expect to stay below 75°C. There does not seem to be a need to de-lid the chip, though if you are feeling adventurous, der8auer has managed to de-lid a Ryzen 7 CPU without killing it.

Power draw is fine. The chip isn't an efficiency king but it's also not a power hog compared to its closest performance rivals. You shouldn't need to worry about investing in a new 750W+ PSU if you already have a good quality unit (depending upon GPU power draw).

Gaming performance is a disappointing aspect of the Ryzen 7 CPU. Despite its high clock speed and solid single-thread performance (shown by Cinebench), the CPU cannot compete with modern Core i7 CPUs in many games and sometimes gets beaten by a 4.2GHz Kaby Lake i5. To put this into perspective, all performance differences were identified using a Titan X Pascal and 1080p resolution with frame rates well above 60 FPS.

So if you game at 60 FPS (4K monitors don't go above 60 FPS yet) or don't have a card anywhere near as powerful as the Titan X Pascal, Ryzen 7 gaming performance is likely to be sufficient for your needs. However, if you enjoy high refresh rate gaming and like seeing consistent numbers above 140 FPS, there are better options than Ryzen 7 from Intel's Broadwell-E and Skylake/Kaby Lake product stack.

One point of contention when discussing gaming is the factor of spare CPU performance. Running your Kaby Lake Core i5 at 90%+ CPU load is fine if it delivers good frame rates. However, that scenario soon changes if you want to allocate resources to streaming on Twitch using OBS or you want to output the HD football match onto your second monitor.

While Ryzen 7 may not have been as fast as the 4C8T Intel i7 CPUs, it had significantly more processing power in reserve. That can be useful for streaming or background operations and it could also influence future multi-threaded game design if market conditions dictate.

To prosumers who simply want a high-performance CPU without pushing into four-figure dollar pricing, AMD has put forward a compelling option in Ryzen 7. Looking at the computational performance of 8C16T Ryzen 7 in a variety of workloads, one could convincingly say that AMD has wiped out the entire logic of Intel's Broadwell-E CPUs and LGA 2011-3 platform in all but a few niche scenarios.

Intel will need to adjust its current HEDT CPU pricing if it wants to remain competitive in the prosumer marketplace, as additional PCIe lanes and greater memory bandwidth alone are unlikely to justify such a significant price premium over AMD's offerings. Why spend $1000 on a Core i7 that offers basically the same non-gaming performance as a chip half its price?

Hats off to AMD; the chip vendor has hit hard in a market segment where many tech enthusiasts thought Intel commanded unchallenged – its prosumer HEDT platform.

The AMD Ryzen 7 1800X is available for £488.99 (at the time of writing) from Overclockers UK.

Pros:

- Superb multi-threaded performance at stock clocks.

- Boost frequencies help to enhance single-threaded performance.

- $499 (~£490) selling price (!).

- Soldered heatspreader allows for good cooling.

- Unlocked CPU multiplier.

- Overall cost can be low thanks to the AM4 platform scalability.

Cons:

- Gaming performance is not well suited for ultra high refresh rates.

- Some memory speed limitations – difficult pushing past 3.2GHz.

- Dual-channel memory and sixteen PCIe lanes for GPUs may be limitations to some prosumers.

KitGuru says: AMD has kicked through the front door of Intel's HEDT processor party and shown no mercy on the 6C12T and 8C16T chips. Retailing for £500 less than an 8C16T Intel chip which performs similarly makes AMD's Ryzen 7 1800X a relative bargain. We now turn our attention to the even more wallet-friendly Ryzen 7 1700X…

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Intel is dead.

AMD is back. Gonna grab 1700 with R9 Nano.

Interesting, although i admit to being disappointed with performance in the gaming arena. No faster than my overclocked 4790K. I’ll wait this one out for now or until Intel brings Kaby Lake priced down. the 7700K or 6800K may be a better buy with a price reduction…

It’s a very mediocre gaming chip, I’d be better off just moving up to a 4790K from my current 4690K on my Z97 rig rather going to all the hassle & expense of installing new CPU, Mobo & RAM.

Damn…

The Gaming performance is disappointing. :'(

Something just doesn’t seem right here. How can a chip score so well in benchmarks (both single and multi-core) and then do rather poorly in gaming performance? Is this an issue to do with the quality of how the games are programmed?

looks to me like a optimisation issue, Ryzen wasn’t out when those games were launched, also, the platform is very young etc, so looks to be something that will solve itself with updates to BIOS, drivers and windows, still don’t expect to see 1800x beating 7700k it will just more closely compete with the x99 6900k, 6950x etc. lower-end ryzen SKUs may have better single core performance with higher frequency (that’s a guess) translating into better gaming performance.

I mean DX12 should scale better with higher core counts shouldn’t it? but Ryzen looks to be doing even worse at DX12, that’s the main reason that I think there’s a lack of optimisation, not horsepower.

You are indeed, a kok.

From the wide array of tests I’ve seen in the last hour I wouldn’t recommend the 1800X for 1080p gamers unless they’re streaming or performing other CPU-intensive tasks. The 1800X is clearly perfectly competitive at 4k as you’re more GPU-bound, but at 1080p I think the 7700K is going to be better value for money, which is not something I thought I’d ever say about Intel. Intel have been on the top for too long. If game developers had had Ryzen for the last two-three years then AMD might be more competitive, but sadly they do fall behind in these gaming tests. Maybe the lower core models will be better options for gamers as theoretically the clock frequencies could be higher.

All those games will have been compiled using the Intel tool set, which will naturally favour Intel CPU’s.

Because AMD simply have not been competitive for the last few years in the CPU market, all the game development studios will be using Intel’s compilers, so it will hurt AMD gaming performance.

At least that’s what I suspect is going on.

That does sound about right because regardless of what benchmarks are used, across the board it hammers most CPUs, so to say that then translates into lower performance for games like Witcher 3 and GTA V does seem to be more of an optimisation issue than crap architecture.

Agreed.. 4790K + GTX 1070, I’m good for now.

Agreed. 100%..

But I’m sure AMD will beat Intel XD now that AMD is 1 step ahead… 😀

Can you do some gaming benchmarks while doing other background tasks like streaming? I wanna see how well it does for my next streaming build.

LOL of course this is right, why do you think AMD didn’t let anyone release reviews until the chip was actually shipping?

Fucking called it. AMD is on par with i7 cores of roughly same price range. Lacking in gaming power, but with great encoding points.

Thanks, but no thanks. My $210 (USD) i5 6500 is well within tolerances of price per performance.

AMD didn’t fail to let my mediocre expectations down. Maybe the next iteration of Ryzen 7 chips might be interesting to look at. For now, I’d still rather go with Intel if this is all that AMD’s top of the line can offer.

6/10 would definitely buy a 7700k before the 1800X.

It’s very early on for it to be fully optimized by their development team, but even as is, not at all optimized compared to now old to very old, highly optimized Intel flagship chips, I still think Ryzen is outstanding. We need more reviews from many sites to form a valid opinion, as everyone does testing differently for many reasons: some claim it can only push around 60FPS in some of the listed games while others are reporting the same games pushing north of 90-120. Titan X, though powerful, might be inducing some AMD hate-on 😛 Anyway, we shall see how the landscape changes in a few weeks/months once things have been polished and AMD’s various partners have had a chance to tune and optimize, and as the other variants come around.

For AMD to even be within spitting distance of the flagship or even midrange Intel-dominated space with Ryzen speaks volumes of Ryzen being very well engineered, compared to the previous offerings of many years now, which were at best another choice rather than truly competing 🙂

This is something I want to look into, though I’ll openly admit that I am not a game streamer and am therefore not proficient with operating (never mind testing with) OBS and the like.

If you have suggestions for the sort of gaming+streaming tests you’d like to see, please get in touch using the contact form (http://www.kitguru.net/contact/) and we can discuss some test procedures via email :).

Luke

DX12 benefits AMD graphics but hinders AMD CPUs? For the purpose of “shifting the load to the GPU” and “distributing load into many threads” it seems that DX12 failed here, miserably. Absurd stuff that seems to have gone unnoticed. I’m glad you didn’t neglect this issue like other reviewers (sadly some of them are big-name).

You don’t need 4K. All it takes is 1440P and the gaming performance gap closes to non-existent in most games. Look at the Guru3D review. All these reviews only showing 1080P with Titan Xs are doing Ryzen a HUGE disservice. No one plays games like that unless they are playing competitive CSGO or something. For the VAST VAST majority, Ryzen will offer the same gaming performance as Intel for practical purposes.

Only 4.1GHz at 1.44V? Damn, that’s gonna suck for games that require that single-core speed. My 7700K at 4GHz takes 1.03V at stock and runs great at a -0.1V offset, resulting in 0.93V at 4GHz, and much, much cooler.

These chips are great powerhouses but will suffer from that dreaded issue of single-threaded performance in games that don’t support multi-threading.

Half tempted to switch… almost… maybe a few years down the line when my 5.3GHz 7700K starts to struggle… if it ever struggles.

Learn to read – I wasn’t saying the results are a lie, but how it’s very bizarre that benchmarks put the 1800X at the top, whereas for games it’s at the bottom. It only makes sense if the games have been created focusing solely on Intel architecture.

Most games are ports from consoles, PS4 and Xbone run AMD hardware, so the guy’s assertion above that they are optimized for Intel is completely false.

So you reckon the PC ports aren’t optimised for Intel? They’re just “ported”? Stick to KFC m8, outta ur depth here.

Man, if it was a simple matter of bringing the game code to the PC, don’t you think there would be simulators for the PS3, PS4, Xbox 360 and Xbox One? It’s not that simple. The game IS INDEED optimised to run on PC, otherwise the port would just be BAD. Remember that last Batman game? That game wasn’t optimised for PC; that’s why it launched so badly on PC.

Also, again, we have lots of things not yet optimised for Ryzen. If you read other reviews like Gamers Nexus’s, you’ll see that turning SMT off gives a rather considerable performance increase (the same thing happened when Intel introduced Hyper-Threading), which means a future Windows/BIOS update will remove this bottleneck and free that performance for us even with SMT ON, plus other tweaks that will certainly come our way. Guru3D’s benchmarks are also interesting since they’ve tested 1440p as well.

More AMD hype, another failure. Games are 20 to 30% slower; this is not good.

AMD explains why Ryzen doesn’t seem to keep up in 1080p gaming

http://www.kitguru.net/components/cpu/matthew-wilson/amd-explains-why-ryzen-doesnt-seem-to-keep-up-in-1080p-gaming/

If AMD chips are exactly the same as Intel’s then I don’t see any fault in your statement. But the fact of the matter is, they are different. They may have similarities thanks to the cross licensing agreement but they’re both different architectures and will run differently from the other.

they do have a point though. 1080p is like the minimum resolution people play at now.

nonetheless, it’ll get fixed soon enough.

LOL I’m the one out of my depth? You’re the one that didn’t see this coming and you’re surprised grasping at straws as to why this CPU sucks. Just look at AMD’s history. Bulldozer optimized for integer and binary calcs and used clustered multi threading and got crushed on floating point, Excavator tried to fix that problem by using SMT instead of clustered multi threading but still failed miserably. Now we have a new CPU that guess what? Sucks at multi threading. Shocker that it works better with SMT disabled. LOL. You salty AMD fanboys are just hilarious. Hope you enjoy cinebench!

or maybe like, do encoding while gaming? just so we could see how it would perform under that kind of load.

in the Ryzen official launch, they had a demo called “Megatasking” or something like that where they would run multiple benchmarks at a time. though they’re all productivity benchmarks, we’d like to see some with gaming.

It seems to me there is some issue at 1080p; maybe it can be corrected with a software update.

At 2560×1440 resolution everything is alright.

Lol magic port button. Doesn’t exist. Go back to Wendy’s bruh.

Yes, but they don’t do it with a 1080 or Titan like the reviewers are using. They do it with 1060s, 1050s, 460s, 470s, and 480s, which again will make a GPU bottleneck. In any realistic use case for 99% of people it will be the GPU deciding the FPS, not the CPU.

Someone is butt hurt because they don’t know wtf I just said

Says the burger flipper who thinks there’s a magic port button. Salty logic bruh.

LOL, you’re just salty AMD couldn’t come out with a decent CPU and your mom can only afford a Ryzen 1700 which is garbage. Good luck with your “optimizations” just like bulldozer right? HAHAHAHAHAHAHA

AMD said they’re working on Windows drivers that they hope to release next month. They are said to improve gaming performance specifically.

The Nano is so sexy.

Facepalm.

I’ll probably wait a month or two before making my own assessment. By then, the hype surrounding Ryzen will have subsided. What I’m more interested to see is a compatibility test for Ryzen on Windows 7, seeing as AMD isn’t keen on supporting it. Hoping for the slim chance that KitGuru will do one soon.

The link gave me error 404 🙁 I would suggest doing a live stream using Twitch of a game like For Honor or Battlefield 1 at 1080p/1440p. Do the same for Intel’s Kaby Lake i5 and i7 and then of course throw in a Broadwell-E. We may also need to see a dual-screen setup, playing an HD YouTube vid with 2-3 tabs for, like, tech sites on one screen while gaming/streaming on the second. Also try to clock all CPUs to something like 4GHz so we can see clock-for-clock performance along with stock clock comparisons. Thanks!

This is one of the better reviews I’ve seen… But all DO point to an optimization issue with AMD’s SMT implementation… Some games show higher perf without SMT turned on…

But I will give up a few fps for the scientific and compiling level of work… I guess we’d need to see execution traces to tell where it’s bottlenecking… But it SEEMS like they are trying to load the second thread and it takes too long to return…

I watched a video of gaming which showed thread number two barely getting any work…

As close as it is and the way it performs at 4K, once they get the threading model right in game engines it may get another 10-20% of perf… This review makes it clear that there is plenty of CPU % left on the table…

solution: Offer the R7 1850x, clock it at 4ghz, and sell it at $375. Boom

Sorry, but my 6800K runs 4.1 GHz @ 1.199 V, 100% stable. How much voltage does the destroyer 1800X need @ 4.1 GHz? Heat and power consumption do count, mind you.

lol pathetic! 4.1 max overclock? While you can get a Skylake that is much cheaper now and go all the way to 4.5GHz easy

http://www.kitguru.net/contact/

When I noticed that EVERY review site only listed 1080p I thought to myself something was WRONG here. There is certainly something going on here most likely and I am glad other people are seeing it too.

I think Intel is playing dirty games with enthusiast data and offering incentives for unfavorable / misleading reviews. It is just a little too convenient that all the sites list only 1080p…

We know a ton of people using high refresh monitors, and we have reviewed plenty of 120Hz and 144Hz panels, so those ultra high frame rates are important to get the most from those screens. 1080p is still the most popular resolution in our polls. We even have a new 240Hz panel from ASUS in our labs to test!

We also showed 4K resolution, which is a good indication of GPU limiting. It’s not like we downscored the processor for the 1080p gaming results – it won our highest award as we think this will improve over time. There is simply not enough time before these reviews to run multiple games at every resolution on many GPUs. Simply leaving out 1080p testing with a powerful GPU would be sloppy work for any professional review site; we are here to find problems with any product and report on them, not hide them behind limiting situations. AMD have even answered the issue publicly: http://www.kitguru.net/components/cpu/matthew-wilson/amd-explains-why-ryzen-doesnt-seem-to-keep-up-in-1080p-gaming/

1080p is still by far the most popular resolution for PC gamers: (http://store.steampowered.com/hwsurvey/?platform=pc). Granted it is unlikely that people using such high-end hardware will be gaming at 1080p but they can be using any number of resolutions – 1080 ultrawide, 1440p, 2560×1600, 1440 ultrawide, 4K UHD, 5K, triple screen. We simply cannot test all of those eventualities so sticking to the ‘industry standard’ 1080p resolution makes sense, while also adding in 4K to show performance in a GPU-limited gaming scenario.

The reason a Titan XP is used is because testing hardware is all about eliminating bottlenecks. We could have used an RX 480, or any other GPU from our stack, but that doesn’t do any good because the GPU will be limited before CPU limitations come into play. The Titan XP and fast RAM put the performance onus solely on the CPU so that we can observe its performance and analyse it. That’s what our testing allowed us to do and, as written in the review, if you don’t want to game at high refresh rates above 60FPS, Ryzen is a perfectly capable CPU. If you game at a resolution, or with a GPU, where you can’t even get anywhere close to more than 100FPS, Ryzen is a perfectly capable CPU. If you game at 120FPS+ high refresh rates, Ryzen can start to show some issues against Intel’s competitors. 1080P allows us to show that as GPU power is in such excess at the resolution and it’s down to the CPU to keep pace. This logic is the same across other resolutions if it is only enhanced GPU power required to operate at those higher resolutions (not like some open-world games where a higher resolution means more on-screen action and possibly more NPC or background physics calculations).

As we showed with 4K, Ryzen will keep pace in GPU-limited scenarios. That point scales – if your gaming resolution is highly GPU-limited and less than 100FPS, Ryzen looks good. If your GPU isn’t as fast as a Titan XP and you cannot get anywhere near 100FPS+, Ryzen looks good. 1440P strikes a middle ground between 1080P and 4K, but it won’t be entirely GPU-limited if you are pushing past 100FPS in some games. I use a 3440×1440 100Hz monitor myself and therefore made interpretations as to Ryzen’s performance for my own gaming PC. The 1080P result for GTA V (my favourite game) showed Ryzen hitting less than 100FPS average. At 1440P there will be higher GPU load, which will reduce the performance difference between CPUs, but what if you simply want to throw more GPU power at your gaming PC (not unreasonable when spending £1k on a high-end gaming monitor)? You are still going to be limited by the pace set by the Ryzen CPU in that case. If I want to play GTA V at 100Hz, the 1080P test data in this review showed that Ryzen is going to struggle more than some modern Intel competitors, even if I add more GPU power.

I want to do some more gaming-focussed testing over the coming weeks/months with Ryzen so that I can test more of these scenarios that gamers are curious about (1440P, more 4K, less GPU power, more GPU power through XFire/SLI, etc.). As it stands, this review contains more than four months’ worth of gathered, validated, and re-validated test data, so adding 1440P resolution (as well as 4K, which I specifically added for this review) would have simply been impossible in the given timescale.
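
For anyone who prefers to see the bottleneck reasoning above written down, here is a rough sketch in Python. It assumes a deliberately simplified model in which the delivered frame rate is just the lower of what the CPU and the GPU can each sustain. The function names and every number in it are hypothetical placeholders for illustration, not measurements from this review.

```python
def delivered_fps(cpu_fps_cap: float, gpu_fps_cap: float) -> float:
    """Simplified model: the slower component sets the frame rate."""
    return min(cpu_fps_cap, gpu_fps_cap)


def limiting_component(cpu_fps_cap: float, gpu_fps_cap: float) -> str:
    """Name whichever component is holding the frame rate back."""
    if cpu_fps_cap < gpu_fps_cap:
        return "CPU"
    if gpu_fps_cap < cpu_fps_cap:
        return "GPU"
    return "balanced"


if __name__ == "__main__":
    # Hypothetical caps, NOT review data: the frame rate each CPU could
    # feed to an infinitely fast GPU in some game.
    cpu_caps = {"CPU A": 95.0, "CPU B": 130.0}
    # Hypothetical caps, NOT review data: the frame rate one GPU could
    # render at each resolution with an infinitely fast CPU.
    gpu_caps = {"1080p": 200.0, "1440p": 140.0, "4K": 60.0}

    for resolution, gpu_cap in gpu_caps.items():
        for cpu_name, cpu_cap in cpu_caps.items():
            fps = delivered_fps(cpu_cap, gpu_cap)
            limit = limiting_component(cpu_cap, gpu_cap)
            print(f"{resolution:>5} | {cpu_name}: {fps:.0f} FPS ({limit}-limited)")
```

With these made-up numbers, both CPUs land on the same figure at 4K because the GPU cap is below either CPU cap, while at 1080p the gap between the CPUs is fully exposed. Real games are messier than a single `min()`, so treat this as an illustration of the argument only.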