Power

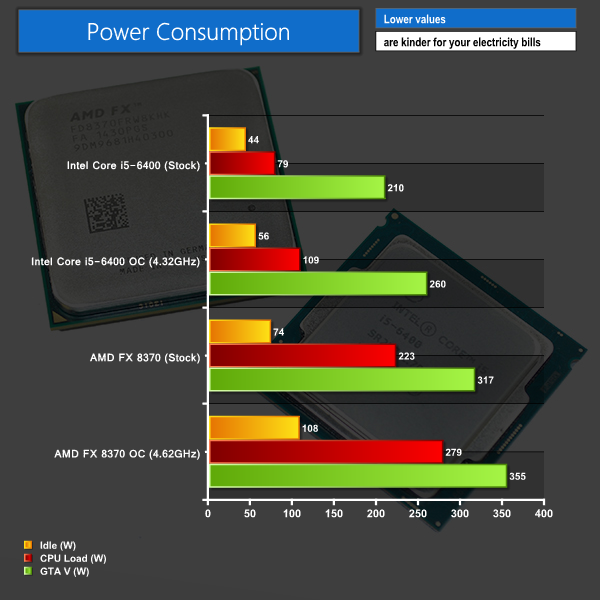

We measure the power consumption with the system resting at the Windows 7 desktop; these are our idle values.

The power consumption of our entire test system (at the wall) is measured while loading only the CPU using Prime95's in-place large FFTs setting. The rest of the system's components are left in their idle states, so the increase in power consumption (compared with the idle figures) is largely attributable to the load on the CPU and the motherboard's power delivery components.

We also record power draw at the wall while the final car chase section of GTA V's benchmark is running. We pick this section because it consistently places the highest CPU load of the entire benchmark.

Power consumption is one key area where Intel is leaps and bounds ahead of AMD. If it were possible, you could throw a second overclocked Core i5-6400 into the Z170-based system and its Prime95 power draw would still be lower than that of a single AMD FX 8370-based system.

This chart pretty much single-handedly shows the benefit of Intel's newer CPU design (which puts emphasis on reduced power draw) and the two-generation advantage of Skylake's 14nm process node, compared to Vishera's 32nm.

Despite offering significantly higher performance in GTA V, both the stock and overclocked Core i5-6400 configurations draw less power while running the benchmark. The power usage differences are clear as day on a chart, but this should be put into perspective.

Based on the numbers above, if you play GTA V for 10 hours per week, the overclocked Intel Core i5-6400 system will save you about £7 on your annual electricity bill compared to the 4.62GHz AMD FX 8370 system. That annual cost saving jumps to £13 if you spend the same amount of time running your overclocked CPU at full tilt.
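The savings arithmetic is easy to reproduce for your own usage. The sketch below is a minimal calculator, assuming a flat electricity tariff; the function names and the £0.15/kWh tariff are illustrative assumptions rather than the figures used for this review, and the wattage gap has to be read off the power chart.

```python
# Hypothetical helpers: annual cost of an extra power draw at the wall,
# assuming a flat per-kWh tariff (real tariffs vary by supplier and time of day).
WEEKS_PER_YEAR = 52


def annual_cost_gbp(watts_delta: float, hours_per_week: float, tariff_gbp_per_kwh: float) -> float:
    """Yearly cost in pounds of drawing `watts_delta` extra watts."""
    kwh_per_year = watts_delta / 1000 * hours_per_week * WEEKS_PER_YEAR
    return kwh_per_year * tariff_gbp_per_kwh


def implied_watts_delta(saving_gbp: float, hours_per_week: float, tariff_gbp_per_kwh: float) -> float:
    """Invert the calculation: which wattage gap produces a given annual saving?"""
    kwh_per_year = saving_gbp / tariff_gbp_per_kwh
    return kwh_per_year * 1000 / (hours_per_week * WEEKS_PER_YEAR)


# 10 hours per week and a £7 saving come from the text; the tariff is assumed.
gap = implied_watts_delta(7, 10, 0.15)
print(f"~{gap:.0f} W gap for a £7/year saving at £0.15/kWh")
```

At the assumed £0.15/kWh, the £7 figure would correspond to a gap of roughly 90W while gaming; a different tariff scales that estimate proportionally.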

You may notice that the power draw delta between CPU load and GTA V differs between each chip's stock and overclocked configurations. This is because the overclocked CPUs bottleneck the power-hungry GTX 980 Ti less, allowing it to better flex its muscle (and power connectors).

Thermal Performance

As already mentioned, gathering temperature numbers from the Intel system is impossible due to the use of an 'unofficial' non-K BCLK overclocking BIOS. As such, we will focus on the thermal performance of AMD's new Wraith CPU cooler when used on the FX 8370.

We record the TMPIN1 reading in HWMonitor, as this tends to indicate CPU temperature on a Gigabyte AM3+ motherboard. The CPU fan is left at its default PWM setting for stock-clocked testing and set to a more aggressive fan-speed curve when overclocked.

- AMD FX 8370 @ Stock: Idle temperature = 28°C. Load temperature = 45°C.

- AMD FX 8370 @ 4.62GHz, 1.425V, Medium LLC: Idle temperature = 32°C. Load temperature = 53°C.

AMD's Wraith CPU cooler is a decent unit that is capable of cooling an 8-core chip even when a voltage boost is applied. The stock-clocked load temperature is achieved with a fan speed of around 2100RPM, at which point the cooler was clearly audible and somewhat intrusive. When overclocked, the fan quickly ramped up to almost 3000RPM to cool the chip.

Did we feel that the Wraith limited our overclocking potential? Somewhat. While the CPU cooler was able to keep temperatures in check, we would have liked to increase the voltage to see if higher core speeds were achievable. Judging by our testing, a load voltage of 1.428V is about as high as the cooler could handle. AMD's chips, however, can be pushed past 1.45V with reasonable 24/7 stability.

If around 1.425V is as far as you plan to push your CPU, then the Wraith cooler is fit for the job. But if you plan on running a higher CPU voltage for long-term use, it would be wise to invest in a better cooler. It's worth noting that the FX 8370 with the old reference cooler should retail for slightly less, with the new Wraith-equipped bundle taking over the previous price point.

At 2400RPM (stock-clocked heavy load) the cooler's noise output was around 43dBA. With an overclock and load applied, a 2600RPM fan speed resulted in a 45dBA noise output. Running Handbrake when overclocked produced the highest noise output, a lofty 47dBA.
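To put the jump from 43dBA to 47dBA in context: decibels are logarithmic, so a level difference of ΔL dB corresponds to a sound-power ratio of 10^(ΔL/10). A minimal sketch of that conversion follows; it assumes the three readings were taken the same way (same meter, same distance) and says nothing about how much louder the cooler is perceived to be.

```python
def sound_power_ratio(level_a_db: float, level_b_db: float) -> float:
    """Sound-power ratio of level_b relative to level_a (+10 dB = 10x)."""
    return 10 ** ((level_b_db - level_a_db) / 10)


# A-weighted noise readings quoted in the text above.
readings = {"stock load": 43.0, "overclocked load": 45.0, "overclocked Handbrake": 47.0}
baseline = readings["stock load"]
for label, level in readings.items():
    print(f"{label}: {sound_power_ratio(baseline, level):.2f}x the stock-load sound power")
```

By this measure the Handbrake reading carries about 2.5 times the (A-weighted) sound power of the stock-clocked load reading.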

If you're looking for quiet operation under load, you'd be wise to invest in a different CPU cooler. But if you are more concerned with cooling performance than noise output, AMD's Wraith does a good job of cooling an overclocked FX 8370.

KitGuru KitGuru.net – Tech News | Hardware News | Hardware Reviews | IOS | Mobile | Gaming | Graphics Cards

Not bad for an old CPU. When you compare them by price point, AMD isn't really far behind at all. They just don't have anything that competes with the i7s.

The issue is that AMD are miles behind in terms of efficiency and chipset. Unless they can offer like-for-like performance with gaming, no one will go with them.

People do buy AMD CPUs to game. Where is this “no one will buy them” coming from? Plenty of people have AM3+ motherboards they want a bit more life out of, and the FX 8370 + Wraith is a nice upgrade from, say, an FX 4150.

I would like to see the power draw on the AMD cpu while playing a game that properly utilizes the AMD cores. GTAV isn’t the best engine to fully realize the power of an AMD CPU.

We have no idea what their new chips will bring to the table. I really do hope they are able to compete with Intel.

Witcher 3 does it, especially in heavy draw-call areas like cities and densely populated towns (e.g. Novigrad). BF3/4 offers plenty of CPU load in active 64-player MP sessions; however, such tests are not very repeatable in BF3/4 MP compared to Witcher 3. You can see in this review the AMD CPU delivering better minimum frame rates in such Witcher 3 scenarios than even a heavily overclocked Skylake i5.

Still, there are far too many games out there that rely on raw per-core IPC rather than efficient multi-threading. Zen can't come soon enough; even somewhere near Haswell-level IPC is more than enough to attract buyers when AMD will obviously price them much cheaper than Intel's offerings.

$250-300 16 core Zen fully unlocked CPU, what's not to like, eh?

That's not gonna happen. It'll be priced according to its performance once it's widely available. Before that, it'll be priced higher than its performance warrants.

Fiji's shortage scenarios don't apply here; GloFo yields are excellent, contrary to what some might claim. AMD is in no position to charge the margins Intel commands on its lineup. AMD is aiming to grab market share rapidly, so even if the processor turns out to be a stellar Skylake beater (unlikely), the pricing will still be aggressive in order to win back market share quickly.

Trying to fight it out with Intel at comparable prices is foolhardy, and they realize this more than anyone else.

An FX 8370 goes for $189 on Newegg. A top-line Zen CPU at $300 is already a high enough price for launch, and if it is that good then even $350 makes it very competitive price-wise against anything Intel has now or will have in the future.

People naively think that if AMD beats or matches Intel's performance, they have an automatic right to charge as much as or more than Intel charges. Market factors don't respond that way, and AMD knows its place in the market well enough not to do that given its financial situation. The motive is to be profitable within this year, not to compete with Intel on margins. Smaller profits will do; a profit nonetheless. What if it doesn't match Skylake and only barely comes close to Haswell? Then they can't charge anything more than $300 to be attractive today, let alone by the end of this year.

Like I said, AMD doesn’t have to beat Intel, just come close enough to be a serious alternative at a much cheaper price range.

But as the review shows, anyone who buys an AMD to game bought badly compared to buying the equivalent Intel proc. Just stump up the money and buy a new mobo at the same time and as the charts show, you will gain fps at a cheap price.

A 40% IPC increase over XV makes Zen close to Haswell. I don't see any reason why an 8-core Zen should not be around the price of a 6-core Intel. Something like $400ish. The cheapest Intel 6-core is $385.

http://www.newegg.com/Product/Product.aspx?Item=N82E16819117402&cm_re=6_core_intel-_-19-117-402-_-Product

AMD itself says mass availability will be in 2017. So in 2016 price should be higher. Also if frequency is lower in the GF node, price would be adjusted likewise.

The 40% IPC over XV is based on the traces AMD ran, and apart from them no one else knows what those traces are. The 40% increase is meaningless without knowledge of the traces the simulation team ran. Every company has its own idea and methodology as to what these traces should be. When AMD made claims back during the Bulldozer launch they weren't lying; they just happened to have traces that don't conform well to mainstream software behavior. Apparently they bet on something heavy that didn't turn out how they expected.

People should be wary of taking AMD's 40% IPC claims at face value when they have no real context for the benchmark traces AMD ran internally.

Let's just assume, for the sake of argument, that AMD matches Haswell or thereabouts. By the time they launch, Intel will have Skylake-based 6-8 core HEDT 130W models for the Q3/Q4 2016 timeframe. $400 for AMD's latest that just matches Haswell, when Intel has Skylake-based HEDT replacements from the same $380-400 mark upwards: who do you think people will prefer when they are already spending that kind of money? Yeah, Intel.

http://static4.gamespot.com/uploads/scale_super/92/929129/2864691-4045566372-45110.jpg

When money is no object, the uber enthusiast doesn't care for the cheaper option that comes in at 80, 85 or even 95% as fast as Intel's offering; they just want the fastest option outright. Logic doesn't matter to such consumers; even that 5-10% increment is the halo for them, enough to justify an Intel purchase. Not to mention the hordes of review sites that will be putting out rose-tinted reviews in Intel's favor; oh yes, that is a reality even if one chooses to ignore it. You influence the elite consumer and that trickles down to the average consumer who doesn't care for detailed reviews. “Who is in the outright lead? I'll buy their stuff,” comes the answer from the average Joe. This is the market reality. If AMD wants to charge as much as Intel, or what they perceive as their product's true worth, then they are going under, and fast.

Like I said if they really want to match Intel for price they need a Skylake beater by a wide margin, then they can begin to charge higher rates.

A bunch of nerds on sites like these can't help AMD's bottom line if they started pricing their CPUs at their real worth. Market realities are different. AMD is seen as a cheap, low-performing alternative in the eyes of millions; you or I putting out hundreds of comment posts showing AMD's virtues won't change that mindset. Many times people go with Nvidia GPUs that are well beyond their budgets just because they fear AMD “overheats”, is a cheaper alternative, is the poor man's option. You see, this FUD spread by the likes of Intel and Nvidia has done a dandy job of ruining AMD's reputation for the long term. It will take consistent and persistent clever pricing or outright engineering to overcome this perception.

Thankfully AMD is no longer as naive, they will price it aggressively.

I just like to go AMD to fund them to keep them in the battle vs Intel. If Intel ever becomes a full monopoly, PC gaming will be hurting.

I don’t know about 16 core, but 8 core, 16 threads? Sure.

Yeah, I am talking about the 16T/8-core option. Damn, I realize now that my first post says 16 core. That was an oversight while I typed something up quickly on my phone.

I can see why the other guy might have seen it as absurd to charge just $300-350 for a 16-core/32T option.

I don't expect the average Joe to help AMD. The main money will come from servers. Sure, there will be people who choose AMD, but they'll be few and far between. And it's my personal view that AMD should price it a little below its performance: not so low that it hampers their profits, nor so high that people flock to Intel.

Didn’t you read the article? AMD does offer like-for-like performance in some games. Frankly, new games are only going to get more multi-core optimized, not less, so in that respect, the FX-8370 seems like it’s only going to get better and better vs. the 4 core i5.

Wishful thinking. History has demonstrated that when AMD can beat Intel, their prices are higher. When they’re equal, their prices are equal, etc.

AMD’s pricing for Zen will be entirely based on its relative performance. If it’s faster than Intel’s best, it will cost more than Intel’s best. If it’s equal, it will be equally priced. Only stupid people pay more for Intel just because they’re Intel if they’re slower.

Pretty obvious which titles were AMD optimised (and Intel optimised… Maybe just “optimised”?).

It's more complex than “more corez!”. The issue AMD face is that Intel can push through almost twice as much info per core as AMD, so even though they have fewer cores, they have a higher throughput of info. It's all to do with the core style of the architecture, and sadly AMD chose wrong. There are some gnarly wiki pages explaining it which are a good but hard read.
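The fewer-faster-cores versus more-slower-cores trade-off in this comment can be illustrated with Amdahl's law. This is a simplified model, not a measurement: it takes "almost twice as much per core" as an exact 2x, ignores clock speed, memory and SMT/CMT effects, and the parallel fractions are hypothetical.

```python
def relative_throughput(per_core_speed: float, cores: int, parallel_fraction: float) -> float:
    """Amdahl's law scaled by per-core speed.

    The serial part of the work runs on one core; the parallel part is split
    evenly across `cores`. Returns work completed per unit time, relative to
    a single core of speed 1.0 running the whole job.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    time = (1.0 - parallel_fraction) + parallel_fraction / cores
    return per_core_speed / time


# Hypothetical chips: 4 cores at 2x per-core throughput vs 8 cores at 1x.
for p in (0.5, 0.8, 0.95, 1.0):
    quad = relative_throughput(2.0, 4, p)
    octa = relative_throughput(1.0, 8, p)
    print(f"parallel fraction {p:.2f}: 4 fast cores {quad:.2f}, 8 slow cores {octa:.2f}")
```

Under these assumptions the eight slower cores only draw level when the workload is perfectly parallel, which is why lightly threaded games favour the design with faster individual cores.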

Depends on what resolution you game at. If you game at ultra settings at 1440p then cpu matters very little.

Your reply reminded me of something, maybe off topic.

I know one thing for sure, based on conversations with both current and former AMD employees whom I am connected with professionally: this time AMD's Zen development direction was driven entirely by high-end server customers. The reasoning behind this (based on their opinions, not mine) is that AMD still sees the client segment (meaning our DIY and OEM desktop market) as not a good enough reason to return to HEDT and the mainstream client segment to compete with Intel. I don't doubt them; looking at declining PC sales, it certainly is a volatile market that AMD cannot depend on much, and the rise of the gaming PC is simply not enough to cover the R&D costs of such an undertaking. Of course Lisa Su says otherwise publicly, but internally they have decided to return to the high-performance CPU market simply because they have confirmed interest from HPC and other server clients, provided AMD meets target performance metrics. This means specialized instructions added to the ISA to serve those special-interest customers, a uArch tailored to their workloads, and specific power envelopes targeted. This is all well and good for the targeted customers, but here is where the danger of expectations lies for the client side.

Their 40% IPC claim is unclear in terms of which traces they ran; whether they were consumer-oriented or server-customer-oriented is anyone's guess. There may be reason to believe they were customer-oriented trace benches, because some other slides have been floating around that claim “greater than 40% IPC”, and an interesting thing to note is that those slides were targeted at high-end server customer meetings. This is plausible because server-oriented tasks can perform very differently, and the IPC gains, when married with specific instruction and uArch implementations, can be much higher. Now, if those slides were bogus and AMD is making a claim based on their server-oriented traces, then that 40% IPC is worrying; in most cases such traces imply even lower IPC for mainstream applications and games. Also take note of the words “up to”: they are not talking about the average IPC across their traces, just the best-case scenario. To be fair, AMD could also deliberately quote ultra-conservative numbers from conservative trace runs, in which case that 40% IPC starts to look better, as there is more of a chance of much higher IPC in today's applications and games. But given the handling of the Fiji XT launch with the “overclocker's dream” and “world's fastest card” claims, there still seems to be a tendency to slightly exaggerate the true state of affairs. Granted, Fury X is faster than the 980 Ti/Titan X at 4K in more than a few games, but that's really stretching it when performance clearly falls short of the 980 Ti and Titan X below 4K. Based on their recent history, I would honestly lean towards the possibility that those 40% IPC claims are slightly embellished, in that they are playing with unknown trace runs.
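The gap between an "up to" figure and an average can be made concrete. The sketch below aggregates per-trace IPC gains with a geometric mean, a common way benchmark suites combine ratios, and compares it with the best single result. The four per-trace speedups are invented for illustration and do not reflect any AMD data.

```python
from statistics import geometric_mean

# Hypothetical per-trace IPC ratios (new core / old core); NOT real AMD figures.
trace_speedups = {"trace_a": 1.40, "trace_b": 1.10, "trace_c": 1.25, "trace_d": 1.05}

best_case = max(trace_speedups.values())
average = geometric_mean(list(trace_speedups.values()))

print(f"'up to' figure: {best_case - 1:.0%} more IPC")
print(f"geometric mean: {average - 1:.0%} more IPC")
```

With these made-up numbers, an honest "up to 40%" headline coexists with an average gain below 20%, which is the ambiguity the comment is pointing at.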

There is another dimension to the server-side interest from their customers: there is actually no real need to have performance that matches or rivals Intel's across the board. As long as perf/watt targets are met for those very specific workloads, the customers are more than happy to have a cheaper solution that forgoes many other areas where Intel might be stronger. This saves logic development effort and therefore die real estate (a smaller transistor budget, i.e. less hardware). The focus is on the customer's specific requests. For the areas where their Zen cores fall short, they want to push their Zen-based server APUs with the GPGPU compute side of things.

TL;DR: AMD is confident of Zen for the market they are really targeting, high-end servers for their special-interest customers. The performance there might not translate well to the client side, and that's where the danger lies in terms of expectations.

When Suzanne Plummer says the performance will certainly be better, I believe her; this will not be another Bulldozer, period. But some of the expectations out there among client-side consumers about how much of an improvement it will be compared to Intel's leading offerings may be misplaced.

Me personally I am all set to build a Zen based system by the end of this year, I am not overly concerned over the few fps I may or may not lose to Intel’s leading chips when it comes to games.

I have never anywhere in here said AMD hasn’t ever beaten Intel on pure performance.

The rest of your post about pricing I have already addressed my points in posts above to others so no point in rehashing them, I see it entirely differently and for good reasons as I have explained above.

One aspect I will address here is your implication that nothing says AMD can’t beat Intel again like they did in the glory days of the Athlon/Opterons. If we are talking mathematical probability, of course there exists a mathematical probability that AMD launches a stonking good killer core based CPU again and have Intel by the jugular but in reality today this probability is exceedingly small and therefore unlikely.

Here's why: Intel, AMD, Nvidia et al. have R&D budgets, sure, but how that budget is spent is very different depending on how much they have available to spend. Intel has a huge budget, and when they say they spent x amount on R&D, a good portion of this is not for in-house R&D; it actually goes towards academia: mentoring professors, postdocs, PhD candidates, master's students and bachelor's students. A company like Intel invests heavily in them at multiple universities every year. These academics are the ones who come up with most of the key research, and Intel's exclusive rights to that work, in the form of patent ownership, come by way of its funding contributions. The academics are free to have their names on the patent filings and journal publications, but ultimate ownership and usage rights lie with Intel. This is why several key innovations are in Intel's chips and not found in rival solutions from the likes of AMD. AMD too has similar programs in place, but since their budgets are much lower, and given current cutbacks in R&D, most of their R&D is in-house with a small portion going towards academia. I know AMD spends a good deal of what little they have at the University of Toronto, for example.

Most of these academic students (sometimes even the professors themselves) who complete the research are absorbed by the funding company and put in charge of leading that research into commercialization within the company. For example, the University of Wisconsin – Madison is world famous and a top university when it comes to pure micro-architectural research. Their contributions to the field are enormous. Professor Gurindar Sohi's PhD students, for instance, have contributed a lot to the current-day Intel Core family of processors; there were even a number of legal wranglings between the university and Intel over some of the patents.

Today, when you are not able to have a lot of funded academia working on your areas of interest, it is tough to compete in terms of innovation. AMD surely can have a stroke of genius from their in-house staff and their own R&D and come up with a world-beating core design; really, this is AMD's main strength at the moment. But in practice, AMD's top staff are limited in number compared to the army of researchers working on Intel's research interests every year, not including Intel's own in-house efforts. It is a simple case of numbers: Intel has far more brilliant minds at its disposal than cash-strapped AMD. There surely is nothing stopping AMD's limited world-class researchers from beating Intel's combined research efforts; it's just highly unlikely.

I know the interview processes at Intel and AMD, and in general I can confidently say AMD's is more rigorous for almost all levels of their engineering segments. This implies that a good percentage of AMD's employees, if not all, are some of the best minds in the industry, but their numbers are simply not enough compared with the resources Intel has at its disposal. When job cuts are announced, AMD is really letting some of the cream go to rival firms; it is truly a pity it comes to that so often these days.

There is no chance they will be able to as they are so far behind intel.

Like for like, apart from the fact it's hotter and far more power hungry, yet costs the same…

People are also idiots. The AMD CPUs are inferior in every way to their intel counterparts – just look at the chipset differences.

That’s wrong because the kind of GPU needed to game at those settings could easily get bottlenecked by a bad CPU.

There is certainly a chance and what they’ve shown so far shows promise. Intel haven’t exactly been innovating at maximum speed. They have slowed down to pretty lame gains each year because they have a monopoly on the high end right now. The FX 9590 is only around 20-30% behind the 6700k. And the new AMD chips are said to have 40% better performance per clock cycle along with probably more cores than Intel. Do the maths and you can see that the new chips should put them right back in the race.
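The "do the maths" step can be written out. This back-of-envelope sketch multiplies the quoted 20-30% deficit by the quoted 40% per-clock gain. It assumes equal clock speeds and that the 40% applies directly on top of the FX 9590, neither of which the comment establishes; AMD's figure was quoted against Excavator (XV), not the FX 9590's Piledriver cores.

```python
def projected_relative_performance(deficit: float, per_clock_gain: float) -> float:
    """Performance relative to the Intel chip (1.0 = parity).

    Assumes the new chip runs at the same clock as the old one and that
    the per-clock gain translates one-to-one into delivered performance.
    """
    return (1.0 - deficit) * (1.0 + per_clock_gain)


for deficit in (0.20, 0.30):
    ratio = projected_relative_performance(deficit, 0.40)
    print(f"{deficit:.0%} behind today -> {ratio:.2f}x the 6700K after a 40% gain")
```

On those assumptions the projection lands between roughly 0.98x and 1.12x, i.e. around parity; a lower clock speed on the new chip would pull it down proportionally.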

A year from now all those Intel processors are going to be priced south of where they are today. Intel has an inventory problem with Broadwell. They built too many which led them to stall the skylake rollout and blame the shortages on demand.

Two years of dropping PC sales has finally caught up with Intel. Their first reaction was to push out their future product release cadence by six months, future node advancements have been moved to three years from the two year goal in the past. Their discounts to OEMs appear to already have been increased as HP, Lenovo are both offering machines with $300 Intel processors at the same price point as $150 Carrizo machines from AMD.

The last and most obvious price move they will make is in the direct-to-consumer market. If the PC market somehow stabilizes or, heaven forbid, grows this year, Intel may be able to maintain pricing power in the consumer market. However, if the PC market continues to decline you will see more affordable Intel chips during the year. Intel, having its own in-house fabs, has to do all it can to keep volumes up so that its per-chip costs stay flat. Should falling volumes push fab utilization rates low enough, margins suffer; then again, price cuts lower margins too.

Fortunately, Intel saw this coming and changed their depreciation method from four years to five years effective this year. This little accounting trick adds $1.3 billion to margins for 2016. For the sake of Intel’s share price they better hope that was enough to hide the margin erosion they are surely going to suffer this year.

Wrong, an FM2+ Athlon X4 860K can power a Fury Nano at 4K just as well as an i5. There is no reason to spend more than $200 on a CPU to game.

Looking, and I own both brands. You sound like a paid advertisement.

Right, even though the review proves my point. Dumbass.

Where’s the benchmarks for that?

You’re barking up the wrong tree if you think AMD will overcome years of failure with one new chip.

Just look up what gaming at 4K does to a CPU: absolutely nothing. Resolution doesn't affect a CPU; the extra load is put 100% on the GPU, so an Athlon X4 with a high-end GPU will do 4K as well as most CPUs in games that are optimized, anyway. For example, at 4K my AMD FX 8350 is the same speed as my i5 6500 with my Nitro OC. No difference, because the GPU becomes the bottleneck.

Woah, barely, brother. You are talking about very small differences in games that are actually optimized. The only case I can think of where it would actually matter is Unreal 4 games that don't use multithreading AT ALL.

You don’t understand how this works. Higher resolution means GPU becomes bottleneck, not CPU.

Mazty, you’re an idiot.

Also, the i5 clearly wins in 2 games. In 4 out of 6 games, the CPUs are neck and neck. Not bad for a CPU architecture from 2011. You sound foolish when you talk like that.

With a low-level API like…oh I don't know…DirectX 12…Vulkan…higher IPC won't be needed for gaming.

Not sure what you mean. Games that use single threads are generally not optimized at all. You will see a difference when a low level api is used and an old 4 core 8 thread cpu like the fx 8370 works very well for gaming.

I was skeptical of Nano and GCN4 efficiency after the ‘untrue’ claims about Bulldozer and Fury X. But I was pleasantly surprised by those products, or in GCN4's case, by their product demonstration. So I'm more positive than before about their 40% IPC improvement claim.

On the more than 40% improvement slide, it’s a true slide from AMD but I think in those areas the comparison is from piledriver and not excavator.

Greater than 40% over Piledriver is a good point, since those were the last high-end Opterons to be released for the server market.

R9 Nano shows great efficiency since it uses lower clocks and aggressive PowerTune to pin it to the desired TDP. This is because the process AMD uses, even though it is TSMC 28nm, is not the same as the one Nvidia uses. Publicly, things are stated in simplistic fashion, that they use the same process, but this is absolutely not true. Processes are customized to customer needs/requests. The difference is the level of doping: increasing density leads to high parasitic capacitance deep in the channel if the doping is strong, but without strong doping, carrier mobility reduces and therefore current is lower. It seems AMD's choice results in a steep nosedive in efficiency past a certain switching frequency, and adding more voltage to compensate only makes things worse. AMD's choice of process customization is hurting their efficiency at higher clocks on Fury X, not to mention any overclocking potential. Yet this is only part of the story.

Many users and reviewers tend to hit a familiar wall in terms of clock frequencies; we can ignore the issue of high power consumption for a moment. The more likely explanation is that Fiji XT's critical-path timing margins are already ultra tight and there is no more room to increase clocks further without horrendous clock skew and/or ensuing hold-time and setup-time violations, clock-to-output path problems, etc. Timing violations in a large, densely packed design are a big headache. The choice of clock distribution structure makes a big impact on how you can deal with these issues on large dies. I expect Polaris to have solved all of this and improved timing margins so this situation isn't repeated, since they claim this generation of GCN is a significant improvement over anything they have done before. Part of the reason for such scenarios happening in the first place must be a purely cost-based decision, in terms of effort and man-hours needed, when they already had Tonga developed. Using IP reuse, they can tile up Fiji's SP blocks, incorporate HBM PHYs in place of GDDR5 PHYs, and have a product that serves the purpose with the shortest time to market. The extra power-efficiency gains came from improved power and clock gating in areas they left out in past GCN revisions; they admitted to this on launch day, as far as I can recall.
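The setup-time argument can be sketched with the textbook static-timing constraint for a register-to-register path: the clock period must cover the launch flop's clock-to-output delay, the logic delay and the capture flop's setup time, plus any skew or jitter budget working against the check. The numbers below are hypothetical, not Fiji figures, and real sign-off tools model far more (on-chip variation, multiple corners, and hold checks, which do not depend on clock frequency).

```python
from dataclasses import dataclass


@dataclass
class PathTiming:
    """Worst-case delays, in picoseconds, for one hypothetical register-to-register path."""
    clk_to_q: float     # launch flop clock-to-output delay
    logic: float        # combinational delay along the critical path
    setup: float        # capture flop setup requirement
    uncertainty: float  # skew/jitter budget that works against the setup check

    def required_period_ps(self) -> float:
        return self.clk_to_q + self.logic + self.setup + self.uncertainty

    def setup_slack_ps(self, clock_mhz: float) -> float:
        """Positive slack: the path meets timing at this clock; negative: a violation."""
        period_ps = 1e6 / clock_mhz  # 1 MHz corresponds to a 1,000,000 ps period
        return period_ps - self.required_period_ps()

    def max_clock_mhz(self) -> float:
        return 1e6 / self.required_period_ps()


# Hypothetical path with little margin left.
path = PathTiming(clk_to_q=60.0, logic=800.0, setup=40.0, uncertainty=40.0)
for mhz in (1000, 1050, 1100):
    print(f"{mhz} MHz: setup slack {path.setup_slack_ps(mhz):+.1f} ps")
print(f"max clock ~ {path.max_clock_mhz():.0f} MHz")
```

In this toy example the path passes at 1050 MHz with only about 12 ps to spare and fails at 1100 MHz, the kind of hard wall the comment describes.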

So you want to pay the same for a cpu that is hotter, slower, and has huge power consumption.

The question is then: what would make you NOT buy an AMD CPU? It seems that you merely look at branding over anything else.

…so when the gpu isn’t the bottleneck you run into the same problem as we have now, which is AMD perform worse if the game is demanding on the cpu.

You’re really fishing for excuses here. AMD have an old design which is dated. Heck we all want better as it means better and cheap cpus from both manufacturers, but we need to be honest about how AMD are performing at the moment.

I’m saying that games like Tomb Raider were obviously well optimised since they work well on AMD CPU’s as well as Intel, whereas the others let Intel pull ahead. That points to a bias towards Intel imho.

Intel were behind AMD for years. You have no idea what will happen. The detailed specs of the new chips are impressive.

Perform worse, and perform badly are two different things bud. All those games average 60fps which is fine for the majority of 60hz monitors.

I bought my fx 8350 on sale for 149.99

I own both Intel and AMD, I have an fx8350 and an i5 6500. I use both. I prefer AMD.

We do need to be honest about AMD CPUs. Honestly, they are really old tech and still very useful. You can buy what you want. Do not, I repeat, do not expect people to stop buying something because it's not the absolute best. If everyone thought like you there would be no technological improvements ever. Why would companies want to improve their products if they had no competition? Why would we (the consumer) want to settle for what everyone else has when there “could” be something better out there if only we ask for it, or demand it by supporting competition? Don't expect things to get better with Intel's tick-tock release cadence, because until they are pushed by another company that has the rights to design and produce x86 chips, they won't.

You didn’t answer the question. Clearly nothing will put you off so you are just as bad as any other fanboy out there – you buy 100% based on the label rather than the product. Enjoy wasting money on junk for the rest of your life.

Well not buying something that is better for the same price is just stupid.

Go on, I bet you have some deluded argument against that.

I'll give you an example. An i5 matched with a GTX 970 at ultra settings at 1440p in Rise of the Tomb Raider gets about 45 fps average because the GPU is the bottleneck, but lower the resolution and you average about 60 fps. Use the AMD FX 8350 and maybe at 1080p you average about 45 fps; at 1440p the AMD will still get 45 fps, because that's all the CPU allows at 1080p. Raising the resolution doesn't bottleneck the CPU, just the GPU.
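The model in this comment boils down to taking the lower of two caps: the frame rate the CPU can prepare, which barely changes with resolution, and the frame rate the GPU can render at a given resolution. A minimal sketch using the commenter's approximate numbers; the i5's CPU cap of 75 fps is a hypothetical value, assumed only to sit above the GPU's limit.

```python
# Approximate GPU-limited frame rates for a GTX 970 at ultra settings, as
# described in the comment (1080p is the commenter's "about 60").
GPU_FPS = {"1080p": 60.0, "1440p": 45.0}

# CPU-limited caps: the FX 8350's ~45 fps is from the comment; the i5's
# 75 fps is hypothetical, only assumed to be above the GPU's limit.
CPU_CAP_FPS = {"i5": 75.0, "FX 8350": 45.0}


def delivered_fps(cpu: str, resolution: str) -> float:
    """Delivered frame rate is bounded by whichever component is slower."""
    return min(CPU_CAP_FPS[cpu], GPU_FPS[resolution])


for cpu in CPU_CAP_FPS:
    for res in GPU_FPS:
        print(f"{cpu} @ {res}: ~{delivered_fps(cpu, res):.0f} fps")
```

At 1440p both CPUs land on the GPU's limit, which is why the gap closes at higher resolutions; at 1080p the FX 8350's cap becomes the binding constraint.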

I already answered that in my comment lol. Read. Supporting competition is good. Supporting a monopoly is bad.

I’ll buy AMD as long as they function as an x86 CPU developer. I’ll buy Intel as well. I buy AMD GPUs and Nvidia GPUs. I buy Samsung SSDs and SanDisk SSDs. Do you understand where this is going? I support technology in general and I have never “fanboyed” over anything. I will always support competing brands because all tech companies have a good product.

Gotcha, I didn’t read your sentence like that. It’s always obvious when developers are lazy when a game only runs well on a heavy single thread.

That isn’t how capitalism works…*facepalm* Besides letting the market determine the quality of the product, do you really think your decision, based on some warped idea that you will change AMD’s years of bad CPU development, matters more than that being down to inept CEOs?

That’s just stupid though….and you must know it.

To not support the best product is to allow other companies to push crap and still make money on it. You want the market to drive competition, whereas you would keep a dead horse going.

Oh, and to say all tech companies have a good product just shows you are naive and ignorant. I doubt you would even know the difference between something good and something bad.

I doubt you know the difference between getting milked and getting a deal.

I can’t deny AMD had bad leadership for a while. You can’t deny that AMD was sent into a death spiral by Intel’s illegal anti-competitive practices. Please don’t say I don’t understand how capitalism works and also say buy Intel. You sound like an advertisement.

AMD is like a bottom feeder in the real world; Intel is like an astute fund manager. Which one would you choose?

Which begs the question why would you in that situation go for the AMD CPU as the i5 is clearly superior to it.

Guaranteed that comment will bite you in the ass.

I sound foolish pointing out that the review demonstrates one of the CPUs is clearly superior. Uhuh.

That’s just you bud – give me a reason why someone would go for the AMD CPU over the i5. Idiot.

Wrong, look at the performance of AMD CPUs with the 295X2.

I would buy an FM2+ Athlon X4 860K instead of a Pentium G4400, and it’s the same price. If you want to compare an i5, compare an i5 6500 to an i5 2500K; Intel hasn’t improved since 2011. You argue that Intel is the better buy, but the better Intel is a used i5 2500K, not a Skylake, because let’s face it, LGA1151 is just a cash cow. If you want Intel to even remotely improve, you should support the competition. I will speak from experience here: I own two Intel rigs and one AMD rig (two i5 6500s and an AMD FX 8350). I game, I write music, I use the Internet. So far the ONLY improvements are in games made with Unreal Engine 4 and in power consumption. I really cannot justify buying anything new until Zen is released, because nothing is revolutionary like the first 64-bit CPU or the first dual-core CPU. You act like saving money on a CPU is foolish when buying a new CPU is also foolish. Grow up.

https://www.youtube.com/watch?v=tN52LudHrXU FX 8350 Cinebench R15: 653 cb (MSI 990FXA-GD80) …. FX 8370: 660 cb (MSI 990FXA Gaming)

Somehow I think my degree in electrical engineering makes me more qualified than you. What is your degree in again?

Anyone with a degree in anything can be a fool. You don’t want to go there with me, btw.

Yes I do – I really want to know your experience in this field as all you bleat on about is buying worse gear so you can encourage the manufacturer to keep making junk.

http://www.tweaktown.com/tweakipedia/58/core-i7-4770k-vs-amd-fx-8350-with-gtx-980-vs-gtx-780-sli-at-4k/index.html

http://www.technologyx.com/featured/amd-vs-intel-our-8-core-cpu-gaming-performance-showdown/4/

Well benchmarks prove you’re talking total horse shit m8 – “Intel hasn’t improved since 2011”. Go be a retard somewhere else.

http://www.technologyx.com/featured/amd-vs-intel-our-8-core-cpu-gaming-performance-showdown/2/

Only a dumb fuck would go for AMD over Intel when it comes to gaming.

Only a sociopath would get their panties in a twist over 5-7 fps. You need help bud.

Nice grammar on that one, bud. Stay in electrical engineering. I digress; if you haven’t noticed, the “worse gear” is only a fraction slower and is five years behind the competition. You should shill less and go outside more.

Hahaha, nice deflection. Come on bud, what are your qualifications in this area, since you claimed to know so much? Let me guess: none. Nothing. Zero.

How is a 25% drop in some games “a fraction slower”? How is compute per core being 50% less than Intel “a fraction slower”? You clearly are living in a different reality to everyone else.

I live in a world…*duhn duhn duhhhhhnnn* Where “some games” run better on some hardware, and some games run better…..on other hardware. Mind blown.

What games run better on AMD?

And what qualification do you have again? Oh right, ignorance with a strong dose of stupidity. Stop being a fanboy and just give up.

I only see one fanboy.

Question 1. Do you own AMD?

Question 2. Do you have first hand experience with many different hardware brands?

Question 3. Are you paid to recommend one brand over another?

Point is, Big Jim: if I can game on a quad-core CPU that costs me $64.99, and know for a fact that a low-level graphics API can double my gaming performance in DirectX 12, hell yeah I’m buying it.

Notice how, with AMD GPUs, the performance gains increase with a low-level API. Now granted, the two extra cores on the FX-6300 give it an edge in DX12 over an Athlon X4 860K, but the IPC is better on the Athlon due to its smaller die and matured architecture. I know that the comparable CPU in the same price range from Intel is the Pentium G4400, and most games need at least 4 cores to play smoothly, or at all. I own a Pentium G4400, not an X4 860K, but I wish I had bought the AMD instead, because the Pentium couldn’t even play Planetside 2 for a minute without crashing. The Athlon would have been great, but I had already bought DDR4 and an ASRock B150 ITX motherboard, and sending it all back would have been a waste of money in restocking fees, so I bought a second i5 6500 and am selling the G4400.

You really need to learn what the word sociopath means, because what you said makes no sense. #oxforddictionary

Lol, you’re not a charity, kid. There’s literally no point in supporting the underdog, because your misguided purchase won’t swing the market.

I’ve owned both AMD and Intel CPUs, and green and red GPUs. At the end of the day all that matters is price and performance, and there Intel and Nvidia are vastly superior to AMD. Benchmarks prove that AMD do not offer value for money.

So you have no qualifications in this area.

Why did you claim to have them then? Scared I would call your bluff? Pahaha.

Anywho, I have owned AMD cpus and gpus. I currently run one of their gpus.

Yes, I have used pretty much every major brand.

Paid to recommend? Wow, you are deluded. Of course I’m not. Are you? You seem more adamant that AMD is good than interested in looking at the facts.

Why are you talking about entry-level kit, then posting Ashes benchmarks with top-range GPUs?! What moron would pair a low-end CPU (of any brand) with a GPU that is almost 10x its price? Who would try to run a DX12 game on a sub-$100 CPU and not expect problems?

Also, PS2 (Planetside 2) is horribly optimised, which is why it most likely needs 4 cores rather than 2 cores with higher throughput (but I doubt you know what that means).

Plus, the fact that you bought DDR4 without realising the compatibility issue shows you have no idea what you are on about.

http://cdn.wccftech.com/wp-content/uploads/2016/03/Hitman-PC-DirectX-12-CPU-Scaling.jpg

So you just proved my point. 20% fps drop with AMD and that is with DX12. Before it was 50% drop.

No point proven. That’s a 5-year-old CPU within spitting distance of a $400 i7 6700K.

When was 20% “spitting distance” ?! And that is only in that game (and one that supports DX12). What about other games, and what about many cpu heavy programs? Just give it up. You have no idea about this area and no qualifications so stop it.

Haha, I know a bit more about this than you. Name a CPU-heavy program that doesn’t use multithreading. An FX 8-core will do just as well as an i5 or i7. And gaming is only an issue when the CPU is the bottleneck; if you have a good GPU then you don’t need a faster CPU. Do some research. http://www.tweaktown.com/tweakipedia/56/amd-fx-8350-powering-gtx-780-sli-vs-gtx-980-sli-at-4k/index.html

My comment showing the FX 8370 very close to the brand-new i7 was deleted. Read here: http://www.computerbase.de/2016-03/hitman-benchmarks-directx-12/2/

You have just proven you are an idiot. You don’t even know what a chipset is, hence your DDR4 mess-up. But of course you have to be arrogant and deluded as always.

AMD and Intel implement multithreading in two different forms, with AMD choosing the losing side, hence why they are slower despite having more cores. So cram it and do a real degree in this topic rather than waving your flag and claiming to know everything.

What DDR4 mess-up?

And there we go. You have no education in this area and just like to talk big and bluff. Now go away.

You are full blown my friend. Take your pills and get off the computer.

See? Total denial from you. You claimed to be educated in this area, which you are not. You claimed to know more, yet provided no evidence. You claimed AMD were the same, yet showed they are worse.

Simply put, what would make you change your mind? *rolls eyes*

+1

I will comment here. Stop fighting, it’s just a CPU. You open an internet browser and use it for Facebook, simple as that. All that 60+ FPS isn’t going to mean shit. The last time I played CS with no graphics card, my FPS was around 25 and it still kicked ass, and that was back around 2001. And you all complain about that shit. CHEAP is better 🙂 trust me.

The Ryzeens say different

Looks like it didn’t bite him in the ass. The Ryzeens are looking pretty good right now